
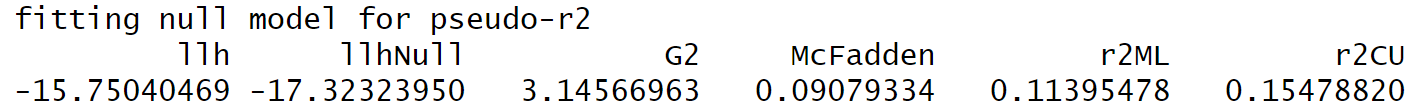

Pseudo-R-Squared Outputted Values From R

In statistics, pseudo-R-squared values are used when the outcome variable is nominal or ordinal, so that the coefficient of determination R² cannot be applied as a measure of goodness of fit, and when a likelihood function is used to fit a model. In linear regression, the squared multiple correlation R² is used to assess goodness of fit, as it represents the proportion of variance in the criterion that is explained by the predictors. In logistic regression analysis there is no agreed-upon analogous measure, but there are several competing measures, each with limitations. Four of the most commonly used indices and one less commonly used one are examined in this article:

* Likelihood ratio R²
* Cox and Snell R²
* Nagelkerke R²
* McFadden R²
* Tjur R²

$R^2_\text{L}$ by Cohen

$R^2_\text{L}$ is given by Cohen:

$$R^2_\text{L} = \frac{D_\text{null} - D_\text{fitted}}{D_\text{null}}.$$

This is the most analogous index to the squared multiple correlations in linear regression. It represents the proportional reduction in the deviance wherein the deviance is tr ...
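As a rough illustration of how the listed indices relate to one another, the sketch below computes them from quantities a fitted model typically reports. The function names and the example numbers are hypothetical, and the formulas are the commonly cited textbook forms, not taken from the truncated text above. It assumes `ll_null` and `ll_model` are the maximized log-likelihoods of the intercept-only and fitted models, and `n` is the number of observations.

```python
import math


def likelihood_ratio_r2(dev_null, dev_fitted):
    """Cohen's likelihood ratio index: proportional reduction in deviance."""
    return (dev_null - dev_fitted) / dev_null


def pseudo_r2(ll_null, ll_model, n):
    """McFadden, Cox and Snell, and Nagelkerke indices from log-likelihoods."""
    mcfadden = 1.0 - ll_model / ll_null
    cox_snell = 1.0 - math.exp(2.0 * (ll_null - ll_model) / n)
    # Upper bound of the Cox and Snell index; Nagelkerke rescales by it.
    max_cox_snell = 1.0 - math.exp(2.0 * ll_null / n)
    nagelkerke = cox_snell / max_cox_snell
    return {"mcfadden": mcfadden, "cox_snell": cox_snell, "nagelkerke": nagelkerke}


def tjur_r2(outcomes, probabilities):
    """Tjur's index: mean fitted probability for 1s minus that for 0s."""
    ones = [p for y, p in zip(outcomes, probabilities) if y == 1]
    zeros = [p for y, p in zip(outcomes, probabilities) if y == 0]
    return sum(ones) / len(ones) - sum(zeros) / len(zeros)


# Hypothetical values for illustration only.
indices = pseudo_r2(ll_null=-69.3, ll_model=-50.0, n=100)
```

When the deviance equals minus twice the log-likelihood, as it does for ungrouped binary outcomes, `likelihood_ratio_r2(-2 * ll_null, -2 * ll_model)` gives the same value as the McFadden index.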

Coefficient Of Determination

In statistics, the coefficient of determination, denoted R² or r² and pronounced "R squared", is the proportion of the variation in the dependent variable that is predictable from the independent variable(s). It is a statistic used in the context of statistical models whose main purpose is either the prediction of future outcomes or the testing of hypotheses, on the basis of other related information. It provides a measure of how well observed outcomes are replicated by the model, based on the proportion of total variation of outcomes explained by the model. There are several definitions of R² that are only sometimes equivalent. In simple linear regression (which includes an intercept), r² is simply the square of the sample correlation coefficient (r) between the observed outcomes and the observed predictor values. If additional regressors are included, R² is the square of the coefficient of multiple correlation. In both such cases, the coeffi ...
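The equivalence mentioned for simple linear regression can be checked numerically. The sketch below uses one common definition, R² = 1 − SS_res / SS_tot, which is an assumption here since the snippet does not spell out a formula; the helper names and the data are hypothetical.

```python
import math


def r_squared(observed, predicted):
    """One common definition: 1 - residual sum of squares / total sum of squares."""
    mean_obs = sum(observed) / len(observed)
    ss_tot = sum((y - mean_obs) ** 2 for y in observed)
    ss_res = sum((y - f) ** 2 for y, f in zip(observed, predicted))
    return 1.0 - ss_res / ss_tot


def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope


def pearson_r(x, y):
    """Sample correlation coefficient between x and y."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical data for illustration only.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
intercept, slope = fit_line(x, y)
predicted = [intercept + slope * a for a in x]
r2 = r_squared(y, predicted)  # matches pearson_r(x, y) ** 2 up to rounding
```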

Likelihood Function

A likelihood function (often simply called the likelihood) measures how well a statistical model explains observed data by calculating the probability of seeing that data under different parameter values of the model. It is constructed from the joint probability distribution of the random variable that (presumably) generated the observations. When evaluated on the actual data points, it becomes a function solely of the model parameters. In maximum likelihood estimation, the argument that maximizes the likelihood function serves as a point estimate for the unknown parameter, while the Fisher information (often approximated by the likelihood's Hessian matrix at the maximum) gives an indication of the estimate's precision. In contrast, in Bayesian statistics, the estimate of interest is the converse of the likelihood, the so-called posterior probability of the parameter given the observed data, which is calculated via Bayes' rule.

Definition

The likelihood function, ...
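To make the idea of a likelihood as a function of the parameter concrete, here is a minimal sketch for independent Bernoulli observations. The data and the grid search are assumptions chosen for illustration; a real analysis would normally use a numerical optimizer or the closed-form estimate.

```python
import math


def bernoulli_log_likelihood(p, data):
    """Log-likelihood of success probability p for independent 0/1 observations."""
    return sum(math.log(p) if x == 1 else math.log(1.0 - p) for x in data)


# Hypothetical sample: 7 successes in 10 trials.
data = [1, 0, 1, 1, 0, 1, 1, 1, 0, 1]

# Evaluate the log-likelihood on a grid of candidate parameter values
# and keep the value that maximizes it (the maximum likelihood estimate).
grid = [i / 100 for i in range(1, 100)]
p_hat = max(grid, key=lambda p: bernoulli_log_likelihood(p, data))
```

For this model the maximizer coincides with the sample proportion of successes, so `p_hat` lands on the grid point closest to 7/10.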

Linear Regression

In statistics, linear regression is a model that estimates the relationship between a scalar response (dependent variable) and one or more explanatory variables (regressor or independent variable). A model with exactly one explanatory variable is a simple linear regression; a model with two or more explanatory variables is a multiple linear regression. This term is distinct from multivariate linear regression, which predicts multiple correlated dependent variables rather than a single dependent variable. In linear regression, the relationships are modeled using linear predictor functions whose unknown model parameters are estimated from the data. Most commonly, the conditional mean of the response given the values of the explanatory variables (or predictors) is assumed to be an affine function of those values; less commonly, the conditional median or some other quantile is used. Like all forms of regression analysis, ...

Logistic Regression

In statistics, a logistic model (or logit model) is a statistical model that models the log-odds of an event as a linear combination of one or more independent variables. In regression analysis, logistic regression (or logit regression) estimates the parameters of a logistic model (the coefficients in the linear or nonlinear combinations). In binary logistic regression there is a single binary dependent variable, coded by an indicator variable, where the two values are labeled "0" and "1", while the independent variables can each be a binary variable (two classes, coded by an indicator variable) or a continuous variable (any real value). The corresponding probability of the value labeled "1" can vary between 0 (certainly the value "0") and 1 (certainly the value "1"), hence the labeling; the function that converts log-odds to probability is the logistic function, hence the name. The unit of measurement for the ...
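The conversion between log-odds and probability described above can be sketched as follows. The function names are illustrative, and the branch on the sign of `z` is only there to avoid floating-point overflow for large negative inputs.

```python
import math


def logistic(z):
    """Convert log-odds z to a probability in (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # For negative z, use an algebraically equivalent form that cannot overflow.
    e = math.exp(z)
    return e / (1.0 + e)


def logit(p):
    """Convert a probability p in (0, 1) to log-odds (inverse of logistic)."""
    return math.log(p / (1.0 - p))
```

Log-odds of zero correspond to a probability of one half, and `logit(logistic(z))` recovers `z` up to rounding.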

Variance

In probability theory and statistics, variance is the expected value of the squared deviation from the mean of a random variable. The standard deviation (SD) is obtained as the square root of the variance. Variance is a measure of dispersion, meaning it is a measure of how far a set of numbers is spread out from their average value. It is the second central moment of a distribution, and the covariance of the random variable with itself, and it is often represented by $\sigma^2$, $s^2$, $\operatorname{Var}(X)$, $V(X)$, or $\mathbb{V}(X)$. An advantage of variance as a measure of dispersion is that it is more amenable to algebraic manipulation than other measures of dispersion such as the expected absolute deviation; for example, the variance of a sum of uncorrelated random variables is equal to the sum of their variances. A disadvantage of the variance for practical applications is that, unlike the standard deviation, its units differ from the random variable, which is why the standard devi ...
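The additivity property for uncorrelated variables can be illustrated with a small numerical sketch. The two data sets below are hypothetical and were chosen so that their sample covariance is exactly zero; population variance is computed with the standard library's `statistics.pvariance`.

```python
import math
import statistics

# Hypothetical paired samples whose covariance is exactly zero.
x = [1.0, 2.0, 3.0, 4.0]
y = [1.0, -1.0, -1.0, 1.0]

mean_x = statistics.fmean(x)
mean_y = statistics.fmean(y)
covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / len(x)

var_x = statistics.pvariance(x)
var_y = statistics.pvariance(y)
var_sum = statistics.pvariance([a + b for a, b in zip(x, y)])

# The standard deviation is the square root of the variance,
# so it is expressed in the same units as the data.
sd_x = math.sqrt(var_x)
```

Because the covariance is zero, `var_sum` equals `var_x + var_y`; with correlated data an extra term of twice the covariance would appear.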

Joyce Snell

E. Joyce Snell (born 1930) is a British statistician who taught in the mathematics department at Imperial College London. She is known for her work on residuals and ordered categorical data, and for her books on statistics.

Books

Snell is the author or editor of:

* Analysis of Binary Data (with David R. Cox, 1969; 2nd ed., Chapman & Hall/CRC, 1989)
* Applied Statistics: Principles and Examples (with David R. Cox, Chapman & Hall/CRC, 1981)
* Applied Statistics: A Handbook of GENSTAT Analyses (with H. R. Simpson, 1982)
* Applied Statistics: A Handbook of BMDP Analyses (Chapman & Hall/CRC, 1987)
* Statistical Theory and Modelling: in Honour of Sir David Cox, FRS (edited with David Hinkley and Nancy Reid, Chapman & Hall/CRC, 1991)

Recognition

Snell was given the of the Royal Statistical Society ...

Nico Nagelkerke

Nicolaas Jan Dirk "Nico" Nagelkerke (born 1951) is a retired Dutch biostatistician and epidemiologist. He was a professor of biostatistics at the United Arab Emirates University and previously taught at the University of Leiden in the Netherlands. In 1991, he defined the Nagelkerke R-squared, an equation adjusting the Cox and Snell R-squared value to be comparable to the traditional coefficient of determination.

Likelihood Ratio

A likelihood function (often simply called the likelihood) measures how well a statistical model explains observed data by calculating the probability of seeing that data under different parameter values of the model. It is constructed from the joint probability distribution of the random variable that (presumably) generated the observations. When evaluated on the actual data points, it becomes a function solely of the model parameters. In maximum likelihood estimation, the argument that maximizes the likelihood function serves as a point estimate for the unknown parameter, while the Fisher information (often approximated by the likelihood's Hessian matrix at the maximum) gives an indication of the estimate's precision. In contrast, in Bayesian statistics, the estimate of interest is the converse of the likelihood, the so-called posterior probability of the parameter given the observed data, which is calculated via Bayes' rule.

Definition

The likelihood function, parameter ...