
Progressive Refinement

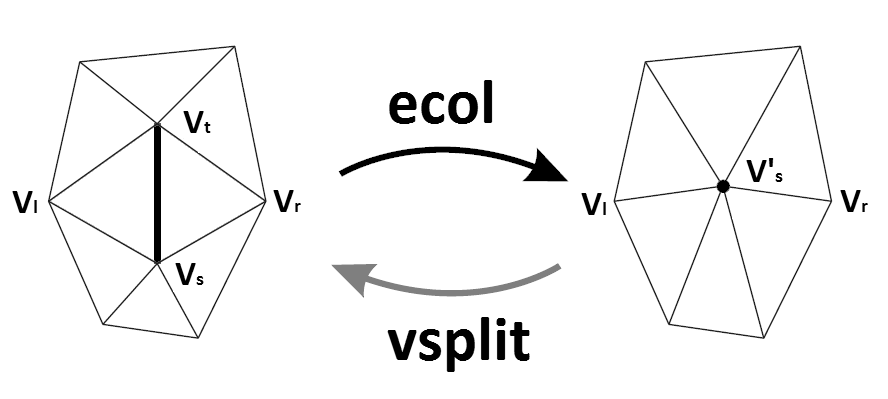

Progressive meshes are a technique for dynamic level of detail (LOD), introduced by Hugues Hoppe in 1996. The method stores a model in a dedicated structure, the progressive mesh, which allows the level of detail to be chosen smoothly depending on the current view. In practice, the whole model can be displayed immediately at its lowest level of detail and then refined gradually as more detail is added. The main disadvantage is considerable memory consumption; the advantage is that the technique can work in real time. Progressive meshes can also be used in other areas of computing, such as the gradual transfer of data over the Internet or compression (D. Luebke, M. Reddy, J. D. Cohen, A. Varshney, B. Watson, R. Huebner: Level of Detail for 3D Graphics, Morgan Kaufmann, 2002). Basic principle: A progressive mesh is a data structure created by simplifying the original, highest-quality model with a suitable decimation algorithm, which removes ...
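The idea of a coarse base mesh plus a stream of refinements can be sketched as follows. This is a minimal illustration, not Hoppe's implementation: it assumes the decimation step is an edge collapse (merging vertex `u` into vertex `v`), tracks faces only (no vertex positions), and all names (`SplitRecord`, `collapse_edge`, `split_vertex`, `build_progressive_mesh`, `refine`) are hypothetical.

```python
from dataclasses import dataclass

Face = tuple[int, int, int]  # a triangle as three vertex indices


@dataclass
class SplitRecord:
    """What is needed to undo one edge collapse (u merged into v)."""
    u: int
    v: int
    removed: list[Face]    # faces that contained both u and v and vanished
    rewritten: list[Face]  # original faces that contained u but not v


def collapse_edge(faces, u, v):
    """Merge vertex u into v; return the coarser face list and an undo record."""
    kept, removed, rewritten = [], [], []
    for f in faces:
        if u in f and v in f:
            removed.append(f)        # the face degenerates, so drop it
        elif u in f:
            rewritten.append(f)      # remember the original face
            kept.append(tuple(v if i == u else i for i in f))
        else:
            kept.append(f)
    return kept, SplitRecord(u, v, removed, rewritten)


def split_vertex(faces, rec):
    """Undo one collapse: restore the rewritten faces and re-add the removed ones."""
    faces = list(faces)
    for original in rec.rewritten:
        collapsed = tuple(rec.v if i == rec.u else i for i in original)
        faces.remove(collapsed)
        faces.append(original)
    faces.extend(rec.removed)
    return faces


def build_progressive_mesh(faces, collapses):
    """Apply collapses in order; return the base mesh and splits in refinement order."""
    records = []
    for u, v in collapses:
        faces, rec = collapse_edge(faces, u, v)
        records.append(rec)
    return faces, records[::-1]


def refine(base, splits, n):
    """Rebuild the mesh using only the first n refinement records."""
    faces = list(base)
    for rec in splits[:n]:
        faces = split_vertex(faces, rec)
    return faces


# A fan of four triangles around centre vertex 4.
original = [(0, 1, 4), (1, 2, 4), (2, 3, 4), (3, 0, 4)]
base, splits = build_progressive_mesh(original, [(0, 1), (3, 2)])
```

Calling `refine(base, splits, n)` with increasing `n` yields progressively finer meshes, which mirrors showing the coarsest model first and adding detail afterwards. Keeping every record in memory also hints at why the structure is memory hungry.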

Level Of Detail (computer Graphics)

In computer graphics, level of detail (LOD) refers to the complexity of a 3D model representation. LOD can be decreased as the model moves away from the viewer or according to other metrics such as object importance, viewpoint-relative speed, or position. LOD techniques increase rendering efficiency by decreasing the workload on graphics pipeline stages, usually vertex transformations. The reduced visual quality of the model often goes unnoticed because the effect on the object's appearance is small when it is distant or moving fast. Although LOD is most often applied to geometric detail only, the basic concept can be generalized. More recently, LOD techniques have also included shader management to keep pixel complexity under control. A form of level-of-detail management has been applied to texture maps for years under the name mipmapping, which also provides higher rendering quality. It is commonplace to say that "an object has been LOD-ed" when the object is simplified by the underlying ...
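Distance-based selection, the simplest of the metrics mentioned above, might look like the sketch below. The function name `select_lod` and the threshold values are assumptions chosen for illustration; real engines often use screen-space size or add hysteresis to avoid visible popping.

```python
from bisect import bisect_right


def select_lod(distance, thresholds):
    """Return an LOD index: 0 is the most detailed model.

    `thresholds` is an ascending list of distances; each threshold that the
    viewer distance reaches or passes moves the object one level coarser.
    """
    return bisect_right(thresholds, distance)


# Hypothetical switch distances in scene units.
THRESHOLDS = [10.0, 50.0, 200.0]

near_level = select_lod(5.0, THRESHOLDS)     # closest range, full detail
far_level = select_lod(1000.0, THRESHOLDS)   # beyond the last threshold
```

With three thresholds the function returns one of four levels, so the caller needs four versions of the model, from most to least detailed.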

Data Structure

In computer science, a data structure is a data organization, management, and storage format that is usually chosen for efficient access to data. More precisely, a data structure is a collection of data values, the relationships among them, and the functions or operations that can be applied to the data, i.e., it is an algebraic structure about data. Usage: Data structures serve as the basis for abstract data types (ADT). The ADT defines the logical form of the data type. The data structure implements the physical form of the data type. Different types of data structures are suited to different kinds of applications, and some are highly specialized to specific tasks. For example, relational databases commonly use B-tree indexes for data retrieval, while compiler implementations usually use hash tables to look up identifiers. Data structures provide a means to manage large amounts of data efficiently for uses such as large databases and internet indexing services. Usually, ...

Downsampling (signal Processing)

In digital signal processing, downsampling, compression, and decimation are terms associated with the process of resampling in a multi-rate digital signal processing system. Both downsampling and decimation can be synonymous with compression, or they can describe an entire process of bandwidth reduction (filtering) and sample-rate reduction. When the process is performed on a sequence of samples of a signal or a continuous function, it produces an approximation of the sequence that would have been obtained by sampling the signal at a lower rate (or density, as in the case of a photograph). Historically, decimation means the removal of every tenth one. In signal processing, however, decimation by a factor of 10 means keeping only every tenth sample. This factor multiplies the sampling interval or, equivalently, divides the sampling rate. For example, if compact disc audio at 44,100 samples/second is decimated by a factor of 5 ...
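The sample-rate reduction step on its own can be sketched in a few lines. This is only the "keep every M-th sample" part; the bandwidth-reduction (filtering) stage mentioned above is deliberately omitted, and the function name `decimate` is a hypothetical choice.

```python
def decimate(samples, factor):
    """Keep every `factor`-th sample, starting with the first one.

    No anti-aliasing filter is applied here; a complete decimator would
    low-pass filter the signal before discarding samples.
    """
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    return samples[::factor]


# One second of placeholder audio at 44,100 samples/second.
one_second = [0.0] * 44_100
reduced = decimate(one_second, 5)
new_rate = len(reduced)  # samples per second after decimation by 5
```

Because the factor divides the sampling rate, the reduced sequence here holds 44,100 / 5 = 8,820 samples for the same second of signal.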

Algorithm

In mathematics and computer science, an algorithm is a finite sequence of rigorous instructions, typically used to solve a class of specific problems or to perform a computation. Algorithms are used as specifications for performing calculations and data processing. More advanced algorithms can perform automated deductions (referred to as automated reasoning) and use mathematical and logical tests to divert the code execution through various routes (referred to as automated decision-making). Using human characteristics as descriptors of machines in metaphorical ways was already practiced by Alan Turing with terms such as "memory", "search" and "stimulus". In contrast, a heuristic is an approach to problem solving that may not be fully specified or may not guarantee correct or optimal results, especially in problem domains where there is no well-defined correct or optimal result. As an effective method, an algorithm ca ...

Vertex (computer Graphics)

A vertex (plural vertices) in computer graphics is a data structure that describes certain attributes, like the position of a point in 2D or 3D space, or multiple points on a surface. Application to 3D models: 3D models are most often represented as triangulated polyhedra forming a triangle mesh. Non-triangular surfaces can be converted to an array of triangles through tessellation. Attributes from the vertices are typically interpolated across mesh surfaces. Vertex attributes: The vertices of triangles are associated not only with spatial position but also with other values used to render the object correctly. Most attributes of a vertex represent vectors in the space to be rendered. These vectors are typically 1 (x), 2 (x, y), or 3 (x, y, z) dimensional and can include a fourth homogeneous coordinate (w). These values are given meaning by a material description. In realtime rendering these properties are used by a vertex shader or vertex pipeline. Such attr ...
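How attributes are interpolated across a triangle can be illustrated with barycentric weights. The sketch below is an assumption-laden example rather than any particular pipeline's behaviour: the `Vertex` class, its `color` attribute, and the `interpolate` helper are hypothetical, and real hardware typically also applies perspective correction.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vertex:
    position: tuple[float, float, float]  # x, y, z
    color: tuple[float, float, float]     # hypothetical per-vertex attribute


def interpolate(a, b, c, weights):
    """Blend three attribute vectors with barycentric weights (summing to 1)."""
    wa, wb, wc = weights
    return tuple(wa * x + wb * y + wc * z for x, y, z in zip(a, b, c))


# A triangle with a pure red, green, and blue corner.
v0 = Vertex((0.0, 0.0, 0.0), (1.0, 0.0, 0.0))
v1 = Vertex((1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
v2 = Vertex((0.0, 1.0, 0.0), (0.0, 0.0, 1.0))

# Colour at a point weighted half towards v0, a quarter towards v1 and v2.
blended = interpolate(v0.color, v1.color, v2.color, (0.5, 0.25, 0.25))
```

The same helper works for any attribute stored as a tuple of equal length, including the positions themselves.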

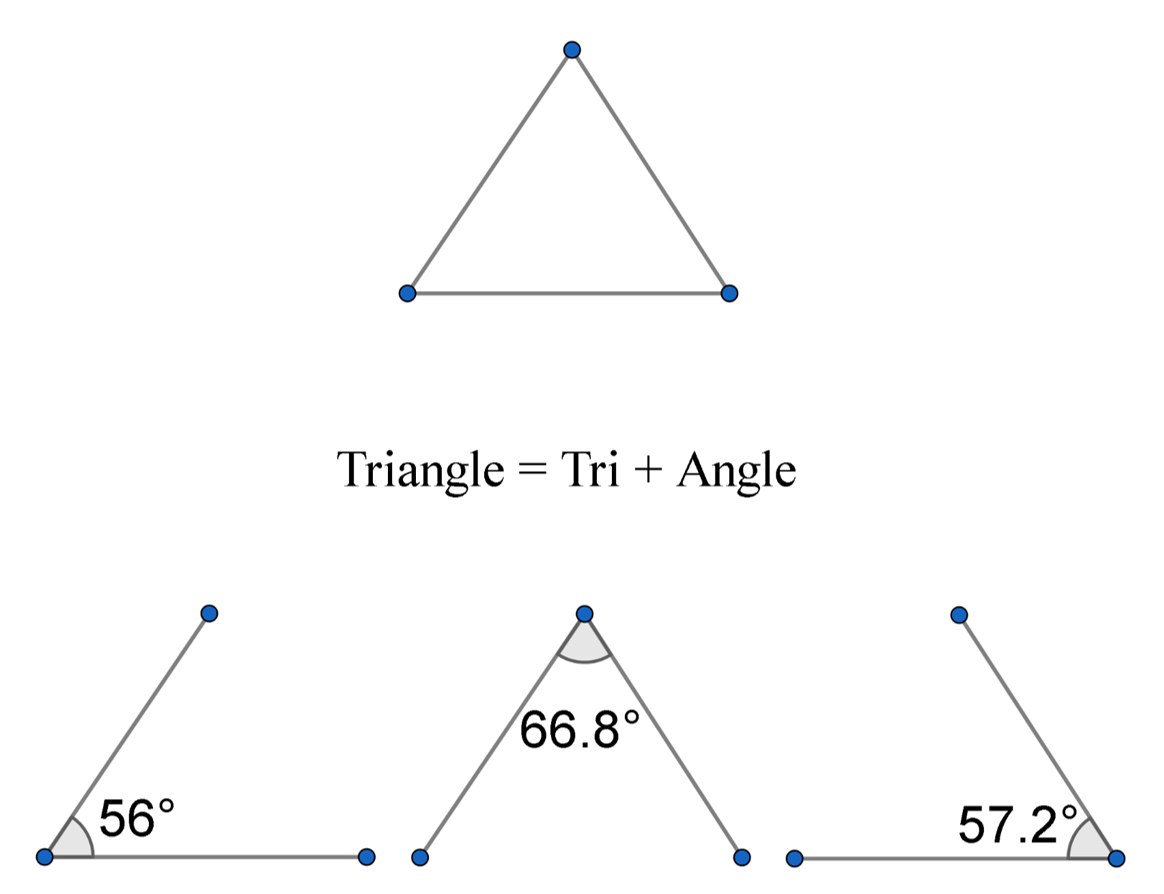

Triangles

A triangle is a polygon with three edges and three vertices. It is one of the basic shapes in geometry. A triangle with vertices A, B, and C is denoted $\triangle ABC$. In Euclidean geometry, any three points, when non-collinear, determine a unique triangle and simultaneously, a unique plane (i.e. a two-dimensional Euclidean space). In other words, there is only one plane that contains that triangle, and every triangle is contained in some plane. If the entire geometry is only the Euclidean plane, there is only one plane and all triangles are contained in it; however, in higher-dimensional Euclidean spaces, this is no longer true. This article is about triangles in Euclidean geometry, and in particular, the Euclidean plane, except where otherwise noted. Types of triangle: The terminology for categorizing triangles is more than two thousand years old, having been defined on the very first page of Euclid's Elements. The names used for modern classification are eith ...
