|

Probabilistic Parsing

Grammar theory to model symbol strings originated from work in computational linguistics aiming to understand the structure of natural languages. Probabilistic context free grammars (PCFGs) have been applied in probabilistic modeling of RNA structures almost 40 years after they were introduced in computational linguistics. PCFGs extend context-free grammars similar to how hidden Markov models extend regular grammars. Each production is assigned a probability. The probability of a derivation (parse) is the product of the probabilities of the productions used in that derivation. These probabilities can be viewed as parameters of the model, and for large problems it is convenient to learn these parameters via machine learning. A probabilistic grammar's validity is constrained by context of its training dataset. PCFGs have application in areas as diverse as natural language processing to the study the structure of RNA molecules and design of programming languages. Designing efficient P ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Grammar Theory

In linguistics, syntax () is the study of how words and morphemes combine to form larger units such as phrases and sentences. Central concerns of syntax include word order, grammatical relations, hierarchical sentence structure ( constituency), agreement, the nature of crosslinguistic variation, and the relationship between form and meaning (semantics). There are numerous approaches to syntax that differ in their central assumptions and goals. Etymology The word ''syntax'' comes from Ancient Greek roots: "coordination", which consists of ''syn'', "together", and ''táxis'', "ordering". Topics The field of syntax contains a number of various topics that a syntactic theory is often designed to handle. The relation between the topics is treated differently in different theories, and some of them may not be considered to be distinct but instead to be derived from one another (i.e. word order can be seen as the result of movement rules derived from grammatical relations). S ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Context-free Grammar

In formal language theory, a context-free grammar (CFG) is a formal grammar whose production rules are of the form :A\ \to\ \alpha with A a ''single'' nonterminal symbol, and \alpha a string of terminals and/or nonterminals (\alpha can be empty). A formal grammar is "context-free" if its production rules can be applied regardless of the context of a nonterminal. No matter which symbols surround it, the single nonterminal on the left hand side can always be replaced by the right hand side. This is what distinguishes it from a context-sensitive grammar. A formal grammar is essentially a set of production rules that describe all possible strings in a given formal language. Production rules are simple replacements. For example, the first rule in the picture, :\langle\text\rangle \to \langle\text\rangle = \langle\text\rangle ; replaces \langle\text\rangle with \langle\text\rangle = \langle\text\rangle ;. There can be multiple replacement rules for a given nonterminal symbol. T ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Probability

Probability is the branch of mathematics concerning numerical descriptions of how likely an Event (probability theory), event is to occur, or how likely it is that a proposition is true. The probability of an event is a number between 0 and 1, where, roughly speaking, 0 indicates impossibility of the event and 1 indicates certainty."Kendall's Advanced Theory of Statistics, Volume 1: Distribution Theory", Alan Stuart and Keith Ord, 6th Ed, (2009), .William Feller, ''An Introduction to Probability Theory and Its Applications'', (Vol 1), 3rd Ed, (1968), Wiley, . The higher the probability of an event, the more likely it is that the event will occur. A simple example is the tossing of a fair (unbiased) coin. Since the coin is fair, the two outcomes ("heads" and "tails") are both equally probable; the probability of "heads" equals the probability of "tails"; and since no other outcomes are possible, the probability of either "heads" or "tails" is 1/2 (which could also be written ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Logarithm

In mathematics, the logarithm is the inverse function to exponentiation. That means the logarithm of a number to the base is the exponent to which must be raised, to produce . For example, since , the ''logarithm base'' 10 of is , or . The logarithm of to ''base'' is denoted as , or without parentheses, , or even without the explicit base, , when no confusion is possible, or when the base does not matter such as in big O notation. The logarithm base is called the decimal or common logarithm and is commonly used in science and engineering. The natural logarithm has the number e (mathematical constant), as its base; its use is widespread in mathematics and physics, because of its very simple derivative. The binary logarithm uses base and is frequently used in computer science. Logarithms were introduced by John Napier in 1614 as a means of simplifying calculations. They were rapidly adopted by navigators, scientists, engineers, surveyors and oth ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Parse Tree

A parse tree or parsing tree or derivation tree or concrete syntax tree is an ordered, rooted tree that represents the syntactic structure of a string according to some context-free grammar. The term ''parse tree'' itself is used primarily in computational linguistics; in theoretical syntax, the term ''syntax tree'' is more common. Concrete syntax trees reflect the syntax of the input language, making them distinct from the abstract syntax trees used in computer programming. Unlike Reed-Kellogg sentence diagrams used for teaching grammar, parse trees do not use distinct symbol shapes for different types of constituents. Parse trees are usually constructed based on either the constituency relation of constituency grammars (phrase structure grammars) or the dependency relation of dependency grammars. Parse trees may be generated for sentences in natural languages (see natural language processing), as well as during processing of computer languages, such as programming languages. ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Historical Linguistics

Historical linguistics, also termed diachronic linguistics, is the scientific study of language change over time. Principal concerns of historical linguistics include: # to describe and account for observed changes in particular languages # to reconstruct the pre-history of languages and to determine their relatedness, grouping them into language families (comparative linguistics) # to develop general theories about how and why language changes # to describe the history of speech communities # to study the history of words, i.e. etymology Historical linguistics is founded on the Uniformitarian Principle, which is defined by linguist Donald Ringe as: History and development Western modern historical linguistics dates from the late-18th century. It grew out of the earlier discipline of philology, the study of ancient texts and documents dating back to antiquity. At first, historical linguistics served as the cornerstone of comparative linguistics, primarily as a tool ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Pāṇini

, era = ;;6th–5th century BCE , region = Indian philosophy , main_interests = Grammar, linguistics , notable_works = ' ( Classical Sanskrit) , influenced= , notable_ideas= Descriptive linguistics (Devanagari: पाणिनि, ) was a Sanskrit philologist, grammarian, and revered scholar in ancient India, variously dated between the 6th and 4th century BCE. Since the discovery and publication of his work by European scholars in the nineteenth century, Pāṇini has been considered the "first descriptive linguist", François & Ponsonnet (2013: 184). and even labelled as “the father of linguistics”. Pāṇini's grammar was influential on such foundational linguists as Ferdinand de Saussure and Leonard Bloomfield. Legacy Pāṇini is known for his text '' Aṣṭādhyāyī'', a sutra-style treatise on Sanskrit grammar, 3,996 verses or rules on linguistics, syntax and semantics in "eight chapters" which is the foundational text of th ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

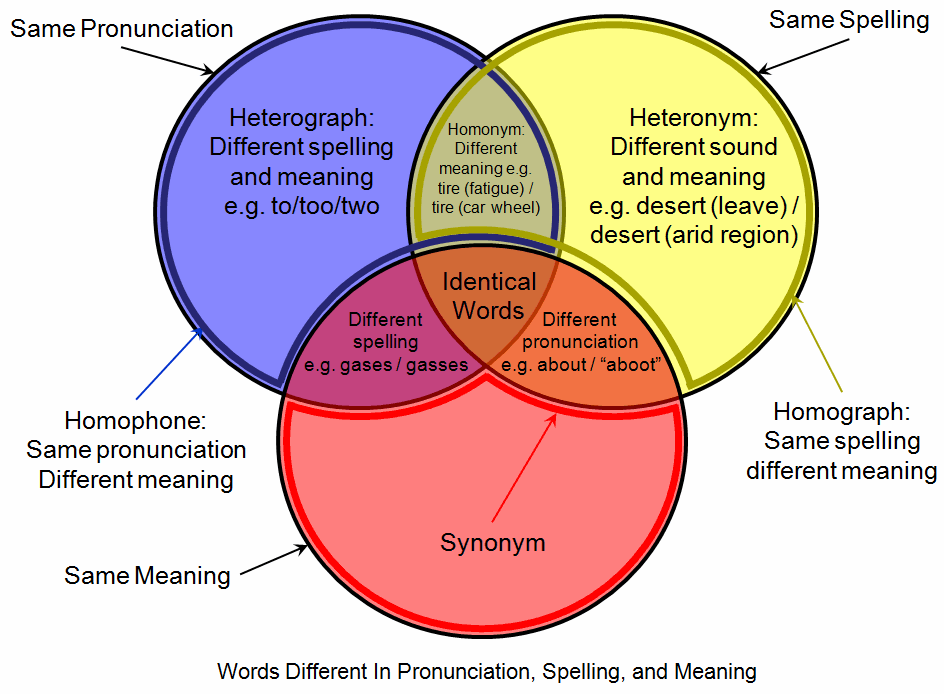

Homograph

A homograph (from the el, ὁμός, ''homós'', "same" and γράφω, ''gráphō'', "write") is a word that shares the same written form as another word but has a different meaning. However, some dictionaries insist that the words must also be pronounced differently, while the Oxford English Dictionary says that the words should also be of "different origin". In this vein, ''The Oxford Guide to Practical Lexicography'' lists various types of homographs, including those in which the words are discriminated by being in a different ''word class'', such as ''hit'', the verb ''to strike'', and ''hit'', the noun ''a blow''. If, when spoken, the meanings may be distinguished by different pronunciations, the words are also heteronyms. Words with the same writing ''and'' pronunciation (i.e. are both homographs and homophones) are considered homonyms. However, in a looser sense the term "homonym" may be applied to words with the same writing ''or'' pronunciation. Homograph disambigua ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Ambiguous Grammar

In computer science, an ambiguous grammar is a context-free grammar for which there exists a string that can have more than one leftmost derivation or parse tree, while an unambiguous grammar is a context-free grammar for which every valid string has a unique leftmost derivation or parse tree. Many languages admit both ambiguous and unambiguous grammars, while some languages admit only ambiguous grammars. Any non-empty language admits an ambiguous grammar by taking an unambiguous grammar and introducing a duplicate rule or synonym (the only language without ambiguous grammars is the empty language). A language that only admits ambiguous grammars is called an inherently ambiguous language, and there are inherently ambiguous context-free languages. Deterministic context-free grammars are always unambiguous, and are an important subclass of unambiguous grammars; there are non-deterministic unambiguous grammars, however. For computer programming languages, the reference grammar is o ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Backus–Naur Form

In computer science, Backus–Naur form () or Backus normal form (BNF) is a metasyntax notation for context-free grammars, often used to describe the syntax of languages used in computing, such as computer programming languages, document formats, instruction sets and communication protocols. It is applied wherever exact descriptions of languages are needed: for instance, in official language specifications, in manuals, and in textbooks on programming language theory. Many extensions and variants of the original Backus–Naur notation are used; some are exactly defined, including extended Backus–Naur form (EBNF) and augmented Backus–Naur form (ABNF). Overview A BNF specification is a set of derivation rules, written as ::= __expression__ where: * is a '' nonterminal'' (variable) and the __expression__ consists of one or more sequences of either terminal or nonterminal symbols; * means that the symbol on the left must be replaced with the expression on the right. * ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

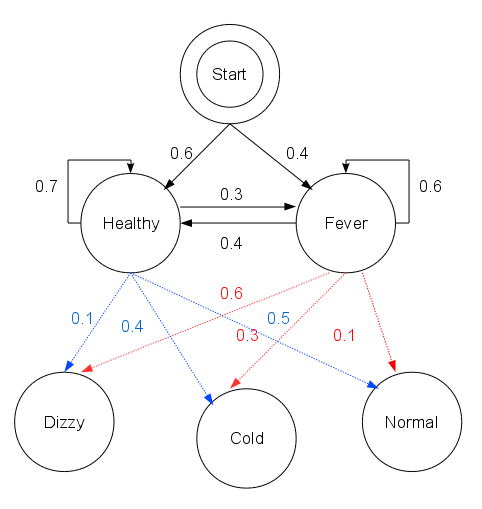

Viterbi Algorithm

The Viterbi algorithm is a dynamic programming algorithm for obtaining the maximum a posteriori probability estimate of the most likely sequence of hidden states—called the Viterbi path—that results in a sequence of observed events, especially in the context of Markov information sources and hidden Markov models (HMM). The algorithm has found universal application in decoding the convolutional codes used in both CDMA and GSM digital cellular, dial-up modems, satellite, deep-space communications, and 802.11 wireless LANs. It is now also commonly used in speech recognition, speech synthesis, diarization, keyword spotting, computational linguistics, and bioinformatics. For example, in speech-to-text (speech recognition), the acoustic signal is treated as the observed sequence of events, and a string of text is considered to be the "hidden cause" of the acoustic signal. The Viterbi algorithm finds the most likely string of text given the acoustic signal. History The Viterb ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Dynamic Programming

Dynamic programming is both a mathematical optimization method and a computer programming method. The method was developed by Richard Bellman in the 1950s and has found applications in numerous fields, from aerospace engineering to economics. In both contexts it refers to simplifying a complicated problem by breaking it down into simpler sub-problems in a recursive manner. While some decision problems cannot be taken apart this way, decisions that span several points in time do often break apart recursively. Likewise, in computer science, if a problem can be solved optimally by breaking it into sub-problems and then recursively finding the optimal solutions to the sub-problems, then it is said to have '' optimal substructure''. If sub-problems can be nested recursively inside larger problems, so that dynamic programming methods are applicable, then there is a relation between the value of the larger problem and the values of the sub-problems.Cormen, T. H.; Leiserson, C. E.; Riv ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |