
Post Silicon Validation

Post-silicon validation and debug is the last step in the development of a semiconductor integrated circuit.

Pre-silicon process

During the pre-silicon process, engineers test devices in a virtual environment with sophisticated simulation, emulation, and formal verification tools. In contrast, post-silicon validation tests occur on actual devices running at-speed in commercial, real-world system boards using logic analyzer and assertion-based tools.

Reasoning

Large semiconductor companies spend millions creating new components; these are the "sunk costs" of design implementation. Consequently, it is imperative that the new chip function in full and perfect compliance to its specification, and be de ...

Integrated Circuit

An integrated circuit or monolithic integrated circuit (also referred to as an IC, a chip, or a microchip) is a set of electronic circuits on one small flat piece (or "chip") of semiconductor material, usually silicon. Large numbers of tiny MOSFETs (metal–oxide–semiconductor field-effect transistors) integrate into a small chip. This results in circuits that are orders of magnitude smaller, faster, and less expensive than those constructed of discrete electronic components. The IC's mass production capability, reliability, and building-block approach to integrated circuit design have ensured the rapid adoption of standardized ICs in place of designs using discrete transistors. ICs are now used in virtually all electronic equipment and have revolutionized the world of electronics. Computers, mobile phones and other home appliances are now inextricable parts of the structure of modern societies, made possible by the small size and low cost of ICs such as modern computer ...

Simulation

A simulation is the imitation of the operation of a real-world process or system over time. Simulations require the use of models; the model represents the key characteristics or behaviors of the selected system or process, whereas the simulation represents the evolution of the model over time. Often, computers are used to execute the simulation. Simulation is used in many contexts, such as simulation of technology for performance tuning or optimizing, safety engineering, testing, training, education, and video games. Simulation is also used with scientific modelling of natural systems or human systems to gain insight into their functioning, as in economics. Simulation can be used to show the eventual real effects of alternative conditions and courses of action. Simulation is also used when the real system cannot be engaged, because it may not be accessible, or it may be dangerous or unacceptable to engage, or it is being designed bu ...
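The distinction between a model and a simulation can be illustrated with a minimal sketch. The example below is an assumption chosen for illustration, not taken from the article: the model is Newton's law of cooling discretized with an explicit Euler step, and the names `step` and `simulate` as well as all parameter values are hypothetical.

```python
def step(temperature, ambient, rate, dt):
    """Model: one explicit Euler step of Newton's law of cooling."""
    return temperature + rate * (ambient - temperature) * dt


def simulate(initial, ambient, rate, dt, steps):
    """Simulation: evolve the model over time and record every state."""
    trajectory = [initial]
    for _ in range(steps):
        trajectory.append(step(trajectory[-1], ambient, rate, dt))
    return trajectory


# Hypothetical scenario: a 90-degree object cooling in a 20-degree room.
trajectory = simulate(initial=90.0, ambient=20.0, rate=0.1, dt=1.0, steps=50)
print(f"start={trajectory[0]:.1f}, end={trajectory[-1]:.2f}")
```

Here `step` encodes the behavior of the system (the model), while `simulate` produces its evolution over time (the simulation). Changing `ambient` or `rate` shows the effect of alternative conditions without touching a real system.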

Emulator

In computing, an emulator is hardware or software that enables one computer system (called the host) to behave like another computer system (called the guest). An emulator typically enables the host system to run software or use peripheral devices designed for the guest system. Emulation refers to the ability of a computer program in an electronic device to emulate (or imitate) another program or device. Many printers, for example, are designed to emulate HP LaserJet printers because so much software is written for HP printers. If a non-HP printer emulates an HP printer, any software written for a real HP printer will also run in the non-HP printer emulation and produce equivalent printing. Since at least the 1990s, many video game enthusiasts and hobbyists have used emulators to play classic arcade games from the 1980s using the games' original 1980s machine code and data, which is interpreted by a current-era s ...
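The idea of a host interpreting a guest's instructions can be sketched in a few lines. The guest machine below is entirely hypothetical: its accumulator design and its five opcodes (`LOAD`, `ADD`, `OUT`, `JNZ`, `HALT`) are assumptions made for illustration and do not correspond to any real processor.

```python
def run_guest(program, max_steps=1000):
    """Interpret a program written for a hypothetical accumulator machine."""
    acc = 0        # guest accumulator register
    pc = 0         # guest program counter
    output = []    # values emitted by the guest's OUT instruction
    steps = 0
    while pc < len(program):
        if steps >= max_steps:
            raise RuntimeError("guest program exceeded the step limit")
        steps += 1
        op, arg = program[pc]
        pc += 1
        if op == "LOAD":       # acc <- arg
            acc = arg
        elif op == "ADD":      # acc <- acc + arg
            acc += arg
        elif op == "OUT":      # emit the accumulator
            output.append(acc)
        elif op == "JNZ":      # jump to address arg if acc is non-zero
            if acc != 0:
                pc = arg
        elif op == "HALT":
            break
        else:
            raise ValueError(f"unknown guest opcode: {op}")
    return output


# Guest program: count down from 3.
countdown = [
    ("LOAD", 3),
    ("OUT", None),
    ("ADD", -1),
    ("JNZ", 1),
    ("HALT", None),
]
print(run_guest(countdown))
```

The host (the Python interpreter) keeps the guest's registers as ordinary variables and dispatches on each guest opcode in turn. Practical emulators follow the same fetch-decode-execute pattern, usually with many optimizations this sketch omits.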

Formal Verification

In the context of hardware and software systems, formal verification is the act of proving or disproving the correctness of intended algorithms underlying a system with respect to a certain formal specification or property, using formal methods of mathematics. Formal verification can be helpful in proving the correctness of systems such as: cryptographic protocols, combinational circuits, digital circuits with internal memory, and software expressed as source code. The verification of these systems is done by providing a formal proof on an abstract mathematical model of the system, the correspondence between the mathematical model and the nature of the system being otherwise known by construction. Examples of mathematical objects often used to model systems are: finite-state machines, labelled transition systems, Petri nets, vector addition systems, timed automata, hybrid automata, process algebra, formal semantics of programming languages such as operational semantics, ...
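One simple way to check a property on a finite-state model is to explore every reachable state exhaustively. The sketch below illustrates that single technique (explicit-state reachability checking) and is not a general account of formal verification. The two-process lock model, the `check_lock` switch that injects a bug, and all function names are hypothetical.

```python
from collections import deque


def check_invariant(initial, successors, invariant):
    """Breadth-first search over reachable states.

    Returns None if the invariant holds in every reachable state,
    otherwise the shortest path from the initial state to a violation.
    """
    parent = {initial: None}
    queue = deque([initial])
    while queue:
        state = queue.popleft()
        if not invariant(state):
            path = []
            while state is not None:
                path.append(state)
                state = parent[state]
            return path[::-1]
        for nxt in successors(state):
            if nxt not in parent:
                parent[nxt] = state
                queue.append(nxt)
    return None


def successors(state, check_lock=True):
    """Hypothetical model: two processes sharing one lock."""
    procs, locked = state
    for i, p in enumerate(procs):
        if p == "idle" and (not locked or not check_lock):
            new = list(procs)
            new[i] = "crit"
            yield (tuple(new), True)    # enter the critical section
        elif p == "crit":
            new = list(procs)
            new[i] = "idle"
            yield (tuple(new), False)   # leave and release the lock


def mutual_exclusion(state):
    """Property: at most one process is in the critical section."""
    procs, _ = state
    return procs.count("crit") <= 1


initial = (("idle", "idle"), False)
correct = check_invariant(initial, successors, mutual_exclusion)
buggy = check_invariant(
    initial, lambda s: successors(s, check_lock=False), mutual_exclusion
)
print("correct model:", correct)
print("buggy model counterexample:", buggy)
```

For the correct model the search visits all reachable states and finds no violation, which proves the property for this model. For the buggy model it returns a counterexample trace ending in a state where both processes are in the critical section.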

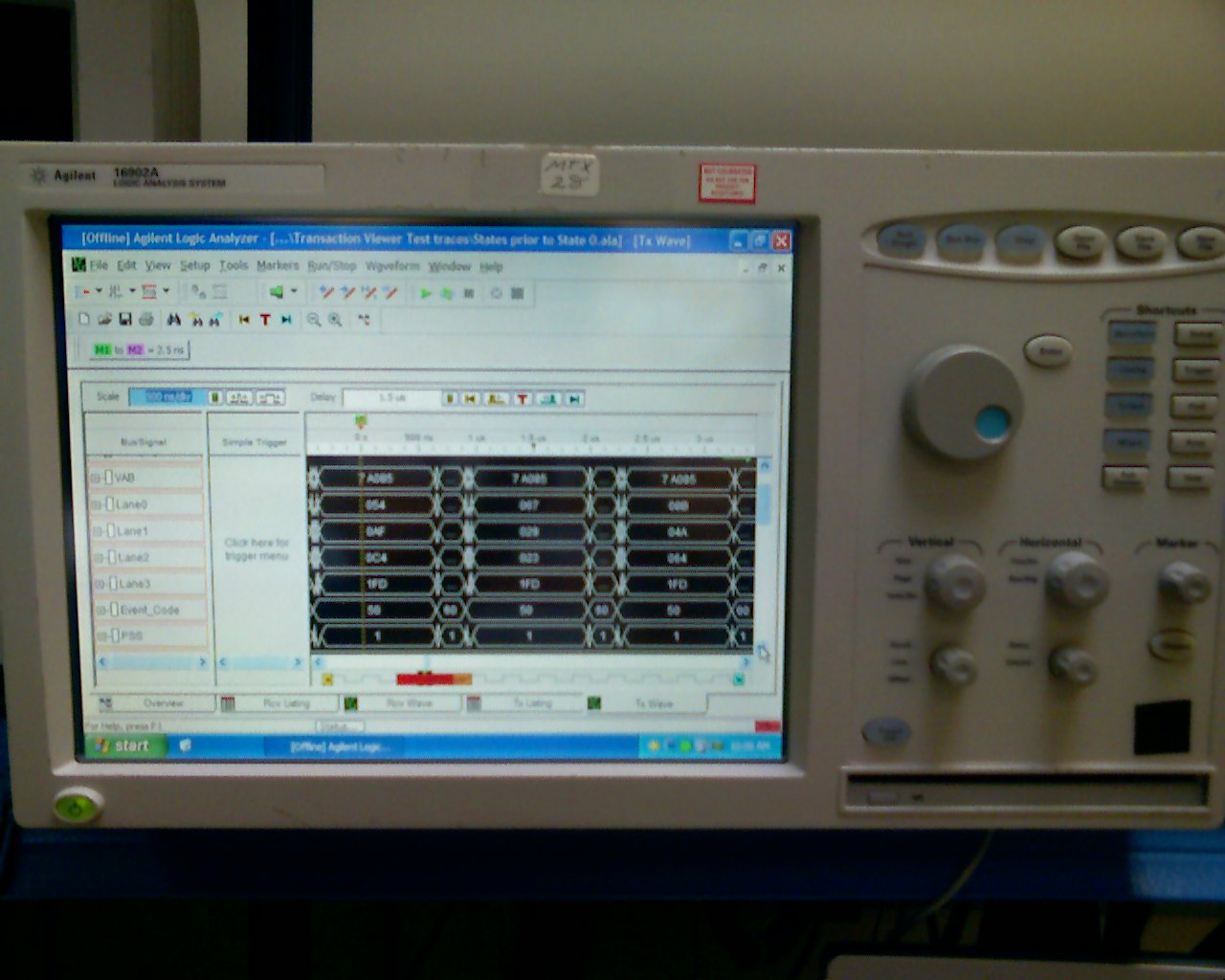

Logic Analyzer

A logic analyzer is an electronic instrument that captures and displays multiple signals from a digital system or digital circuit. A logic analyzer may convert the captured data into timing diagrams, protocol decodes, state machine traces, assembly language, or may correlate assembly with source-level software. Logic analyzers have advanced triggering capabilities, and are useful when a user needs to see the timing relationships between many signals in a digital system.

Overview

Presently, there are three distinct categories of logic analyzers available on the market:
* Modular LAs, which consist of both a chassis or mainframe and logic analyzer modules. The mainframe/chassis contains the display, controls, control computer, and multiple slots into which the actual data-capturing hardware is installed. The modules each have a specific number of channels, and multiple modules may be combined to obtain a very high channel count. While modular logic analyzers are typically mor ...

Assertion (computing)

In computer programming, specifically when using the imperative programming paradigm, an assertion is a predicate (a Boolean-valued function over the state space, usually expressed as a logical proposition using the variables of a program) connected to a point in the program, that always should evaluate to true at that point in code execution. Assertions can help a programmer read the code, help a compiler compile it, or help the program detect its own defects. For the latter, some programs check assertions by actually evaluating the predicate as they run. Then, if it is not in fact true – an assertion failure – the program considers itself to be broken and typically deliberately crashes or throws an assertion failure exception.

Details

The following code contains two assertions, x > 0 and x > 1, and they are indeed true at the indicated points during execution: x = 1; assert x > 0; x++; assert x > 1; Programmers can use assertions to help specify programs and to reas ...
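The two-assertion example can be written as a runnable Python sketch. Python has no `x++` operator, so `x += 1` is used instead; the function name `increment_checked` and the assertion messages are hypothetical. Note that Python skips `assert` statements when run with the `-O` flag, so the failure branch below assumes a normal, unoptimized run.

```python
def increment_checked(x):
    """Increment x, asserting the two predicates from the example."""
    assert x > 0, "expected x > 0 before the increment"
    x += 1
    assert x > 1, "expected x > 1 after the increment"
    return x


# Both assertions hold when starting from x = 1.
result = increment_checked(1)

# Starting from x = 0 violates the first assertion, which raises
# AssertionError instead of letting the program continue in a bad state.
try:
    increment_checked(0)
    violation_detected = False
except AssertionError:
    violation_detected = True

print(result, violation_detected)
```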

Sunk Costs

In economics and business decision-making, a sunk cost (also known as retrospective cost) is a cost that has already been incurred and cannot be recovered. Sunk costs are contrasted with prospective costs, which are future costs that may be avoided if action is taken. In other words, a sunk cost is a sum paid in the past that is no longer relevant to decisions about the future. Even though economists argue that sunk costs are no longer relevant to future rational decision-making, people in everyday life often take previous expenditures in situations, such as repairing a car or house, into their future decisions regarding those properties.

Bygones principle

According to classical economics and standard microeconomic theory, only prospective (future) costs are relevant to a rational decision. At any moment in time, the best thing to do depends only on current alternatives. The only things that matter are the future consequences. Past mistakes are irrelevant. Any cost ...

Logic In Computer Science

Logic in computer science covers the overlap between the field of logic and that of computer science. The topic can essentially be divided into three main areas:
* Theoretical foundations and analysis
* Use of computer technology to aid logicians
* Use of concepts from logic for computer applications

Theoretical foundations and analysis

Logic plays a fundamental role in computer science. Some of the key areas of logic that are particularly significant are computability theory (formerly called recursion theory), modal logic and category theory. The theory of computation is based on concepts defined by logicians and mathematicians such as Alonzo Church and Alan Turing. Church first showed the existence of algorithmically unsolvable problems using his notion of lambda-definability. Turing gave the first compelling analysis of what can be called a mechanical procedure and Kurt Gödel asserted that he found Turing's analysis "perfect." In addition some other major areas of theor ...

Observability

Observability is a measure of how well internal states of a system can be inferred from knowledge of its external outputs. In control theory, the observability and controllability of a linear system are mathematical duals. The concept of observability was introduced by the Hungarian-American engineer Rudolf E. Kálmán for linear dynamic systems. A dynamical system designed to estimate the state of a system from measurements of the outputs is called a state observer or simply an observer for that system.

Definition

Consider a physical system modeled in state-space representation. A system is said to be observable if, for every possible evolution of state and control vectors, the current state can be estimated using only the information from outputs (physically, this generally corresponds to information obtained by sensors). In other words, one can determine the behavior of the entire system from the system's outputs. On the other hand, if the system is not observable, there ar ...
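For a linear time-invariant system with state matrix A (n × n) and output matrix C, the standard test is the Kalman rank condition: the system is observable exactly when the matrix formed by stacking C, CA, ..., CA^(n-1) has rank n. The excerpt above breaks off before stating this criterion, so the sketch below rests on that assumption. The helper names are hypothetical, and exact `Fraction` arithmetic is used to avoid floating-point rank errors.

```python
from fractions import Fraction


def mat_mul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def rank(matrix):
    """Rank by Gaussian elimination over exact rationals."""
    rows = [[Fraction(v) for v in row] for row in matrix]
    found = 0
    for col in range(len(rows[0])):
        pivot = next((r for r in range(found, len(rows))
                      if rows[r][col] != 0), None)
        if pivot is None:
            continue
        rows[found], rows[pivot] = rows[pivot], rows[found]
        for r in range(found + 1, len(rows)):
            factor = rows[r][col] / rows[found][col]
            rows[r] = [a - factor * b for a, b in zip(rows[r], rows[found])]
        found += 1
    return found


def observability_matrix(A, C):
    """Stack C, CA, ..., CA^(n-1) row-wise."""
    stacked, current = [], C
    for _ in range(len(A)):
        stacked.extend(current)
        current = mat_mul(current, A)
    return stacked


def is_observable(A, C):
    return rank(observability_matrix(A, C)) == len(A)


# Hypothetical example: a double integrator with state (position, velocity).
A = [[0, 1],
     [0, 0]]
print(is_observable(A, [[1, 0]]))  # output measures position
print(is_observable(A, [[0, 1]]))  # output measures velocity only
```

Measuring position makes the double integrator observable, because velocity can be inferred from how position changes. Measuring only velocity does not, because the absolute position never influences the output.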

Field-programmable Gate Array

A field-programmable gate array (FPGA) is an integrated circuit designed to be configured by a customer or a designer after manufacturing, hence the term field-programmable. The FPGA configuration is generally specified using a hardware description language (HDL), similar to that used for an application-specific integrated circuit (ASIC). Circuit diagrams were previously used to specify the configuration, but this is increasingly rare due to the advent of electronic design automation tools. FPGAs contain an array of programmable logic blocks, and a hierarchy of reconfigurable interconnects allowing blocks to be wired together. Logic blocks can be configured to perform complex combinational functions, or act as simple logic gates like AND and XOR. In most FPGAs, logic blocks also include memory elements, which may be simple flip-flops or more complete blocks of memory. Many FPGAs can be reprogrammed to implement different logic functions, allowing flexible reconfigur ...
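How one logic block can act as an AND gate or an XOR gate depending on its configuration can be sketched with a lookup table (LUT). Modelling the block as a LUT is an assumption made here for illustration, since the excerpt does not say how logic blocks are built; the class `LookupTable` and the half-adder wiring are likewise hypothetical.

```python
class LookupTable:
    """A configurable logic block modelled as a truth table."""

    def __init__(self, n_inputs, truth_table):
        if len(truth_table) != 2 ** n_inputs:
            raise ValueError("truth table needs 2**n_inputs entries")
        self.n_inputs = n_inputs
        self.truth_table = list(truth_table)   # the "configuration bits"

    def evaluate(self, *inputs):
        if len(inputs) != self.n_inputs:
            raise ValueError("wrong number of inputs")
        index = 0
        for bit in inputs:                     # first input is the MSB
            index = (index << 1) | int(bool(bit))
        return self.truth_table[index]


# The same block type, configured two different ways.
and_gate = LookupTable(2, [0, 0, 0, 1])
xor_gate = LookupTable(2, [0, 1, 1, 0])


def half_adder(a, b):
    """Wire two configured blocks together: returns (sum, carry)."""
    return xor_gate.evaluate(a, b), and_gate.evaluate(a, b)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, half_adder(a, b))
```

Reconfiguring the device corresponds to loading different truth-table contents into the same blocks, and the interconnect corresponds to how the outputs of `evaluate` are routed into other blocks.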

Electronic Design Automation

Electronic design automation (EDA), also referred to as electronic computer-aided design (ECAD), is a category of software tools for designing electronic systems such as integrated circuits and printed circuit boards. The tools work together in a design flow that chip designers use to design and analyze entire semiconductor chips. Since a modern semiconductor chip can have billions of components, EDA tools are essential for their design; this article in particular describes EDA specifically with respect to integrated circuits (ICs).

History

Early days

Prior to the development of EDA, integrated circuits were designed by hand and manually laid out. Some advanced shops used geometric software to generate tapes for a Gerber photoplotter, responsible for generating a monochromatic exposure image, but even those copied digital recordings of mechanically drawn components. The process was fundamentally graphic, with the translation f ...

List Of EDA Companies

A list of notable electronic design automation (EDA) companies.

See also
* List of items in the category Electronic Design Automation companies
* Comparison of EDA software
* Cadence Design Systems: Acquisitions and mergers
* Synopsys: Acquisitions, mergers, spinoffs

