
Poly-APX

In computational complexity theory, the class APX (an abbreviation of "approximable") is the set of NP optimization problems that allow polynomial-time approximation algorithms with approximation ratio bounded by a constant (or constant-factor approximation algorithms for short). In simple terms, problems in this class have efficient algorithms that can find an answer within some fixed multiplicative factor of the optimal answer. An approximation algorithm is called an f(n)-approximation algorithm for input size n if it can be proven that the solution that the algorithm finds is at most a multiplicative factor of f(n) times worse than the optimal solution. Here, f(n) is called the approximation ratio. Problems in APX are those with algorithms for which the approximation ratio f(n) is a constant c. The approximation ratio is conventionally stated greater than 1. In the case of minimization problems, f(n) is the found solution's score divided by the optimum solution's score, whi ...
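As a rough illustration of the ratio described in this entry, the sketch below computes it for a single instance. The function name and the `minimize` flag are hypothetical. The minimization case follows the excerpt; the maximization case (optimum divided by found score, so the ratio stays at least 1) is an assumption based on the usual convention, since the excerpt is cut off before it gets there.

```python
def approximation_ratio(found: float, optimum: float, minimize: bool = True) -> float:
    """Ratio between a found solution's score and the optimum score.

    Minimization: found / optimum, as stated in the excerpt.
    Maximization: optimum / found (assumed convention, keeps the ratio >= 1).
    """
    if found <= 0 or optimum <= 0:
        raise ValueError("scores must be positive")
    return found / optimum if minimize else optimum / found


# A minimization instance: the algorithm found cost 30, the optimum is 20.
print(approximation_ratio(30, 20))                   # ratio for this instance
# A maximization instance: the algorithm found value 10, the optimum is 20.
print(approximation_ratio(10, 20, minimize=False))   # ratio for this instance
```

An algorithm is a c-approximation only if this ratio is at most c on every instance, which a single computation like this cannot establish.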

Max/min CSP/Ones Classification Theorems

In computational complexity theory, a branch of computer science, the Max/min CSP/Ones classification theorems state necessary and sufficient conditions that determine the complexity classes of problems about satisfying a subset S of boolean relations. They are similar to Schaefer's dichotomy theorem, which classifies the complexity of satisfying finite sets of relations; however, the Max/min CSP/Ones classification theorems give information about the complexity of approximating an optimal solution to a problem defined by S. Given a set S of clauses, the Max constraint satisfaction problem (CSP) is to find the maximum number (in the weighted case: the maximal sum of weights) of satisfiable clauses in S. Similarly, the Min CSP problem is to minimize the number of unsatisfied clauses. The Max Ones problem is to maximize the number of boolean variables in S that are set to 1 under the restriction that all clauses are satisfied, and the Min Ones proble ...

Approximation-preserving Reduction

In computability theory and computational complexity theory, especially the study of approximation algorithms, an approximation-preserving reduction is an algorithm for transforming one optimization problem into another problem, such that the distance of solutions from optimal is preserved to some degree. Approximation-preserving reductions are a subset of more general reductions in complexity theory; the difference is that approximation-preserving reductions usually make statements on approximation problems or optimization problems, as opposed to decision problems. Intuitively, problem A is reducible to problem B via an approximation-preserving reduction if, given an instance of problem A and a (possibly approximate) solver for problem B, one can convert the instance of problem A into an instance of problem B, apply the solver for problem B, and recover a solution for problem A that also has some guarantee of approximation. Definition Unlike reductions on decision problems, an ...

Independent Set (graph Theory)

In graph theory, an independent set, stable set, coclique or anticlique is a set of vertices in a graph, no two of which are adjacent. That is, it is a set S of vertices such that for every two vertices in S, there is no edge connecting the two. Equivalently, each edge in the graph has at most one endpoint in S. A set is independent if and only if it is a clique in the graph's complement. The size of an independent set is the number of vertices it contains. Independent sets have also been called "internally stable sets", of which "stable set" is a shortening. A maximal independent set is an independent set that is not a proper subset of any other independent set. A maximum independent set is an independent set of largest possible size for a given graph G. This size is called the independence number of G and is usually denoted by \alpha(G). The optimization problem of finding such a set is called the maximum independent set problem. It is a strongly NP-hard problem. As suc ...
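The distinction between maximal and maximum independent sets in this entry can be made concrete with a small sketch. The function names and the edge-list representation are illustrative assumptions, and the greedy routine is only a simple way to obtain some maximal independent set, not an algorithm for the maximum independent set problem.

```python
def is_independent(edges, S):
    """True if no edge has both endpoints in S (each edge has at most one endpoint in S)."""
    S = set(S)
    return all(not (u in S and v in S) for u, v in edges)


def greedy_maximal_independent_set(vertices, edges):
    """Scan vertices in the given order, keeping each one that has no chosen neighbor.

    The result is maximal (no vertex can be added) but not necessarily maximum.
    """
    adj = {v: set() for v in vertices}
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    chosen = set()
    for v in vertices:
        if adj[v].isdisjoint(chosen):
            chosen.add(v)
    return chosen


# Star graph: center 0 joined to leaves 1, 2, 3.
star_vertices = [0, 1, 2, 3]
star_edges = [(0, 1), (0, 2), (0, 3)]
print(greedy_maximal_independent_set(star_vertices, star_edges))  # center scanned first
print(is_independent(star_edges, {1, 2, 3}))                      # the three leaves
```

On the star graph, scanning the center first yields a maximal independent set of size 1, while the three leaves form an independent set of size 3, so the greedy result here is maximal without being maximum.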

Computational Complexity Theory

In theoretical computer science and mathematics, computational complexity theory focuses on classifying computational problems according to their resource usage, and relating these classes to each other. A computational problem is a task solved by a computer. A computational problem is solvable by mechanical application of mathematical steps, such as an algorithm. A problem is regarded as inherently difficult if its solution requires significant resources, whatever the algorithm used. The theory formalizes this intuition by introducing mathematical models of computation to study these problems and quantifying their computational complexity, i.e., the amount of resources needed to solve them, such as time and storage. Other measures of complexity are also used, such as the amount of communication (used in communication complexity), the number of gates in a circuit (used in circuit complexity) and the number of processors (used in parallel computing). One of the roles of compu ...

Dominating Set

In graph theory, a dominating set for a graph G is a subset D of its vertices, such that any vertex of G is either in D, or has a neighbor in D. The domination number \gamma(G) is the number of vertices in a smallest dominating set for G. The dominating set problem concerns testing whether \gamma(G) \le K for a given graph G and input K; it is a classical NP-complete decision problem in computational complexity theory. Therefore it is believed that there may be no efficient algorithm that can compute \gamma(G) for all graphs G. However, there are efficient approximation algorithms, as well as efficient exact algorithms for certain graph classes. Dominating sets are of practical interest in several areas. In wireless networking, dominating sets are used to find efficient routes within ad-hoc mobile networks. They have also been used in document summarization, and in designing secure systems for electrical grids. Formal definition Given an undirected graph G = (V, E), a subset of vertices D\subseteq V is called a dominating ...
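The definition in this entry translates directly into a membership check, sketched below alongside a simple greedy heuristic in the style of greedy set cover. The function names and the adjacency-dictionary representation (each vertex mapped to a set of neighbors) are assumptions for illustration; the excerpt does not say which approximation algorithms it has in mind, and this sketch is not claimed to be one of them.

```python
def is_dominating(adj, D):
    """True if every vertex is in D or has a neighbor in D."""
    D = set(D)
    return all(v in D or adj[v] & D for v in adj)


def greedy_dominating_set(adj):
    """Repeatedly pick the vertex whose closed neighborhood covers the most undominated vertices."""
    undominated = set(adj)
    D = set()
    while undominated:
        best = max(adj, key=lambda v: len(({v} | adj[v]) & undominated))
        D.add(best)
        undominated -= {best} | adj[best]
    return D


# Path on five vertices: 0 - 1 - 2 - 3 - 4.
path = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2, 4}, 4: {3}}
D = greedy_dominating_set(path)
print(D, is_dominating(path, D))
```

The loop always terminates because any undominated vertex covers at least itself, so each round removes at least one vertex from the undominated set.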

Gerhard J

Gerhard is a name of Germanic origin and may refer to: Given name * Gerhard (bishop of Passau) (fl. 932–946), German prelate * Gerhard III, Count of Holstein-Rendsburg (1292–1340), German prince, regent of Denmark * Gerhard Barkhorn (1919–1983), German World War II flying ace * Gerhard Berger (born 1959), Austrian racing driver * Gerhard Boldt (1918–1981), German soldier and writer * Gerhard de Beer (born 1994), South African football player * Gerhard Diephuis (1817–1892), Dutch jurist * Gerhard Domagk (1895–1964), German pathologist and bacteriologist and Nobel Laureate * Gerhard Dorn (c.1530–1584), Flemish philosopher, translator, alchemist, physician and bibliophile * Gerhard Ertl (born 1936), German physicist and Nobel Laureate * Gerhard Fieseler (1896–1987), German World War I flying ace * Gerhard Flesch (1909–1948), German Nazi Gestapo and SS officer executed for war crimes * Gerhard Gentzen (1909–1945), German mathematician and logician * Gerhard ...

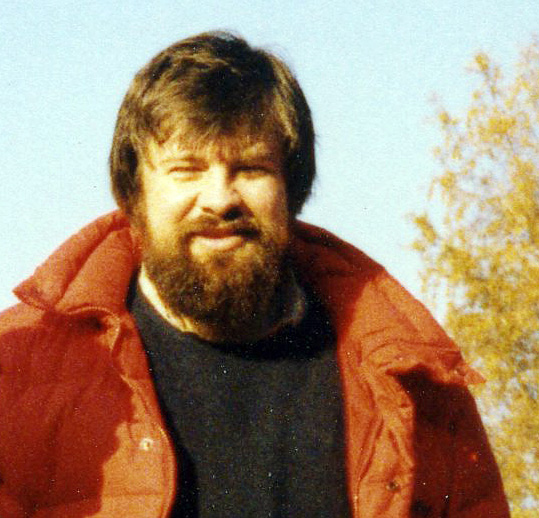

Marek Karpinski

Marek Karpinski, at the Hausdorff Center for Mathematics, Excellence Cluster, is known for his research in the theory of algorithms and their applications.

MaxSNP

In computational complexity theory, SNP (from Strict NP) is a complexity class containing a limited subset of NP based on its logical characterization in terms of graph-theoretical properties. It forms the basis for the definition of the class MaxSNP of optimization problems. It is defined as the class of problems that are properties of relational structures (such as graphs) expressible by a second-order logic formula of the following form: \exists S_1 \dots \exists S_\ell \, \forall v_1 \dots \forall v_m \,\phi(R_1,\dots,R_k,S_1,\dots,S_\ell,v_1,\dots,v_m), where R_1,\dots,R_k are relations of the structure (such as the adjacency relation, for a graph), S_1,\dots,S_\ell are unknown relations (sets of tuples of vertices), and \phi is a quantifier-free formula: any boolean combination of the relations. That is, only existential second-order quantification (over relations) is allowed and only universal first-order quantification (over vertices) is all ...

Approximation Algorithm

In computer science and operations research, approximation algorithms are efficient algorithms that find approximate solutions to optimization problems (in particular NP-hard problems) with provable guarantees on the distance of the returned solution to the optimal one. Approximation algorithms naturally arise in the field of theoretical computer science as a consequence of the widely believed P ≠ NP conjecture. Under this conjecture, a wide class of optimization problems cannot be solved exactly in polynomial time. The field of approximation algorithms, therefore, tries to understand how closely it is possible to approximate optimal solutions to such problems in polynomial time. In an overwhelming majority of the cases, the guarantee of such algorithms is a multiplicative one expressed as an approximation ratio or approximation factor i.e., the optimal solution is always guaranteed to be within a (predetermined) multiplicative factor of the returned solution. However, there are ...

Complexity Class

In computational complexity theory, a complexity class is a set of computational problems of related resource-based complexity. The two most commonly analyzed resources are time and memory. In general, a complexity class is defined in terms of a type of computational problem, a model of computation, and a bounded resource like time or memory. In particular, most complexity classes consist of decision problems that are solvable with a Turing machine, and are differentiated by their time or space (memory) requirements. For instance, the class P is the set of decision problems solvable by a deterministic Turing machine in polynomial time. There are, however, many complexity classes defined in terms of other types of problems (e.g. counting problems and function problems) and using other models of computation (e.g. probabilistic Turing machines, interactive proof systems, Boolean circuits, and quantum computers). The study of the relationships between complexity class ...

Edge Coloring

In graph theory, an edge coloring of a graph is an assignment of "colors" to the edges of the graph so that no two incident edges have the same color. For example, the figure to the right shows an edge coloring of a graph by the colors red, blue, and green. Edge colorings are one of several different types of graph coloring. The edge-coloring problem asks whether it is possible to color the edges of a given graph using at most k different colors, for a given value of k, or with the fewest possible colors. The minimum required number of colors for the edges of a given graph is called the chromatic index of the graph. For example, the edges of the graph in the illustration can be colored by three colors but cannot be colored by two colors, so the graph shown has chromatic index three. By Vizing's theorem, the number of colors needed to edge color a simple graph is either its maximum degree \Delta or \Delta+1. For some graphs, such as bipartite graphs and high-degree planar graphs, the number of ...
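The condition that no two incident edges share a color can be checked mechanically, as in the sketch below. The function names and the dictionary representation (each edge, given as a vertex pair, mapped to a color) are illustrative assumptions, and the graph is assumed to be simple (no self-loops or repeated edges). The triangle used here is a small stand-in example, not the figure the excerpt refers to.

```python
def is_proper_edge_coloring(coloring):
    """True if no vertex is an endpoint of two edges with the same color."""
    seen = set()  # (vertex, color) pairs already used
    for (u, v), color in coloring.items():
        for endpoint in (u, v):
            if (endpoint, color) in seen:
                return False
            seen.add((endpoint, color))
    return True


def max_degree(edges):
    """Largest number of edges meeting at a single vertex."""
    degree = {}
    for u, v in edges:
        degree[u] = degree.get(u, 0) + 1
        degree[v] = degree.get(v, 0) + 1
    return max(degree.values(), default=0)


triangle_three = {(0, 1): "red", (1, 2): "blue", (0, 2): "green"}
triangle_two = {(0, 1): "red", (1, 2): "blue", (0, 2): "red"}
print(is_proper_edge_coloring(triangle_three))  # three colors on a triangle
print(is_proper_edge_coloring(triangle_two))    # reuses red at vertex 0
print(max_degree(triangle_three))               # maximum degree of the triangle
```

In a triangle every pair of edges is incident, so three colors are needed even though the maximum degree is 2; this is a case where the chromatic index equals the maximum degree plus one.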

Token Reconfiguration

In computational complexity theory and combinatorics, the token reconfiguration problem is a reconfiguration problem on a graph with both an initial and desired state for tokens. Given a graph G, an initial state of tokens is defined by a subset V \subset V(G) of the vertices of the graph; let n = |V|. Moving a token from vertex v_1 to vertex v_2 is valid if v_1 and v_2 are joined by a path in G that does not contain any other tokens; note that the distance traveled within the graph is inconsequential, and moving a token across multiple edges sequentially is considered a single move. A desired end state is defined as another subset V' \subset V(G). The goal is to minimize the number of valid moves to reach the end state from the initial state. Motivation The problem is motivated by so-called sliding puzzles, which are in fact a variant of this problem, often restricted to rectangular grid graphs with no holes. The most famous such puzzle, the 15 puzzle, is a variant of th ...
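The validity rule for a single move in this entry amounts to a reachability test that avoids occupied vertices. Below is a minimal sketch using breadth-first search; the function name, the adjacency-dictionary representation, and the choice to reject moves onto an occupied destination are assumptions made for illustration.

```python
from collections import deque


def is_valid_move(adj, tokens, src, dst):
    """True if the token on src can reach dst along a path containing no other tokens."""
    if src not in tokens or dst in tokens:
        return False
    blocked = set(tokens) - {src}   # every other token blocks the path
    seen = {src}
    queue = deque([src])
    while queue:
        u = queue.popleft()
        if u == dst:
            return True
        for w in adj[u]:
            if w not in seen and w not in blocked:
                seen.add(w)
                queue.append(w)
    return False


# Path graph 0 - 1 - 2 - 3 with tokens on vertices 0 and 2.
path = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
tokens = {0, 2}
print(is_valid_move(path, tokens, 0, 1))  # free neighbor
print(is_valid_move(path, tokens, 0, 3))  # the token on 2 is in the way
print(is_valid_move(path, tokens, 2, 3))  # free neighbor on the other side
```

Because the number of edges crossed does not matter, the search only needs to decide whether the destination is reachable, not how far away it is.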