
Pittsburgh Supercomputing Center

The Pittsburgh Supercomputing Center (PSC) is a high-performance computing and networking center founded in 1986 and one of the original five NSF Supercomputing Centers (The Pennsylvania Center for the Book, "PGH Supercomputing Center", pabook.libraries.psu.edu, retrieved 2013-07-17). PSC is a joint effort of … and the … in …

High Performance Computing

High-performance computing (HPC) uses supercomputers and computer clusters to solve advanced computation problems.

Overview

HPC integrates systems administration (including network and security knowledge) and parallel programming into a multidisciplinary field that combines digital electronics, computer architecture, system software, programming languages, algorithms, and computational techniques. HPC technologies are the tools and systems used to design and build high-performance computing systems. In recent years, HPC systems have shifted from supercomputers toward computing clusters and grids. Because clusters and grids depend heavily on networking, a collapsed network backbone is favored for HPC deployments: the architecture is simple to troubleshoot, and upgrades can be applied to a single router rather than to many. The term is most commonly associated with computing used for scientific research or com ...

Commonwealth Of Pennsylvania

Pennsylvania, officially the Commonwealth of Pennsylvania, is a state spanning the Mid-Atlantic, Northeastern, Appalachian, and Great Lakes regions of the United States. It borders Delaware to its southeast, Maryland to its south, West Virginia to its southwest, Ohio to its west, Lake Erie and the Canadian province of Ontario to its northwest, New York to its north, and the Delaware River and New Jersey to its east. Pennsylvania is the fifth-most populous state in the nation, with over 13 million residents as of 2020. It is the 33rd-largest state by area and ranks ninth among all states in population density. The southeastern Delaware Valley metropolitan area comprises and surrounds Philadelphia, the state's largest and the nation's sixth-most populous city. Another 2.37 million people reside in Greater Pittsburgh in the southwest, centered on Pittsburgh, the state's second-largest and Western Pennsylvania's largest city. The state's subsequent five m ...

2006 Establishments In Pennsylvania

6 (six) is the natural number following 5 and preceding 7. It is a composite number and the smallest perfect number.

In mathematics

Six is the smallest positive integer that is neither a square number nor a prime number; it is the second-smallest composite number, after 4. Its proper divisors are 1, 2, and 3. Since 6 equals the sum of its proper divisors, it is a perfect number; 6 is the smallest of the perfect numbers. It is also the smallest Granville number, or 𝒮-perfect number.

As a perfect number:

* 6 is related to the Mersenne prime 3, since 2¹ × (2² − 1) = 6. (The next perfect number is 28.)
* 6 is the only even perfect number that is not the sum of successive odd cubes.
* 6 is the root of the 6-aliquot tree, and is itself the aliquot sum of only one other number: the square number 25.

Six is the only number that is both the sum and the product of three consecutive positive numbers (1 + 2 + 3 = 1 × 2 × 3 = 6). Unrelated to 6's being a perfect number, a Golomb ruler of length 6 is a "perfect ruler". Six is a con ...
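The divisor arithmetic above is easy to check mechanically. The following is a minimal Python sketch; the helper names `aliquot_sum` and `is_perfect` are illustrative, not taken from any library. It computes the aliquot sum (sum of proper divisors) by trial division up to √n, confirms that 6 and 28 are the only perfect numbers below 30, shows that 25 maps to 6 in the aliquot sequence, and evaluates the Euclid-Euler form 2^(p−1)(2^p − 1) for the Mersenne prime 3 (p = 2).

```python
def aliquot_sum(n: int) -> int:
    """Sum of the proper divisors of n (every positive divisor except n itself)."""
    if n < 2:
        return 0
    total = 1  # 1 divides every n >= 2
    d = 2
    while d * d <= n:
        if n % d == 0:
            total += d
            other = n // d
            if other != d:  # avoid counting a square root twice
                total += other
        d += 1
    return total


def is_perfect(n: int) -> bool:
    """A perfect number equals the sum of its proper divisors."""
    return n > 1 and aliquot_sum(n) == n


print(aliquot_sum(6))                                # 6, so 6 is perfect
print(aliquot_sum(25))                               # 6: proper divisors of 25 are 1 and 5
print([n for n in range(1, 30) if is_perfect(n)])    # [6, 28]
print(2 ** (2 - 1) * (2 ** 2 - 1))                   # 6, from the Mersenne prime 3
```

Trial division is fine for numbers this small; it is not meant as an efficient search for large perfect numbers.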

Cyberinfrastructure

United States federal research funders use the term cyberinfrastructure to describe research environments that support advanced data acquisition, data storage, data management, data integration, data mining, data visualization, and other computing and information processing services distributed over the Internet beyond the scope of a single institution. In scientific usage, cyberinfrastructure is a technological and sociological solution to the problem of efficiently connecting laboratories, data, computers, and people with the goal of enabling derivation of novel scientific theories and knowledge.

Origin

The term National Information Infrastructure had been popularized by Al Gore in the 1990s. This use of the term "cyberinfrastructure" evolved from the same thinking that produced Presidential Decision Directive NSC-63 on Protecting America's Critical Infrastructures (PDD-63). PDD-63 focuses on the security and vulnerability of the nation's "cyber-based information systems" as well ...

E-Science

E-Science or eScience is computationally intensive science that is carried out in highly distributed network environments, or science that uses immense data sets that require grid computing; the term sometimes includes technologies that enable distributed collaboration, such as the Access Grid. The term was coined by John Taylor, the Director General of the United Kingdom's Office of Science and Technology, in 1999 and was used to describe a large funding initiative starting in November 2000. E-science has since been interpreted more broadly as "the application of computer technology to the undertaking of modern scientific investigation, including the preparation, experimentation, data collection, results dissemination, and long-term storage and accessibility of all materials generated through the scientific process. These may include data modeling and analysis, electronic/digitized laboratory notebooks, raw and fitted data sets, manuscript production and draft versions, pre-p ...

Supercomputer Sites

A supercomputer is a computer with a high level of performance compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2017, there have existed supercomputers that can perform over 10¹⁷ FLOPS (a hundred quadrillion FLOPS, 100 petaFLOPS or 100 PFLOPS). For comparison, a desktop computer has performance in the range of hundreds of gigaFLOPS (10¹¹) to tens of teraFLOPS (10¹³). Since November 2017, all of the world's 500 fastest supercomputers have run Linux-based operating systems. Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful, and technologically superior exascale supercomputers. Supercomputers play an important role in the field of computational science, and are used for a wide range of computationally intens ...
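The powers of ten above map onto decimal SI prefixes (giga = 10⁹, tera = 10¹², peta = 10¹⁵, exa = 10¹⁸). As a small illustration, here is a hypothetical Python helper, `format_flops`, that renders a raw FLOPS figure with the largest prefix that fits and compares the 100 PFLOPS class of machine with a 10 TFLOPS desktop. The prefix table is an assumption limited to the prefixes mentioned here plus mega and kilo.

```python
# Decimal SI prefixes, largest first (illustrative table, not exhaustive).
PREFIXES = [
    (10**18, "exa"),
    (10**15, "peta"),
    (10**12, "tera"),
    (10**9, "giga"),
    (10**6, "mega"),
    (10**3, "kilo"),
]


def format_flops(flops: float) -> str:
    """Render a FLOPS figure using the largest decimal prefix that fits."""
    for scale, name in PREFIXES:
        if flops >= scale:
            return f"{flops / scale:g} {name}FLOPS"
    return f"{flops:g} FLOPS"


print(format_flops(1e17))  # 100 petaFLOPS
print(format_flops(1e11))  # 100 gigaFLOPS
print(format_flops(1e13))  # 10 teraFLOPS
print(1e17 / 1e13)         # 10000.0: a 100 PFLOPS system vs. a 10 TFLOPS desktop
```

Peak FLOPS figures like these are theoretical rates; they say nothing about how efficiently a given application uses the machine.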

Molecular Dynamics

Molecular dynamics (MD) is a computer simulation method for analyzing the physical movements of atoms and molecules. The atoms and molecules are allowed to interact for a fixed period of time, giving a view of the dynamic "evolution" of the system. In the most common version, the trajectories of atoms and molecules are determined by numerically solving Newton's equations of motion for a system of interacting particles, where forces between the particles and their potential energies are often calculated using interatomic potentials or molecular mechanical force fields. The method is applied mostly in chemical physics, materials science, and biophysics. Because molecular systems typically consist of a vast number of particles, it is impossible to determine the properties of such complex systems analytically; MD simulation circumvents this problem by using numerical methods. However, long MD simulations are mathematically ill-conditioned, generating cumulative errors in ...
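The text does not name a specific integrator, so the following is only a minimal sketch under stated assumptions. It uses velocity Verlet (one common choice for MD) and a Lennard-Jones pair potential in reduced units (ε = σ = m = 1), with just two particles on a line so the loop stays readable. Each step computes forces from the potential and advances positions and velocities according to Newton's equations. All function names are illustrative.

```python
from typing import Tuple


def lj_potential(r: float, epsilon: float = 1.0, sigma: float = 1.0) -> float:
    """Lennard-Jones pair energy V(r) = 4*eps*((sigma/r)**12 - (sigma/r)**6)."""
    sr6 = (sigma / r) ** 6
    return 4.0 * epsilon * (sr6 * sr6 - sr6)


def lj_force(r: float, epsilon: float = 1.0, sigma: float = 1.0) -> float:
    """-dV/dr: positive means the pair repels, negative means it attracts."""
    sr6 = (sigma / r) ** 6
    return 24.0 * epsilon * (2.0 * sr6 * sr6 - sr6) / r


def pair_forces(x1: float, x2: float) -> Tuple[float, float]:
    """Equal and opposite forces on two particles on a line (assumes x1 < x2)."""
    f = lj_force(x2 - x1)
    return -f, f


def velocity_verlet(x1, x2, v1, v2, dt=0.001, steps=5000, mass=1.0):
    """Integrate Newton's equations with the velocity Verlet scheme."""
    f1, f2 = pair_forces(x1, x2)
    for _ in range(steps):
        # Half-step velocity update ("kick") with the current forces.
        v1 += 0.5 * dt * f1 / mass
        v2 += 0.5 * dt * f2 / mass
        # Full-step position update ("drift").
        x1 += dt * v1
        x2 += dt * v2
        # Forces at the new positions, then finish the velocity update.
        f1, f2 = pair_forces(x1, x2)
        v1 += 0.5 * dt * f1 / mass
        v2 += 0.5 * dt * f2 / mass
    return x1, x2, v1, v2


def total_energy(x1, x2, v1, v2, mass=1.0):
    """Kinetic plus potential energy of the pair."""
    return 0.5 * mass * (v1 * v1 + v2 * v2) + lj_potential(x2 - x1)


start = (0.0, 1.5, 0.0, 0.0)  # at rest, beyond the potential minimum at 2**(1/6)
end = velocity_verlet(*start)
print(total_energy(*start), total_energy(*end))  # should agree closely
```

Velocity Verlet is time-reversible and keeps the total energy close to its initial value for a small time step, which is why it is a popular default. It does not remove the sensitivity of individual trajectories to accumulated rounding error that the paragraph above describes.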

Anton (computer)

Anton is a massively parallel supercomputer designed and built by D. E. Shaw Research in New York, first running in 2008. It is a special-purpose system for molecular dynamics (MD) simulations of proteins and other biological macromolecules. An Anton machine consists of a substantial number of application-specific integrated circuits (ASICs), interconnected by a specialized high-speed, three-dimensional torus network. Unlike earlier special-purpose systems for MD simulations, such as MDGRAPE-3 developed by RIKEN in Japan, Anton runs its computations entirely on specialized ASICs, instead of dividing the computation between specialized ASICs and general-purpose host processors. Each Anton ASIC contains two computational subsystems. Most of the calculation of electrostatic and van der Waals forces is performed by the high-throughput interaction subsystem (HTIS). This subsystem contains 32 deeply pipelined modules running at 800 MHz arranged much like a systolic arr ...
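In a three-dimensional torus, each node links to six neighbours (one step in each direction along x, y, and z), and links wrap around at the edges, so the shortest route along each axis may go "the other way round". The sketch below illustrates that generic topology only; it does not model Anton's actual routing, and the 8 × 8 × 8 dimensions in the example are a hypothetical choice.

```python
from typing import List, Tuple

Coord = Tuple[int, int, int]


def torus_neighbors(node: Coord, dims: Coord) -> List[Coord]:
    """Six nearest neighbours of `node` in a 3D torus, with wraparound links.

    Assumes every dimension is at least 3, so the six neighbours are distinct.
    """
    neighbors = []
    for axis in range(3):
        for step in (-1, 1):
            coord = list(node)
            coord[axis] = (coord[axis] + step) % dims[axis]
            neighbors.append((coord[0], coord[1], coord[2]))
    return neighbors


def torus_hops(a: Coord, b: Coord, dims: Coord) -> int:
    """Minimum hop count: per axis, take the shorter way around the ring."""
    total = 0
    for ai, bi, n in zip(a, b, dims):
        d = abs(ai - bi)
        total += min(d, n - d)
    return total


dims = (8, 8, 8)  # hypothetical machine size
print(torus_neighbors((0, 0, 0), dims))
print(torus_hops((0, 0, 0), (7, 7, 7), dims))  # 3: one wraparound hop per axis
print(torus_hops((0, 0, 0), (4, 4, 4), dims))  # 12: the farthest node
```

The wraparound links are what keep the worst-case distance to half the ring length per axis, which suits the largely nearest-neighbour communication of spatially decomposed MD.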

Altix

Altix is a line of server computers and supercomputers produced by Silicon Graphics (and successor company Silicon Graphics International), based on Intel processors. It succeeded the MIPS/IRIX-based Origin 3000 servers.

History

The line was first announced on January 7, 2003, with the Altix 3000 series, based on Intel Itanium 2 processors and SGI's NUMAlink processor interconnect. At product introduction, the system supported up to 64 processors running Linux as a single system image and shipped with a Linux distribution called SGI Advanced Linux Environment, which was compatible with Red Hat Advanced Server. By August 2003, many SGI Altix customers were running Linux on 128- and 256-processor SGI Altix systems. SGI officially announced 256-processor support within a single system image of Linux on March 10, 2004, using a 2.4-based Linux kernel. The SGI Advanced Linux Environment was eventually dropped after support using a standard, unmodified SUSE Linux Enterprise Server ...

National Science Foundation

The National Science Foundation (NSF) is an independent agency of the United States government that supports fundamental research and education in all the non-medical fields of science and engineering. Its medical counterpart is the National Institutes of Health. With an annual budget of about $8.3 billion (fiscal year 2020), the NSF funds approximately 25% of all federally supported basic research conducted by the United States' colleges and universities. In some fields, such as mathematics, computer science, economics, and the social sciences, the NSF is the major source of federal backing. The NSF's director and deputy director are appointed by the President of the United States and confirmed by the United States Senate, whereas the 24 presidentially appointed members of the National Science Board (NSB) do not require Senate confirmation. The director and deputy director are responsible for administration, planning, budgeting, and day-to-day operations of the foundation, while t ...

National Science Foundation Network

The National Science Foundation Network (NSFNET) was a program of coordinated, evolving projects sponsored by the National Science Foundation (NSF) from 1985 to 1995 to promote advanced research and education networking in the United States. The program created several nationwide backbone computer networks in support of these initiatives. Initially created to link researchers to the NSF-funded supercomputing centers, through further public funding and private industry partnerships it developed into a major part of the Internet backbone. The National Science Foundation permitted only government agencies and universities to use the network until 1989, when the first commercial Internet service provider emerged. By 1991, the NSF had removed access restrictions, and the commercial ISP business grew rapidly.

History

Following the deployment of the Computer Science Network (CSNET), a network that provided Internet services to academic computer science departments, in 1981, the U.S. National ...

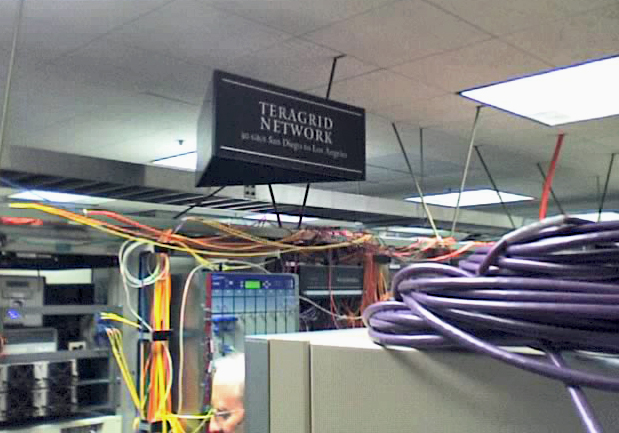

Extreme Science And Engineering Discovery Environment

TeraGrid was an e-Science grid computing infrastructure combining resources at eleven partner sites. The project started in 2001 and operated from 2004 through 2011. The TeraGrid integrated high-performance computers, data resources and tools, and experimental facilities. Resources included more than one petaflops of computing capability and more than 30 petabytes of online and archival data storage, with rapid access and retrieval over high-performance computer network connections. Researchers could also access more than 100 discipline-specific databases. TeraGrid was coordinated through the Grid Infrastructure Group (GIG) at the University of Chicago, working in partnership with the resource provider sites in the United States.

History

The US National Science Foundation (NSF), through program director Richard L. Hilderbrandt, issued a solicitation asking for a "distributed terascale facility". The TeraGrid project was launched in August 2001 with $53 million in funding to four sites: ...