Parse Tree

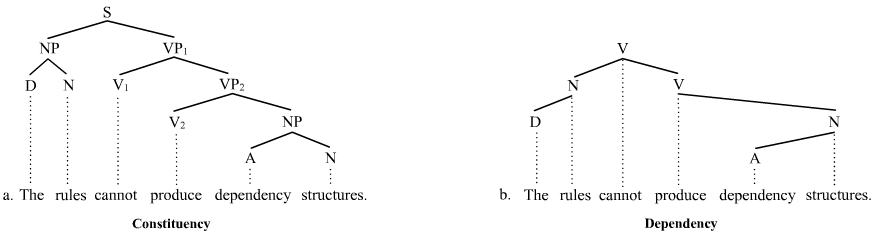

A parse tree (also called a parsing tree, derivation tree, or concrete syntax tree) is an ordered, rooted tree that represents the syntactic structure of a string according to some context-free grammar. The term *parse tree* itself is used primarily in computational linguistics; in theoretical syntax, the term *syntax tree* is more common. Concrete syntax trees reflect the syntax of the input language, making them distinct from the abstract syntax trees used in computer programming. Unlike Reed-Kellogg sentence diagrams used for teaching grammar, parse trees do not use distinct symbol shapes for different types of constituents. Parse trees are usually constructed based on either the constituency relation of constituency grammars (phrase structure grammars) or the dependency relation of dependency grammars. Parse trees may be generated for sentences in natural languages (see natural language processing), as well as during processing of computer languages, such as programming languages. ...
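As a minimal sketch of the "ordered, rooted tree" described above, the following Python represents a constituency parse tree as nested nodes. The `Node` class, the helper names, and the example sentence with its category labels (`S`, `NP`, `VP`, `N`, `V`) are illustrative assumptions, not taken from the source.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """One node of an ordered, rooted tree (hypothetical helper class)."""
    label: str
    children: List["Node"] = field(default_factory=list)

    def is_leaf(self) -> bool:
        return not self.children


def leaves(node: Node) -> List[str]:
    """Return the leaf labels from left to right (the words of the string)."""
    if node.is_leaf():
        return [node.label]
    result: List[str] = []
    for child in node.children:
        result.extend(leaves(child))
    return result


def bracketed(node: Node) -> str:
    """Render the tree in labeled-bracket notation."""
    if node.is_leaf():
        return node.label
    inner = " ".join(bracketed(child) for child in node.children)
    return f"[{node.label} {inner}]"


# Assumed example sentence and analysis, chosen only for illustration.
tree = Node("S", [
    Node("NP", [Node("N", [Node("Frank")])]),
    Node("VP", [
        Node("V", [Node("likes")]),
        Node("NP", [Node("N", [Node("cake")])]),
    ]),
])

print(leaves(tree))
print(bracketed(tree))
```

Because the children of each node are kept in a list, their left-to-right order is preserved, which is what makes the tree "ordered".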

Phrase Structure Rules

Phrase structure rules are a type of rewrite rule used to describe a given language's syntax and are closely associated with the early stages of transformational grammar, proposed by Noam Chomsky in 1957. They are used to break down a natural language sentence into its constituent parts, also known as syntactic categories, including both lexical categories (parts of speech) and phrasal categories. A grammar that uses phrase structure rules is a type of phrase structure grammar. Phrase structure rules as they are commonly employed operate according to the constituency relation, and a grammar that employs phrase structure rules is therefore a *constituency grammar*; as such, it stands in contrast to *dependency grammars*, which are based on the dependency relation.

Definition and examples

Phrase structure rules are usually of the following form:

$$A \to B \quad C$$

meaning that the constituent $A$ is separated into the two subconstituents $B$ and $C$. Some examples for English are ...
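A rule of the form A → B C can be read as an instruction to replace A with the sequence B C. The sketch below applies such rules to the leftmost rewritable symbol until nothing more can be rewritten. The three rules in `RULES` and the function names are hypothetical, chosen only to illustrate the mechanism; they are not the English examples elided in the source.

```python
# Hypothetical phrase structure rules, each of the form A -> B C.
RULES = {
    "S": ["NP", "VP"],
    "NP": ["Det", "N"],
    "VP": ["V", "NP"],
}


def rewrite_once(form, rules):
    """Rewrite the leftmost symbol that has a rule; return None if none does."""
    for i, symbol in enumerate(form):
        if symbol in rules:
            return form[:i] + rules[symbol] + form[i + 1:]
    return None


def derive(start, rules):
    """Collect every intermediate form of a leftmost derivation.

    Terminates only because these example rules are not recursive.
    """
    steps = [[start]]
    while True:
        next_form = rewrite_once(steps[-1], rules)
        if next_form is None:
            return steps
        steps.append(next_form)


for step in derive("S", RULES):
    print(" ".join(step))
```

Each printed line is one step of the derivation, starting from the single symbol `S` and ending with a sequence of lexical categories.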

Predicate (grammar)

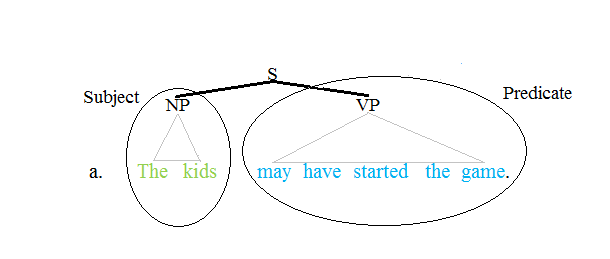

The term predicate is used in one of two ways in linguistics and its subfields. The first defines a predicate as everything in a standard declarative sentence except the subject, and the other views it as just the main content verb or associated predicative expression of a clause. Thus, by the first definition the predicate of the sentence *Frank likes cake* is *likes cake*. By the second definition, the predicate of the same sentence is just the content verb *likes*, whereby *Frank* and *cake* are the arguments of this predicate. Differences between these two definitions can lead to confusion.

Syntax

Traditional grammar

The notion of a predicate in traditional grammar traces back to Aristotelian logic. A predicate is seen as a property that a subject has or is characterized by. A predicate is therefore an expression that can be *true of* something. Thus, the expression "is moving" is true of anything that is moving. This classical understanding of predicate ...

Verb Phrase

In linguistics, a verb phrase (VP) is a syntactic unit composed of a verb and its arguments except the subject of an independent clause or coordinate clause. Thus, in the sentence *A fat man quickly put the money into the box*, the words *quickly put the money into the box* constitute a verb phrase; it consists of the verb *put* and its arguments, but not the subject *a fat man*. A verb phrase is similar to what is considered a *predicate* in traditional grammars. Verb phrases are generally divided into two types: finite, in which the head of the phrase is a finite verb; and nonfinite, in which the head is a nonfinite verb, such as an infinitive, participle or gerund. Phrase structure grammars acknowledge both types, but dependency grammars treat the subject as just another verbal dependent, and they do not recognize the finite verbal phrase constituent. Understanding verb phrase analysis depends on knowing which theory applies in context. In phrase structure grammar ...

Object (grammar)

In linguistics, an object is any of several types of arguments. In subject-prominent, nominative-accusative languages such as English, a transitive verb typically distinguishes between its subject and any of its objects, which can include but are not limited to direct objects, indirect objects, and arguments of adpositions (prepositions or postpositions); the latter are more accurately termed *oblique arguments*, thus including other arguments not covered by core grammatical roles, such as those governed by case morphology (as in languages such as Latin) or relational nouns (as is typical for members of the Mesoamerican Linguistic Area). In ergative-absolutive languages, for example most Australian Aboriginal languages, the term "subject" is ambiguous, and thus the term "agent" is often used instead to contrast with "object", such that basic word order is often spoken of in terms such as Agent-Object-Verb (AOV) instead of Subject-Object-Verb (SOV). Topic-prominent l ...

Subject (grammar)

The subject in a simple English sentence such as *John runs*, *John is a teacher*, or *John drives a car*, is the person or thing about whom the statement is made, in this case *John*. Traditionally the subject is the word or phrase which controls the verb in the clause, that is to say with which the verb agrees (*John is* but *John and Mary are*). If there is no verb, as in *John what an idiot!*, or if the verb has a different subject, as in *John I can't stand him!*, then "John" is not considered to be the grammatical subject, but can be described as the *topic* of the sentence. While these definitions apply to simple English sentences, defining the subject is more difficult in more complex sentences and in languages other than English. For example, in the sentence *It is difficult to learn French*, the subject seems to be the word *it*, and yet arguably the real subject (the thing that is difficult) is *to learn French*. A sentence such as *It was* ...

Noun Phrase

In linguistics, a noun phrase, or nominal (phrase), is a phrase that has a noun or pronoun as its head or performs the same grammatical function as a noun. Noun phrases are very common cross-linguistically, and they may be the most frequently occurring phrase type. Noun phrases often function as verb subjects and objects, as predicative expressions and as the complements of prepositions. Noun phrases can be embedded inside each other; for instance, the noun phrase *some of his constituents* contains the shorter noun phrase *his constituents*. In some more modern theories of grammar, noun phrases with determiners are analyzed as having the determiner as the head of the phrase; see for instance Chomsky (1995) and Hudson (1990).

Identification

Some examples of noun phrases are underlined in the sentences below. The head noun appears in bold.

- This election-year's politics are annoying for many people.
- Almost every sentence contains at least one noun phrase.
- Current ...

Parse Tree 1

Parsing, syntax analysis, or syntactic analysis is the process of analyzing a string of symbols, either in natural language, computer languages or data structures, conforming to the rules of a formal grammar. The term *parsing* comes from Latin *pars* (*orationis*), meaning part (of speech). The term has slightly different meanings in different branches of linguistics and computer science. Traditional sentence parsing is often performed as a method of understanding the exact meaning of a sentence or word, sometimes with the aid of devices such as sentence diagrams. It usually emphasizes the importance of grammatical divisions such as subject and predicate. Within computational linguistics the term is used to refer to the formal analysis by a computer of a sentence or other string of words into its constituents, resulting in a parse tree showing their syntactic relation to each other, which may also contain semantic and other information (p-values). Some parsing ...
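To make the idea of analyzing a string into constituents concrete, here is a small recursive-descent parser, offered as one possible sketch rather than as the method any particular system uses. The toy grammar (S → NP VP, NP → Det N, VP → V NP), the `LEXICON` table, and all function names are assumptions introduced for illustration. The parse tree is returned as nested tuples.

```python
# Hypothetical lexicon mapping each word to a single lexical category.
LEXICON = {"the": "Det", "a": "Det", "dog": "N", "cat": "N", "sees": "V"}


def expect(tokens, pos, category):
    """Consume one word of the given category or fail."""
    if pos >= len(tokens) or LEXICON.get(tokens[pos]) != category:
        raise ValueError(f"expected {category} at position {pos}")
    return (category, tokens[pos]), pos + 1


def parse_np(tokens, pos):
    """NP -> Det N"""
    det, pos = expect(tokens, pos, "Det")
    noun, pos = expect(tokens, pos, "N")
    return ("NP", det, noun), pos


def parse_vp(tokens, pos):
    """VP -> V NP"""
    verb, pos = expect(tokens, pos, "V")
    obj, pos = parse_np(tokens, pos)
    return ("VP", verb, obj), pos


def parse_s(tokens, pos):
    """S -> NP VP"""
    subject, pos = parse_np(tokens, pos)
    predicate, pos = parse_vp(tokens, pos)
    return ("S", subject, predicate), pos


def parse(tokens):
    """Parse a whole token list; reject trailing words."""
    tree, pos = parse_s(tokens, 0)
    if pos != len(tokens):
        raise ValueError(f"unexpected word at position {pos}")
    return tree


print(parse("the dog sees a cat".split()))
```

Each grammar rule becomes one function, so the call structure of a successful parse mirrors the shape of the resulting tree.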

English Language

English is a West Germanic language of the Indo-European language family, with its earliest forms spoken by the inhabitants of early medieval England. It is named after the Angles, one of the ancient Germanic peoples that migrated to the island of Great Britain. Existing on a dialect continuum with Scots, and then most closely related to the Low Saxon and Frisian languages, English is genealogically West Germanic. However, its vocabulary is also distinctively influenced by dialects of France (about 29% of Modern English words) and Latin (also about 29%), plus some grammar and a small amount of core vocabulary influenced by Old Norse (a North Germanic language). Speakers of English are called Anglophones. The earliest forms of English, collectively known as Old English, evolved from a group of West Germanic (Ingvaeonic) dialects brought to Great Britain by Anglo-Saxon settlers in the 5th century and further mutated by Norse-speaking Viking settlers starting in ...

Terminal Symbol

In computer science, terminal and nonterminal symbols are the lexical elements used in specifying the production rules constituting a formal grammar. *Terminal symbols* are the elementary symbols of the language defined by a formal grammar. *Nonterminal symbols* (or *syntactic variables*) are replaced by groups of terminal symbols according to the production rules. The terminals and nonterminals of a particular grammar are two disjoint sets.

Terminal symbols

Terminal symbols are literal symbols that may appear in the outputs of the production rules of a formal grammar and which cannot be changed using the rules of the grammar. Applying the rules recursively to a source string of symbols will usually terminate in a final output string consisting only of terminal symbols. Consider a grammar defined by two rules, using pictorial marks interacting with each other:

1. The symbol ר can become ди
2. The symbol ר can become д

Here д is a terminal symbol because no rule ...
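The two-rule grammar above can be written down directly, and the split into terminals and nonterminals can be computed from it. This is only a sketch: it assumes that a symbol counts as nonterminal exactly when it appears on the left-hand side of some rule, which matches the statement that terminals "cannot be changed using the rules of the grammar". The names `RULES` and `classify` are hypothetical.

```python
# The two rules from the text, as (left-hand symbol, replacement string) pairs.
RULES = [
    ("ר", "ди"),
    ("ר", "д"),
]


def classify(rules):
    """Split the grammar's symbols into (terminals, nonterminals).

    Assumption: a symbol is nonterminal if and only if some rule rewrites it.
    """
    nonterminals = {lhs for lhs, _ in rules}
    seen = set(nonterminals)
    for _, rhs in rules:
        seen.update(rhs)  # add every individual symbol of the replacement
    terminals = seen - nonterminals
    return terminals, nonterminals


TERMINALS, NONTERMINALS = classify(RULES)
print("terminals:", sorted(TERMINALS))
print("nonterminals:", sorted(NONTERMINALS))
```

Under this assumption the two sets are disjoint by construction, in line with the statement that the terminals and nonterminals of a grammar are two disjoint sets.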

Leaf Node

In computer science, a tree is a widely used abstract data type that represents a hierarchical tree structure with a set of connected nodes. Each node in the tree can be connected to many children (depending on the type of tree), but must be connected to exactly one parent, except for the *root* node, which has no parent. These constraints mean there are no cycles or "loops" (no node can be its own ancestor), and also that each child can be treated like the root node of its own subtree, making recursion a useful technique for tree traversal. In contrast to linear data structures, many trees cannot be represented by relationships between neighboring nodes in a single straight line. Binary trees are a commonly used type, which constrains the number of children for each parent to at most two. When the order of the children is specified, this data structure corresponds to an ordered tree in graph theory. A value or pointer to other data may be associated with every node in the tr ...
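The parent constraint and the recursive view of subtrees can be sketched as follows. The tree is given as a mapping from each node to its single parent (`None` for the root), so "exactly one parent" holds by construction. The node names and helper functions are made up for illustration, and the sketch does not check for cycles among non-root nodes.

```python
def build_children(parent_of):
    """Turn a child -> parent mapping into (root, parent -> ordered children)."""
    children = {}
    roots = []
    for node, parent in parent_of.items():
        children.setdefault(node, [])
        if parent is None:
            roots.append(node)
        else:
            children.setdefault(parent, []).append(node)
    if len(roots) != 1:
        raise ValueError("a tree must have exactly one root")
    return roots[0], children


def preorder(node, children):
    """Visit a node, then recurse into each child as the root of its subtree."""
    order = [node]
    for child in children[node]:
        order.extend(preorder(child, children))
    return order


def leaf_nodes(node, children):
    """Leaf nodes are the nodes with no children."""
    return [n for n in preorder(node, children) if not children[n]]


# Hypothetical five-node tree: a is the root, b and c are its children.
PARENT_OF = {"a": None, "b": "a", "c": "a", "d": "b", "e": "b"}
root, children = build_children(PARENT_OF)

print(preorder(root, children))
print(leaf_nodes(root, children))
```

The traversal never needs a visited set, which relies on the absence of cycles described above.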

Nonterminal

In computer science, terminal and nonterminal symbols are the lexical elements used in specifying the production rules constituting a formal grammar. *Terminal symbols* are the elementary symbols of the language defined by a formal grammar. *Nonterminal symbols* (or *syntactic variables*) are replaced by groups of terminal symbols according to the production rules. The terminals and nonterminals of a particular grammar are two disjoint sets.

Terminal symbols

Terminal symbols are literal symbols that may appear in the outputs of the production rules of a formal grammar and which cannot be changed using the rules of the grammar. Applying the rules recursively to a source string of symbols will usually terminate in a final output string consisting only of terminal symbols. Consider a grammar defined by two rules, using pictorial marks interacting with each other:

1. The symbol ר can become ди
2. The symbol ר can become д

Here д is a terminal symbol because no rule ...