
pSeven

pSeven is a DSE (Design Space Exploration) software platform developed by DATADVANCE that extends design, simulation, and analysis capabilities and helps users make design decisions faster. It integrates with third-party CAD and CAE software tools and provides multi-objective and robust optimization algorithms, data analysis, and uncertainty quantification tools. pSeven belongs to the PIDO (Process Integration and Design Optimization) category of software. Its design space exploration functionality is based on the mathematical algorithms of the pSeven Core Python library (formerly known as MACROS), also developed by DATADVANCE. The SmartSelection technology implemented in pSeven automatically selects the most efficient method for a given data or optimization problem, making advanced mathematics usable by a wide range of experts. History: The foundation for the pSeven Core library, on which pSeven is built, was laid in 2003, when researchers from the Institute for Information Transmission Problems ...

DATADVANCE

DATADVANCE is a software development company that evolved out of a collaborative research program between Airbus and the Institute for Information Transmission Problems of the Russian Academy of Sciences (IITP RAS). Its product pSeven Core, embedded in the company's main product pSeven, provides proprietary, state-of-the-art algorithms for dimension reduction, design of experiments, sensitivity analysis, meta-modeling, and uncertainty quantification, as well as modern single-objective, multi-objective, and robust optimization strategies. Official website: http://www.datadvance.net/

PIDO

PIDO stands for Process Integration and Design Optimization. Process integration is needed because multi-domain system design involves many software tools: control software is developed in one toolchain, the mechanical properties of a system are modeled in another, and structural analysis may be done with yet other tools. To optimize a design, these tools must be able to exchange data. The end goal, design optimization, is reached by defining one or more cost functions and the design parameters that may be varied to optimize the design with respect to those cost functions.

See also:
* ModelCenter from Phoenix Integration, Inc.
* modeFRONTIER from Esteco
* Optimus from Noesis Solutions
* pSeven from DATADVANCE
* optiSLang from A ...
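
As an illustration of the process-integration and cost-function ideas above, here is a minimal Python sketch. The two "tools" (`structural_tool`, `control_tool`), their formulas, the stress limit, and the exhaustive-search optimizer are all hypothetical stand-ins, not the interface of any PIDO product; in practice the tools would be external CAD/CAE programs driven by the integration layer.

```python
from itertools import product


def structural_tool(thickness_mm: float) -> dict:
    """Hypothetical structural solver: mass and stress from wall thickness."""
    mass_kg = 2.0 * thickness_mm
    stress_mpa = 400.0 / thickness_mm
    return {"mass_kg": mass_kg, "stress_mpa": stress_mpa}


def control_tool(mass_kg: float, gain: float) -> float:
    """Hypothetical control model: settling time grows with mass, and with large gains."""
    return mass_kg / gain + 0.5 * gain


def cost(thickness_mm: float, gain: float) -> float:
    """Integrated process: the structural output is passed to the control tool."""
    s = structural_tool(thickness_mm)
    settling_s = control_tool(s["mass_kg"], gain)
    # Assumed design constraint, enforced with a large penalty.
    penalty = 1000.0 if s["stress_mpa"] > 100.0 else 0.0
    return s["mass_kg"] + settling_s + penalty


def optimize(thicknesses, gains):
    """Exhaustive search over the design parameters (a deliberately simple optimizer)."""
    return min(product(thicknesses, gains), key=lambda p: cost(*p))
```

With these made-up models, `optimize([2, 4, 6, 8], [1, 2, 4])` returns `(4, 4)`: thinner walls violate the stress limit, and thicker ones only add mass.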

Random Sequence

The concept of a random sequence is essential in probability theory and statistics. The concept generally relies on the notion of a sequence of random variables, and many statistical discussions begin with the words "let X1, ..., Xn be independent random variables...". Yet as D. H. Lehmer stated in 1951: "A random sequence is a vague notion... in which each term is unpredictable to the uninitiated and whose digits pass a certain number of tests traditional with statisticians". Axiomatic probability theory deliberately avoids a definition of a random sequence. Traditional probability theory does not state whether a specific sequence is random, but generally proceeds to discuss the properties of random variables and stochastic sequences assuming some definition of randomness. The Bourbaki school considered the statement "let us consider a random sequence" an abuse of language. Early history: Émile Borel was one of the first mathematicians to formally address randomness in 190 ...
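
Lehmer's remark about sequences that "pass a certain number of tests" can be made concrete with one of the simplest such tests, the frequency (monobit) test, which checks whether ones and zeros occur about equally often. This minimal Python sketch assumes the sequence is given as a string of '0'/'1' characters and uses an assumed significance level of 0.01.

```python
import math


def monobit_p_value(bits: str) -> float:
    """Frequency (monobit) test; returns a two-sided p-value.

    Each '1' counts as +1 and each '0' as -1. For independent, unbiased bits
    the normalized sum |S_n| / sqrt(n) is approximately half-normal, so the
    p-value is erfc(s_obs / sqrt(2)).
    """
    if not bits or set(bits) - {"0", "1"}:
        raise ValueError("expected a non-empty string of '0' and '1' characters")
    n = len(bits)
    s_n = sum(1 if b == "1" else -1 for b in bits)
    s_obs = abs(s_n) / math.sqrt(n)
    return math.erfc(s_obs / math.sqrt(2))


def passes_monobit(bits: str, alpha: float = 0.01) -> bool:
    """The sequence 'passes' if its p-value is not below the significance level."""
    return monobit_p_value(bits) >= alpha
```

`passes_monobit("01" * 50)` is true (the p-value is exactly 1.0) and `passes_monobit("1" * 100)` is false, yet "0101..." is plainly not random; another test, such as a runs test, would reject it. Passing any finite battery of tests only rules out specific kinds of regularity, which is the vagueness Lehmer pointed to.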

Engineering Optimization

Engineering optimization is the subject that uses optimization techniques to achieve design goals in engineering. It is sometimes referred to as design optimization. Topics:
* structural design (including pressure vessel design and welded beam design)
* shape optimization
* topology optimization (including airfoils)
* inverse optimization (a subset of the inverse problem)
* processing planning
* product designs
* electromagnetic optimization
* space mapping
* aggressive space mapping (J.E. Rayas-Sanchez, "Power in simplicity with ASM: tracing the aggressive space mapping algorithm over two decades of development and engineering applications," IEEE Microwave Magazine, vol. 17, no. 4, pp. 64-76, April 2016)
* yield-driven design
* opt ...

Predictive Analytics

Predictive analytics encompasses a variety of statistical techniques from data mining, predictive modeling, and machine learning that analyze current and historical facts to make predictions about future or otherwise unknown events. In business, predictive models exploit patterns found in historical and transactional data to identify risks and opportunities. Models capture relationships among many factors to allow assessment of risk or potential associated with a particular set of conditions, guiding decision-making for candidate transactions. The defining functional effect of these technical approaches is that predictive analytics provides a predictive score (probability) for each individual (customer, employee, healthcare patient, product SKU, vehicle, component, machine, or other organizational unit) in order to determine, inform, or influence organizational processes that pertain across large numbers of individuals, such as in marketing, credit risk assessment, fraud det ...
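
A predictive score of the kind described above is often produced by a classifier that outputs probabilities. The sketch below fits a logistic regression (one common choice, assumed here; the text does not name a specific model) by batch gradient descent on a tiny, made-up credit-risk dataset. The feature names, data, learning rate, and epoch count are all hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic(xs, ys, lr=0.1, epochs=2000):
    """Fit weights and bias by batch gradient descent on the log-loss."""
    n_features = len(xs[0])
    w = [0.0] * n_features
    b = 0.0
    m = len(xs)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(xs, ys):
            # Gradient of the log-loss w.r.t. the linear score is (p - y).
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for j in range(n_features):
                grad_w[j] += err * x[j]
            grad_b += err
        w = [wi - lr * g / m for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b


def predictive_score(w, b, x) -> float:
    """Estimated probability that the outcome (here: default) occurs for x."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


# Hypothetical history: [late_payments, years_as_customer] -> defaulted (1) or not (0).
X = [[0, 5], [1, 4], [0, 3], [4, 1], [5, 0], [3, 1]]
Y = [0, 0, 0, 1, 1, 1]
w, b = fit_logistic(X, Y)
```

Each new customer then receives a score between 0 and 1, which can be thresholded or ranked to decide, for example, which applications get manual review.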

Dimension Reduction

Dimensionality reduction, or dimension reduction, is the transformation of data from a high-dimensional space into a low-dimensional space so that the low-dimensional representation retains some meaningful properties of the original data, ideally close to its intrinsic dimension. Working in high-dimensional spaces can be undesirable for many reasons: raw data are often sparse as a consequence of the curse of dimensionality, and analyzing the data is usually computationally intractable. Dimensionality reduction is common in fields that deal with large numbers of observations and/or large numbers of variables, such as signal processing, speech recognition, neuroinformatics, and bioinformatics. Methods are commonly divided into linear and nonlinear approaches. Approaches can also be divided into feature selection and feature extraction. Dimensionality reduction can be used for noise reduction, data visualization, cluster analysis, or as an intermedi ...
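
Principal component analysis (PCA) is one widely used linear feature-extraction method. This pure-Python sketch finds only the first principal component, using power iteration on the sample covariance matrix; a production implementation would more typically use an SVD from a numerical library. The starting vector and iteration count are arbitrary assumptions.

```python
import math


def first_principal_component(data, iters=200):
    """Return (mean, unit direction, 1-D coordinates) for the top principal component.

    Power iteration assumes the leading eigenvalue is strictly largest and that
    the starting vector is not orthogonal to its eigenvector.
    """
    n, d = len(data), len(data[0])
    mean = [sum(row[j] for row in data) / n for j in range(d)]
    centered = [[row[j] - mean[j] for j in range(d)] for row in data]
    # Sample covariance matrix (d x d).
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    v = [1.0] * d
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            raise ValueError("constant data or start vector orthogonal to the component")
        v = [x / norm for x in w]
    # Low-dimensional representation: projection of each centered point onto v.
    coords = [sum(r[j] * v[j] for j in range(d)) for r in centered]
    return mean, v, coords


def reconstruct(mean, v, coord):
    """Map a 1-D coordinate back into the original space."""
    return [m + coord * vj for m, vj in zip(mean, v)]
```

For points lying exactly on the line y = 2x, the recovered direction is proportional to (1, 2) and every point is reconstructed from a single coordinate: the 2-D data has intrinsic dimension 1.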

Uncertainty Quantification

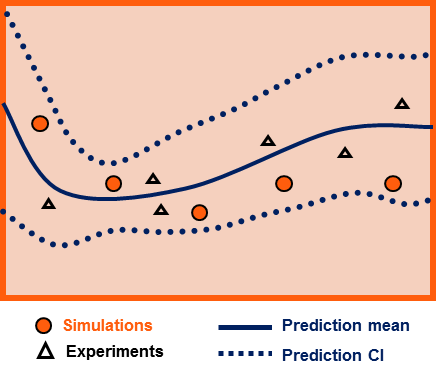

Uncertainty quantification (UQ) is the science of quantitative characterization and reduction of uncertainties in both computational and real-world applications. It tries to determine how likely certain outcomes are if some aspects of the system are not exactly known. An example would be to predict the acceleration of a human body in a head-on crash with another car: even if the speed were exactly known, small differences in the manufacturing of individual cars, how tightly every bolt has been tightened, etc., will lead to different results that can only be predicted in a statistical sense. Many problems in the natural sciences and engineering are also rife with sources of uncertainty. Computer experiments on computer simulations are the most common approach to study problems in uncertainty quantification. Sources: Uncertainty can enter mathematical models and experimental measurements in various contexts. One way to categorize the sources of uncertainty is to consider: Parame ...
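
The crash example above can be turned into a small Monte Carlo computer experiment. In this Python sketch the physics is deliberately crude and entirely assumed: a constant-deceleration model a = v^2 / (2d), with the impact speed v known exactly and only the crush distance d uncertain. The normal distribution for d (mean 0.6 m, standard deviation 0.05 m) is a hypothetical stand-in for manufacturing variation.

```python
import random
import statistics


def crash_deceleration(speed_mps: float, crush_m: float) -> float:
    """Hypothetical model: constant deceleration a = v^2 / (2 d), in units of g."""
    return speed_mps ** 2 / (2.0 * crush_m) / 9.81


def monte_carlo(speed_mps: float, n: int = 10_000, seed: int = 0) -> dict:
    """Propagate uncertainty in the crush distance to the output by sampling."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        # Assumed input uncertainty, clipped to stay physically positive.
        crush = max(0.3, rng.gauss(0.6, 0.05))
        samples.append(crash_deceleration(speed_mps, crush))
    samples.sort()
    return {
        "mean_g": statistics.fmean(samples),
        "stdev_g": statistics.stdev(samples),
        "p95_g": samples[int(0.95 * (n - 1))],
    }
```

At 15 m/s the nominal deceleration is about 19.1 g, but the sampled outputs form a distribution, so the result is reported as a mean, a spread, and a percentile rather than as a single number.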

Dependence Analysis

In compiler theory, dependence analysis produces execution-order constraints between statements/instructions. Broadly speaking, a statement S2 depends on S1 if S1 must be executed before S2. There are two classes of dependencies: control dependencies and data dependencies. Dependence analysis determines whether it is safe to reorder or parallelize statements.

Control dependencies: Control dependency is a situation in which a program instruction executes if the previous instruction evaluates in a way that allows its execution. A statement S2 is control dependent on S1 (written $S_1\ \delta^c\ S_2$) if and only if S2's execution is conditionally guarded by S1. S2 is control dependent on S1 if and only if $S_1 \in \mathrm{PDF}(S_2)$, where $\mathrm{PDF}(S)$ is the post-dominance frontier of statement S. The following is an example of such a control dependence:

    S1     if x > 2 goto L1
    S2     y := 3
    S3 L1: z := y + 1

Here, S2 only runs if ...
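
Data dependencies, the second class named above, are commonly classified by how two statements access the same variable: flow (read after write), anti (write after read), and output (write after write). This minimal Python sketch assumes each statement's read and write sets are already known, ignores control dependencies and aliasing, and uses a hypothetical `Stmt` type. Applied to the S2/S3 pair from the example, it reports a flow dependence, because S3 reads the y that S2 writes.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Stmt:
    """Hypothetical statement summary: the variables it reads and writes."""
    name: str
    reads: frozenset = field(default_factory=frozenset)
    writes: frozenset = field(default_factory=frozenset)


def data_dependences(s1: Stmt, s2: Stmt) -> set:
    """Data dependences of s2 on s1, assuming s1 executes first."""
    deps = set()
    if s1.writes & s2.reads:
        deps.add("flow")    # read after write (true dependence)
    if s1.reads & s2.writes:
        deps.add("anti")    # write after read
    if s1.writes & s2.writes:
        deps.add("output")  # write after write
    return deps


def can_reorder(s1: Stmt, s2: Stmt) -> bool:
    """Swapping is safe only without data dependences (control dependence ignored)."""
    return not data_dependences(s1, s2)


s2 = Stmt("S2", writes=frozenset({"y"}))                          # y := 3
s3 = Stmt("S3", reads=frozenset({"y"}), writes=frozenset({"z"}))  # z := y + 1
```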

Sensitivity Analysis

Sensitivity analysis is the study of how the uncertainty in the output of a mathematical model or system (numerical or otherwise) can be divided and allocated to different sources of uncertainty in its inputs. A related practice is uncertainty analysis, which has a greater focus on uncertainty quantification and propagation of uncertainty; ideally, uncertainty and sensitivity analysis should be run in tandem. The process of recalculating outcomes under alternative assumptions to determine the impact of a variable under sensitivity analysis can be useful for a range of purposes, including:
* Testing the robustness of the results of a model or system in the presence of uncertainty.
* Increased understanding of the relationships between input and output variables in a system or model.
* Uncertainty reduction, through the identification of model inputs that cause significant uncertainty in the output and should therefore be the focus of attention in order to increase robustness (perh ...
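
The simplest form of "recalculating outcomes under alternative assumptions" is a local one-at-a-time (OAT) analysis: perturb each input slightly while holding the others at a baseline, and compare the relative change in the output. This Python sketch uses a hypothetical model, baseline, and step size. Because it varies one input at a time around a single point, it misses interactions between inputs, which global variance-based methods (e.g., Sobol indices) are designed to capture.

```python
def oat_sensitivities(model, baseline: dict, rel_step: float = 0.01) -> dict:
    """Local one-at-a-time sensitivity of model(**inputs) around a baseline.

    Returns, per input, the normalized sensitivity (dy / y) / (dx / x),
    estimated by a forward difference. Baseline inputs and output must be nonzero.
    """
    y0 = model(**baseline)
    result = {}
    for name, x0 in baseline.items():
        perturbed = dict(baseline)
        perturbed[name] = x0 * (1.0 + rel_step)  # change this input only
        y1 = model(**perturbed)
        result[name] = ((y1 - y0) / y0) / rel_step
    return result


def toy_model(a: float, b: float, c: float) -> float:
    """Hypothetical model: quadratic in a, linear in b, nearly insensitive to c."""
    return a ** 2 * b + 0.001 * c


s = oat_sensitivities(toy_model, {"a": 2.0, "b": 3.0, "c": 1.0})
```

The resulting ranking (a, then b, then c) points to a as the input whose uncertainty most deserves attention, which is the uncertainty-reduction use listed above.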

Design Of Experiments

The design of experiments (DOE, DOX, or experimental design) is the design of any task that aims to describe and explain the variation of information under conditions that are hypothesized to reflect the variation. The term is generally associated with experiments in which the design introduces conditions that directly affect the variation, but may also refer to the design of quasi-experiments, in which natural conditions that influence the variation are selected for observation. In its simplest form, an experiment aims at predicting the outcome by introducing a change of the preconditions, which is represented by one or more independent variables, also referred to as "input variables" or "predictor variables." The change in one or more independent variables is generally hypothesized to result in a change in one or more dependent variables, also referred to as "output variables" or "response variables." The experimental design may also identify control variables that must ...
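
One classical way to choose the conditions of an experiment is a full factorial design, which runs every combination of the chosen levels of the independent (input) variables; randomizing the run order helps keep uncontrolled, time-dependent factors from being confounded with the inputs. The factor names and levels in this Python sketch are hypothetical.

```python
import random
from itertools import product


def full_factorial(levels: dict) -> list:
    """Every combination of factor levels, one dict per experimental run."""
    names = list(levels)
    return [dict(zip(names, combo))
            for combo in product(*(levels[name] for name in names))]


def randomized_run_order(design: list, seed: int = 0) -> list:
    """Shuffled copy of the design, so run order is not tied to factor levels."""
    runs = list(design)
    random.Random(seed).shuffle(runs)
    return runs


# Hypothetical factors: 2 x 2 x 2 = 8 runs.
design = full_factorial({
    "temperature_c": [150, 180],
    "pressure_bar": [1, 2],
    "catalyst": ["A", "B"],
})
```

The number of runs grows as the product of the level counts, which is why fractional factorial and space-filling designs are used when there are many factors.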