
PLAPACK

LAPACK ("Linear Algebra Package") is a standard software library for numerical linear algebra. It provides routines for solving systems of linear equations and linear least squares, eigenvalue problems, and singular value decomposition. It also includes routines to implement the associated matrix factorizations such as LU, QR, Cholesky and Schur decomposition. LAPACK was originally written in FORTRAN 77, but moved to Fortran 90 in version 3.2 (2008). The routines handle both real and complex matrices in both single and double precision. LAPACK relies on an underlying BLAS implementation to provide efficient and portable computational building blocks for its routines. LAPACK was designed as the successor to the linear equations and linear least-squares routines of LINPACK and the eigenvalue routines of EISPACK. LINPACK, written in the 1970s and 1980s, was designed to run on the then-modern vector computers with shared memory. LAPACK, in contrast, was designed to e ...
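
As a rough illustration of the kind of problem these routines address, the sketch below solves a small dense linear system by Gaussian elimination with partial pivoting, the elimination process that underlies an LU factorization. It is plain Python written for this page, not LAPACK code; the function name `solve_linear_system` and the sample data are hypothetical.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting.

    `a` is a square matrix given as a list of rows and `b` is a list.
    Illustrative sketch only; real LAPACK drivers are blocked and far
    more careful about performance and error reporting.
    """
    n = len(a)
    a = [row[:] for row in a]  # work on copies so the inputs are untouched
    b = b[:]

    for k in range(n):
        # Partial pivoting: pick the row with the largest entry in column k.
        pivot = max(range(k, n), key=lambda r: abs(a[r][k]))
        if a[pivot][k] == 0:
            raise ValueError("matrix is singular")
        a[k], a[pivot] = a[pivot], a[k]
        b[k], b[pivot] = b[pivot], b[k]

        # Eliminate column k from every row below the pivot row.
        for r in range(k + 1, n):
            factor = a[r][k] / a[k][k]
            for c in range(k, n):
                a[r][c] -= factor * a[k][c]
            b[r] -= factor * b[k]

    # Back substitution on the resulting upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(a[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (b[r] - s) / a[r][r]
    return x


# Hypothetical sample system: 2x + y = 3 and x + 3y = 5.
solution = solve_linear_system([[2.0, 1.0], [1.0, 3.0]], [3.0, 5.0])
```

In practice one would call an optimized library rather than hand-written loops; the sketch only shows the arithmetic involved.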

Complex Number

In mathematics, a complex number is an element of a number system that extends the real numbers with a specific element denoted i, called the imaginary unit and satisfying the equation i^2 = -1; every complex number can be expressed in the form a + bi, where a and b are real numbers. Because no real number satisfies the above equation, i was called an imaginary number by René Descartes. For the complex number a + bi, a is called the real part, and b is called the imaginary part. The set of complex numbers is denoted by either of the symbols \mathbb C or C. Despite the historical nomenclature, "imaginary" complex numbers have a mathematical existence as firm as that of the real numbers, and they are fundamental tools in the scientific description of the natural world. Complex numbers allow solutions to all polynomial equations, even those that have no solutions in real numbers. More precisely, the fundamental theorem of algebra asserts that every non-constant polynomial equation with real or complex coefficie ...
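
The defining property of the imaginary unit and the real and imaginary parts can be checked with Python's built-in `complex` type, which writes the imaginary unit as `1j`. The sample value `z` is an arbitrary choice for illustration.

```python
# Python spells the imaginary unit as 1j.
i = 1j
z = complex(3, -4)  # z = a + bi with a = 3 and b = -4 (arbitrary sample)

i_squared = i * i   # the defining equation: i^2 = -1
real_part = z.real  # the real part a
imag_part = z.imag  # the imaginary part b
modulus = abs(z)    # distance of z from 0 in the complex plane

# x^2 + 1 = 0 has no real solution, but both i and -i satisfy it.
roots_ok = all(x * x + 1 == 0 for x in (i, -i))
```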

BSD Licenses

BSD licenses are a family of permissive free software licenses, imposing minimal restrictions on the use and distribution of covered software. This is in contrast to copyleft licenses, which have share-alike requirements. The original BSD license was used for its namesake, the Berkeley Software Distribution (BSD), a Unix-like operating system. The original version has since been revised, and its descendants are referred to as modified BSD licenses. BSD is both a license and a class of license (generally referred to as BSD-like). The modified BSD license (in wide use today) is very similar to the license originally used for the BSD version of Unix. The BSD license is a simple license that merely requires that all code retain the BSD license notice if redistributed in source code format, or reproduce the notice if redistributed in binary format. The BSD license (unlike some other licenses, e.g. the GPL) does not require that source code be distributed at all. Terms In addition to ...

University Of Texas At Austin

The University of Texas at Austin (UT Austin, UT, or Texas) is a public research university in Austin, Texas, United States. Founded in 1883, it is the flagship institution of the University of Texas System. With 53,082 students as of fall 2023, it is also the largest institution in the system. The university is a major center for academic research, with research expenditures totaling $1.06 billion for the 2023 fiscal year. It joined the Association of American Universities in 1929. The university houses seven museums and seventeen libraries, including the Lyndon B. Johnson Presidential Library and the Blanton Museum of Art, and operates various auxiliary research facilities, such as the J. J. Pickle Research Campus and McDonald Observatory. UT Austin's athletics constitute the Texas Longhorns. The Longhorns have won four NCAA Division I National Football Championships, six NCAA Division I National Baseball Champions ...

ScaLAPACK

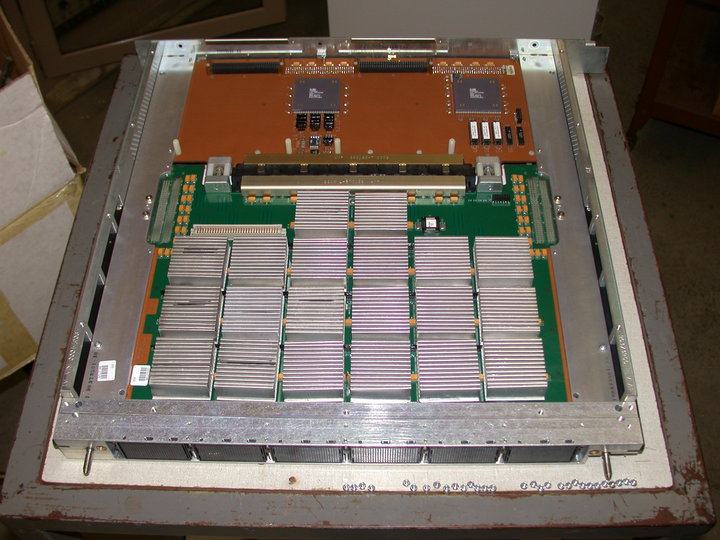

The ScaLAPACK (or Scalable LAPACK) library includes a subset of LAPACK routines redesigned for distributed memory MIMD parallel computers. It is currently written in a Single-Program-Multiple-Data style using explicit message passing for interprocessor communication. It assumes matrices are laid out in a two-dimensional block cyclic decomposition. ScaLAPACK is designed for heterogeneous computing and is portable on any computer that supports MPI or PVM. ScaLAPACK depends on PBLAS operations in the same way LAPACK depends on BLAS. As of version 2.0, the code base directly includes PBLAS and BLACS and has dropped support for PVM. After two decades of operation, a new library, SLATE, was created to replace ScaLAPACK, which was not suitable for modern accelerated architectures. SLATE is written in C++ and was designed primarily to serve as a dense linear algebra library to the United States Department of Energy and to the high-performance computing community at large. Examples * Pro ...
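
The two-dimensional block cyclic decomposition mentioned above can be sketched as an index mapping: blocks of rows are dealt out round-robin to process rows, and blocks of columns to process columns. The code below is a minimal illustration in plain Python, assuming zero-based indices and that the first block lives on process (0, 0); the function names and the sample block sizes and grid shape are hypothetical and are not ScaLAPACK routines.

```python
def block_cyclic_owner(global_index, block_size, num_procs):
    """Map a global index to (owning process, local index) in one dimension.

    Assumes zero-based indices and that block 0 belongs to process 0.
    """
    block = global_index // block_size   # which block the index falls in
    proc = block % num_procs             # blocks are dealt out round-robin
    local_block = block // num_procs     # position of that block on its owner
    local_index = local_block * block_size + global_index % block_size
    return proc, local_index


def owner_2d(i, j, row_block, col_block, proc_rows, proc_cols):
    """Apply the 1-D mapping to rows and columns independently."""
    p_row, local_i = block_cyclic_owner(i, row_block, proc_rows)
    p_col, local_j = block_cyclic_owner(j, col_block, proc_cols)
    return (p_row, p_col), (local_i, local_j)


# Hypothetical setup: 2x2 blocks distributed over a 2x3 process grid.
grid_coords, local_coords = owner_2d(5, 2, 2, 2, 2, 3)
```

Because consecutive blocks go to different processes, each process ends up holding pieces from all over the matrix, which helps keep the work balanced as a factorization shrinks the active part of the matrix.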

Distributed Memory

In computer science, distributed memory refers to a multiprocessor computer system in which each processor has its own private memory. Computational tasks can only operate on local data, and if remote data are required, the computational task must communicate with one or more remote processors. In contrast, a shared memory multiprocessor offers a single memory space used by all processors. Processors do not have to be aware where data resides, except that there may be performance penalties, and that race conditions are to be avoided. In a distributed memory system there is typically a processor, a memory, and some form of interconnection that allows programs on each processor to interact with each other. The interconnect can be organised with point-to-point links, or separate hardware can provide a switching network. The network topology is a key factor in deter ...

Superscalar Processor

A superscalar processor (or multiple-issue processor) is a CPU that implements a form of parallelism called instruction-level parallelism within a single processor. In contrast to a scalar processor, which can execute at most one single instruction per clock cycle, a superscalar processor can execute or start executing more than one instruction during a clock cycle by simultaneously dispatching multiple instructions to different execution units on the processor. It therefore allows more throughput (the number of instructions that can be executed in a unit of time which can even be less than 1) than would otherwise be possible at a given clock rate. Each execution unit is not a separate processor (or a core if the processor is a multi-core processor), but an execution resource within a single CPU such as an arithmetic logic unit. While a superscalar CPU is typically also pipelined, superscalar and pipelining execution are considered different performance enhancement techniq ...

Instruction-level Parallelism

Instruction-level parallelism (ILP) is the parallel or simultaneous execution of a sequence of instructions in a computer program. More specifically, ILP refers to the average number of instructions run per step of this parallel execution. ILP must not be confused with concurrency. In ILP, there is a single specific thread of execution of a process. On the other hand, concurrency involves the assignment of multiple threads to a CPU's core in a strict alternation, or in true parallelism if there are enough CPU cores, ideally one core for each runnable thread. There are two approaches to instruction-level parallelism: hardware and software. Hardware-level ILP works upon dynamic parallelism, whereas software-level ILP works on static parallelism. Dynamic parallelism means that the processor decides at run time whic ...

CPU Cache

A CPU cache is a hardware cache used by the central processing unit (CPU) of a computer to reduce the average cost (time or energy) to access data from the main memory. A cache is a smaller, faster memory, located closer to a processor core, which stores copies of the data from frequently used main memory locations. Most CPUs have a hierarchy of multiple cache levels (L1, L2, often L3, and rarely even L4), with different instruction-specific and data-specific caches at level 1. The cache memory is typically implemented with static random-access memory (SRAM), which in modern CPUs accounts for by far the largest part of the chip area. SRAM is not always used for all levels (of I- or D-cache), or even any level; sometimes the later levels, or all levels, are implemented with eDRAM. Other types of caches exist (that are not counted towards the "cache size" of the most important caches mentioned above), such as the translation lookaside buffer (TLB) which is part of the memory management unit (M ...

Vector Processor

In computing, a vector processor or array processor is a central processing unit (CPU) that implements an instruction set where its instructions are designed to operate efficiently and effectively on large one-dimensional arrays of data called *vectors*. This is in contrast to scalar processors, whose instructions operate on single data items only, and in contrast to some of those same scalar processors having additional single instruction, multiple data (SIMD) or SIMD within a register (SWAR) arithmetic units. Vector processors can greatly improve performance on certain workloads, notably numerical simulation, compression and similar tasks. Vector processing techniques also operate in video-game console hardware and in graphics accelerators. Vector machines appeared in the early 1970s and dominated supercomputer design through the 1970s into the 1990s, notably the various Cray platforms. The rapid fall in the price-to-performance ratio of conventional microprocessor de ...