
PERMANOVA

Permutational multivariate analysis of variance (PERMANOVA) is a non-parametric multivariate statistical permutation test. PERMANOVA is used to compare groups of objects and test the null hypothesis that the centroids and dispersion of the groups, as defined in the measure space, are equivalent for all groups. A rejection of the null hypothesis means that the centroid, the spread of the objects, or both differ between the groups. The test is therefore based on the prior calculation of the distance between any two objects included in the experiment. PERMANOVA resembles ANOVA in that both measure the sums of squares within and between groups and use an F test to compare within-group to between-group variance. However, while ANOVA bases the significance of the result on an assumption of normality, PERMANOVA tests for significance by comparing the actual F test result to that obtained from random permutations of the objects between the groups. Moreover, whilst P ...
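The procedure described above can be sketched in a few lines of Python. This is only an illustration, not the implementation used by any particular package: it assumes squared Euclidean distances, a single grouping factor, and the usual distance-based pseudo-F ratio. The names `pseudo_f`, `permanova`, `n_perm`, and `seed`, as well as the sample data, are hypothetical.

```python
import random
from itertools import combinations


def sq_dist(p, q):
    """Squared Euclidean distance between two points (assumed measure)."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def pseudo_f(points, labels):
    """Pseudo-F ratio built only from pairwise distances between objects."""
    n = len(points)
    groups = sorted(set(labels))
    # Total sum of squares: all squared pairwise distances divided by n.
    ss_total = sum(sq_dist(points[i], points[j])
                   for i, j in combinations(range(n), 2)) / n
    # Within-group sum of squares: the same quantity inside each group.
    ss_within = 0.0
    for g in groups:
        idx = [i for i in range(n) if labels[i] == g]
        ss_within += sum(sq_dist(points[i], points[j])
                         for i, j in combinations(idx, 2)) / len(idx)
    ss_between = ss_total - ss_within
    a = len(groups)
    return (ss_between / (a - 1)) / (ss_within / (n - a))


def permanova(points, labels, n_perm=999, seed=0):
    """Compare the observed pseudo-F with values from shuffled labels."""
    rng = random.Random(seed)
    observed = pseudo_f(points, labels)
    shuffled = list(labels)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)  # reassign objects to groups at random
        if pseudo_f(points, shuffled) >= observed - 1e-12:
            hits += 1
    # The observed labelling counts as one of the permutations.
    return observed, (hits + 1) / (n_perm + 1)


# Hypothetical data: two well-separated groups of four objects each.
points = [(0, 0), (0, 1), (1, 0), (1, 1), (5, 5), (5, 6), (6, 5), (6, 6)]
labels = ["A"] * 4 + ["B"] * 4
f_obs, p_value = permanova(points, labels)
```

Because the permutations are drawn at random, the p-value is an estimate; a larger `n_perm` gives a finer resolution at the cost of more computation.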

Primer-E Primer

Plymouth Routines In Multivariate Ecological Research (PRIMER) is a statistical package comprising specialist univariate, multivariate, and graphical routines for analyzing species sampling data in community ecology. The data analyzed are typically species abundance, biomass, presence/absence, and percent area cover, among others. It is primarily used in the scientific community for ecological and environmental studies. Multivariate routines include:

* grouping (CLUSTER)
* sorting (MDS)
* principal component identification (PCA)
* hypothesis testing (ANOSIM)
* sample discrimination (SIMPER)
* trend correlation (BEST)
* comparisons (RELATE)
* diversity, dominance, and distribution calculation
* permutational multivariate analysis of variance (PERMANOVA)

Routines can be resource intensive due to their non-parametric and permutation-based nature. PRIMER is programmed in the VB.Net environment.

See also: Comparison of statistical packages

Permutation Test

A permutation test (also called re-randomization test) is an exact statistical hypothesis test making use of the proof by contradiction. A permutation test involves two or more samples. The null hypothesis is that all samples come from the same distribution, $H_0: F = G$. Under the null hypothesis, the distribution of the test statistic is obtained by calculating all possible values of the test statistic under possible rearrangements of the observed data. Permutation tests are, therefore, a form of resampling. Permutation tests can be understood as surrogate data testing where the surrogate data under the null hypothesis are obtained through permutations of the original data. In other words, the method by which treatments are allocated to subjects in an experimental design is mirrored in the analysis of that design. If the labels are exchangeable under the null hypothesis, then the resulting tests yield exact significance levels; see also exchangeability. Confidence intervals can th ...
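As a minimal sketch of the idea, the following Python function enumerates every way of splitting the pooled data into two groups of the original sizes and counts how often the rearranged statistic is at least as extreme as the observed one. The choice of test statistic (absolute difference in means) and the name `exact_permutation_test` are assumptions made for illustration; full enumeration is only practical for small samples.

```python
from itertools import combinations
from statistics import mean


def exact_permutation_test(x, y):
    """Exact two-sample permutation p-value for a difference in means."""
    pooled = list(x) + list(y)
    n, m = len(pooled), len(x)
    observed = abs(mean(x) - mean(y))
    extreme = total = 0
    # Every choice of m indices is one possible relabelling of the data.
    for idx in combinations(range(n), m):
        chosen = set(idx)
        group_x = [pooled[i] for i in idx]
        group_y = [pooled[i] for i in range(n) if i not in chosen]
        total += 1
        # Small tolerance guards against floating-point ties.
        if abs(mean(group_x) - mean(group_y)) >= observed - 1e-12:
            extreme += 1
    return extreme / total


# Hypothetical samples: only 2 of the 20 possible splits are this extreme.
p_value = exact_permutation_test([1, 2, 3], [4, 5, 6])
```

With three observations per group there are only 20 rearrangements, so the smallest attainable two-sided p-value is 2/20.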

Non-parametric Statistics

Nonparametric statistics is the branch of statistics that is not based solely on parametrized families of probability distributions (common examples of parameters are the mean and variance). Nonparametric statistics is based on either being distribution-free or having a specified distribution but with the distribution's parameters unspecified. Nonparametric statistics includes both descriptive statistics and statistical inference. Nonparametric tests are often used when the assumptions of parametric tests are violated. Definitions: The term "nonparametric statistics" has been imprecisely defined in two ways, among others. Applications and purpose: Non-parametric methods are widely used for studying populations that take on a ranked order (such as movie reviews receiving one to four stars). The use of non-parametric methods may be necessary when data have a ranking but no clear numerical interpretation, such as when assessing preferences. In terms of levels of me ...

Multivariate Statistics

Multivariate statistics is a subdivision of statistics encompassing the simultaneous observation and analysis of more than one outcome variable. Multivariate statistics concerns understanding the different aims and background of each of the different forms of multivariate analysis, and how they relate to each other. The practical application of multivariate statistics to a particular problem may involve several types of univariate and multivariate analyses in order to understand the relationships between variables and their relevance to the problem being studied. In addition, multivariate statistics is concerned with multivariate probability distributions, in terms of both:

* how these can be used to represent the distributions of observed data;
* how they can be used as part of statistical inference, particularly where several different quantities are of interest to the same analysis.

Certain types of problems involving multivariate data, for example simple linear regression a ...

Null Hypothesis

In scientific research, the null hypothesis (often denoted $H_0$) is the claim that no difference or relationship exists between two sets of data or variables being analyzed. The null hypothesis is that any experimentally observed difference is due to chance alone, and an underlying causative relationship does not exist, hence the term "null". In addition to the null hypothesis, an alternative hypothesis is also developed, which claims that a relationship does exist between two variables. Basic definitions: The null hypothesis and the alternative hypothesis are types of conjectures used in statistical tests, which are formal methods of reaching conclusions or making decisions on the basis of data. The hypotheses are conjectures about a statistical model of the population, which are based on a sample of the population. The tests are core elements of statistical inference, heavily used in the interpretation of scientific experimental data, to separate scientific claims ...

Centroids

In mathematics and physics, the centroid, also known as geometric center or center of figure, of a plane figure or solid figure is the arithmetic mean position of all the points in the surface of the figure. The same definition extends to any object in n-dimensional Euclidean space. In geometry, one often assumes uniform mass density, in which case the barycenter or center of mass coincides with the centroid. Informally, it can be understood as the point at which a cutout of the shape (with uniformly distributed mass) could be perfectly balanced on the tip of a pin. In physics, if variations in gravity are considered, then a center of gravity can be defined as the weighted mean of all points weighted by their specific weight. In geography, the centroid of a radial projection of a region of the Earth's surface to sea level is the region's geographical center. History: The term "centroid" is of recent coinage (1814). It is used as a substitute for the olde ...
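For a finite set of points, which is the case that matters when comparing groups of objects, the "arithmetic mean position" reduces to averaging each coordinate separately. The sketch below assumes points given as equal-length tuples; it does not handle continuous figures, and the name `centroid` is illustrative.

```python
def centroid(points):
    """Coordinate-wise arithmetic mean of a finite set of points."""
    n = len(points)
    # zip(*points) groups the first coordinates, the second coordinates, ...
    return tuple(sum(coords) / n for coords in zip(*points))


# Corners of a 2 x 2 square: the centroid is the middle of the square.
square_center = centroid([(0, 0), (2, 0), (2, 2), (0, 2)])
# The same function works unchanged in three (or n) dimensions.
cube_corner_mean = centroid([(0, 0, 0), (2, 4, 6)])
```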

Statistical Dispersion

In statistics, dispersion (also called variability, scatter, or spread) is the extent to which a distribution is stretched or squeezed. Common examples of measures of statistical dispersion are the variance, standard deviation, and interquartile range. For instance, when the variance of data in a set is large, the data is widely scattered. On the other hand, when the variance is small, the data in the set is clustered. Dispersion is contrasted with location or central tendency, and together they are the most used properties of distributions. Measures: A measure of statistical dispersion is a nonnegative real number that is zero if all the data are the same and increases as the data become more diverse. Most measures of dispersion have the same units as the quantity being measured. In other words, if the measurements are in metres or seconds, so is the measure of dispersion. Examples of dispersion measures include:

* Standard deviation
* Interquartile range (IQR)
* Range
* ...
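The measures named above can be computed with Python's standard `statistics` module, as in the sketch below. The data are hypothetical, the population (rather than sample) variance and standard deviation are used, and the interquartile range depends on the quantile convention; here the default method of `statistics.quantiles` is assumed.

```python
from statistics import pstdev, pvariance, quantiles

# Hypothetical measurements, for example lengths in metres.
data = [2, 4, 4, 4, 5, 5, 7, 9]

variance = pvariance(data)        # in squared units (metres squared)
std_dev = pstdev(data)            # same units as the data (metres)
value_range = max(data) - min(data)

# Quartiles with the default convention of statistics.quantiles.
q1, _q2, q3 = quantiles(data, n=4)
iqr = q3 - q1

# A dispersion measure is zero when all the data are the same.
no_spread = pvariance([3, 3, 3])
```

Note that the variance is expressed in squared units, which is why the standard deviation is usually the more convenient summary.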

ANOVA

Analysis of variance (ANOVA) is a collection of statistical models and their associated estimation procedures (such as the "variation" among and between groups) used to analyze the differences among means. ANOVA was developed by the statistician Ronald Fisher. ANOVA is based on the law of total variance, where the observed variance in a particular variable is partitioned into components attributable to different sources of variation. In its simplest form, ANOVA provides a statistical test of whether two or more population means are equal, and therefore generalizes the t-test beyond two means. In other words, the ANOVA is used to test the difference between two or more means. History: While the analysis of variance reached fruition in the 20th century, antecedents extend centuries into the past according to Stigler. These include hypothesis testing, the partitioning of sums of squares, experimental techniques and the additive model. Laplace was performing hypothesis testing ...
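The partitioning of variation in the simplest (one-way) case can be illustrated as follows. This sketch only computes the sums of squares and the F ratio for hypothetical data; it does not look up a p-value from the F-distribution, and the name `one_way_anova` is an assumption.

```python
from statistics import mean


def one_way_anova(groups):
    """Return (ss_between, ss_within, F) for a list of groups of numbers."""
    values = [v for g in groups for v in g]
    grand_mean = mean(values)
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of the observations around their own group mean.
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    df_between = len(groups) - 1
    df_within = len(values) - len(groups)
    f_ratio = (ss_between / df_between) / (ss_within / df_within)
    return ss_between, ss_within, f_ratio


# Hypothetical data: three groups of three observations each.
groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
ss_between, ss_within, f_ratio = one_way_anova(groups)

# The two components add up to the total sum of squares.
all_values = [v for g in groups for v in g]
ss_total = sum((v - mean(all_values)) ** 2 for v in all_values)
```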

F Test

An F-test is any statistical test in which the test statistic has an F-distribution under the null hypothesis. It is most often used when comparing statistical models that have been fitted to a data set, in order to identify the model that best fits the population from which the data were sampled. Exact "F-tests" mainly arise when the models have been fitted to the data using least squares. The name was coined by George W. Snedecor, in honour of Ronald Fisher. Fisher initially developed the statistic as the variance ratio in the 1920s. Common examples of the use of F-tests include the study of the following cases:

* The hypothesis that the means of a given set of normally distributed populations, all having the same standard deviation, are equal. This is perhaps the best-known F-test, and plays an important role in the analysis of variance (ANOVA).
* The hypothesis that a proposed regression model fits the data well. See Lack-of-fit sum ...

Statistical Significance

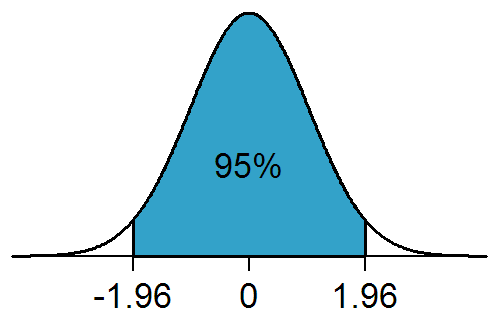

In statistical hypothesis testing, a result has statistical significance when it would be very unlikely to occur if the null hypothesis were true (that is, by chance alone). More precisely, a study's defined significance level, denoted by $\alpha$, is the probability of the study rejecting the null hypothesis, given that the null hypothesis is true; and the p-value of a result, $p$, is the probability of obtaining a result at least as extreme, given that the null hypothesis is true. The result is statistically significant, by the standards of the study, when $p \le \alpha$. The significance level for a study is chosen before data collection, and is typically set to 5% or much lower, depending on the field of study. In any experiment or observation that involves drawing a sample from a population, there is always the possibility that an observed effect would have occurred due to sampling error alone. But if the p-value of an observed effect is less than (or equal to) the significan ...
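The decision rule itself is a single comparison, sketched below. The function name `is_significant` and the default level of 0.05 are assumptions for illustration; in practice the level is fixed before the data are collected.

```python
def is_significant(p_value, alpha=0.05):
    """Apply the rule 'significant when p <= alpha'."""
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("a p-value must lie between 0 and 1")
    return p_value <= alpha


at_threshold = is_significant(0.05)        # p equal to alpha still counts
just_above = is_significant(0.051)
stricter_level = is_significant(0.03, alpha=0.01)
```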

Averages

In ordinary language, an average is a single number taken as representative of a list of numbers, usually the sum of the numbers divided by how many numbers are in the list (the arithmetic mean). For example, the average of the numbers 2, 3, 4, 7, and 9 (summing to 25) is 5. Depending on the context, an average might be another statistic such as the median or the mode. For example, the average personal income is often given as the median (the number below which are 50% of personal incomes and above which are 50% of personal incomes), because the mean would be pulled higher by the personal incomes of a few billionaires. For this reason, it is recommended to avoid using the word "average" when discussing measures of central tendency. General properties: If all numbers in a list are the same number, then their average is also equal to this number. This property is shared by each of the many types of average. Another universal property is monotonicity: if two lists of numbers A an ...
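The worked example and the income illustration above can be reproduced with Python's standard `statistics` module. The income figures below are invented purely to show how a single very large value moves the mean but not the median.

```python
from statistics import mean, median

numbers = [2, 3, 4, 7, 9]
arithmetic_mean = mean(numbers)    # 25 divided by 5
middle_value = median(numbers)     # the third of the five sorted values

# Hypothetical personal incomes with one extreme earner.
incomes = [30_000, 40_000, 50_000, 60_000, 1_000_000_000]
mean_income = mean(incomes)
median_income = median(incomes)

# If every number in the list is the same, the average equals that number.
constant_mean = mean([6, 6, 6])
constant_median = median([6, 6, 6])
```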