Noise-predictive Maximum-likelihood Detection

Noise-Predictive Maximum-Likelihood (NPML) is a class of digital signal-processing methods suitable for magnetic data storage systems that operate at high linear recording densities. It is used for retrieval of data recorded on magnetic media. Data are read back by the read head, producing a weak and noisy analog signal. NPML aims at minimizing the influence of noise in the detection process. Successfully applied, it allows recording data at higher areal densities. Alternatives include peak detection, partial-response maximum-likelihood (PRML), and extended partial-response maximum-likelihood (EPRML) detection. Although advances in head and media technologies have historically been the driving forces behind increases in areal recording density, digital signal processing and coding have established themselves as cost-efficient techniques for enabling additional increases in areal density while preserving reliability. Accordingly, the deployment of sophisticated detection scheme ...

Signal Processing

Signal processing is an electrical engineering subfield that focuses on analyzing, modifying and synthesizing signals, such as sound, images, and scientific measurements. Signal processing techniques are used to optimize transmissions, improve digital storage efficiency, correct distorted signals, enhance subjective video quality, and detect or pinpoint components of interest in a measured signal. History: According to Alan V. Oppenheim and Ronald W. Schafer, the principles of signal processing can be found in the classical numerical analysis techniques of the 17th century. They further state that the digital refinement of these techniques can be found in the digital control systems of the 1940s and 1950s. In 1948, Claude Shannon wrote the influential paper "A Mathematical Theory of Communication", which was published in the Bell System Technical Journal. The paper laid the groundwork for later development of information c ...

Markov Chain

A Markov chain or Markov process is a stochastic model describing a sequence of possible events in which the probability of each event depends only on the state attained in the previous event. Informally, this may be thought of as, "What happens next depends only on the state of affairs now." A countably infinite sequence, in which the chain moves state at discrete time steps, gives a discrete-time Markov chain (DTMC). A continuous-time process is called a continuous-time Markov chain (CTMC). It is named after the Russian mathematician Andrey Markov. Markov chains have many applications as statistical models of real-world processes, such as studying cruise control systems in motor vehicles, queues or lines of customers arriving at an airport, currency exchange rates and animal population dynamics. Markov processes are the basis for general stochastic simulation methods known as Markov chain Monte Carlo, which are used for simulating sampling from complex probability dist ...
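The defining property, that the next state depends only on the current one, can be illustrated with a short simulation. This is a minimal sketch: the two-state "weather" chain, its transition probabilities, and the names `TRANSITIONS`, `step`, and `simulate` are illustrative assumptions, not taken from the text.

```python
import random

# Hypothetical two-state chain; states and probabilities are illustrative only.
TRANSITIONS = {
    "sunny": {"sunny": 0.9, "rainy": 0.1},
    "rainy": {"sunny": 0.5, "rainy": 0.5},
}


def step(state, rng):
    """Draw the next state using only the current state (the Markov property)."""
    next_states = list(TRANSITIONS[state])
    weights = [TRANSITIONS[state][s] for s in next_states]
    return rng.choices(next_states, weights=weights, k=1)[0]


def simulate(start, n_steps, seed=0):
    """Return a discrete-time path of n_steps transitions, beginning at start."""
    rng = random.Random(seed)  # seeded so that repeated runs give the same path
    path = [start]
    for _ in range(n_steps):
        path.append(step(path[-1], rng))
    return path


path = simulate("sunny", 10)
print(path)
```

Because `step` receives only the current state, earlier history cannot influence the draw; this is exactly the restriction that distinguishes a Markov chain from a general stochastic process.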

Partial-response Maximum-likelihood

In computer data storage, partial-response maximum-likelihood (PRML) is a method for recovering the digital data from the weak analog read-back signal picked up by the head of a magnetic disk drive or tape drive. PRML was introduced to recover data more reliably or at a greater areal density than earlier simpler schemes such as peak detection. These advances are important because most of the digital data in the world is stored using magnetic storage on hard disk or tape drives. Ampex introduced PRML in a tape drive in 1984. IBM introduced PRML in a disk drive in 1990 and also coined the acronym PRML. Many advances have taken place since the initial introduction. Recent read/write channels operate at much higher data rates, are fully adaptive, and, in particular, include the ability to handle nonlinear signal distortion and non-stationary, colored, data-depen ...

Maximum Likelihood

In statistics, maximum likelihood estimation (MLE) is a method of estimating the parameters of an assumed probability distribution, given some observed data. This is achieved by maximizing a likelihood function so that, under the assumed statistical model, the observed data is most probable. The point in the parameter space that maximizes the likelihood function is called the maximum likelihood estimate. The logic of maximum likelihood is both intuitive and flexible, and as such the method has become a dominant means of statistical inference. If the likelihood function is differentiable, the derivative test for finding maxima can be applied. In some cases, the first-order conditions of the likelihood function can be solved analytically; for instance, the ordinary least squares estimator for a linear regression model maximizes the likelihood when ...
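As a concrete case where the first-order conditions can be solved analytically, consider independent samples from a normal distribution: the likelihood is maximized by the sample mean and the (biased) sample variance. The sketch below assumes that Gaussian model; the data values and the function names are illustrative only.

```python
import math


def gaussian_log_likelihood(data, mu, sigma2):
    """Log-likelihood of i.i.d. normal samples with mean mu and variance sigma2."""
    n = len(data)
    squared_error = sum((x - mu) ** 2 for x in data)
    return -0.5 * n * math.log(2 * math.pi * sigma2) - squared_error / (2 * sigma2)


def gaussian_mle(data):
    """Closed-form maximizer: sample mean and variance with divisor n (not n - 1)."""
    n = len(data)
    mu = sum(data) / n
    sigma2 = sum((x - mu) ** 2 for x in data) / n
    return mu, sigma2


data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]  # illustrative observations
mu_hat, sigma2_hat = gaussian_mle(data)
print(mu_hat, sigma2_hat)
```

Evaluating `gaussian_log_likelihood` at any other parameter pair gives a smaller value than at `(mu_hat, sigma2_hat)`, which is what "maximum likelihood estimate" means for this model.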

Linear Tape-Open

Linearity is the property of a mathematical relationship (function) that can be graphically represented as a straight line. Linearity is closely related to proportionality. Examples in physics include rectilinear motion, the linear relationship of voltage and current in an electrical conductor (Ohm's law), and the relationship of mass and weight. By contrast, more complicated relationships are nonlinear. Generalized for functions in more than one dimension, linearity means the property of a function of being compatible with addition and scaling, also known as the superposition principle. The word linear comes fr ...

Autoregressive–moving-average Model

In the statistical analysis of time series, autoregressive–moving-average (ARMA) models provide a parsimonious description of a (weakly) stationary stochastic process in terms of two polynomials, one for the autoregression (AR) and the second for the moving average (MA). The general ARMA model was described in the 1951 thesis of Peter Whittle, "Hypothesis testing in time series analysis", and it was popularized in the 1970 book by George E. P. Box and Gwilym Jenkins. Given a time series of data X_t, the ARMA model is a tool for understanding and, perhaps, predicting future values in this series. The AR part involves regressing the variable on its own lagged (i.e., past) values. The MA part involves modeling the error term as a linear combination of error terms occurring contemporaneously and at various times in the past. The model is usually referred to as the ARMA(p, q) model, where p is the order of the AR part and q is the order of the MA part (as defined b ...

Autoregressive Model

In statistics, econometrics and signal processing, an autoregressive (AR) model is a representation of a type of random process; as such, it is used to describe certain time-varying processes in nature, economics, etc. The autoregressive model specifies that the output variable depends linearly on its own previous values and on a stochastic term (an imperfectly predictable term); thus the model is in the form of a stochastic difference equation (or recurrence relation, which should not be confused with a differential equation). Together with the moving-average (MA) model, it is a special case and key component of the more general autoregressive–moving-average (ARMA) and autoregressive integrated moving average (ARIMA) models of time series, which have a more complicated stochastic structure; it is also a special case of the vector autoregressive model (VAR), which consists of a system of more than one interlocking stochastic difference equation in more than one evolving random vari ...
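The stochastic difference equation is easiest to see in the first-order case, where each value is a constant plus a multiple of the previous value plus a noise term. The following is a minimal sketch of that AR(1) recurrence; the parameter names `c`, `phi`, and `sigma`, the Gaussian noise assumption, and the function name are illustrative choices rather than notation from the text.

```python
import random


def simulate_ar1(c, phi, sigma, n_steps, x0=0.0, seed=0):
    """Simulate x_t = c + phi * x_{t-1} + eps_t with eps_t ~ Normal(0, sigma^2).

    Returns the list [x_0, x_1, ..., x_n_steps].
    """
    rng = random.Random(seed)
    series = [x0]
    for _ in range(n_steps):
        noise = rng.gauss(0.0, sigma)  # the imperfectly predictable term
        series.append(c + phi * series[-1] + noise)
    return series


# With the noise switched off, only the deterministic recurrence remains.
print(simulate_ar1(c=1.0, phi=0.5, sigma=0.0, n_steps=3))

# With noise, the series fluctuates around c / (1 - phi) when |phi| < 1.
noisy = simulate_ar1(c=1.0, phi=0.5, sigma=0.1, n_steps=200)
print(noisy[-5:])
```

Setting `sigma=0.0` is a convenient way to check the recurrence by hand before adding the stochastic term back in.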

Computer Coding

Computer programming is the process of performing a particular computation (or more generally, accomplishing a specific computing result), usually by designing and building an executable computer program. Programming involves tasks such as analysis, generating algorithms, profiling algorithms' accuracy and resource consumption, and the implementation of algorithms (usually in a chosen programming language, commonly referred to as coding). The source code of a program is written in one or more languages that are intelligible to programmers, rather than machine code, which is directly executed by the central processing unit. The purpose of programming is to find a sequence of instructions that will automate the performance of a task (which can be as complex as an operating system) on a computer, often for solving a given problem. Proficient programming thus usually requires expertise in several different subjects, including knowledge of the application domain, specialized algorith ...

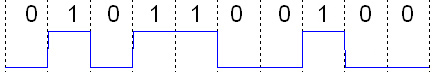

Digital Signal

A digital signal is a signal that represents data as a sequence of discrete values; at any given time it can only take on, at most, one of a finite number of values. This contrasts with an analog signal, which represents continuous values; at any given time it represents a real number within a continuous range of values. Simple digital signals represent information in discrete bands of analog levels. All levels within a band of values represent the same information state. In most digital circuits, the signal can have two possible valid values; this is called a binary signal or logic signal. They are represented by two voltage bands: one near a reference value (typically termed ground or zero volts), and the other a value near the supply voltage. These correspond to the two values "zero" and "one" (or "false" and "true") of the Boolean domain, so at any given time a binary signal represents one binary digit (bit). Because of this discretization, relatively small chan ...
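The idea that every level within a band represents the same information state can be sketched as a simple threshold rule. The band limits below (`LOW_MAX` and `HIGH_MIN`, in volts) and the sample voltages are hypothetical values chosen for illustration; real logic families define their own limits.

```python
# Hypothetical band limits in volts; actual limits depend on the logic family.
LOW_MAX = 0.8   # at or below this level the signal is read as 0
HIGH_MIN = 2.0  # at or above this level the signal is read as 1


def to_bit(voltage):
    """Map an analog level to a bit, or None if it lies between the two bands."""
    if voltage <= LOW_MAX:
        return 0
    if voltage >= HIGH_MIN:
        return 1
    return None  # neither band: no valid logic value


samples = [0.1, 0.3, 3.1, 3.3, 1.4]  # illustrative read-back voltages
bits = [to_bit(v) for v in samples]
print(bits)
```

The first two samples differ in voltage but decode to the same bit, as do the third and fourth; only a level that leaves its band, like the last sample, changes the outcome.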

BCJR

The BCJR algorithm is an algorithm for maximum a posteriori decoding of error correcting codes defined on trellises (principally convolutional codes). The algorithm is named after its inventors: Bahl, Cocke, Jelinek and Raviv (L. Bahl, J. Cocke, F. Jelinek, and J. Raviv, "Optimal Decoding of Linear Codes for minimizing symbol error rate", IEEE Transactions on Information Theory, vol. IT-20(2), pp. 284-287, March 1974). This algorithm is critical to modern iteratively-decoded error-correcting codes, including turbo codes and low-density parity-check codes.

Steps involved, based on the trellis:

* Compute forward probabilities $\alpha$
* Compute backward probabilities $\beta$
* Compute smoothed probabilities based on other information (e.g. noise variance for AWGN, bit crossover probability for binary symmetric channel)

Variations:

* SBGT BCJR: Berrou, Glavieux and Thitimajshima simplification.
* Log-Map BCJR: P. Robertson, P. Hoeher and E. Villebrun, "Optimal and Sub-Optimal Maximum A Poster ...
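The forward, backward, and smoothing steps can be sketched on a tiny trellis. This is a simplified illustration rather than a full decoder: it assumes the branch weights (often written gamma, combining transition and channel-observation probabilities) are already given, uses a made-up two-state trellis, and returns posterior state probabilities instead of decoded bits. The function and variable names are hypothetical.

```python
def forward_backward(gammas, init):
    """Posterior state probabilities on a trellis.

    gammas[t][i][j]: branch weight for moving from state i to state j at step t.
    init[i]: prior weight of starting in state i.
    Returns posteriors[t][s] for t = 0..T, where T = len(gammas).
    """
    n = len(init)
    steps = len(gammas)

    # Forward recursion (alpha), normalized at each step for numerical stability.
    alphas = [[v / sum(init) for v in init]]
    for t in range(steps):
        a = [sum(alphas[t][i] * gammas[t][i][j] for i in range(n)) for j in range(n)]
        total = sum(a)
        alphas.append([v / total for v in a])

    # Backward recursion (beta), starting from all-ones at the end of the trellis.
    betas = [[1.0] * n for _ in range(steps + 1)]
    for t in range(steps - 1, -1, -1):
        b = [sum(gammas[t][i][j] * betas[t + 1][j] for j in range(n)) for i in range(n)]
        total = sum(b)
        betas[t] = [v / total for v in b]

    # Smoothed probabilities: combine forward and backward terms and renormalize.
    posteriors = []
    for t in range(steps + 1):
        p = [alphas[t][s] * betas[t][s] for s in range(n)]
        total = sum(p)
        posteriors.append([v / total for v in p])
    return posteriors


# Illustrative two-state, two-step trellis; the numbers are made up.
init = [0.5, 0.5]
gammas = [
    [[0.72, 0.02], [0.08, 0.18]],
    [[0.08, 0.18], [0.72, 0.02]],
]
posteriors = forward_backward(gammas, init)
print(posteriors)
```

The per-step normalization only rescales each alpha and beta vector, so it cancels when the final product is renormalized; practical decoders often work in the log domain instead, which is the motivation for the Log-MAP variant.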

Maximum A Posteriori

In Bayesian statistics, a maximum a posteriori probability (MAP) estimate is an estimate of an unknown quantity that equals the mode of the posterior distribution. The MAP can be used to obtain a point estimate of an unobserved quantity on the basis of empirical data. It is closely related to the method of maximum likelihood (ML) estimation, but employs an augmented optimization objective which incorporates a prior distribution (that quantifies the additional information available through prior knowledge of a related event) over the quantity one wants to estimate. MAP estimation can therefore be seen as a regularization of maximum likelihood estimation. Description: Assume that we want to estimate an unobserved population parameter $\theta$ on the basis of observations $x$. Let $f$ be the sampling distribution of $x$, so that $f(x \mid \theta)$ is the probability of $x$ when the underlying population parameter is $\theta$. Then the function $\theta \mapsto f(x \mid \theta)$ is known as th ...
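The relationship between the two estimates can be made concrete with a coin-flip model. The sketch below assumes a Bernoulli likelihood with a Beta(a, b) prior on the success probability, for which the posterior mode has a closed form when a and b are both at least 1 and the denominator is positive; the counts and prior parameters are illustrative.

```python
def bernoulli_mle(successes, trials):
    """Maximum likelihood estimate of the success probability."""
    return successes / trials


def bernoulli_map(successes, trials, a, b):
    """Posterior mode under a Beta(a, b) prior (assumes a >= 1, b >= 1, and
    trials + a + b > 2 so that the mode is well defined)."""
    return (successes + a - 1) / (trials + a + b - 2)


successes, trials = 7, 10  # illustrative data: 7 successes in 10 trials

ml = bernoulli_mle(successes, trials)
map_informative = bernoulli_map(successes, trials, a=2, b=2)  # prior favors 0.5
map_uniform = bernoulli_map(successes, trials, a=1, b=1)      # flat prior

print(ml, map_informative, map_uniform)
```

With the flat Beta(1, 1) prior the MAP estimate coincides with the ML estimate, while the Beta(2, 2) prior pulls the estimate toward 0.5, which is the regularizing effect described above.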

LDPC

In information theory, a low-density parity-check (LDPC) code is a linear error correcting code, a method of transmitting a message over a noisy transmission channel. An LDPC code is constructed using a sparse Tanner graph (subclass of the bipartite graph). LDPC codes are capacity-approaching codes, which means that practical constructions exist that allow the noise threshold to be set very close to the theoretical maximum (the Shannon limit) for a symmetric memoryless channel. The noise threshold defines an upper bound for the channel noise, up to which the probability of lost information can be made as small as desired. Using iterative belief propagation techniques, LDPC codes can be decoded in time linear in their block length. LDPC codes are finding increasing use in applications requiring reliable and highly efficient information transfer over bandwidth-constrained or return-channel-constrained links in the presence of corrupting noise. Implementation of LDPC codes has lag ...