
Navigation Function

A navigation function usually refers to a function of position, velocity, acceleration and time that is used to plan robot trajectories through the environment. Generally, the goal of a navigation function is to create feasible, safe paths that avoid obstacles while allowing a robot to move from its starting configuration to its goal configuration.

Potential functions as navigation functions

Potential functions assume that the environment or workspace is known. Obstacles are assigned a high potential value, and the goal position is assigned a low potential. To reach the goal position, a robot only needs to follow the negative gradient of the surface. This concept can be formalized mathematically as follows: Let X be the state space of all possible configurations of a robot. Let X_g \subset X denote the goal region of the state space. Then a potential function \phi(x) is called a (feasible) navigation function if

1. \phi(x) = 0 \ \forall x \in X_g
2. \phi(x) = \infty if and onl ...
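The idea of following the negative gradient of a potential surface can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the goal and obstacle positions, the quadratic attraction, the short-range repulsion term, and the step sizes are all hypothetical choices, not part of the definition above, and the finite repulsion used here only approximates the "high potential" assigned to obstacles.

```python
import math

# Hypothetical scene: all positions and gains below are illustrative assumptions.
GOAL = (5.0, 5.0)        # goal position (lowest potential)
OBSTACLE = (2.5, 3.5)    # single point obstacle (high potential nearby)
INFLUENCE = 1.0          # the obstacle repels only within this distance


def potential(x, y):
    """Quadratic attraction to the goal plus a short-range repulsion."""
    attract = 0.5 * ((x - GOAL[0]) ** 2 + (y - GOAL[1]) ** 2)
    d = max(math.hypot(x - OBSTACLE[0], y - OBSTACLE[1]), 1e-6)
    repel = 0.5 * (1.0 / d - 1.0 / INFLUENCE) ** 2 if d < INFLUENCE else 0.0
    return attract + repel


def gradient(x, y, h=1e-5):
    """Central-difference estimate of the gradient of the potential."""
    gx = (potential(x + h, y) - potential(x - h, y)) / (2 * h)
    gy = (potential(x, y + h) - potential(x, y - h)) / (2 * h)
    return gx, gy


def follow_negative_gradient(start, rate=0.02, max_move=0.05,
                             tol=1e-3, max_iters=5000):
    """Step downhill until the gradient is (almost) zero."""
    x, y = start
    path = [(x, y)]
    for _ in range(max_iters):
        gx, gy = gradient(x, y)
        norm = math.hypot(gx, gy)
        if norm < tol:
            break
        # Cap the length of each move so steep regions cannot cause overshoot.
        scale = min(rate, max_move / norm)
        x, y = x - scale * gx, y - scale * gy
        path.append((x, y))
    return path


path = follow_negative_gradient((0.0, 0.0))
print("final position:", path[-1])
```

A potential built this way can have stationary points other than the goal, so plain gradient following is not guaranteed to reach the goal from every start position.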

Pf As Navigation Function

PF may refer to:

Arts and entertainment
* Pianoforte, full original name of the piano instrument
* Project Fanboy, a comic book news website

Businesses
* PF Flyers, a brand of shoes
* Palestinian Airlines (IATA airline designator), a defunct airline
* Pell Frischmann, a multi-disciplinary engineering consultancy

Economics and finance
* Point and figure chart in the technical analysis of securities
* Pfennig, a unit of currency formerly used in Germany, symbol ₰
* Provident Fund (other), name of various pension funds

Language
* Phonetic form, a level of syntactic representation in linguistics
* Voiceless labiodental affricate (⟨p̪͡f⟩, ⟨p̪͜f⟩, or ⟨p̪f⟩), a type of consonant sound
* Pf (digraph), a German digraph

People
* Phineas Flynn

Organizations
* Pheasants Forever, a non-profit habitat conservation organization
* Federal Police (Polícia Federal), in Brazil
* Patients' Front, a late 1960s to early 1970s West German pro-illness movement
* ...

Gradient

In vector calculus, the gradient of a scalar-valued differentiable function f of several variables is the vector field (or vector-valued function) \nabla f whose value at a point p is the "direction and rate of fastest increase". If the gradient of a function is non-zero at a point p, the direction of the gradient is the direction in which the function increases most quickly from p, and the magnitude of the gradient is the rate of increase in that direction, the greatest absolute directional derivative. Further, a point where the gradient is the zero vector is known as a stationary point. The gradient thus plays a fundamental role in optimization theory, where it is used to maximize a function by gradient ascent. In coordinate-free terms, the gradient of a function f(\mathbf{r}) may be defined by:

df = \nabla f \cdot d\mathbf{r}

where df is the total infinitesimal change in f for an infinitesimal displacement d\mathbf{r}, and is seen to be maximal when d\mathbf{r} is in the direction of the gradi ...
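As a small numerical check of the relation between the change in a function and its gradient, the sketch below estimates a gradient by central differences and compares the actual change in f with the dot product of the gradient and a small displacement. The test function f(x, y) = x^2 + 3y, the evaluation point, and the helper name `numerical_gradient` are hypothetical choices made for illustration.

```python
def numerical_gradient(f, p, h=1e-6):
    """Central-difference estimate of the gradient of f at point p."""
    grad = []
    for i in range(len(p)):
        forward, backward = list(p), list(p)
        forward[i] += h
        backward[i] -= h
        grad.append((f(forward) - f(backward)) / (2 * h))
    return grad


def f(p):
    x, y = p
    return x ** 2 + 3 * y   # analytic gradient: (2x, 3)


point = [1.0, 2.0]
grad = numerical_gradient(f, point)

# First-order check: f(p + dr) - f(p) is approximately grad . dr for small dr.
dr = [1e-3, -2e-3]
actual_change = f([point[0] + dr[0], point[1] + dr[1]]) - f(point)
predicted_change = sum(g * d for g, d in zip(grad, dr))
print(grad, actual_change, predicted_change)
```

The two changes agree only to first order; the remaining difference shrinks as the displacement gets smaller.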

Cost Functional

Mathematical optimization (alternatively spelled optimisation) or mathematical programming is the selection of a best element, with regard to some criterion, from some set of available alternatives. It is generally divided into two subfields: discrete optimization and continuous optimization. Optimization problems arise in all quantitative disciplines from computer science and engineering to operations research and economics, and the development of solution methods has been of interest in mathematics for centuries. In the more general approach, an optimization problem consists of maximizing or minimizing a real function by systematically choosing input values from within an allowed set and computing the value of the function. The generalization of optimization theory and techniques to other formulations constitutes a large area of applied mathematics. More generally, optimization includes finding "best available" values of some objective function given a define ...
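The following sketch illustrates the "choose inputs from an allowed set and compute the function value" view for both subfields. The objective (x - 3)^2 + 1, the allowed sets, and the use of ternary search for the continuous case are assumptions picked for illustration; ternary search is only valid here because this objective has a single minimum on the interval.

```python
def objective(x):
    return (x - 3.0) ** 2 + 1.0   # hypothetical cost, minimal at x = 3


# Discrete optimization: evaluate every element of a finite allowed set.
allowed = range(0, 11)
best_discrete = min(allowed, key=objective)


def ternary_search(f, lo, hi, iterations=100):
    """Continuous minimization of a unimodal function on [lo, hi]."""
    for _ in range(iterations):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if f(m1) < f(m2):
            hi = m2   # the minimum cannot lie to the right of m2
        else:
            lo = m1   # the minimum cannot lie to the left of m1
    return (lo + hi) / 2.0


best_continuous = ternary_search(objective, 0.0, 10.0)
print(best_discrete, best_continuous)
```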

Optimal Control

Optimal control theory is a branch of mathematical optimization that deals with finding a control for a dynamical system over a period of time such that an objective function is optimized. It has numerous applications in science, engineering and operations research. For example, the dynamical system might be a spacecraft with controls corresponding to rocket thrusters, and the objective might be to reach the moon with minimum fuel expenditure. Or the dynamical system could be a nation's economy, with the objective to minimize unemployment; the controls in this case could be fiscal and monetary policy. A dynamical system may also be introduced to embed operations research problems within the framework of optimal control theory. Optimal control is an extension of the calculus of variations, and is a mathematical optimization method for deriving control policies. The method is largely due to the work of Lev Pontryagin and Richard Bellman in the 1950s, after contributions to calc ...
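One textbook instance of "finding a control over a period of time such that an objective is optimized" is the finite-horizon linear-quadratic regulator. The sketch below is a scalar version under assumed dynamics x[k+1] = a*x[k] + b*u[k] and an assumed quadratic cost; the system, the weights, and the function names are hypothetical and are not taken from the text above.

```python
def lqr_gains(a, b, q, r, q_final, horizon):
    """Backward Riccati recursion for the scalar system x[k+1] = a*x[k] + b*u[k]."""
    p = q_final                      # cost-to-go coefficient at the final time
    gains = []
    for _ in range(horizon):
        k = (a * b * p) / (r + b * b * p)
        p = q + a * a * p - a * b * p * k
        gains.append(k)
    gains.reverse()                  # gains[0] now applies at the first step
    return gains, p                  # p is the cost-to-go coefficient at time 0


def simulate(a, b, q, r, q_final, x0, gains):
    """Apply u[k] = -gains[k] * x[k] and accumulate the quadratic cost."""
    x, cost = x0, 0.0
    for k in gains:
        u = -k * x
        cost += q * x * x + r * u * u
        x = a * x + b * u
    return x, cost + q_final * x * x


a, b, q, r, q_final, horizon, x0 = 1.0, 1.0, 1.0, 1.0, 1.0, 20, 5.0
gains, p0 = lqr_gains(a, b, q, r, q_final, horizon)
x_end, optimal_cost = simulate(a, b, q, r, q_final, x0, gains)
_, idle_cost = simulate(a, b, q, r, q_final, x0, [0.0] * horizon)
print(optimal_cost, p0 * x0 * x0, idle_cost)
```

The simulated cost should match p0 * x0^2, the value predicted by the recursion, and it should be lower than the cost of applying no control at all.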

Bellman Equation

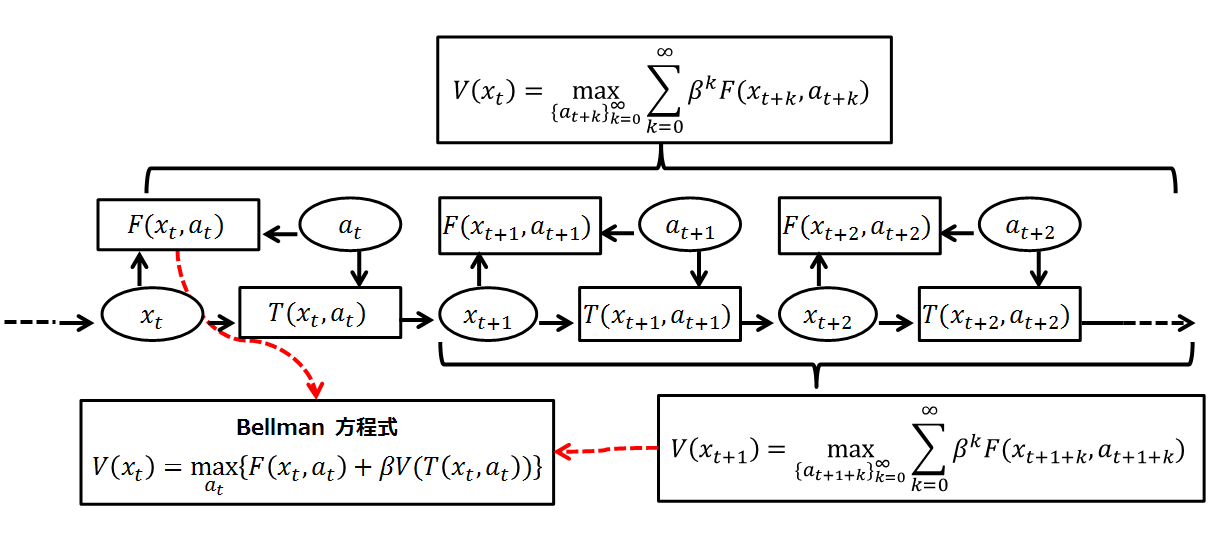

A Bellman equation, named after Richard E. Bellman, is a necessary condition for optimality associated with the mathematical optimization method known as dynamic programming. It writes the "value" of a decision problem at a certain point in time in terms of the payoff from some initial choices and the "value" of the remaining decision problem that results from those initial choices. This breaks a dynamic optimization problem into a sequence of simpler subproblems, as Bellman's "principle of optimality" prescribes. The equation applies to algebraic structures with a total ordering; for algebraic structures with a partial ordering, the generic Bellman's equation can be used. The Bellman equation was first applied to engineering control theory and to other topics in applied mathematics, and subsequently became an important tool in economic theory, though the basic concepts of dynamic programming are prefigured in John von Neumann and Oskar Morgenstern's Theory of Games and Econ ...
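The recursive structure described above, value now equals immediate payoff plus the value of the remaining problem, can be illustrated with value iteration on a tiny deterministic problem. The five-state corridor, the step reward of -1, and the names below are hypothetical choices for this sketch.

```python
N_STATES = 5          # states 0..4 along a corridor (illustrative assumption)
GOAL = 4              # terminal state with value 0
ACTIONS = (-1, +1)    # move left or right


def step(state, action):
    """Deterministic transition, clamped at the corridor ends."""
    return min(max(state + action, 0), N_STATES - 1)


def value_iteration(gamma=1.0, tol=1e-9):
    """Repeat the Bellman update V(s) = max_a [ -1 + gamma * V(s') ]."""
    values = [0.0] * N_STATES
    while True:
        delta = 0.0
        for s in range(N_STATES):
            if s == GOAL:
                continue
            best = max(-1.0 + gamma * values[step(s, a)] for a in ACTIONS)
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            return values


values = value_iteration()
print(values)  # [-4.0, -3.0, -2.0, -1.0, 0.0]
```

Each value is minus the number of steps still needed to reach the goal, which is exactly the decomposition into an initial choice plus the remaining subproblem.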

Reinforcement Learning

Reinforcement learning (RL) is an area of machine learning concerned with how intelligent agents ought to take actions in an environment in order to maximize the notion of cumulative reward. Reinforcement learning is one of three basic machine learning paradigms, alongside supervised learning and unsupervised learning. Reinforcement learning differs from supervised learning in not needing labelled input/output pairs to be presented, and in not needing sub-optimal actions to be explicitly corrected. Instead the focus is on finding a balance between exploration (of uncharted territory) and exploitation (of current knowledge). The environment is typically stated in the form of a Markov decision process (MDP), because many reinforcement learning algorithms for this context use dynamic programming techniques. The main difference between the classical dynamic programming methods and reinforcement learning algorithms is that the latter do not assume knowledge of an exact mathematica ...
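To make the exploration and exploitation trade-off concrete, here is a minimal tabular Q-learning sketch. The corridor environment, the reward of -1 per step, the hyperparameters, and the fixed random seed are all assumptions chosen for illustration. The learning loop sees only sampled transitions returned by `step`, not a model of the environment.

```python
import random

N_STATES, GOAL = 5, 4     # hypothetical corridor with a terminal goal state
ACTIONS = (-1, +1)        # move left or right


def step(state, action):
    """Environment: returns (next_state, reward, done)."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    return nxt, -1.0, nxt == GOAL


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [{a: 0.0 for a in ACTIONS} for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):                      # cap on episode length
            if rng.random() < epsilon:            # explore
                action = rng.choice(ACTIONS)
            else:                                 # exploit current estimates
                action = max(ACTIONS, key=lambda a: q[state][a])
            nxt, reward, done = step(state, action)
            target = reward if done else reward + gamma * max(q[nxt].values())
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q


q_table = q_learning()
policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(GOAL)]
print(policy)
```

With these settings the greedy policy should move right in every non-terminal state.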

Control Theory

Control theory is a field of mathematics that deals with the control of dynamical systems in engineered processes and machines. The objective is to develop a model or algorithm governing the application of system inputs to drive the system to a desired state, while minimizing any delay, overshoot, or steady-state error and ensuring a level of control stability, often with the aim to achieve a degree of optimality. To do this, a controller with the requisite corrective behavior is required. This controller monitors the controlled process variable (PV), and compares it with the reference or set point (SP). The difference between actual and desired value of the process variable, called the error signal, or SP-PV error, is applied as feedback to generate a control action to bring the controlled process variable to the same value as the set point. Other aspects which are also studied are controllability and observability. Control theory is used in control system eng ...
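The feedback loop described above (compare PV with SP, feed the error back as a control action) can be sketched with a proportional-integral controller. The first-order plant model, the gains, and the Euler time step are hypothetical choices for illustration; the text does not prescribe a particular controller.

```python
def simulate(kp, ki, setpoint=1.0, tau=1.0, dt=0.01, t_end=20.0):
    """Simulate the assumed plant tau * d(pv)/dt = -pv + u under PI feedback."""
    pv = 0.0          # process variable
    integral = 0.0    # accumulated error for the integral term
    for _ in range(int(round(t_end / dt))):
        error = setpoint - pv                  # SP - PV error signal
        integral += error * dt
        u = kp * error + ki * integral         # control action
        pv += dt * (-pv + u) / tau             # explicit Euler step of the plant
    return pv


p_only = simulate(kp=2.0, ki=0.0)   # settles at kp / (1 + kp) = 2/3 of the set point
pi = simulate(kp=2.0, ki=1.0)       # integral action removes the remaining offset
print(p_only, pi)
```

With proportional action alone this plant settles short of the set point, which is the steady-state error mentioned above. Adding the integral term drives that error toward zero.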

Robot Control

Robotic control is the system that contributes to the movement of robots. It involves the mechanical aspects and programmable systems that make it possible to control robots. Robots can be controlled in various ways, including manual control, wireless control, semi-autonomous control (a mix of fully automatic and wireless control), and fully autonomous control (in which the robot uses artificial intelligence to move on its own, possibly with an option for manual control). As technology advances, robots and their methods of control continue to develop.

Modern robots (2000-present)

Medical and surgical

In the medical field, robots are used to make precise movements that are difficult for humans. Robotic surgery involves the use of less-invasive surgical methods, which are "procedures performed through tiny incisions". Currently, robots use the da Vinci surgical method, which involves the robotic arm (whic ...

Motion Planning

Motion planning, also called path planning (and also known as the navigation problem or the piano mover's problem), is the computational problem of finding a sequence of valid configurations that moves an object from the source to the destination. The term is used in computational geometry, computer animation, robotics and computer games. For example, consider navigating a mobile robot inside a building to a distant waypoint. It should execute this task while avoiding walls and not falling down stairs. A motion planning algorithm would take a description of these tasks as input, and produce the speed and turning commands sent to the robot's wheels. Motion planning algorithms might address robots with a larger number of joints (e.g., industrial manipulators), more complex tasks (e.g., manipulation of objects), different constraints (e.g., a car that can only drive forward), and uncertainty (e.g., imperfect models of the environment or robot). Motion planning has several robotics applications, such ...
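A very small instance of "find a sequence of valid configurations from source to destination" is shortest-path search on an occupancy grid. The sketch below uses breadth-first search; the grid layout, the 4-connected moves, and the function name are assumptions for illustration, and practical planners handle continuous spaces, robot dynamics, and uncertainty that this toy example ignores.

```python
from collections import deque

# Hypothetical map: '.' is free space, '#' is a wall.
GRID = [
    "..#.",
    "..#.",
    "....",
]


def shortest_path(grid, start, goal):
    """Breadth-first search over free cells using 4-connected moves."""
    rows, cols = len(grid), len(grid[0])
    parent = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:       # walk the parent links back to start
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] != "#" and (nr, nc) not in parent:
                parent[(nr, nc)] = cell
                queue.append((nr, nc))
    return None                           # goal is unreachable


route = shortest_path(GRID, (0, 0), (0, 3))
print(route)
```

Because the wall blocks the top two rows, any valid route has to pass through the bottom row, giving a path of eight cells (seven moves).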
