Natural-language Understanding

Natural-language understanding (NLU) or natural-language interpretation (NLI) is a subtopic of natural-language processing in artificial intelligence that deals with machine reading comprehension. Natural-language understanding is considered an AI-hard problem. There is considerable commercial interest in the field because of its application to automated reasoning, machine translation, question answering, news-gathering, text categorization, voice-activation, archiving, and large-scale content analysis.

History: The program STUDENT, written in 1964 by Daniel Bobrow for his PhD dissertation at MIT, is one of the earliest known attempts at natural-language understanding by a computer. Eight years after John McCarthy coined the term artificial intelligence, Bobrow's dissertation (titled *Natural Language Input for a Computer Problem Solving System*) showed how a computer could understand simple natural language input to solve algebra word problems. A year later, in 1965, Joseph ...

Natural-language Processing

Natural language processing (NLP) is an interdisciplinary subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human language, in particular how to program computers to process and analyze large amounts of natural language data. The goal is a computer capable of "understanding" the contents of documents, including the contextual nuances of the language within them. The technology can then accurately extract information and insights contained in the documents as well as categorize and organize the documents themselves. Challenges in natural language processing frequently involve speech recognition, natural-language understanding, and natural-language generation.

History: Natural language processing has its roots in the 1950s. Already in 1950, Alan Turing published an article titled "Computing Machinery and Intelligence" which proposed what is now called the Turing test as a criterion of intelligence, tho ...

Lexicon

A lexicon is the vocabulary of a language or branch of knowledge (such as nautical or medical). In linguistics, a lexicon is a language's inventory of lexemes. The word *lexicon* derives from the Greek word λεξικόν (lexikon), neuter of λεξικός (lexikos), meaning 'of or for words'. Linguistic theories generally regard human languages as consisting of two parts: a lexicon, essentially a catalogue of a language's words (its wordstock); and a grammar, a system of rules that allows those words to be combined into meaningful sentences. The lexicon is also thought to include bound morphemes, which cannot stand alone as words (such as most affixes). In some analyses, compound words and certain classes of idiomatic expressions, collocations and other phrases are also considered to be part of the lexicon. Dictionaries are lists of the lexicon, in alphabetical order, of a given language; usually, however, bound morphemes are not included.

Size and organization: Items in the le ...

Terry Winograd

Terry Allen Winograd (born February 24, 1946) is an American professor of computer science at Stanford University, and co-director of the Stanford Human–Computer Interaction Group. He is known within the philosophy of mind and artificial intelligence fields for his work on natural language using the SHRDLU program.

Education: Winograd grew up in Colorado and graduated from Colorado College in 1966. He wrote SHRDLU as a PhD thesis at MIT from 1968 to 1970. In making the program, Winograd was concerned with the problem of providing a computer with sufficient "understanding" to be able to use natural language. Winograd built a blocks world, restricting the program's intellectual world to a simulated "world of toy blocks". The program could accept commands such as "Find a block which is taller than the one you are holding and put it into the box" and carry out the requested action using a simulated block-moving arm. The program could also respond verbally, for example, "I ...

Finite State Automata

A finite-state machine (FSM) or finite-state automaton (FSA, plural: *automata*), finite automaton, or simply a state machine, is a mathematical model of computation. It is an abstract machine that can be in exactly one of a finite number of *states* at any given time. The FSM can change from one state to another in response to some inputs; the change from one state to another is called a *transition*. An FSM is defined by a list of its states, its initial state, and the inputs that trigger each transition. Finite-state machines are of two types: deterministic finite-state machines and non-deterministic finite-state machines. A deterministic finite-state machine can be constructed equivalent to any non-deterministic one. The behavior of state machines can be observed in many devices in modern society that perform a predetermined sequence of actions depending on a sequence of events with which they are presented. Simple examples are vending machines, which dispense p ...
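The definition above (a list of states, an initial state, and the inputs that trigger each transition) can be sketched in a few lines of Python. This is only an illustrative deterministic machine: the turnstile example, the `DFA` class, and all state and input names are assumptions made for the sketch and do not come from the excerpt.

```python
from typing import Dict, Iterable, Tuple


class DFA:
    """A minimal deterministic finite-state machine (illustrative sketch)."""

    def __init__(self, initial: str, transitions: Dict[Tuple[str, str], str]):
        self.initial = initial
        # Maps (current_state, input_symbol) -> next_state.
        self.transitions = transitions

    def run(self, inputs: Iterable[str]) -> str:
        """Feed the inputs one by one and return the state the machine ends in."""
        state = self.initial
        for symbol in inputs:
            # A KeyError here means no transition is defined for this input.
            state = self.transitions[(state, symbol)]
        return state


# Hypothetical coin-operated turnstile: a coin unlocks it, a push locks it again.
turnstile = DFA(
    initial="locked",
    transitions={
        ("locked", "coin"): "unlocked",
        ("locked", "push"): "locked",
        ("unlocked", "coin"): "unlocked",
        ("unlocked", "push"): "locked",
    },
)

if __name__ == "__main__":
    print(turnstile.run(["coin", "push", "push"]))
```

Because the machine is deterministic, each (state, input) pair maps to exactly one next state, so a plain dictionary lookup is enough to drive it.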

Phrase Structure Rules

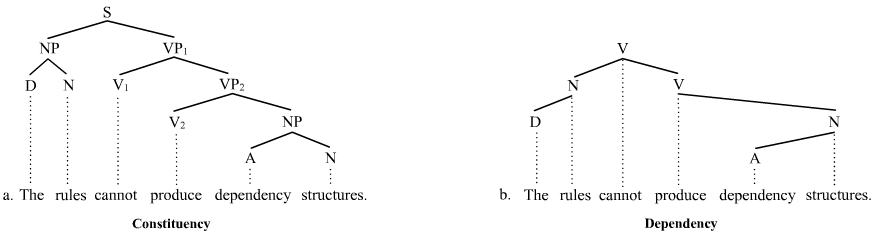

Phrase structure rules are a type of rewrite rule used to describe a given language's syntax and are closely associated with the early stages of transformational grammar, proposed by Noam Chomsky in 1957. They are used to break down a natural language sentence into its constituent parts, also known as syntactic categories, including both lexical categories (parts of speech) and phrasal categories. A grammar that uses phrase structure rules is a type of phrase structure grammar. Phrase structure rules as they are commonly employed operate according to the constituency relation, and a grammar that employs phrase structure rules is therefore a *constituency grammar*; as such, it stands in contrast to *dependency grammars*, which are based on the dependency relation.

Definition and examples: Phrase structure rules are usually of the following form: $A \to B \quad C$, meaning that the constituent A is separated into the two subconstituents B and C. Some examples for English are a ...
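As a rough illustration of how rules of the form A → B C break a constituent into subconstituents, the sketch below expands a start symbol into a bracketed constituency tree. The three rules and the one-word-per-category lexicon are assumptions chosen for the example; they are not the English examples that the truncated excerpt goes on to list.

```python
# Hypothetical toy grammar: each phrasal category has exactly one rewrite rule.
RULES = {
    "S": ["NP", "VP"],   # S  -> NP VP
    "NP": ["Det", "N"],  # NP -> Det N
    "VP": ["V", "NP"],   # VP -> V NP
}

# Hypothetical lexicon: one word per lexical category, to keep the output fixed.
LEXICON = {"Det": "the", "N": "dog", "V": "sees"}


def expand(symbol):
    """Rewrite a symbol top-down into a (label, children-or-word) tree."""
    if symbol in RULES:
        return (symbol, [expand(child) for child in RULES[symbol]])
    return (symbol, LEXICON[symbol])


def leaves(tree):
    """Collect the words at the bottom of the tree, left to right."""
    _, body = tree
    if isinstance(body, str):
        return [body]
    words = []
    for child in body:
        words.extend(leaves(child))
    return words


def bracket(tree):
    """Render the tree in labelled-bracket notation."""
    label, body = tree
    if isinstance(body, str):
        return f"[{label} {body}]"
    return f"[{label} " + " ".join(bracket(child) for child in body) + "]"


if __name__ == "__main__":
    tree = expand("S")
    print(" ".join(leaves(tree)))
    print(bracket(tree))
```

Each nested bracket in the output corresponds to one application of a rule, which is the constituency relation the text describes.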

Augmented Transition Network

An augmented transition network or ATN is a type of graph theoretic structure used in the operational definition of formal languages, used especially in parsing relatively complex natural languages, and having wide application in artificial intelligence. An ATN can, theoretically, analyze the structure of any sentence, however complicated. ATNs are modified transition networks and an extension of recursive transition networks (RTNs). ATNs build on the idea of using finite state machines (Markov model) to parse sentences. W. A. Woods in "Transition Network Grammars for Natural Language Analysis" claims that by adding a recursive mechanism to a finite state model, parsing can be achieved much more efficiently. Instead of building an automaton for a particular sentence, a collection of transition graphs is built. A grammatically correct sentence is parsed by reaching a final state in any state graph. Transitions between these graphs are simply subroutine calls from one state to any initial state on any graph in ...
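The idea of a collection of transition graphs whose arcs can call one another like subroutines can be sketched as a small recognizer. Note the simplification: this is a plain recursive transition network, without the registers and tests that make a network "augmented", and the networks, state names, and word categories below are all assumptions invented for the example.

```python
# Hypothetical transition graphs. An arc label is either a word category
# (consumes one word) or the name of another network (a subroutine call).
NETWORKS = {
    "S": {"start": "s0", "final": {"s2"},
          "arcs": {"s0": [("NP", "s1")], "s1": [("VP", "s2")]}},
    "NP": {"start": "n0", "final": {"n2"},
           "arcs": {"n0": [("Det", "n1")], "n1": [("N", "n2")]}},
    "VP": {"start": "v0", "final": {"v1", "v2"},
           "arcs": {"v0": [("V", "v1")], "v1": [("NP", "v2")]}},
}

# Hypothetical word categories.
CATEGORIES = {"the": "Det", "a": "Det", "dog": "N", "cat": "N",
              "sees": "V", "sleeps": "V"}


def traverse(net_name, words, pos):
    """Return every word position at which net_name can finish, starting at pos."""
    net = NETWORKS[net_name]
    pending = [(net["start"], pos)]
    ends = set()
    while pending:
        state, i = pending.pop()
        if state in net["final"]:
            ends.add(i)
        for label, target in net["arcs"].get(state, []):
            if label in NETWORKS:
                # Subroutine call into another graph, resuming at each exit point.
                for j in traverse(label, words, i):
                    pending.append((target, j))
            elif i < len(words) and CATEGORIES.get(words[i]) == label:
                pending.append((target, i + 1))
    return ends


def accepts(sentence):
    """True if the S network reaches a final state exactly at the end of the input."""
    words = sentence.lower().split()
    return len(words) in traverse("S", words, 0)


if __name__ == "__main__":
    for s in ["the dog sees a cat", "the dog sleeps", "dog the sees"]:
        print(s, "->", accepts(s))
```

The toy networks contain no left-recursive calls, so the recursion always terminates; a grammar with left recursion would need extra bookkeeping that this sketch omits.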

William Aaron Woods

William Aaron Woods (born June 17, 1942), generally known as Bill Woods, is a researcher in natural language processing, continuous speech understanding, knowledge representation, and knowledge-based search technology. He is currently a Software Engineer at Google.

Education: Woods received a bachelor's degree from Ohio Wesleyan University (1964) and a master's (1965) and Ph.D. (1968) in Applied Mathematics from Harvard University, where he then served as an Assistant Professor and later as a Gordon McKay Professor of the Practice of Computer Science.

Research: Woods built one of the first natural language question answering systems (LUNAR) to answer questions about the Apollo 11 moon rocks for the NASA Manned Spacecraft Center while he was at Bolt Beranek and Newman (BBN) in Cambridge, Massachusetts. At BBN, he was a Principal Scientist and manager of the Artificial Intelligence Department in the 1970s and early 1980s. He was the principal investigator for BBN's early work in natur ...

Janet Kolodner

Janet Lynne Kolodner is an American cognitive scientist and learning scientist and Regents' Professor Emerita in the School of Interactive Computing, College of Computing at the Georgia Institute of Technology. She was Founding Editor in Chief of *The Journal of the Learning Sciences* and served in that role for 19 years. She was Founding Executive Officer of the International Society of the Learning Sciences (ISLS). From August 2010 through July 2014, she was a program officer at the National Science Foundation and headed up the Cyberlearning and Future Learning Technologies program (originally called Cyberlearning: Transforming Education). Since finishing at NSF, she has been working toward a set of projects that will integrate learning technologies coherently to support disciplinary and everyday learning, support project-based pedagogy that works, and connect to the best in curriculum for active learning. As of July 2020, she is a Professor of the Practice at the Lynch Schoo ...

Wendy Lehnert

Wendy Grace Lehnert is an American computer scientist specializing in natural language processing and known for her pioneering use of machine learning in natural language processing. She is a professor emerita at the University of Massachusetts Amherst.

Education and career: Lehnert earned a bachelor's degree in mathematics from Portland State University in 1972, and a master's degree from Yeshiva University in 1974. She became a student of Roger Schank at Yale University, completing her Ph.D. there in 1977 with a dissertation on *The Process of Question Answering*, and was hired by Yale as an assistant professor. She moved to the University of Massachusetts Amherst in 1982. At Amherst, her doctoral students have included Claire Cardie and Ellen Riloff. She retired in 2011.

Books: Lehnert has written both scholarly and popular books on computing, including:

* *The Process of Question Answering: A Computer Simulation of Cognition* (L. Erlbaum Associates, 1978)
* Light on the Web ...

Robert Wilensky

Robert Wilensky (26 March 1951 – 15 March 2013) was an American computer scientist and emeritus professor at the UC Berkeley School of Information, with his main focus of research in artificial intelligence.

Academic career: In 1971, Wilensky received his bachelor's degree in mathematics from Yale University, and in 1978, a Ph.D. in computer science from the same institution. After finishing his thesis, "Understanding Goal-Based Stories", Wilensky joined the faculty of the EECS Department at UC Berkeley. In 1986, he worked as the doctoral advisor of Peter Norvig, who later co-authored the standard textbook of the field, *Artificial Intelligence: A Modern Approach*. From 1993 to 1997, Wilensky was the Berkeley Computer Science Division Chair. During this time, he also served as director of the Berkeley Cognitive Science Program, director of the Berkeley Artificial Intelligence Research Project, and board member of the International Computer Science Institute. In 1997 ...

Yale University

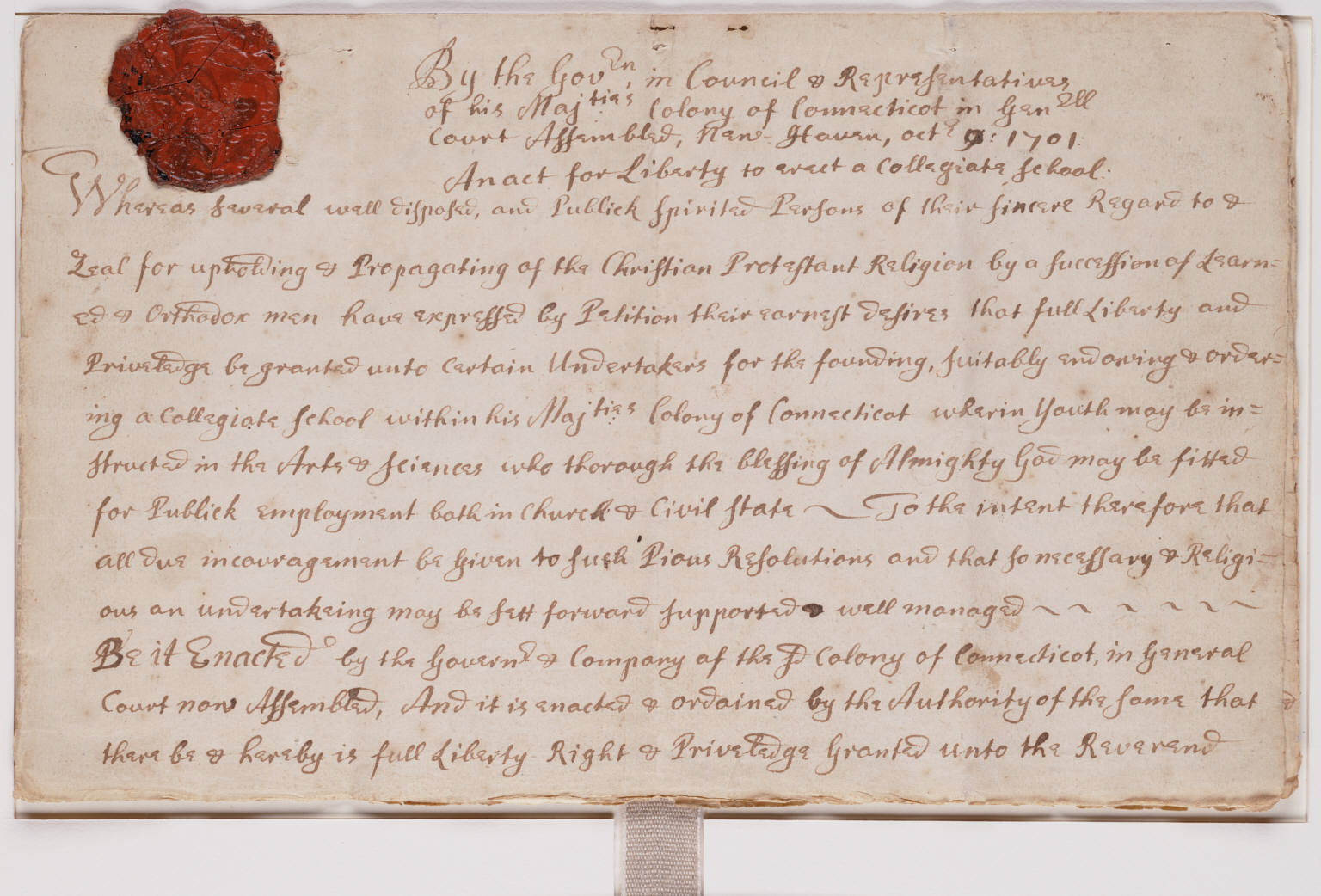

Yale University is a private research university in New Haven, Connecticut. Established in 1701 as the Collegiate School, it is the third-oldest institution of higher education in the United States and among the most prestigious in the world. It is a member of the Ivy League. Chartered by the Connecticut Colony, the Collegiate School was established in 1701 by clergy to educate Congregational ministers before moving to New Haven in 1716. Originally restricted to theology and sacred languages, the curriculum began to incorporate humanities and sciences by the time of the American Revolution. In the 19th century, the college expanded into graduate and professional instruction, awarding the first PhD in the United States in 1861 and organizing as a university in 1887. Yale's faculty and student populations grew after 1890 with rapid expansion of the physical campus and scientific research. Yale is organized into fourteen constituent schools: the original undergraduate col ...

Sydney Lamb

Sydney MacDonald Lamb (born May 4, 1929, in Denver, Colorado) is an American linguist and professor at Rice University, whose stratificational grammar is a significant alternative theory to Chomsky's transformational grammar. He has specialized in Neurocognitive Linguistics and a stratificational approach to language understanding. Lamb earned his Ph.D. from the University of California, Berkeley in 1958 and taught there from 1956 to 1964. His dissertation was a grammar of the Uto-Aztecan language Mono, under the direction of Mary Haas and Murray B. Emeneau. In 1964, he began teaching at Yale University before joining Semionics Associates in Berkeley, California in 1977. Lamb did research in North American Indian languages, specifically those geographically centered on California. His contributions have been wide-ranging, including those to historical linguistics, computational linguistics, and the theory of linguistic structure. His work led to innovative designs of ...