Newton–Cotes Formulas

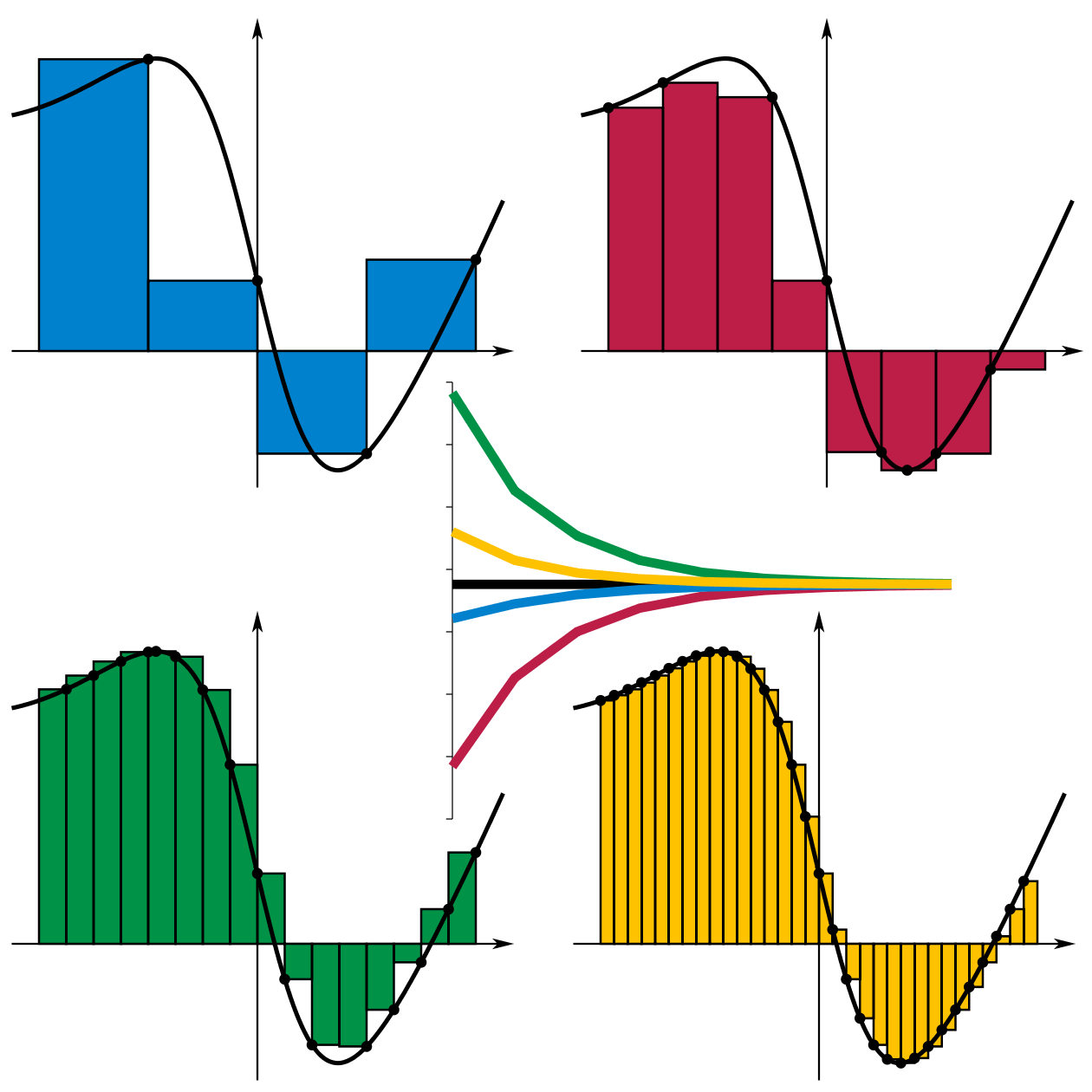

In numerical analysis, the Newton–Cotes formulas, also called the Newton–Cotes quadrature rules or simply Newton–Cotes rules, are a group of formulas for numerical integration (also called *quadrature*) based on evaluating the integrand at equally spaced points. They are named after Isaac Newton and Roger Cotes. Newton–Cotes formulas can be useful if the value of the integrand is given only at equally spaced points. If it is possible to change the points at which the integrand is evaluated, then other methods such as Gaussian quadrature and Clenshaw–Curtis quadrature are probably more suitable.

Description

It is assumed that the value of a function $f$ defined on $[a, b]$ is known at $n + 1$ equally spaced points $a \le x_0 < x_1 < \dots < x_n \le b$. There are two classes of Newton–Cotes quadrature: they are called "closed" when $x_0 = a$ and $x_n = b$, i.e. they use the function values at the interval endpoints, and "open" when $x_0 > a$ and $x_n < b$, i.e. they do not use the function values at the endpoints.
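
As a sketch of where the closed-rule weights come from, the Python function below (a hypothetical helper, `closed_newton_cotes_weights`, written only for illustration) demands that the rule $h \sum_i w_i f(x_i)$ integrate $1, t, \dots, t^n$ exactly on the nodes $t_i = i$, which is equivalent to integrating each Lagrange basis polynomial over $[x_0, x_n]$. It assumes a unit step (multiply by the actual $h$) and solves the small moment system by plain Gauss–Jordan elimination in exact rational arithmetic rather than calling a library solver.

```python
from fractions import Fraction


def closed_newton_cotes_weights(n):
    """Weights w_0..w_n (in units of the step h) of the closed (n+1)-point
    Newton-Cotes rule: integral over [x_0, x_n] ~= h * sum(w_i * f(x_i)).

    The weights are fixed by demanding exactness for 1, t, ..., t^n on the
    nodes t_i = i (step h = 1): sum_i w_i * i**k = n**(k+1) / (k+1).
    """
    size = n + 1
    # Augmented moment system [A | rhs] in exact rational arithmetic.
    rows = [
        [Fraction(i) ** k for i in range(size)] + [Fraction(n) ** (k + 1) / (k + 1)]
        for k in range(size)
    ]
    # Gauss-Jordan elimination; A is a transposed Vandermonde matrix on
    # distinct nodes, so it is nonsingular and a nonzero pivot always exists.
    for col in range(size):
        pivot = next(r for r in range(col, size) if rows[r][col] != 0)
        rows[col], rows[pivot] = rows[pivot], rows[col]
        p = rows[col][col]
        rows[col] = [v / p for v in rows[col]]
        for r in range(size):
            if r != col and rows[r][col] != 0:
                factor = rows[r][col]
                rows[r] = [x - factor * y for x, y in zip(rows[r], rows[col])]
    # After elimination, row i reads w_i = rhs_i.
    return [row[-1] for row in rows]
```

For example, `closed_newton_cotes_weights(2)` gives `[Fraction(1, 3), Fraction(4, 3), Fraction(1, 3)]` (Simpson's rule), and `closed_newton_cotes_weights(4)` gives 14/45, 64/45, 8/15, 64/45, 14/45, i.e. $\frac{2}{45}[7, 32, 12, 32, 7]$ (Boole's rule).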

Simpson Rule

In numerical integration, Simpson's rules are several approximations for definite integrals, named after Thomas Simpson (1710–1761). The most basic of these rules, called Simpson's 1/3 rule, or just Simpson's rule, reads

$$\int_a^b f(x)\,dx \approx \frac{b-a}{6}\left[f(a) + 4f\left(\frac{a+b}{2}\right) + f(b)\right].$$

In German and some other languages, it is named after Johannes Kepler, who derived it in 1615 after seeing it used for wine barrels (barrel rule, *Keplersche Fassregel*). The approximate equality in the rule becomes exact if $f$ is a polynomial up to and including 3rd degree. If the 1/3 rule is applied to $n$ equal subdivisions of the integration range $[a, b]$ ($n$ even), one obtains the composite Simpson's 1/3 rule. Points inside the integration range are given alternating weights 4/3 and 2/3 (in units of the step $h = (b-a)/n$). Simpson's 3/8 rule, also called Simpson's second rule, requires one more function evaluation inside the integration range and gives lower error bounds, but does not improve on the order of the error. If the 3/8 rule is applied to $n$ equal subdivisions of the integration range $[a, b]$, one obtains the composite Simpson's 3/8 rule.
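
A minimal Python sketch of the composite 1/3 rule follows; `composite_simpson` is a hypothetical name, and the function assumes $n$ is even so that the subintervals pair up into Simpson panels. Interior nodes alternate between weight 4 and weight 2 before the common factor $h/3$ is applied, which is the 4/3, 2/3 pattern described above.

```python
def composite_simpson(f, a, b, n):
    """Composite Simpson's 1/3 rule on n equal subintervals of [a, b]."""
    if n <= 0 or n % 2:
        raise ValueError("n must be a positive even integer")
    h = (b - a) / n
    total = f(a) + f(b)  # endpoints carry weight 1
    for i in range(1, n):
        # Odd interior nodes get weight 4, even interior nodes weight 2
        # (4h/3 and 2h/3 after the final h/3 scaling).
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3
```

Because the rule is exact for cubics, `composite_simpson(lambda x: x**3, 0.0, 2.0, 4)` returns 4.0, the exact value of $\int_0^2 x^3\,dx$.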

Interpolation

In the mathematical field of numerical analysis, interpolation is a type of estimation, a method of constructing (finding) new data points within the range of a discrete set of known data points. In engineering and science, one often has a number of data points, obtained by sampling or experimentation, which represent the values of a function for a limited number of values of the independent variable. It is often required to interpolate, that is, to estimate the value of that function for an intermediate value of the independent variable. A closely related problem is the approximation of a complicated function by a simple function. Suppose the formula for some given function is known, but too complicated to evaluate efficiently. A few data points from the original function can be interpolated to produce a simpler function which is still fairly close to the original. The resulting gain in simplicity may outweigh the loss from interpolation error and give better performance.
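
To make "estimate the value of that function for an intermediate value" concrete, here is a sketch of piecewise-linear interpolation, one of the simplest schemes; the text above does not single out a method, so this choice and the name `linear_interpolate` are assumptions for illustration. It locates the bracketing pair of samples with the standard-library `bisect` module and draws a straight line between them.

```python
from bisect import bisect_right


def linear_interpolate(xs, ys, x):
    """Piecewise-linear interpolation through (xs[i], ys[i]).

    xs must be strictly increasing with at least two entries; x must lie in
    [xs[0], xs[-1]] (this sketch does not extrapolate).
    """
    if not xs[0] <= x <= xs[-1]:
        raise ValueError("x lies outside the sampled range")
    # Right end of the bracketing interval [xs[j-1], xs[j]]; clamp at the last node.
    j = min(bisect_right(xs, x), len(xs) - 1)
    x0, x1 = xs[j - 1], xs[j]
    y0, y1 = ys[j - 1], ys[j]
    t = (x - x0) / (x1 - x0)  # fractional position of x inside the interval
    return y0 + t * (y1 - y0)
```

With samples `xs = [0.0, 1.0, 2.0]` and `ys = [0.0, 10.0, 40.0]`, the estimate at `x = 1.5` is the midpoint of 10 and 40, namely 25.0.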

Quadrature (mathematics)

In mathematics, quadrature is a historical term which means the process of determining area. This term is still used nowadays in the context of differential equations, where "solving an equation by quadrature" or "reduction to quadrature" means expressing its solution in terms of integrals. Quadrature problems served as one of the main sources of problems in the development of calculus, and they introduced important topics in mathematical analysis.

History

Antiquity

Greek mathematicians understood the determination of an area of a figure as the process of geometrically constructing a square having the same area (*squaring*), thus the name *quadrature* for this process. The Greek geometers were not always successful (see squaring the circle), but they did carry out quadratures of some figures whose sides were not simply line segments, such as the lune of Hippocrates and the parabola. By a certain Greek tradition, these constructions had to be performed using only a compass and straightedge.

Numerical Integration

In analysis, numerical integration comprises a broad family of algorithms for calculating the numerical value of a definite integral; by extension, the term is also sometimes used to describe the numerical solution of differential equations. This article focuses on calculation of definite integrals. The term numerical quadrature (often abbreviated to *quadrature*) is more or less a synonym for *numerical integration*, especially as applied to one-dimensional integrals. Some authors refer to numerical integration over more than one dimension as cubature; others take *quadrature* to include higher-dimensional integration. The basic problem in numerical integration is to compute an approximate solution to a definite integral

$$\int_a^b f(x)\,dx$$

to a given degree of accuracy. If $f(x)$ is a smooth function integrated over a small number of dimensions, and the domain of integration is bounded, there are many methods for approximating the integral to the desired precision.
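
One common way to aim for "a given degree of accuracy" is to refine a rule until successive estimates agree. The sketch below (hypothetical function `integrate_to_tolerance`) repeatedly halves the step of the composite trapezoidal rule, reusing the previous sum so that only the new midpoints are evaluated, and stops once two successive estimates differ by less than `tol`. That stopping test is a heuristic assumption, not a guaranteed error bound; it can stop early for integrands whose coarse samples happen to agree.

```python
import math


def integrate_to_tolerance(f, a, b, tol=1e-8, max_halvings=20):
    """Refine the composite trapezoidal rule by halving the step until two
    successive estimates differ by less than tol (a heuristic stopping test)."""
    n = 1                              # current number of subintervals
    h = b - a                          # current step size
    estimate = h * (f(a) + f(b)) / 2   # one-panel trapezoidal estimate
    for _ in range(max_halvings):
        # Halving the step only adds the midpoints of the current subintervals,
        # so T(h/2) = T(h)/2 + (h/2) * sum of f at those midpoints.
        midpoints = sum(f(a + (k + 0.5) * h) for k in range(n))
        refined = estimate / 2 + (h / 2) * midpoints
        if abs(refined - estimate) < tol:
            return refined
        estimate, n, h = refined, 2 * n, h / 2
    raise RuntimeError("tolerance not reached within max_halvings refinements")


approx = integrate_to_tolerance(math.sin, 0.0, math.pi)  # exact value is 2
```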

Rectangle Method

In mathematics, a Riemann sum is a certain kind of approximation of an integral by a finite sum. It is named after nineteenth-century German mathematician Bernhard Riemann. One very common application is approximating the area of functions or lines on a graph, but also the length of curves and other approximations. The sum is calculated by partitioning the region into shapes (rectangles, trapezoids, parabolas, or cubics) that together form a region that is similar to the region being measured, then calculating the area for each of these shapes, and finally adding all of these small areas together. This approach can be used to find a numerical approximation for a definite integral even if the fundamental theorem of calculus does not make it easy to find a closed-form solution. Because the region filled by the small shapes is usually not exactly the same shape as the region being measured, the Riemann sum will differ from the area being measured. This error can be reduced by dividing up the region more finely, using smaller and smaller shapes.
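
For the rectangle case, the sketch below (hypothetical `riemann_sum`) uses $n$ equal-width rectangles and lets the caller choose where each rectangle's height is sampled: at the left end, the right end, or the midpoint of its subinterval. Equal widths are an assumption made for simplicity; a general Riemann sum allows an arbitrary partition.

```python
def riemann_sum(f, a, b, n, rule="midpoint"):
    """Approximate the integral of f over [a, b] with n equal-width rectangles.

    rule picks the sample point inside each subinterval:
    'left', 'right' or 'midpoint'.
    """
    offsets = {"left": 0.0, "right": 1.0, "midpoint": 0.5}
    if rule not in offsets:
        raise ValueError(f"unknown rule: {rule!r}")
    h = (b - a) / n
    s = offsets[rule]
    # Each rectangle has width h and height f at its chosen sample point.
    return h * sum(f(a + (i + s) * h) for i in range(n))
```

For $f(x) = x$ on $[0, 1]$ with $n = 4$, the left, right, and midpoint sums are 0.375, 0.625, and 0.5. The midpoint sum is exact here because it integrates linear functions exactly, and the average of the left and right sums equals the trapezoidal estimate.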

Abramowitz And Stegun

*Abramowitz and Stegun* (*AS*) is the informal name of a 1964 mathematical reference work edited by Milton Abramowitz and Irene Stegun of the United States National Bureau of Standards (NBS), now the *National Institute of Standards and Technology* (NIST). Its full title is *Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables*. A digital successor to the Handbook was released as the "Digital Library of Mathematical Functions" (DLMF) on 11 May 2010, along with a printed version, the *NIST Handbook of Mathematical Functions*, published by Cambridge University Press.

Overview

Since it was first published in 1964, the 1046-page *Handbook* has been one of the most comprehensive sources of information on special functions, containing definitions, identities, approximations, plots, and tables of values of numerous functions used in virtually all fields of applied mathematics. The notation used in the *Handbook* is the *de facto* standard for much of applied mathematics.

Boole's Rule

In mathematics, Boole's rule, named after George Boole, is a method of numerical integration.

Formula

Simple Boole's Rule

It approximates an integral

$$\int_a^b f(x)\,dx$$

by using the values of $f$ at five equally spaced points:

$$x_0 = a,\quad x_1 = x_0 + h,\quad x_2 = x_0 + 2h,\quad x_3 = x_0 + 3h,\quad x_4 = x_0 + 4h = b.$$

It is expressed thus in Abramowitz and Stegun:

$$\int_{x_0}^{x_4} f(x)\,dx = \frac{2h}{45}\bigl[7f(x_0) + 32f(x_1) + 12f(x_2) + 32f(x_3) + 7f(x_4)\bigr] + \text{error term},$$

where the error term is

$$-\frac{8 f^{(6)}(\xi)\, h^7}{945}$$

for some number $\xi$ between $x_0$ and $x_4$, where $945 = 1 \times 3 \times 5 \times 7 \times 9$.

It is often known as Bode's rule, due to a typographical error that propagated from Abramowitz and Stegun.

The following constitutes a very simple implementation of the method in Common Lisp which ignores the error term:

```lisp
(defun integrate-booles-rule (f x1 x5)
  "Calculates the Boole's rule numerical integral of the function F in the closed
interval extending from inclusive X1 to inclusive X5 without error term inclusion."
  (let* ((h (/ (- x5 x1) 4))                 ; spacing of the five points X1..X5
         (x2 (+ x1 h)) (x3 (+ x2 h)) (x4 (+ x3 h)))
    (* (/ (* 2 h) 45)
       (+ (* 7 (funcall f x1)) (* 32 (funcall f x2)) (* 12 (funcall f x3))
          (* 32 (funcall f x4)) (* 7 (funcall f x5))))))
```
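
The text gives only the single-panel rule. As an assumed extension for illustration, the same weights can be applied panel by panel over $n$ equal subintervals ($n$ a multiple of 4), in the same way the composite Simpson and trapezoidal rules are built; `composite_boole` is a hypothetical name. Since the error term involves $f^{(6)}$, the rule is exact for polynomials of degree at most 5, which the usage below relies on.

```python
def composite_boole(f, a, b, n):
    """Composite Boole's rule on n equal subintervals of [a, b].

    n must be a positive multiple of 4; each panel of four subintervals
    uses (2h/45) * [7, 32, 12, 32, 7].
    """
    if n <= 0 or n % 4:
        raise ValueError("n must be a positive multiple of 4")
    h = (b - a) / n
    weights = (7, 32, 12, 32, 7)
    total = 0.0
    for start in range(0, n, 4):
        # Panel covering nodes start .. start + 4 (shared endpoints are
        # counted once per panel, so they accumulate 7 + 7 = 14).
        total += sum(w * f(a + (start + j) * h) for j, w in enumerate(weights))
    return 2 * h * total / 45
```

For instance, `composite_boole(lambda x: x**5, 0.0, 1.0, 4)` reproduces $\int_0^1 x^5\,dx = 1/6$ up to rounding.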

Trapezoidal Rule

In calculus, the trapezoidal rule (also known as the trapezoid rule or trapezium rule; see Trapezoid for more information on terminology) is a technique for approximating the definite integral

$$\int_a^b f(x)\,dx.$$

The trapezoidal rule works by approximating the region under the graph of the function $f(x)$ as a trapezoid and calculating its area. It follows that

$$\int_a^b f(x)\,dx \approx (b-a)\cdot\frac{f(a)+f(b)}{2}.$$

The trapezoidal rule may be viewed as the result obtained by averaging the left and right Riemann sums, and is sometimes defined this way. The integral can be approximated even better by partitioning the integration interval, applying the trapezoidal rule to each subinterval, and summing the results. In practice, this "chained" (or "composite") trapezoidal rule is usually what is meant by "integrating with the trapezoidal rule". Let $\{x_k\}$ be a partition of $[a, b]$ such that $a = x_0 < x_1 < \cdots < x_{N-1} < x_N = b$, and let $\Delta x_k = x_k - x_{k-1}$ be the length of the $k$-th subinterval. Then

$$\int_a^b f(x)\,dx \approx \sum_{k=1}^{N} \frac{f(x_{k-1}) + f(x_k)}{2}\,\Delta x_k.$$
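
The chained rule translates almost directly into code. The sketch below (hypothetical `trapezoid`) takes tabulated samples $y_k = f(x_k)$ on a partition that need not be uniform, which matches the common situation where only measured data points are available.

```python
def trapezoid(xs, ys):
    """Composite trapezoidal rule for samples ys[k] = f(xs[k]) on a partition
    a = xs[0] < xs[1] < ... < xs[N] = b (spacing may vary)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two matching samples")
    # Sum of (f(x_{k-1}) + f(x_k)) / 2 * delta_x_k over all subintervals.
    return sum((xs[k] - xs[k - 1]) * (ys[k - 1] + ys[k]) / 2 for k in range(1, len(xs)))
```

For $f(x) = x$ sampled at $0, 1, 3$, the result is $0.5 + 4 = 4.5$, which equals $\int_0^3 x\,dx$ because the rule is exact for linear functions.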

Numerical Analysis

Numerical analysis is the study of algorithms that use numerical approximation (as opposed to symbolic manipulations) for the problems of mathematical analysis (as distinguished from discrete mathematics). It is the study of numerical methods that attempt to find approximate solutions of problems rather than exact ones. Numerical analysis finds application in all fields of engineering and the physical sciences, and in the 21st century also the life and social sciences, medicine, business and even the arts. Current growth in computing power has enabled the use of more complex numerical analysis, providing detailed and realistic mathematical models in science and engineering. Examples of numerical analysis include ordinary differential equations as found in celestial mechanics (predicting the motions of planets, stars and galaxies), numerical linear algebra in data analysis, and stochastic differential equations and Markov chains for simulating living cells in medicine and biology.

Runge's Phenomenon

In the mathematical field of numerical analysis, Runge's phenomenon is a problem of oscillation at the edges of an interval that occurs when using polynomial interpolation with polynomials of high degree over a set of equispaced interpolation points. It was discovered by Carl David Tolmé Runge (1901) when exploring the behavior of errors when using polynomial interpolation to approximate certain functions. The discovery was important because it shows that going to higher degrees does not always improve accuracy. The phenomenon is similar to the Gibbs phenomenon in Fourier series approximations.

Introduction

The Weierstrass approximation theorem states that for every continuous function $f(x)$ defined on an interval $[a, b]$ there exists a set of polynomial functions $P_n(x)$ for $n = 0, 1, 2, \ldots$, each of degree at most $n$, that approximates $f(x)$ with uniform convergence over $[a, b]$ as $n$ tends to infinity, that is,

$$\lim_{n \to \infty} \left( \max_{a \le x \le b} \left| f(x) - P_n(x) \right| \right) = 0.$$
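
A small numerical experiment illustrates the effect. The sketch below interpolates the classic example $f(x) = 1/(1 + 25x^2)$ (an assumption here; the text above does not name a test function) at $n + 1$ equispaced nodes on $[-1, 1]$ and measures the largest error on a fine grid. It evaluates the interpolant with the second barycentric form of Lagrange interpolation, chosen for numerical stability; all function names are hypothetical.

```python
def runge(x):
    """Runge's example function 1 / (1 + 25 x^2)."""
    return 1.0 / (1.0 + 25.0 * x * x)


def barycentric_interpolant(xs, ys):
    """Return a callable evaluating the polynomial through (xs[j], ys[j])
    using the second barycentric form of Lagrange interpolation."""
    weights = []
    for j, xj in enumerate(xs):
        w = 1.0
        for m, xm in enumerate(xs):
            if m != j:
                w /= xj - xm  # w_j = 1 / prod_{m != j} (x_j - x_m)
        weights.append(w)

    def p(x):
        num = den = 0.0
        for xj, yj, wj in zip(xs, ys, weights):
            if x == xj:
                return yj  # x is exactly a node: return the data value
            c = wj / (x - xj)
            num += c * yj
            den += c
        return num / den

    return p


def max_error_equispaced(n, samples=2001):
    """Largest |f - P_n| on a fine grid over [-1, 1], where P_n interpolates
    Runge's function at n + 1 equispaced nodes."""
    xs = [-1.0 + 2.0 * i / n for i in range(n + 1)]
    p = barycentric_interpolant(xs, [runge(x) for x in xs])
    grid = [-1.0 + 2.0 * k / (samples - 1) for k in range(samples)]
    return max(abs(runge(x) - p(x)) for x in grid)
```

Running `max_error_equispaced` for $n = 5, 10, 20$ shows the maximum error growing rather than shrinking as the degree increases, with the worst errors near the ends of the interval.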

Lagrange Polynomial

In numerical analysis, the Lagrange interpolating polynomial is the unique polynomial of lowest degree that interpolates a given set of data. Given a data set of coordinate pairs $(x_j, y_j)$ with $0 \le j \le k$, the $x_j$ are called *nodes* and the $y_j$ are called *values*. The Lagrange polynomial $L(x)$ has degree $\le k$ and assumes each value at the corresponding node, $L(x_j) = y_j$. Although named after Joseph-Louis Lagrange, who published it in 1795, the method was first discovered in 1779 by Edward Waring. It is also an easy consequence of a formula published in 1783 by Leonhard Euler. Uses of Lagrange polynomials include the Newton–Cotes method of numerical integration and Shamir's secret sharing scheme in cryptography. For equispaced nodes, Lagrange interpolation is susceptible to Runge's phenomenon of large oscillation.

Definition

Given a set of $k + 1$ nodes $\{x_0, x_1, \ldots, x_k\}$, which must all be distinct ($x_j \ne x_m$ for indices $j \ne m$), the Lagrange basis for polynomials of degree $\le k$ for those nodes is the set of polynomials $\{\ell_0(x), \ell_1(x), \ldots, \ell_k(x)\}$, each of degree $k$, with $\ell_j(x_m) = 1$ if $m = j$ and $\ell_j(x_m) = 0$ otherwise. Explicitly, $\ell_j(x) = \prod_{m \ne j} \frac{x - x_m}{x_j - x_m}$, and the interpolating polynomial is $L(x) = \sum_{j=0}^{k} y_j\,\ell_j(x)$.
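
A direct transcription of these formulas into Python (hypothetical helper `lagrange_interpolate`) evaluates each basis polynomial $\ell_j$ as a product and sums $y_j\,\ell_j(x)$. It costs $O(k^2)$ operations per evaluation point; the aim is to mirror the definition, not to be the most efficient evaluation scheme.

```python
def lagrange_interpolate(xs, ys, x):
    """Evaluate L(x) = sum_j ys[j] * l_j(x), where
    l_j(x) = prod_{m != j} (x - xs[m]) / (xs[j] - xs[m])."""
    if len(set(xs)) != len(xs):
        raise ValueError("nodes must be distinct")
    total = 0.0
    for j, (xj, yj) in enumerate(zip(xs, ys)):
        basis = 1.0  # l_j(x), built up one factor at a time
        for m, xm in enumerate(xs):
            if m != j:
                basis *= (x - xm) / (xj - xm)
        total += yj * basis
    return total
```

The nodes $0, 1, 2$ with values $1, 3, 7$ come from $x^2 + x + 1$, so the interpolant reproduces that quadratic: `lagrange_interpolate([0.0, 1.0, 2.0], [1.0, 3.0, 7.0], 3.0)` returns 13.0.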