
Network Protocol Virtualization

Network Protocol Virtualization or Network Protocol Stack Virtualization is a concept of providing network connections as a service, without requiring application developers to decide the exact composition of the communication stack. The concept of Network Protocol Virtualization (NPV) was first proposed by Heuschkel et al. in 2015 as a rough sketch, as part of a transition concept for network protocol stacks. The concept evolved and was published in a deployable state in 2018. The key idea is to decouple applications from their communication stacks. Today, the socket API requires application developers to compose the communication stack by hand, choosing between IPv4/IPv6 and UDP/TCP. NPV proposes that the network protocol stack be tailored to the observed network environment (e.g., the link-layer technology or current network performance). Thus, the network stack should be composed not at development time but at runtime, and it must be possible to adapt it when needed. Additionally, the de ...
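The following minimal Python sketch illustrates the decoupling idea: the stack is chosen at runtime from observed conditions instead of being hard-coded by the developer. The `NetworkEnvironment` fields, the `select_stack` policy, and its 5% loss threshold are hypothetical illustrations, not part of the published NPV design. Only the standard `socket` constants are real.

```python
import socket
from dataclasses import dataclass

@dataclass
class NetworkEnvironment:
    """Hypothetical observations an NPV-style runtime might collect."""
    ipv6_available: bool
    loss_rate: float          # fraction of packets lost, 0.0 to 1.0
    needs_reliability: bool   # requirement stated by the application

def select_stack(env: NetworkEnvironment) -> tuple:
    """Compose (address family, socket type) at runtime.

    Illustrative policy only: prefer IPv6 when available, and use TCP when
    the application needs reliable delivery or the link looks lossy.
    """
    family = socket.AF_INET6 if env.ipv6_available else socket.AF_INET
    use_tcp = env.needs_reliability or env.loss_rate > 0.05
    sock_type = socket.SOCK_STREAM if use_tcp else socket.SOCK_DGRAM
    return family, sock_type

def open_socket(env: NetworkEnvironment) -> socket.socket:
    # The application only asks for "a connection"; the stack is chosen here.
    family, sock_type = select_stack(env)
    return socket.socket(family, sock_type)

env = NetworkEnvironment(ipv6_available=False, loss_rate=0.0, needs_reliability=False)
family, sock_type = select_stack(env)
print(family.name, sock_type.name)  # AF_INET SOCK_DGRAM
```

Re-evaluating `select_stack` whenever the observations change is where runtime adaptation would hook in. A real system would also need to migrate existing connections, which this sketch does not attempt.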

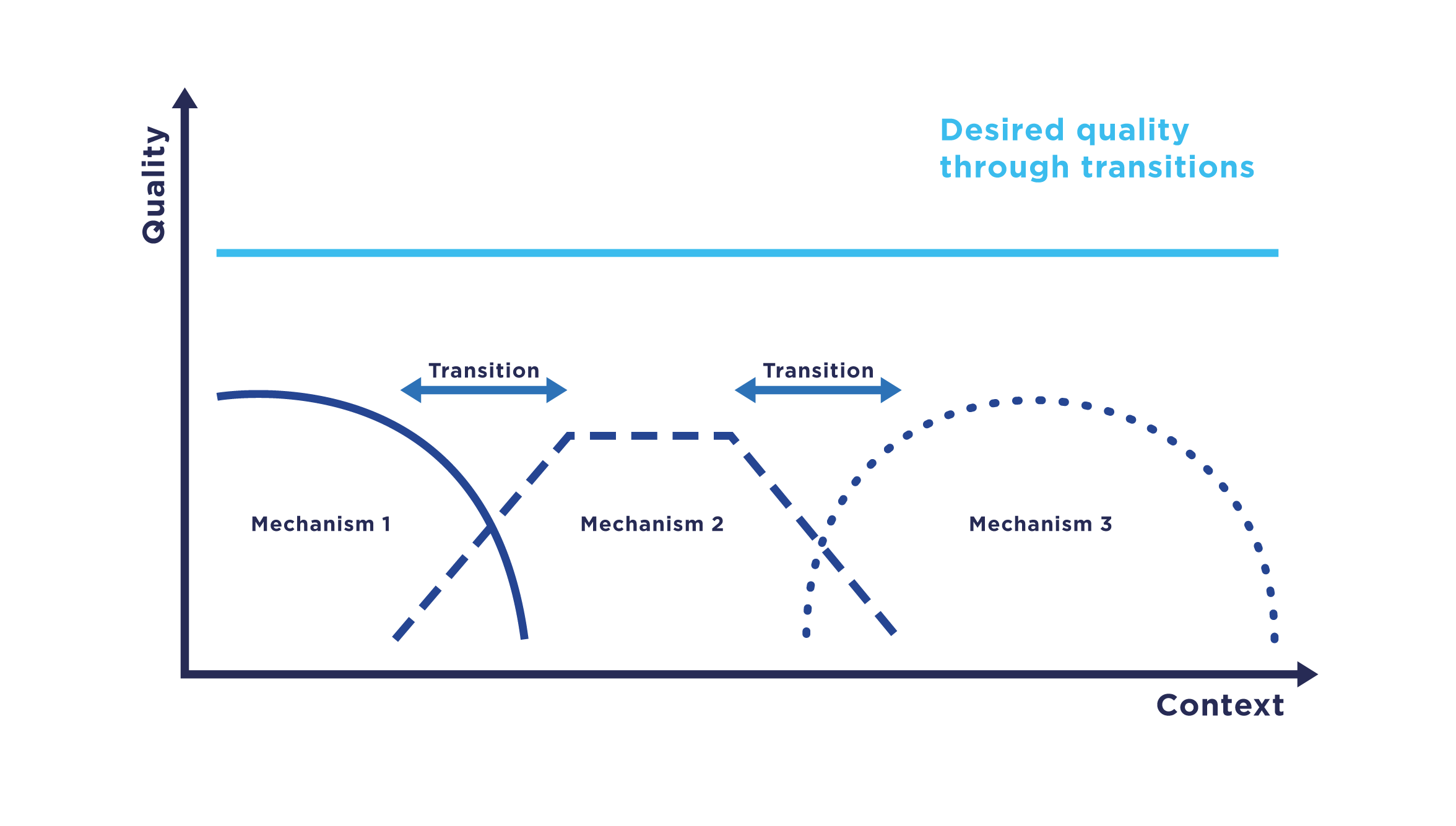

Transition (computer science)

Transition refers to a computer science paradigm in the context of communication systems that describes the change of communication mechanisms, i.e., functions of a communication system, in particular service and protocol components. In a transition, communication mechanisms within a system are replaced by functionally comparable mechanisms with the aim of ensuring the highest possible quality, e.g., as captured by the quality of service. Transitions enable communication systems to adapt to changing conditions during runtime. Such a change in conditions can, for example, be a rapid increase in the load on a certain service, caused, e.g., by large gatherings of people with mobile devices. A transition often affects multiple mechanisms at different layers of a layered communication architecture. Mechanisms are conceptual elements of a networked communication system and are linked to specific functional units, for example, a service or protocol component. In some ...
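To make "replacing a mechanism with a functionally comparable one at runtime" concrete, here is a toy Python sketch. The `TransitionManager` class, the load threshold, and the choice of two zlib compression levels as the "comparable mechanisms" are all hypothetical illustrations, not taken from the transition literature.

```python
import zlib
from typing import Callable, Dict

Mechanism = Callable[[bytes], bytes]

class TransitionManager:
    """Toy transition: swap the active mechanism for a functionally
    comparable one at runtime without changing how callers use it."""

    def __init__(self, mechanisms: Dict[str, Mechanism], active: str):
        self.mechanisms = mechanisms
        self.active = active

    def transition(self, name: str) -> None:
        if name not in self.mechanisms:
            raise KeyError(f"unknown mechanism: {name}")
        self.active = name

    def on_load(self, requests_per_second: float, threshold: float = 1000.0) -> None:
        # Hypothetical policy: under high load, trade compression ratio for CPU time.
        target = "fast" if requests_per_second > threshold else "thorough"
        if target != self.active:
            self.transition(target)

    def send(self, payload: bytes) -> bytes:
        # Callers always go through send(); the mechanism behind it may change.
        return self.mechanisms[self.active](payload)

# Two functionally comparable mechanisms: both produce zlib data that
# decompresses to the same payload, at different CPU cost.
manager = TransitionManager(
    {"thorough": lambda p: zlib.compress(p, 9), "fast": lambda p: zlib.compress(p, 1)},
    active="thorough",
)
manager.on_load(5000.0)  # load spike observed at runtime
print(manager.active)    # fast
```

The design point is that `send` stays stable while the mechanism behind it changes. Real transitions may span several layers at once, which a single swap like this does not model.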

OSI Model

The Open Systems Interconnection model (OSI model) is a conceptual model that 'provides a common basis for the coordination of ISO standards development for the purpose of systems interconnection'. In the OSI reference model, the communications between computing systems are split into seven different abstraction layers: Physical, Data Link, Network, Transport, Session, Presentation, and Application. The model partitions the flow of data in a communication system into these layers to describe networked communication, from the physical implementation of transmitting bits across a communications medium to the highest-level representation of data of a distributed application. Each intermediate layer serves a class of functionality to the layer above it and is served by the layer below it. Classes of functionality are realized in software through standardized communication protocols. Each layer in the OSI model has its own well-defined functi ...
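The layering described above can be illustrated with a toy encapsulation sketch. On the way down, each layer wraps whatever the layer above handed it. On the way up, the receiver strips the wrappers in reverse order. The string tags stand in for real headers and are a simplification: actual layers differ (the physical layer, for instance, transmits bits rather than adding a header).

```python
# The seven OSI layers, from Application (layer 7) down to Physical (layer 1).
OSI_LAYERS = [
    "Application", "Presentation", "Session", "Transport",
    "Network", "Data Link", "Physical",
]

def encapsulate(payload: str) -> str:
    """Walk down the stack; each layer wraps the unit from the layer above."""
    data = payload
    for layer in OSI_LAYERS:
        data = f"[{layer}|{data}]"
    return data

def decapsulate(unit: str) -> str:
    """Walk up the stack on the receiver, removing wrappers outermost first."""
    data = unit
    for layer in reversed(OSI_LAYERS):
        prefix = f"[{layer}|"
        if not (data.startswith(prefix) and data.endswith("]")):
            raise ValueError(f"malformed {layer} unit")
        data = data[len(prefix):-1]
    return data

wire = encapsulate("GET /index.html")
print(wire)
print(decapsulate(wire))
```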

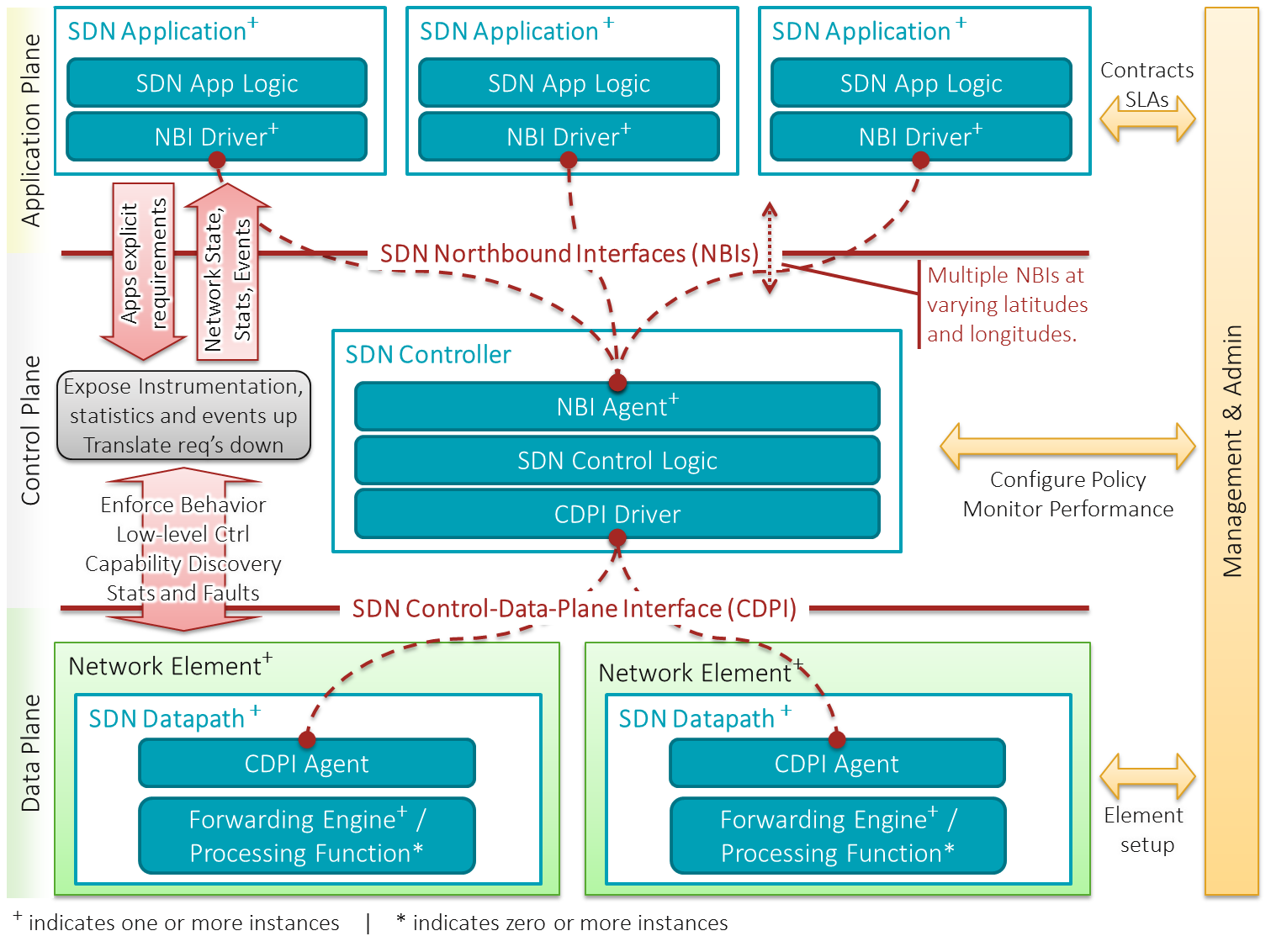

Software-defined Networking

Software-defined networking (SDN) technology is an approach to network management that enables dynamic, programmatically efficient network configuration in order to improve network performance and monitoring, making it more like cloud computing than traditional network management. SDN is meant to address the static architecture of traditional networks. SDN attempts to centralize network intelligence in one network component by separating the forwarding of network packets (data plane) from the routing process (control plane). The control plane consists of one or more controllers, which are considered the brain of the SDN network, where all of its intelligence is incorporated. However, centralization has its own drawbacks with respect to security, scalability, and elasticity, and these are the main issues of SDN. SDN was commonly associated with the OpenFlow protocol (for remote communication with network plane elements for the purpose of determining the path of network ...
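The following toy Python sketch shows the split between data plane and control plane. The switch only looks up its flow table. On a table miss, it asks the controller, which decides centrally and installs a rule. The classes and routing table are hypothetical. The pattern loosely mirrors OpenFlow's packet-in/flow-mod exchange, but this is not the OpenFlow API.

```python
from dataclasses import dataclass, field
from typing import Dict

class Controller:
    """Control plane: holds the network-wide view and decides paths."""

    def __init__(self, routes: Dict[str, Dict[str, int]]):
        self.routes = routes        # switch name -> {destination: output port}
        self.packet_in_count = 0    # how often switches asked for a decision

    def packet_in(self, switch: "Switch", dst: str) -> None:
        # Decide the path centrally, then push a forwarding rule to the switch.
        self.packet_in_count += 1
        switch.flow_table[dst] = self.routes[switch.name][dst]

@dataclass
class Switch:
    """Data plane: forwards packets by flow-table lookup only."""
    name: str
    controller: Controller
    flow_table: Dict[str, int] = field(default_factory=dict)  # destination -> port

    def forward(self, dst: str) -> int:
        if dst not in self.flow_table:
            # Table miss: defer to the control plane, which installs a rule.
            self.controller.packet_in(self, dst)
        return self.flow_table[dst]

ctrl = Controller({"s1": {"10.0.0.2": 2}})
s1 = Switch("s1", ctrl)
print(s1.forward("10.0.0.2"), s1.forward("10.0.0.2"), ctrl.packet_in_count)  # 2 2 1
```

Only the first packet reaches the controller. Later packets are handled by the switch alone. This also shows why a single central controller can become a scalability bottleneck.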

Low-power Wide-area Network

A low-power wide-area network (LPWAN or LPWA network) is a type of wireless telecommunication wide area network designed to allow long-range communications at a low bit rate among things (connected objects), such as battery-operated sensors. The low power, low bit rate, and intended use distinguish this type of network from a wireless WAN that is designed to connect users or businesses and carry more data, using more power. The LPWAN data rate ranges from 0.3 kbit/s to 50 kbit/s per channel. An LPWAN may be used to create a private wireless sensor network, but it may also be a service or infrastructure offered by a third party, allowing the owners of sensors to deploy them in the field without investing in gateway technology. Its attributes include long range: the operating range of LPWAN technology varies from a few kilometers in urban areas to over 10 km in rural settings. It can also enable effective data communication in previously infeasible indoor and underground locations. ...
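To put the 0.3 to 50 kbit/s range in perspective, the following worked calculation shows how long it takes to clock out a small sensor payload at each end of that range. The 12-byte payload is an arbitrary example. The function ignores preambles, headers, coding overhead, and duty-cycle limits, so real airtime is longer.

```python
def airtime_seconds(payload_bytes: int, rate_kbit_s: float) -> float:
    """Raw time to transmit a payload at a given bit rate (no overhead)."""
    bits = payload_bytes * 8
    return bits / (rate_kbit_s * 1000)

# A hypothetical 12-byte sensor reading at both ends of the LPWAN range.
for rate in (0.3, 50.0):
    print(f"{rate:>5} kbit/s: {airtime_seconds(12, rate) * 1000:.2f} ms")
```

At these rates, 96 bits take about 320 ms at 0.3 kbit/s and under 2 ms at 50 kbit/s. This is why LPWAN applications favor small, infrequent messages.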

Internet of Things

The Internet of things (IoT) describes physical objects (or groups of such objects) with sensors, processing ability, software and other technologies that connect and exchange data with other devices and systems over the Internet or other communications networks. The term has been considered a misnomer because devices do not need to be connected to the public Internet; they only need to be connected to a network and be individually addressable. The field has evolved due to the convergence of multiple technologies, including ubiquitous computing, commodity sensors, increasingly powerful embedded systems, and machine learning (Hu, J.; Niu, H.; Carrasco, J.; Lennox, B.; Arvin, F., "Fault-tolerant cooperative navigation of networked UAV swarms for forest fire monitoring", Aerospace Science and Technology, 2022). Traditional fields of embedded systems, wireless sensor networks, control systems, automation (including home and building automation), independently ...
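The point that devices need only a network and an individual address, not the public Internet, can be shown with a small UDP sketch on the loopback interface. The device ID, the JSON message format, and the `send_reading` helper are hypothetical illustrations, not a standard IoT protocol.

```python
import json
import socket

def send_reading(sock, collector_addr, device_id, value):
    """Send one sensor reading as a JSON datagram to the collector's address."""
    message = json.dumps({"device": device_id, "temperature_c": value})
    sock.sendto(message.encode("utf-8"), collector_addr)

# Both endpoints live on the loopback network: a network and an address are
# all the device needs; the public Internet is never involved.
collector = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
collector.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
collector.settimeout(2.0)         # avoid blocking forever if nothing arrives

device = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
device.bind(("127.0.0.1", 0))
device_addr = device.getsockname()  # the device's individual address

send_reading(device, collector.getsockname(), "sensor-42", 21.5)
data, sender = collector.recvfrom(1024)
reading = json.loads(data)
print(f"{reading['device']} at {sender}: {reading['temperature_c']} C")

device.close()
collector.close()
```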

Application Virtualization

Application virtualization is a software technology that encapsulates computer programs from the underlying operating system on which they are executed. A fully virtualized application is not installed in the traditional sense, although it is still executed as if it were. At runtime, the application behaves as if it were directly interfacing with the original operating system and all the resources managed by it, but it can be isolated or sandboxed to varying degrees. In this context, the term "virtualization" refers to the artifact being encapsulated (the application), which is quite different from its meaning in hardware virtualization, where it refers to the artifact being abstracted (the physical hardware).

Full application virtualization requires a virtualization layer. Application virtualization layers replace part of the runtime environment normally provided by the operating system. The layer intercepts all disk operations of virtualized applications and transparently redire ...
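The following toy Python sketch shows what "intercepting disk operations" can mean. The application opens what it believes are host paths, and the layer silently maps them into a private sandbox directory. The `VirtualizationLayer` class and the sandbox-directory redirection target are assumptions made for illustration. Real products intercept at the operating-system API level rather than through a wrapper object like this.

```python
import os
import tempfile

class VirtualizationLayer:
    """Toy file-system redirection for one virtualized application."""

    def __init__(self, sandbox_root: str):
        self.sandbox_root = sandbox_root

    def _redirect(self, path: str) -> str:
        # Map a host path such as /etc/app.conf to <sandbox>/etc/app.conf.
        relative = os.path.relpath(os.path.abspath(path), os.path.abspath(os.sep))
        return os.path.join(self.sandbox_root, relative)

    def open(self, path: str, mode: str = "r"):
        # The application passes host paths; the layer decides where I/O goes.
        target = self._redirect(path)
        if any(flag in mode for flag in "wax"):
            os.makedirs(os.path.dirname(target), exist_ok=True)
        return open(target, mode)

with tempfile.TemporaryDirectory() as sandbox:
    layer = VirtualizationLayer(sandbox)
    with layer.open("/etc/demo-app.conf", "w") as f:  # app "writes to /etc"
        f.write("theme=dark\n")
    with layer.open("/etc/demo-app.conf") as f:
        print(f.read().strip())  # theme=dark
    print(os.path.exists(os.path.join(sandbox, "etc", "demo-app.conf")))  # True
```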

Hardware Virtualization

Hardware virtualization is the virtualization of computers as complete hardware platforms, certain logical abstractions of their componentry, or only the functionality required to run various operating systems. Virtualization hides the physical characteristics of a computing platform from the users, presenting instead an abstract computing platform. At its origins, the software that controlled virtualization was called a "control program", but the terms "hypervisor" or "virtual machine monitor" became preferred over time.

The term "virtualization" was coined in the 1960s to refer to a virtual machine (sometimes called "pseudo machine"), a term which itself dates from the experimental IBM M44/44X system. The creation and management of virtual machines has more recently been called "platform virtualization" or "server virtualization". Platform virtualization is performed on a given hardware platform by host software (a control program), which creates a simu ...
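To illustrate a control program presenting each guest with its own abstract machine, the following toy Python sketch interprets a made-up two-instruction guest ISA. Each guest gets a private accumulator, program counter, and output device, and the host time-slices among them. The instruction set, `quantum`, and class names are hypothetical. Real hypervisors are far more involved, and many execute guest code directly on the processor rather than interpreting it.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Instruction = Tuple[str, int]  # (opcode, operand) for a made-up guest ISA

@dataclass
class GuestVM:
    """Simulated machine state that the control program gives each guest."""
    name: str
    program: List[Instruction]
    acc: int = 0                                      # accumulator register
    pc: int = 0                                       # program counter
    output: List[int] = field(default_factory=list)   # emulated output device

    @property
    def halted(self) -> bool:
        return self.pc >= len(self.program)

class ControlProgram:
    """Toy hypervisor: time-slices guests, each isolated in its own state."""

    def __init__(self, guests: List[GuestVM], quantum: int = 2):
        self.guests = guests
        self.quantum = quantum  # instructions per time slice

    def step(self, vm: GuestVM) -> None:
        op, arg = vm.program[vm.pc]
        if op == "add":
            vm.acc += arg
        elif op == "out":  # device I/O is emulated by the host
            vm.output.append(vm.acc)
        else:
            raise ValueError(f"{vm.name}: illegal instruction {op!r}")
        vm.pc += 1

    def run(self) -> None:
        while not all(vm.halted for vm in self.guests):
            for vm in self.guests:
                for _ in range(self.quantum):
                    if vm.halted:
                        break
                    self.step(vm)

a = GuestVM("A", [("add", 1), ("add", 2), ("out", 0)])
b = GuestVM("B", [("add", 10), ("out", 0), ("add", 5), ("out", 0)])
ControlProgram([a, b]).run()
print(a.output, b.output)  # [3] [10, 15]
```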

Virtualization

In computing, virtualization or virtualisation (sometimes abbreviated v12n, a numeronym) is the act of creating a virtual (rather than actual) version of something at the same abstraction level, including virtual computer hardware platforms, storage devices, and computer network resources. Virtualization began in the 1960s as a method of logically dividing the system resources provided by mainframe computers between different applications. An early and successful example is IBM CP/CMS. The control program CP provided each user with a simulated stand-alone System/360 computer. Since then, the meaning of the term has broadened.

Hardware virtualization, or platform virtualization, refers to the creation of a virtual machine that acts like a real computer with an operating system. Software executed on these virtual machines is separated from the underlying hardware resources. For example, a computer that is running Arch Linux may host a virtual ...
