Mantel Test

The Mantel test, named after Nathan Mantel, is a statistical test of the correlation between two matrices. The matrices must be of the same dimension; in most applications, they are matrices of interrelations between the same set of objects. The test was first published in 1967 by Mantel, a biostatistician at the National Institutes of Health. Accounts of it can be found in advanced statistics books (e.g., Sokal & Rohlf 1995). Usage: The test is commonly used in ecology, where the data are usually estimates of the "distance" between objects such as species of organisms. For example, one matrix might contain estimates of the genetic distances (i.e., the amount of difference between two genomes) between all possible pairs of species in the study, obtained by the methods of molecular systematics, while the other might contain estimates of the geographical distance between the range of each species and that of every other species. In this case, the hypothesis being test ...
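The idea of correlating two distance matrices can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: it assumes symmetric matrices, uses the Pearson correlation of the above-diagonal entries as the statistic, and estimates a one-sided p-value by jointly permuting the rows and columns of one matrix. The function names (`upper_triangle`, `pearson`, `mantel`) and the example distances are made up for this sketch.

```python
import math
import random


def upper_triangle(m):
    """Flatten the entries above the diagonal of a square matrix."""
    n = len(m)
    return [m[i][j] for i in range(n) for j in range(i + 1, n)]


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def mantel(a, b, permutations=999, seed=0):
    """Return (observed correlation, one-sided permutation p-value)."""
    rng = random.Random(seed)
    n = len(a)
    flat_a = upper_triangle(a)
    observed = pearson(flat_a, upper_triangle(b))
    order = list(range(n))
    hits = 0
    for _ in range(permutations):
        # Relabel the objects of b: rows and columns move together.
        rng.shuffle(order)
        permuted = [[b[order[i]][order[j]] for j in range(n)] for i in range(n)]
        if pearson(flat_a, upper_triangle(permuted)) >= observed:
            hits += 1
    # Count the observed arrangement itself so the p-value is never zero.
    return observed, (hits + 1) / (permutations + 1)


# Hypothetical geographic and genetic distances among four species.
geo = [[0, 1, 3, 6], [1, 0, 2, 5], [3, 2, 0, 3], [6, 5, 3, 0]]
gen = [[0, 2, 5, 13], [2, 0, 4, 9], [5, 4, 0, 7], [13, 9, 7, 0]]

r, p = mantel(geo, gen)
print(f"r = {r:.3f}, p = {p:.3f}")
```

Rows and columns are permuted together because the entries of a distance matrix are not independent of one another; shuffling individual cells would break the matrix structure.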

Nathan Mantel

Nathan Mantel (February 16, 1919 – May 25, 2002) was an American biostatistician best known for his work with William Haenszel, which led to the Mantel–Haenszel test and its associated estimate, the Mantel–Haenszel odds ratio. The Mantel–Haenszel procedure and its extensions allow data from several sources or groups to be combined while avoiding confounding. He spent much of his career at the National Cancer Institute and published over 380 academic papers. Later in life, Mantel was known for defending the tobacco industry against claims that passive smoking was harmful. See also: Mantel test; Logrank test. The logrank test, or log-rank test, is a hypothesis test to compare the survival distributions of two samples. It is a nonparametric test and appropriate to use when the data are right skewed and censored (technically, the censoring must be non-in ...

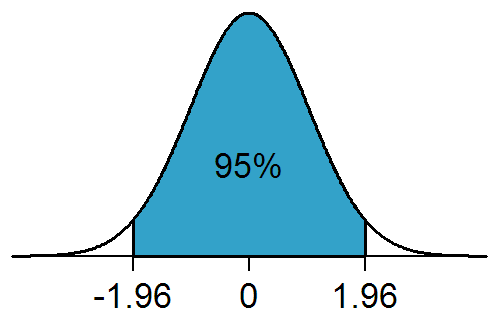

Statistical Significance

In statistical hypothesis testing, a result has statistical significance when it would be very unlikely to occur if the null hypothesis were true (that is, by chance alone). More precisely, a study's defined significance level, denoted by $\alpha$, is the probability of the study rejecting the null hypothesis, given that the null hypothesis is true; and the *p*-value of a result, $p$, is the probability of obtaining a result at least as extreme, given that the null hypothesis is true. The result is statistically significant, by the standards of the study, when $p \le \alpha$. The significance level for a study is chosen before data collection and is typically set to 5% or much lower, depending on the field of study. In any experiment or observation that involves drawing a sample from a population, there is always the possibility that an observed effect would have occurred due to sampling error alone. But if the *p*-value of an observed effect is less than (or equal to) the significanc ...
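The decision rule $p \le \alpha$ can be illustrated with a small worked case. The sketch below assumes a hypothetical coin-flip experiment (9 heads in 10 flips, null hypothesis of a fair coin) and a one-sided exact binomial p-value; the function name `binomial_p_value` and the data are illustrative only.

```python
from math import comb


def binomial_p_value(heads, flips, p_heads=0.5):
    """One-sided p-value: P(at least `heads` heads in `flips` flips) under H0."""
    return sum(
        comb(flips, k) * p_heads**k * (1 - p_heads) ** (flips - k)
        for k in range(heads, flips + 1)
    )


alpha = 0.05                   # significance level, fixed before seeing data
p = binomial_p_value(9, 10)    # probability of a result at least this extreme
significant = p <= alpha
print(f"p = {p:.4f}, significant at alpha = {alpha}: {significant}")
```

Here the p-value is 11/1024, so the result would be declared significant at the 5% level but not at a stricter level such as 1%.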

Statistical Tests

A statistical hypothesis test is a method of statistical inference used to decide whether the data at hand sufficiently support a particular hypothesis. Hypothesis testing allows us to make probabilistic statements about population parameters. History, early use: While hypothesis testing was popularized early in the 20th century, early forms were used in the 1700s. The first use is credited to John Arbuthnot (1710), followed by Pierre-Simon Laplace (1770s), in analyzing the human sex ratio at birth. Modern origins and early controversy: Modern significance testing is largely the product of Karl Pearson (*p*-value, Pearson's chi-squared test), William Sealy Gosset (Student's t-distribution), and Ronald Fisher ("null hypothesis", analysis of variance, "significance test"), while hypothesis testing was developed by Jerzy Neyman and Egon Pearson (son of Karl). Ronald Fisher began his life in statistics as a Bayesian (Zabell 1992), but Fisher soon grew disenchanted with ...

Sørensen–Dice Coefficient

The Sørensen–Dice coefficient (see below for other names) is a statistic used to gauge the similarity of two samples. It was independently developed by the botanists Thorvald Sørensen and Lee Raymond Dice, who published in 1948 and 1945 respectively. Name: The index is known by several other names, especially Sørensen–Dice index, Sørensen index and Dice's coefficient. Other variations include the "similarity coefficient" or "index", such as Dice similarity coefficient (DSC). Common alternate spellings for Sørensen are *Sorenson*, *Soerenson* and *Sörenson*, and all three can also be seen with the *–sen* ending. Other names include: F1 score; Czekanowski's binary (non-quantitative) index; measure of genetic similarity; Zijdenbos similarity index, referring to a 1994 paper of Zijdenbos et al. Formula: Sørensen's original formula was intended to be applied to discrete data. Given two sets, $X$ and $Y$, it is defined as $DSC = \frac{2|X \cap Y|}{|X| + |Y|}$, where $|X|$ and $|Y|$ ...
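For two finite sets the coefficient is twice the size of the intersection divided by the sum of the two set sizes. A minimal Python sketch follows; the function name `dice_coefficient` and the convention of returning 1.0 for two empty sets are assumptions of this example, not part of the definition above.

```python
def dice_coefficient(x, y):
    """Sørensen–Dice coefficient of two collections, treated as sets."""
    x, y = set(x), set(y)
    if not x and not y:
        # Assumed convention: two empty sets are treated as identical.
        return 1.0
    return 2 * len(x & y) / (len(x) + len(y))


# Two hypothetical species lists sharing two of their three entries.
site_a = {"oak", "birch", "pine"}
site_b = {"birch", "pine", "alder"}
print(dice_coefficient(site_a, site_b))  # 2 * 2 / (3 + 3)
```

The value ranges from 0 (no shared elements) to 1 (identical sets).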

Non-parametric Statistics

Nonparametric statistics is the branch of statistics that is not based solely on parametrized families of probability distributions (common examples of parameters are the mean and variance). Nonparametric statistics is based on either being distribution-free or having a specified distribution but with the distribution's parameters unspecified. Nonparametric statistics includes both descriptive statistics and statistical inference. Nonparametric tests are often used when the assumptions of parametric tests are violated. Definitions: The term "nonparametric statistics" has been imprecisely defined in two ways, among others. Applications and purpose: Non-parametric methods are widely used for studying populations that take on a ranked order (such as movie reviews receiving one to four stars). The use of non-parametric methods may be necessary when data have a ranking but no clear numerical interpretation, such as when assessing preferences. In terms of levels of me ...

List Of Statistical Packages

Statistical software packages are specialized computer programs for analysis in statistics and econometrics.

Open-source:

* ADaMSoft – a generalized statistical software with data mining algorithms and methods for data management
* ADMB – a software suite for non-linear statistical modeling based on C++ which uses automatic differentiation
* Chronux – for neurobiological time series data
* DAP – free replacement for SAS
* Environment for DeveLoping KDD-Applications Supported by Index-Structures (ELKI) – a software framework for developing data mining algorithms in Java
* Epi Info – statistical software for epidemiology developed by the Centers for Disease Control and Prevention (CDC); Apache 2 licensed
* Fityk – nonlinear regression software (GUI and command line)
* GNU Octave – programming language very similar to MATLAB with statistical features
* gretl – GNU Regression, Econometrics and Time-series Library
* intrinsic Noise Analyzer (iNA) – for analyzing intrinsic fluctuat ...

Null Hypothesis

In scientific research, the null hypothesis (often denoted $H_0$) is the claim that no difference or relationship exists between two sets of data or variables being analyzed. The null hypothesis is that any experimentally observed difference is due to chance alone, and an underlying causative relationship does not exist, hence the term "null". In addition to the null hypothesis, an alternative hypothesis is also developed, which claims that a relationship does exist between two variables. Basic definitions: The *null hypothesis* and the *alternative hypothesis* are types of conjectures used in statistical tests, which are formal methods of reaching conclusions or making decisions on the basis of data. The hypotheses are conjectures about a statistical model of the population, which are based on a sample of the population. The tests are core elements of statistical inference, heavily used in the interpretation of scientific experimental data, to separate scientific claims fr ...

Random Permutation

A random permutation is a random ordering of a set of objects, that is, a permutation-valued random variable. The use of random permutations is often fundamental to fields that use randomized algorithms such as coding theory, cryptography, and simulation. A good example of a random permutation is the shuffling of a deck of cards: this is ideally a random permutation of the 52 cards. Generating random permutations, entry-by-entry brute force method: One method of generating a random permutation of a set of size $n$ uniformly at random (i.e., each of the $n!$ permutations is equally likely to appear) is to generate a sequence by taking a random number between 1 and $n$ sequentially, ensuring that there is no repetition, and interpreting this sequence $(x_1, \ldots, x_n)$ as the permutation $\begin{pmatrix} 1 & 2 & 3 & \cdots & n \\ x_1 & x_2 & x_3 & \cdots & x_n \end{pmatrix}$, shown here in two-line notation. This brute-force method will require occasional retries whenever the ra ...
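The entry-by-entry brute-force method described above can be sketched as follows. The function name `brute_force_permutation` is made up for this illustration, and the retry loop is written for clarity rather than efficiency; in practice Python's `random.shuffle` produces a random ordering without any retries.

```python
import random


def brute_force_permutation(n, rng=None):
    """Draw numbers from 1..n one at a time, retrying whenever a draw repeats."""
    rng = rng or random.Random()
    seen = set()
    sequence = []
    while len(sequence) < n:
        x = rng.randint(1, n)  # inclusive on both ends
        if x in seen:
            continue           # repetition: discard this draw and try again
        seen.add(x)
        sequence.append(x)
    return sequence            # bottom row (x_1, ..., x_n) of the two-line notation


perm = brute_force_permutation(5, random.Random(42))
print(perm)
```

Each accepted draw is uniform over the values not yet used, which is why every ordering is equally likely; the cost is that retries become more frequent as the sequence fills up.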

Test Statistic

A test statistic is a statistic (a quantity derived from the sample) used in statistical hypothesis testing [Berger, R. L.; Casella, G. (2001). *Statistical Inference*, Duxbury Press, Second Edition, p. 374]. A hypothesis test is typically specified in terms of a test statistic, considered as a numerical summary of a data set that reduces the data to one value that can be used to perform the hypothesis test. In general, a test statistic is selected or defined in such a way as to quantify, within observed data, behaviours that would distinguish the null from the alternative hypothesis, where such an alternative is prescribed, or that would characterize the null hypothesis if there is no explicitly stated alternative hypothesis. An important property of a test statistic is that its sampling distribution under the null hypothesis must be calculable, either exactly or approximately, which allows *p*-values to be calculated. A *test statistic* shares some of the same qualities o ...

Resampling (statistics)

In statistics, resampling is the creation of new samples based on one observed sample. Resampling methods include (1) permutation tests (also called re-randomization tests), (2) bootstrapping, and (3) cross-validation. Permutation tests: Permutation tests rely on resampling the original data assuming the null hypothesis. Based on the resampled data, it can be concluded how likely the original data are to occur under the null hypothesis. Bootstrap: Bootstrapping is a statistical method for estimating the sampling distribution of an estimator by sampling with replacement from the original sample, most often with the purpose of deriving robust estimates of standard errors and confidence intervals of a population parameter like a mean, median, proportion, odds ratio, correlation coefficient or regression coefficient. It has been called the plug-in principle (Logan, J. David and Wolesensky, William R., *Mathematical Methods in Biology*, Pure and Applied Mathematics: a Wiley-Interscience Series of Texts, Mon ...
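Sampling with replacement from the original sample can be sketched in Python as below. This is a minimal illustration of a percentile bootstrap interval for the mean; the function name `bootstrap_mean_interval`, the number of resamples, the fixed seed, and the data values are all assumptions made for the example.

```python
import random


def bootstrap_mean_interval(sample, resamples=2000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for the mean of `sample`."""
    rng = random.Random(seed)
    n = len(sample)
    means = []
    for _ in range(resamples):
        # Draw n values from the original sample, with replacement.
        draw = rng.choices(sample, k=n)
        means.append(sum(draw) / n)
    means.sort()
    tail = (1 - level) / 2
    low = means[int(tail * resamples)]
    high = means[int((1 - tail) * resamples) - 1]
    return low, high


# Hypothetical measurements.
data = [2.1, 3.4, 1.9, 5.0, 4.2, 3.3, 2.8, 4.7]
low, high = bootstrap_mean_interval(data)
print(f"95% bootstrap interval for the mean: ({low:.2f}, {high:.2f})")
```

The spread of the resampled means stands in for the unknown sampling distribution of the estimator, which is the sense in which the bootstrap substitutes the observed sample for the population.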

Pearson Product-moment Correlation Coefficient

In statistics, the Pearson correlation coefficient (PCC), also known as Pearson's *r*, the Pearson product-moment correlation coefficient (PPMCC), the bivariate correlation, or colloquially simply as the correlation coefficient, is a measure of linear correlation between two sets of data. It is the ratio between the covariance of two variables and the product of their standard deviations; thus, it is essentially a normalized measurement of the covariance, such that the result always has a value between −1 and 1. As with covariance itself, the measure can only reflect a linear correlation of variables, and ignores many other types of relationships or correlations. As a simple example, one would expect the age and height of a sample of teenagers from a high school to have a Pearson correlation coefficient significantly greater than 0, but less than 1 (as 1 would represent an unrealistically perfect correlation). Naming and history: It was developed by Karl ...
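The "covariance divided by the product of the standard deviations" definition translates directly into code. The sketch below is a plain-Python illustration; the function name `pearson_r` and the age and height values are invented for the example.

```python
import math


def pearson_r(x, y):
    """Covariance of x and y divided by the product of their standard deviations."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / n
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x) / n)
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y) / n)
    # A constant sequence has zero standard deviation, so r is undefined
    # and this division raises ZeroDivisionError.
    return cov / (sd_x * sd_y)


# Made-up ages (years) and heights (cm) for five teenagers.
ages = [13, 14, 15, 16, 17]
heights = [156, 160, 167, 170, 174]
r = pearson_r(ages, heights)
print(f"r = {r:.3f}")
```

The same divisor `n` appears in the covariance and in both standard deviations, so it cancels; using `n - 1` throughout would give the same coefficient.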

Statistics

Statistics (from German: *Statistik*, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments [Dodge, Y. (2006) *The Oxford Dictionary of Statistical Terms*, Oxford University Press]. When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling as ...