Morphological Parsing

Morphological parsing, in natural language processing, is the process of determining the morphemes from which a given word is constructed. It must be able to distinguish between orthographic rules and morphological rules. For example, the word 'foxes' can be decomposed into 'fox' (the stem) and 'es' (a suffix indicating plurality). The generally accepted approach to morphological parsing is to use a finite state transducer (FST), which takes words as input and outputs their stems and modifiers. The FST is initially created through algorithmic parsing of some word source, such as a dictionary, complete with modifier markups. Another approach is an indexed lookup method, which uses a constructed radix tree. This route is rarely taken because it breaks down for morphologically complex languages. With the advancement of neural networks in natural language processing, it became less common to use FSTs for morphological analysis, especially for languages fo ...
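The 'foxes' example can be sketched in a few lines of Python. This is a toy rule-based analyser standing in for a real finite state transducer, not an FST implementation; the lexicon `STEMS`, the tag names, and the function `parse_noun` are all assumptions made for illustration. It separates the orthographic rule (e-insertion after endings such as "x") from the morphological rule (plural "-s").

```python
# Toy lexicon of noun stems (hypothetical); a real system would derive it from a dictionary.
STEMS = {"fox", "cat", "dog", "bus", "wish"}

# Stem endings after which English spelling inserts "e" before plural "s".
E_INSERTION_ENDINGS = ("s", "x", "z", "ch", "sh")


def parse_noun(word):
    """Return (stem, tags) for a noun form, or None if it cannot be analysed."""
    if word in STEMS:
        return word, ["N", "SG"]
    # Orthographic rule: "fox" + plural is spelled "foxes" (e-insertion).
    if word.endswith("es"):
        stem = word[:-2]
        if stem in STEMS and stem.endswith(E_INSERTION_ENDINGS):
            return stem, ["N", "PL"]
    # Morphological rule: plain plural suffix "-s" on all other stems.
    if word.endswith("s"):
        stem = word[:-1]
        if stem in STEMS and not stem.endswith(E_INSERTION_ENDINGS):
            return stem, ["N", "PL"]
    return None


print(parse_noun("foxes"))  # stem "fox" with plural tags
print(parse_noun("cats"))   # stem "cat" with plural tags
```

A production FST would encode the same two rule layers as state transitions and could also run in the generation direction, which this sketch does not attempt.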

Natural Language Processing

Natural language processing (NLP) is a subfield of computer science and especially artificial intelligence. It is primarily concerned with providing computers with the ability to process data encoded in natural language and is thus closely related to information retrieval, knowledge representation and computational linguistics, a subfield of linguistics. Major tasks in natural language processing are speech recognition, text classification, natural language understanding, and natural language generation.

History

Natural language processing has its roots in the 1950s. Already in 1950, Alan Turing published an article titled "Computing Machinery and Intelligence" which proposed what is now called the Turing test as a criterion of intelligence, though at the time that was not articulated as a problem separate from artificial intelligence. The proposed test includes a task that involves the automated interpretation and generation of natural language ...

Modifiers

In linguistics, a modifier is an optional element in phrase structure or clause structure which *modifies* the meaning of another element in the structure. For instance, the adjective "red" acts as a modifier in the noun phrase "red ball", providing extra details about which particular ball is being referred to. Similarly, the adverb "quickly" acts as a modifier in the verb phrase "run quickly". Modification can be considered a high-level domain of the functions of language, on par with predication and reference.

Premodifiers and postmodifiers

Modifiers may come before or after the modified element (the *head*), depending on the type of modifier and the rules of syntax for the language in question. A modifier placed before the head is called a premodifier; one placed after the head is called a postmodifier. For example, in *land mines*, the word *land* is a premodifier of *mines*, whereas in the phrase *mines in wartime*, the phrase *in wartime* is a postmodifier of ...

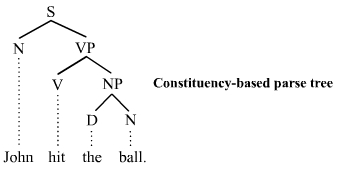

Grammar

In linguistics, grammar is the set of rules for how a natural language is structured, as demonstrated by its speakers or writers. Grammar rules may concern the use of clauses, phrases, and words. The term may also refer to the study of such rules, a subject that includes phonology, morphology, and syntax, together with phonetics, semantics, and pragmatics. There are, broadly speaking, two different ways to study grammar: traditional grammar and theoretical grammar. Fluency in a particular language variety involves a speaker internalizing these rules, many or most of which are acquired by observing other speakers, as opposed to intentional study or instruction. Much of this internalization occurs during early childhood; learning a language later in life usually involves more direct instruction. The term *grammar* can also describe the linguistic behaviour of groups of speakers and writer ...

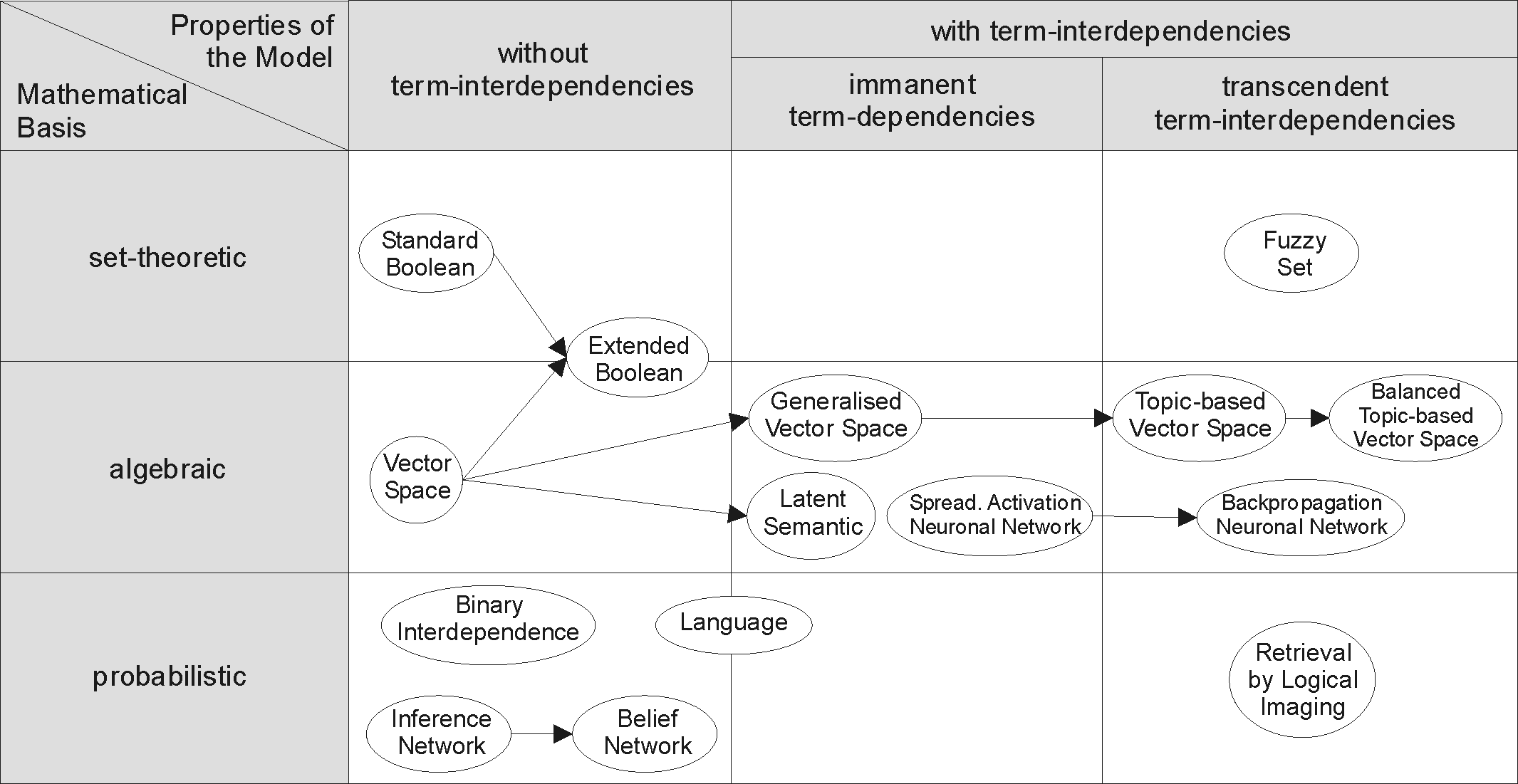

Information Retrieval

Information retrieval (IR) in computing and information science is the task of identifying and retrieving information system resources that are relevant to an information need. The information need can be specified in the form of a search query. In the case of document retrieval, queries can be based on full-text or other content-based indexing. Information retrieval is the science of searching for information in a document, searching for documents themselves, and also searching for the metadata that describes data, and for databases of texts, images or sounds. Automated information retrieval systems are used to reduce what has been called information overload. An IR system is a software system that provides access to books, journals and other documents; it also stores and manages those documents. Web search engines are the most visible IR applications.

Overview

An information retrieval process begins when a user enters a query into the sys ...
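As a rough illustration of query answering over full-text indexing, the following Python sketch builds an inverted index and evaluates a query as a boolean AND over its terms. The function names `build_index` and `search`, the whitespace tokenisation, and the sample documents are assumptions for the example; real IR systems add ranking, normalisation, and much more.

```python
from collections import defaultdict


def build_index(docs):
    """Map each lowercase term to the set of document ids containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in text.lower().split():
            index[term].add(doc_id)
    return index


def search(index, query):
    """Return ids of documents containing every query term (boolean AND)."""
    terms = query.lower().split()
    if not terms:
        return set()
    # Copy the first posting set so the index itself is never mutated.
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


# Hypothetical mini-collection keyed by document id.
docs = {
    1: "web search engines",
    2: "spell checker design",
    3: "search query design",
}
index = build_index(docs)
print(search(index, "search design"))
```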

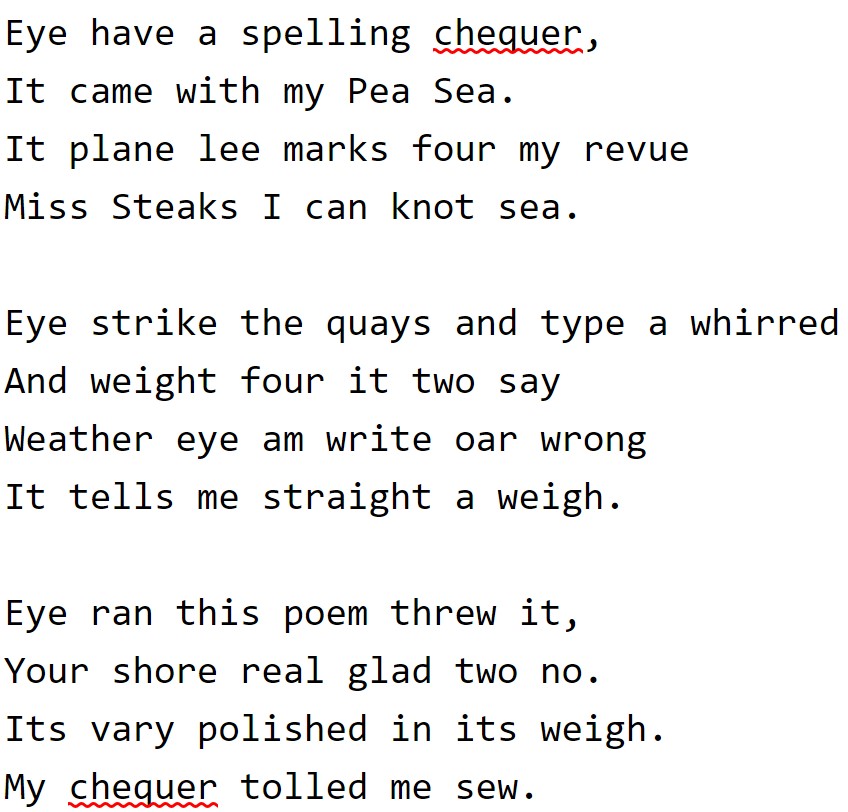

Spell Checker

In software, a spell checker (or spelling checker or spell check) is a software feature that checks for misspellings in a text. Spell-checking features are often embedded in software or services, such as a word processor, email client, electronic dictionary, or search engine.

Design

A basic spell checker carries out the following processes:

* It scans the text and extracts the words contained in it.
* It then compares each word with a known list of correctly spelled words (i.e. a dictionary). This might contain just a list of words, or it might also contain additional information, such as hyphenation points or lexical and grammatical attributes.
* An additional step is a language-dependent algorithm for handling morphology. Even for a lightly inflected language like English, the spell checker will need to consider different forms of the same word, such as plurals, verbal forms, contractions, and possessives. For many other languages, such as those featuring agglutination and ...
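The three steps described above can be sketched as follows. This is a minimal illustration under stated assumptions: the word list `DICTIONARY`, the suffix list `SUFFIXES`, and the helper names are hypothetical, and the suffix stripping is a crude stand-in for a real language-dependent morphology component.

```python
import re

# Hypothetical word list; a real checker would load a full dictionary.
DICTIONARY = {"the", "cat", "chase", "a", "mouse", "dog", "bark"}

# A few English suffixes used to relate inflected forms to dictionary entries.
SUFFIXES = ("'s", "ing", "ed", "es", "s", "d")


def is_known(word):
    """Step 2 and 3: look the word up directly, then retry with a suffix removed."""
    if word in DICTIONARY:
        return True
    for suffix in SUFFIXES:
        if word.endswith(suffix) and word[: -len(suffix)] in DICTIONARY:
            return True
    return False


def misspelled(text):
    """Step 1: extract lowercase words, then report those not recognised."""
    words = re.findall(r"[a-z']+", text.lower())
    return [w for w in words if not is_known(w)]


print(misspelled("The dgo barks"))
```

Blind suffix stripping accepts some non-words (for example a stem plus an inapplicable suffix), which is one reason practical checkers attach grammatical attributes to dictionary entries.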

Machine Translation

Machine translation is the use of computational techniques to translate text or speech from one language to another, including the contextual, idiomatic and pragmatic nuances of both languages. Early approaches were mostly rule-based or statistical. These methods have since been superseded by neural machine translation and large language models.

History

Origins

The origins of machine translation can be traced back to the work of Al-Kindi, a ninth-century Arabic cryptographer who developed techniques for systemic language translation, including cryptanalysis, frequency analysis, and probability and statistics, which are used in modern machine translation. The idea of machine translation later appeared in the 17th century. In 1629, René Descartes proposed a universal language, with equivalent ideas in different tongues sharing one symbol. The idea of using digital computers for translation of natural languages was proposed as early as 1947 by England's A. D. Booth and Warr ...

Bilingual

Multilingualism is the use of more than one language, either by an individual speaker or by a group of speakers. When the languages are just two, it is usually called bilingualism. It is believed that multilingual speakers outnumber monolingual speakers in the world's population. More than half of all Europeans claim to speak at least one language other than their mother tongue; but many read and write in one language. Being multilingual is advantageous for people wanting to participate in trade, globalization and cultural openness. Owing to the ease of access to information facilitated by the Internet, individuals' exposure to multiple languages has become increasingly possible. People who speak several languages are also called *polyglots*. Multilingual speakers have acquired and maintained at least one language during childhood, the so-called first language (L1). The first language (sometimes also referred to as the mother tongue) is usually acquired without formal ...

Monolingual

Monoglottism (Greek μόνος *monos*, "alone, solitary", + γλῶττα, "tongue, language") or, more commonly, monolingualism or unilingualism, is the condition of being able to speak only a single language, as opposed to multilingualism. In a different context, "unilingualism" may refer to a language policy which enforces an official or national language over others. Being monolingual or unilingual is also said of a text, dictionary, or conversation written or conducted in only one language, and of an entity in which a single language is either used or officially recognized (in particular when being compared with bilingual or multilingual entities or in the presence of individuals speaking different languages). Note that mono*glottism* can only refer to lacking the *ability* to speak several languages. Multilingual speakers outnumber monolingual speakers in the world's population. Suzanne Romaine pointed out, in her 1995 book *Bilingualism*, that it would be weir ...

Word Stem

In linguistics, a word stem is a word part responsible for a word's lexical meaning. The term is used with slightly different meanings depending on the morphology of the language in question. For instance, in Athabaskan linguistics, a verb stem is a root that cannot appear on its own and that carries the tone of the word. Typically, a stem remains unmodified during inflection with few exceptions due to apophony (for example in Polish, *miasto* ("city") and *w mieście* ("in the city"); in English, *sing*, *sang*, and *sung*, where it can be modified according to morphological rules or peculiarities, such as sandhi). Word stem comparisons across languages have helped reveal cognates that have allowed comparative linguists to determine language families and their history.

Root vs stem

The word *friendship* is made by attaching the morpheme *-ship* to the root word *friend* (which some linguists also call a stem). While the inflectional plural morpheme *-s* can be attached to ...

Morphemes

A morpheme is any of the smallest meaningful constituents within a linguistic expression and particularly within a word. Many words are themselves standalone morphemes, while other words contain multiple morphemes; in linguistic terminology, this is the distinction, respectively, between free and bound morphemes. The field of linguistic study dedicated to morphemes is called morphology. In English, inside a word with multiple morphemes, the main morpheme that gives the word its basic meaning is called a root (such as *cat* inside the word *cats*), which can be bound or free. Meanwhile, additional bound morphemes, called affixes, may be added before or after the root, like the *-s* in *cats*, which indicates plurality but is always bound to a root noun and is not regarded as a word on its own. However, in some languages, including English and Latin, even many roots cannot stand alone; i.e., they are bound morphemes. For instance, the Latin root *reg-* ('king') must alwa ...

Orthography

An orthography is a set of conventions for writing a language, including norms of spelling, punctuation, word boundaries, capitalization, hyphenation, and emphasis. Most national and international languages have an established writing system that has undergone substantial standardization, thus exhibiting less dialect variation than the spoken language. These processes can fossilize pronunciation patterns that are no longer routinely observed in speech (e.g. *would* and *should*); they can also reflect deliberate efforts to introduce variability for the sake of national identity, as seen in Noah Webster's efforts to introduce easily noticeable differences between American and British spelling (e.g. *honor* and *honour*). Orthographic norms develop through social and political influence at various levels, such as encounters with print in education, the workplace, and the state. Some nations have established ...

Language Models

A language model is a model of the human brain's ability to produce natural language. Language models are useful for a variety of tasks, including speech recognition, machine translation [Andreas, Jacob, Andreas Vlachos, and Stephen Clark (2013). "Semantic parsing as machine translation". Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers)], natural language generation (generating more human-like text), optical character recognition, route optimization, handwriting recognition, grammar induction, and information retrieval. Large language models (LLMs), currently their most advanced form, are predominantly based on transformers trained on larger datasets (frequently using words scraped from the public internet). They have superseded recurrent neural network-based models, which had previously superseded the purely statistical models, such as the word *n*-gram language model.

History

Noam Chomsky did pioneering work on lang ...
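For the purely statistical models mentioned above, the simplest case is a word bigram model (an *n*-gram model with n = 2), which estimates the probability of a word from counts of what followed the previous word. The sketch below uses unsmoothed maximum-likelihood estimates; the function names `train_bigram` and `prob` and the toy corpus are assumptions for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count, for each word, how often each following word occurs."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def prob(counts, prev, nxt):
    """Maximum-likelihood estimate of P(nxt | prev); 0.0 if prev was never followed."""
    total = sum(counts[prev].values())
    return counts[prev][nxt] / total if total else 0.0


# Hypothetical toy corpus.
tokens = "the cat sat on the mat".split()
counts = train_bigram(tokens)
print(prob(counts, "the", "cat"))
```

Because unseen word pairs receive probability zero, practical n-gram models add smoothing, which this sketch omits.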