
Minimal Recursion Semantics

Minimal recursion semantics (MRS) is a framework for computational semantics. It can be implemented in typed feature structure formalisms such as head-driven phrase structure grammar and lexical functional grammar. It is suitable for computational language parsing and natural language generation (Copestake, A., Flickinger, D. P., Sag, I. A., & Pollard, C., 2005, "Minimal Recursion Semantics: An Introduction", Research on Language and Computation, 3:281–332). MRS enables a simple formulation of the grammatical constraints on lexical and phrasal semantics, including the principles of semantic composition. MRS is used in machine translation. Early pioneers of MRS include Ann Copestake, Dan Flickinger, Carl Pollard, and Ivan Sag.

Computational Semantics

Computational semantics is the study of how to automate the process of constructing and reasoning with meaning representations of natural language expressions. It consequently plays an important role in natural-language processing and computational linguistics. Some traditional topics of interest are: construction of meaning representations, semantic underspecification, anaphora resolution (Basile, Valerio, et al., "Developing a large semantically annotated corpus", LREC 2012, Eighth International Conference on Language Resources and Evaluation, 2012), presupposition projection, and quantifier scope resolution. Methods employed usually draw from formal semantics or statistical semantics. Computational semantics has points of contact with the areas of lexical semantics (word-sense disambiguation and semantic role labeling), discourse semantics, knowledge representation and automated reasoning (in particular, automated theorem proving). Since 1999 there has been an ACL special i ...

Dan Flickinger

Dan or DAN may refer to:

People
* Dan (name), including a list of people with the name
** Dan (king), several kings of Denmark
* Dan people, an ethnic group located in West Africa
** Dan language, a Mande language spoken primarily in Côte d'Ivoire and Liberia
* Dan (son of Jacob), one of the 12 sons of Jacob/Israel in the Bible
** Tribe of Dan, one of the 12 tribes of Israel descended from Dan
* Crown Prince Dan, prince of Yan in ancient China

Places
* Dan (ancient city), the biblical location also called Dan, and identified with Tel Dan
* Dan, Israel, a kibbutz
* Dan, subdistrict of Kap Choeng District, Thailand
* Dan, West Virginia, an unincorporated community in the United States
* Dan River (other)
* Danzhou, formerly Dan County, China
* Gush Dan, the metropolitan area of Tel Aviv in Israel

Organizations
* Dan-Air, a defunct airline in the United Kingdom
* Dan Bus Company, a public transport company in Israel
* Dan Hotels, a hotel chain in Israel
* Dan the Tire Man, a ...

Generative Linguistics

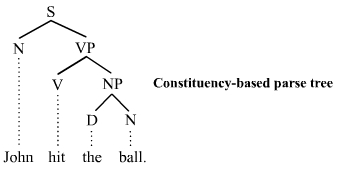

Generative grammar, or generativism, is a linguistic theory that regards linguistics as the study of a hypothesised innate grammatical structure. It is a biological or biologistic modification of earlier structuralist theories of linguistics, deriving ultimately from glossematics. Generative grammar considers grammar as a system of rules that generates exactly those combinations of words that form grammatical sentences in a given language. It is a system of explicit rules that may apply repeatedly to generate an indefinite number of sentences which can be as long as one wants them to be. The difference from structural and functional models is that the object is base-generated within the verb phrase in generative grammar. This purportedly cognitive structure is thought of as being a part of a universal grammar, a syntactic structure which is caused by a genetic mutation in humans. Generativists have created numerous theories to make the NP VP (NP) analysis work in natural l ...
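The idea of explicit rules that apply repeatedly can be illustrated with a minimal sketch. The toy grammar below is an assumption made up for illustration (it is not taken from any particular generative theory): the `VP` rule reintroduces `S`, so raising the depth bound yields ever longer sentences.

```python
from itertools import product

# Hypothetical toy grammar: each nonterminal maps to its possible expansions.
# VP can contain S again, so the rules can apply repeatedly.
GRAMMAR = {
    "S": [["NP", "VP"]],
    "NP": [["Kim"], ["Sandy"]],
    "VP": [["sleeps"], ["thinks", "that", "S"]],
}


def expand(symbol, depth):
    """Return every word sequence derivable from `symbol` using at most
    `depth` nested rule applications."""
    if symbol not in GRAMMAR:
        # A terminal symbol (a word) stands for itself.
        return [[symbol]]
    if depth == 0:
        # Out of budget: no derivation for this nonterminal.
        return []
    results = []
    for rhs in GRAMMAR[symbol]:
        # Expand each right-hand-side symbol, then combine the alternatives.
        parts = [expand(s, depth - 1) for s in rhs]
        for combo in product(*parts):
            results.append([word for part in combo for word in part])
    return results


if __name__ == "__main__":
    for sentence in expand("S", 4):
        print(" ".join(sentence))
```

With a depth bound of 2 only the simple sentences are generated; a bound of 4 also admits one level of embedding, such as "Kim thinks that Sandy sleeps".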

Natural Language Processing

Natural language processing (NLP) is an interdisciplinary subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human language, in particular how to program computers to process and analyze large amounts of natural language data. The goal is a computer capable of "understanding" the contents of documents, including the contextual nuances of the language within them. The technology can then accurately extract information and insights contained in the documents as well as categorize and organize the documents themselves. Challenges in natural language processing frequently involve speech recognition, natural-language understanding, and natural-language generation.

History

Natural language processing has its roots in the 1950s. Already in 1950, Alan Turing published an article titled "Computing Machinery and Intelligence" which proposed what is now called the Turing test as a criterion of intelligence, ...

Computational Linguistics

Computational linguistics is an interdisciplinary field concerned with the computational modelling of natural language, as well as the study of appropriate computational approaches to linguistic questions. In general, computational linguistics draws upon linguistics, computer science, artificial intelligence, mathematics, logic, philosophy, cognitive science, cognitive psychology, psycholinguistics, anthropology and neuroscience, among others.

Sub-fields and related areas

Traditionally, computational linguistics emerged as an area of artificial intelligence performed by computer scientists who had specialized in the application of computers to the processing of a natural language. With the formation of the Association for Computational Linguistics (ACL) and the establishment of independent conference series, the field consolidated during the 1970s and 1980s. The Association for Computational Linguistics defines computational linguistics as: The term "comp ...

DELPH-IN

Deep Linguistic Processing with HPSG - INitiative (DELPH-IN) is a collaboration where computational linguists worldwide develop natural language processing tools for deep linguistic processing of human language. The goal of DELPH-IN is to combine linguistic and statistical processing methods in order to computationally understand the meaning of texts and utterances. The tools developed by DELPH-IN adopt two linguistic formalisms for deep linguistic analysis, viz. head-driven phrase structure grammar (HPSG) and minimal recursion semantics (MRS). All tools under the DELPH-IN collaboration are developed for general use under open-source licensing. Since 2005, DELPH-IN has held an annual summit. This is a loosely structured unconference where people update each other about the work they are doing, seek feedback on current work, and occasionally hammer out agreement on standards and best practice.

DELPH-IN technologies and resources

The DELPH-IN collaboration has been progressively bu ...

Ivan Sag

Ivan Andrew Sag (November 9, 1949 – September 10, 2013) was an American linguist and cognitive scientist. He did research in areas of syntax and semantics as well as work in computational linguistics.

Personal life

Born in Alliance, Ohio on November 9, 1949, Sag attended the Mercersburg Academy but was expelled shortly before graduation. He received a BA from the University of Rochester, an MA from the University of Pennsylvania (where he studied comparative Indo-European languages, Sanskrit, and sociolinguistics), and a PhD from MIT in 1976, writing his dissertation (advised by Noam Chomsky) on ellipsis. Sag received a Mellon Fellowship at Stanford University in 1978–79, and remained in California from that point on. He was appointed to a position in Linguistics at Stanford, and earned tenure there. He was married to sociolinguist Penelope Eckert.

Academic work

Sag made notable contributions to the fields of syntax, semantics, pragmatics, and language processing. His early ...

Carl Pollard

Carl Jesse Pollard (born June 28, 1947) is a Professor of Linguistics at the Ohio State University. He is the inventor of head grammar and higher-order grammar, as well as co-inventor of head-driven phrase structure grammar (HPSG). He is currently also working on convergent grammar (CVG). He has written numerous books and articles on formal syntax and semantics. He received his Ph.D. from Stanford.

Ann Copestake

Ann Alicia Copestake is professor of computational linguistics and head of the Department of Computer Science and Technology at the University of Cambridge and a fellow of Wolfson College, Cambridge.

Education

Copestake was educated at the University of Cambridge where she was awarded a Bachelor of Arts degree in Natural Sciences. After two years working for Unilever Research she completed the Cambridge Diploma in Computer Science. She went on to study at the University of Sussex where she was awarded a DPhil in 1992 for research on lexical semantics supervised by Gerald Gazdar.

Career and research

Copestake started doing research in natural language processing and computational linguistics at the University of Cambridge in 1985. Since then she has been a visiting researcher at Xerox PARC (1993/4) and the University of Stuttgart (1994/5). From July 1994 to October 2000 she worked at the Center for the Study of Language and Information (CSLI) at Stanford University, as a Senior ...

Feature Structure

In phrase structure grammars, such as generalised phrase structure grammar, head-driven phrase structure grammar and lexical functional grammar, a feature structure is essentially a set of attribute–value pairs. For example, the attribute named *number* might have the value *singular*. The value of an attribute may be either atomic, e.g. the symbol *singular*, or complex (most commonly a feature structure, but also a list or a set). A feature structure can be represented as a directed acyclic graph (DAG), with the nodes corresponding to the variable values and the paths to the variable names. Operations defined on feature structures, e.g. unification, are used extensively in phrase structure grammars. In most theories (e.g. HPSG), operations are strictly speaking defined over equations describing feature structures and not over feature structures themselves, though feature structures are usually used in informal exposition. Often, feature structures are written like thi ...
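The unification operation mentioned above can be illustrated with a minimal sketch. It assumes a simplified encoding that is not taken from any particular grammar formalism: a feature structure is a nested Python `dict`, atomic values are strings, and there is no structure sharing (reentrancy), list values, or type hierarchy. The function name `unify` and the attribute names are hypothetical.

```python
from typing import Optional, Union

# Assumed encoding: a feature structure is a nested dict of
# attribute -> value, where a value is an atom (str) or another dict.
FeatureStructure = Union[str, dict]


def unify(a: FeatureStructure, b: FeatureStructure) -> Optional[FeatureStructure]:
    """Return the most general structure containing the information of both
    `a` and `b`, or None if their values conflict."""
    # Atoms unify only with an identical atom; an atom never unifies
    # with a complex structure.
    if isinstance(a, str) or isinstance(b, str):
        return a if a == b else None
    result = dict(a)  # shallow copy, so the inputs are never mutated
    for attribute, b_value in b.items():
        if attribute in result:
            merged = unify(result[attribute], b_value)
            if merged is None:
                return None  # a clash anywhere makes the whole unification fail
            result[attribute] = merged
        else:
            result[attribute] = b_value
    return result


if __name__ == "__main__":
    noun = {"cat": "N", "agr": {"number": "singular"}}
    constraint = {"agr": {"number": "singular", "person": "3"}}
    print(unify(noun, constraint))
    print(unify(noun, {"agr": {"number": "plural"}}))
```

In this sketch, unifying the noun with a compatible constraint adds the *person* attribute under *agr*, while unifying it with a structure that requires *number* to be *plural* fails and returns `None`.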

Machine Translation

Machine translation, sometimes referred to by the abbreviation MT (not to be confused with computer-aided translation, machine-aided human translation or interactive translation), is a sub-field of computational linguistics that investigates the use of software to translate text or speech from one language to another. On a basic level, MT performs mechanical substitution of words in one language for words in another, but that alone rarely produces a good translation because recognition of whole phrases and their closest counterparts in the target language is needed. Not all words in one language have equivalent words in another language, and many words have more than one meaning. Solving this problem with corpus statistical and neural techniques is a rapidly growing field that is leading to better translations, handling differences in linguistic typology, translation of idioms, and the isolation of anomalies. Current machine translation software often allows for customizat ...

Principle Of Compositionality

In semantics, mathematical logic and related disciplines, the principle of compositionality is the principle that the meaning of a complex expression is determined by the meanings of its constituent expressions and the rules used to combine them. This principle is also called Frege's principle, because Gottlob Frege is widely credited for the first modern formulation of it. The principle was never explicitly stated by Frege, and it was arguably already assumed by George Boole decades before Frege's work. The principle of compositionality is highly debated in linguistics, and its most challenging problems include the issues of contextuality, the non-compositionality of idiomatic expressions, and the non-compositionality of quotations.

History

Discussion of compositionality started to appear at the beginning of the 19th century, during which it was debated whether what was most fundamental in language was compositionality or contextuality, and compositionality was usuall ...
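The core statement of the principle can be made concrete with a minimal sketch. Everything in it is an illustrative assumption: the toy model `SLEEPERS`, the `LEXICON`, and the single combination rule (apply the predicate's meaning to the subject's meaning). It is not a rendering of Frege's or any other specific formal system.

```python
# Hypothetical toy model: the set of individuals who sleep.
SLEEPERS = {"kim"}

# Lexical meanings: names denote individuals; intransitive verbs denote
# functions from individuals to truth values.
LEXICON = {
    "Kim": "kim",
    "Sandy": "sandy",
    "sleeps": lambda individual: individual in SLEEPERS,
}


def meaning(tree):
    """Compute the meaning of a word (str) or of a (subject, predicate) pair."""
    if isinstance(tree, str):
        # The meaning of a word is looked up in the lexicon.
        return LEXICON[tree]
    subject, predicate = tree
    # Combination rule: the meaning of the whole depends only on the
    # meanings of the parts and on how they are combined.
    return meaning(predicate)(meaning(subject))


if __name__ == "__main__":
    print(meaning(("Kim", "sleeps")))
    print(meaning(("Sandy", "sleeps")))
```

Changing the meaning of a part (for example, adding `"sandy"` to `SLEEPERS`) changes the meaning of every sentence containing that part, and nothing else. Idioms and quotations are debated precisely because they do not seem to fit this pattern.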