
MinHash

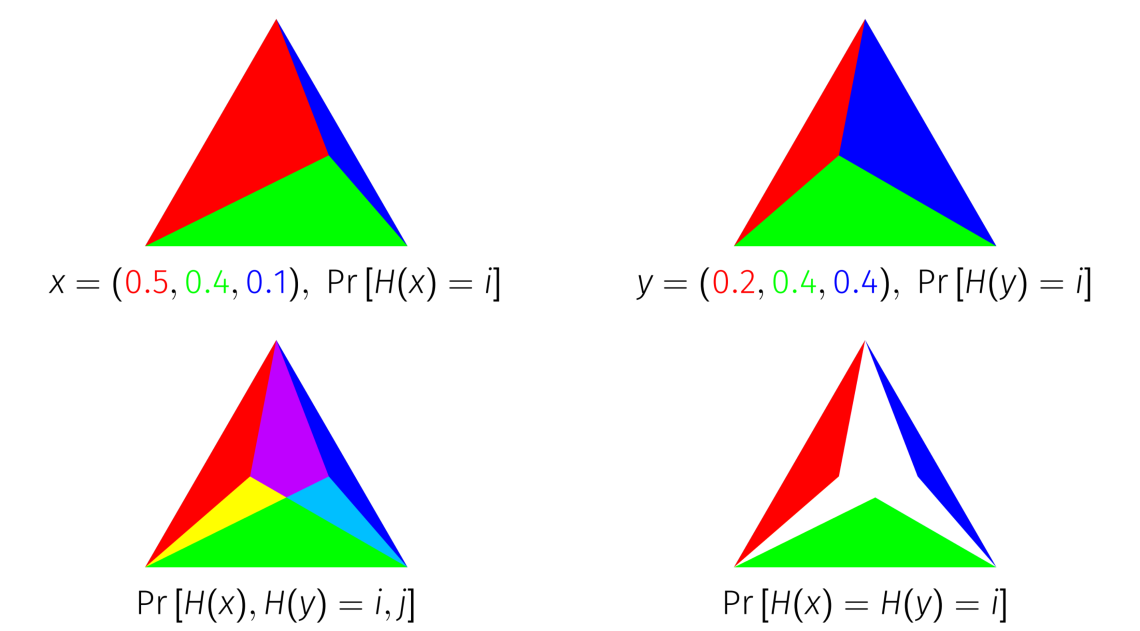

In computer science and data mining, MinHash (or the min-wise independent permutations locality-sensitive hashing scheme) is a technique for quickly estimating how similar two sets are. The scheme was invented by Andrei Broder, and was initially used in the AltaVista search engine to detect duplicate web pages and eliminate them from search results. It has also been applied to large-scale clustering problems, such as clustering documents by the similarity of their sets of words.

Jaccard similarity and minimum hash values

The Jaccard similarity coefficient is a commonly used indicator of the similarity between two sets. Let U be a set and let A and B be subsets of U; then the Jaccard index is defined as the ratio of the number of elements of their intersection to the number of elements of their union:

: J(A,B) = \frac{|A \cap B|}{|A \cup B|}.

This value is 0 when the two sets are disjoint, 1 when ...
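
The estimation idea can be sketched in Python. This is a minimal illustration, not the original AltaVista implementation: it assumes the standard MinHash property that, for a random hash function, the minimum hash values of two sets coincide with probability equal to their Jaccard similarity. The helper names (`_hash64`, `minhash_signature`, `estimate_jaccard`) and the use of salted BLAKE2b as a stand-in for random permutations are assumptions made for this example.

```python
import hashlib


def _hash64(item, index):
    """Hash a string to a 64-bit integer; `index` selects one of k salted functions."""
    salt = index.to_bytes(8, "little")
    digest = hashlib.blake2b(item.encode("utf-8"), digest_size=8, salt=salt).digest()
    return int.from_bytes(digest, "little")


def minhash_signature(items, k=256):
    """Return the k minimum hash values of a non-empty collection of strings."""
    return [min(_hash64(item, i) for item in items) for i in range(k)]


def estimate_jaccard(sig_a, sig_b):
    """Fraction of signature positions on which the two signatures agree."""
    matches = sum(1 for x, y in zip(sig_a, sig_b) if x == y)
    return matches / len(sig_a)


# Two sets of 100 hypothetical "words" that share 50 elements.
a = {f"w{i}" for i in range(100)}
b = {f"w{i}" for i in range(50, 150)}

exact = len(a & b) / len(a | b)
approx = estimate_jaccard(minhash_signature(a), minhash_signature(b))
print(f"exact={exact:.3f} estimate={approx:.3f}")
```

The signatures have a fixed length k regardless of set size, which is what makes the comparison fast; a larger k gives a tighter estimate at the cost of more hashing.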

Jaccard Index

The Jaccard index, also known as the Jaccard similarity coefficient, is a statistic used for gauging the similarity and diversity of sample sets. It was developed by Grove Karl Gilbert in 1884 as his ratio of verification (v) and is now frequently referred to as the Critical Success Index in meteorology. It was later developed independently by Paul Jaccard, who originally gave it the French name ''coefficient de communauté'', and independently formulated again by T. Tanimoto. Thus, the names Tanimoto index and Tanimoto coefficient are also used in some fields. In general, however, all of these names refer to the same ratio of intersection over union. The Jaccard coefficient measures similarity between finite sample sets, and is defined as the size of the intersection divided by the size of the union of the sample sets:

: J(A,B) = \frac{|A \cap B|}{|A \cup B|} = \frac{|A \cap B|}{|A| + |B| - |A \cap B|}.

Note that by design, 0 \le J(A,B) \le 1. If ''A'' intersection ''B'' is empty, then ''J''(''A'',''B'') = 0. The Jaccard coefficient is widely used in co ...
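
As a small illustration of the definition, the following Python sketch computes the coefficient for finite sets. The function name `jaccard_index` is hypothetical, and returning 1.0 for two empty sets is a convention assumed here, since the ratio is otherwise undefined in that case.

```python
def jaccard_index(a, b):
    """Size of the intersection divided by the size of the union."""
    a, b = set(a), set(b)
    union = a | b
    if not union:
        # Assumed convention: two empty sets are treated as identical.
        return 1.0
    return len(a & b) / len(union)


print(jaccard_index({1, 2, 3}, {2, 3, 4}))  # 2 shared elements out of 4 in total
print(jaccard_index({1, 2}, {3, 4}))        # disjoint sets
```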

Intersection (Set Theory)

In set theory, the intersection of two sets A and B, denoted by A \cap B, is the set containing all elements of A that also belong to B or, equivalently, all elements of B that also belong to A.

Notation and terminology

Intersection is written using the symbol "\cap" between the terms; that is, in infix notation. For example:

: \{1,2,3\} \cap \{2,3,4\} = \{2,3\}
: \{1,2,3\} \cap \{4,5,6\} = \varnothing
: \Z \cap \N = \N
: \{x \in \R : x^2 = 1\} \cap \N = \{1\}

The intersection of more than two sets (generalized intersection) can be written as \bigcap_{i=1}^n A_i, which is similar to capital-sigma notation. For an explanation of the symbols used in this article, refer to the table of mathematical symbols.

Definition

The intersection of two sets A and B, denoted by A \cap B, is the set of all objects that are members of both the sets A and B. In symbols: A \cap B = \{x : x \in A \text{ and } x \in B\}. That is, x is an element of the intersection A \cap B if and only if x is both an element of A and an element of B. For example:

* The intersection of the sets \{1,2,3\} and \{2,3,4\} is \{2,3\}.
* The number 9 is in ...
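
The definition maps directly onto Python's built-in `set` type. The sketch below uses illustrative values chosen for this example, and `intersect_all` is a hypothetical helper for the generalized intersection of several sets.

```python
def intersect_all(sets):
    """Generalized intersection of a non-empty sequence of sets."""
    sets = [set(s) for s in sets]
    if not sets:
        raise ValueError("the intersection of zero sets is not defined here")
    return set.intersection(*sets)


a = {1, 2, 3}
b = {2, 3, 4}

# x is in the intersection if and only if x is in a and x is in b.
by_definition = {x for x in a if x in b}

print(a & b)                            # infix operator
print(by_definition == a & b)           # same result as the definition
print(intersect_all([a, b, {3, 4, 5}])) # generalized intersection
```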

Locality Sensitive Hashing

In computer science, locality-sensitive hashing (LSH) is an algorithmic technique that hashes similar input items into the same "buckets" with high probability. (The number of buckets is much smaller than the universe of possible input items.) Since similar items end up in the same buckets, this technique can be used for data clustering and nearest neighbor search. It differs from conventional hashing techniques in that hash collisions are maximized, not minimized. Alternatively, the technique can be seen as a way to reduce the dimensionality of high-dimensional data; high-dimensional input items can be reduced to low-dimensional versions while preserving relative distances between items. Hashing-based approximate nearest neighbor search algorithms generally use one of two main categories of hashing methods: either data-independent methods, such as locality-sensitive hashing (LSH); or data-dependent methods, such as locality-preserving hashing (LPH).

Definitions

An ''LSH family' ...
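
One simple way to see similar items landing in the same bucket is bit sampling for binary vectors, sketched below in Python. This is only one possible LSH family, chosen here for brevity; the names `make_bit_sampler` and `build_buckets` and the example vectors are assumptions for illustration.

```python
import random
from collections import defaultdict


def make_bit_sampler(dim, k, seed=0):
    """Return a hash function that reads k fixed, randomly chosen coordinates."""
    rng = random.Random(seed)
    positions = rng.sample(range(dim), k)
    return lambda bits: tuple(bits[i] for i in positions)


def build_buckets(vectors, hash_fn):
    """Group item names by the bucket key of their vector."""
    buckets = defaultdict(list)
    for name, vec in vectors.items():
        buckets[hash_fn(vec)].append(name)
    return buckets


vectors = {
    "a": [1, 0, 1, 1, 0, 0, 1, 0],
    "b": [1, 0, 1, 1, 0, 0, 1, 0],  # identical to "a"
    "c": [0, 1, 0, 0, 1, 1, 0, 1],  # bitwise complement of "a"
}
h = make_bit_sampler(dim=8, k=3, seed=1)
buckets = build_buckets(vectors, h)
print(dict(buckets))
```

Two vectors that differ in few coordinates are unlikely to differ on any of the sampled positions, so they tend to share a bucket, while very different vectors rarely do.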

Tabulation Hashing

In computer science, tabulation hashing is a method for constructing universal families of hash functions by combining table lookup with exclusive or operations. It was first studied in the form of Zobrist hashing for computer games; later work by Carter and Wegman extended this method to arbitrary fixed-length keys. Generalizations of tabulation hashing have also been developed that can handle variable-length keys such as text strings. Despite its simplicity, tabulation hashing has strong theoretical properties that distinguish it from some other hash functions. In particular, it is 3-independent: every 3-tuple of keys is equally likely to be mapped to any 3-tuple of hash values. However, it is not 4-independent. More sophisticated but slower variants of tabulation hashing extend the method to higher degrees of independence. Because of its high degree of independence, tabulation hashing is usable with hashing methods that require a high-quality hash function, including hopsco ...
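
The combination of table lookup and exclusive or can be sketched as follows. This is a minimal Python illustration of simple tabulation for fixed-length integer keys; the function name `make_tabulation_hash` and its parameters are hypothetical, and the tables are filled from a seeded pseudo-random generator rather than a true random source.

```python
import random


def make_tabulation_hash(key_bytes=4, out_bits=32, seed=0):
    """Build a simple tabulation hash for keys of `key_bytes` bytes."""
    rng = random.Random(seed)
    # One table of 256 random values per byte position of the key.
    tables = [[rng.getrandbits(out_bits) for _ in range(256)]
              for _ in range(key_bytes)]

    def h(key):
        result = 0
        for i in range(key_bytes):
            byte = (key >> (8 * i)) & 0xFF
            result ^= tables[i][byte]  # XOR the looked-up entries together
        return result

    return h


h = make_tabulation_hash(seed=42)
print(hex(h(0xDEADBEEF)))

# Four keys that differ only in two byte positions, in all combinations:
# every table entry is used an even number of times, so the XOR cancels.
print((h(0x0000) ^ h(0x0001) ^ h(0x0100) ^ h(0x0101)) == 0)
```

The last line shows a dependency among four hash values that holds for every choice of tables, which is one way to see why the scheme is not 4-independent.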

K-independent Hashing

In computer science, a family of hash functions is said to be ''k''-independent, ''k''-wise independent or ''k''-universal if selecting a function at random from the family guarantees that the hash codes of any designated ''k'' keys are independent random variables (see precise mathematical definitions below). Such families allow good average case performance in randomized algorithms or data structures, even if the input data is chosen by an adversary. The trade-offs between the degree of independence and the efficiency of evaluating the hash function are well studied, and many ''k''-independent families have been proposed.

Background

The goal of hashing is usually to map keys from some large domain (universe) U into a smaller range, such as m bins (labelled [m] = \{0, \dots, m-1\}). In the analysis of randomized algorithms and data structures, it is often desirable for the hash codes of various keys to "behave randomly". For instance, if the hash code of each key were an independent random choi ...
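
One well-known construction is a random polynomial of degree ''k'' − 1 over a prime field, sketched below in Python. The names `make_poly_hash` and `P` are hypothetical. The sketch assumes integer keys smaller than the prime, and the final reduction into m bins makes the result only approximately uniform.

```python
import random

P = (1 << 61) - 1  # a Mersenne prime; keys are assumed to be smaller than P


def make_poly_hash(k, m, seed=0):
    """Pick a random polynomial with k coefficients and reduce it into m bins."""
    rng = random.Random(seed)
    coeffs = [rng.randrange(P) for _ in range(k)]  # coeffs[0] is the constant term

    def h(x):
        acc = 0
        for c in reversed(coeffs):  # Horner's rule, highest degree first
            acc = (acc * x + c) % P
        return acc % m

    return h


h = make_poly_hash(k=4, m=16, seed=3)
print([h(x) for x in range(8)])
```

Evaluation costs ''k'' multiplications per key, which illustrates the trade-off between the degree of independence and the cost of computing the hash.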

Computer Science

Computer science is the study of computation, automation, and information. Computer science spans theoretical disciplines (such as algorithms, theory of computation, information theory, and automation) to practical disciplines (including the design and implementation of hardware and software). Computer science is generally considered an area of academic research and distinct from computer programming. Algorithms and data structures are central to computer science. The theory of computation concerns abstract models of computation and general classes of problems that can be solved using them. The fields of cryptography and computer security involve studying the means for secure communication and for preventing security vulnerabilities. Computer graphics and computational geometry address the generation of images. Programming language theory considers different ways to describe computational processes, and database theory concerns the management of repositories o ...

Chernoff Bound

In probability theory, the Chernoff bound gives exponentially decreasing bounds on tail distributions of sums of independent random variables. Despite being named after Herman Chernoff, the author of the paper it first appeared in, the result is due to Herman Rubin. It is a sharper bound than the first- or second-moment-based tail bounds such as Markov's inequality or Chebyshev's inequality, which only yield power-law bounds on tail decay. However, the Chernoff bound requires the variates to be independent, a condition that is not required by either Markov's inequality or Chebyshev's inequality (although Chebyshev's inequality does require the variates to be pairwise independent). The Chernoff bound is related to the Bernstein inequalities, which were developed earlier, and to Hoeffding's inequality.

The generic bound

The generic Chernoff bound for a random variable X is attained by applying Markov's inequality to e^{tX}. This gives a bound in terms of the moment-generating funct ...
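
As a numerical illustration, the Python sketch below applies the generic bound P(X \ge a) \le E[e^{tX}] e^{-ta}, valid for every t > 0, to a binomial variable and compares it with the exact tail. The function names are hypothetical, and the minimization over t uses a coarse grid rather than the closed-form optimum.

```python
import math


def exact_tail(n, p, a):
    """P(X >= a) for X ~ Binomial(n, p), summed term by term."""
    return sum(math.comb(n, i) * p ** i * (1 - p) ** (n - i)
               for i in range(a, n + 1))


def chernoff_bound(n, p, a, ts):
    """Smallest value of E[exp(tX)] * exp(-t*a) over the candidate values ts."""
    # Moment-generating function of Binomial(n, p): (1 - p + p*e^t)^n.
    return min((1 - p + p * math.exp(t)) ** n * math.exp(-t * a) for t in ts)


ts = [i / 100 for i in range(1, 301)]  # grid of t values in (0, 3]
tail = exact_tail(100, 0.5, 70)
bound = chernoff_bound(100, 0.5, 70, ts)
print(f"exact tail = {tail:.3e}, Chernoff bound = {bound:.3e}")
```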

Linear Time

In computer science, the time complexity is the computational complexity that describes the amount of computer time it takes to run an algorithm. Time complexity is commonly estimated by counting the number of elementary operations performed by the algorithm, supposing that each elementary operation takes a fixed amount of time to perform. Thus, the amount of time taken and the number of elementary operations performed by the algorithm are taken to be related by a constant factor. Since an algorithm's running time may vary among different inputs of the same size, one commonly considers the worst-case time complexity, which is the maximum amount of time required for inputs of a given size. Less common, and usually specified explicitly, is the average-case complexity, which is the average of the time taken on inputs of a given size (this makes sense because there are only a finite number of possible inputs of a given size). In both cases, the time complexity is generally express ...

Inverse Transform Sampling

Inverse transform sampling (also known as inversion sampling, the inverse probability integral transform, the inverse transformation method, Smirnov transform, or the golden rule [Aalto University, N. Hyvönen, Computational methods in inverse problems, twelfth lecture, https://noppa.tkk.fi/noppa/kurssi/mat-1.3626/luennot/Mat-1_3626_lecture12.pdf]) is a basic method for pseudo-random number sampling, i.e., for generating sample numbers at random from any probability distribution given its cumulative distribution function. Inverse transformation sampling takes uniform samples of a number u between 0 and 1, interpreted as a probability, and then returns the largest number x from the domain of the distribution P(X) such that P(-\infty < X < x) \le u. The method proceeds as follows:

# Generate a random number u from the standard uniform distribution on the interval [0,1], e.g. from U \sim \mathrm{Unif}[0,1].
# Find the inverse of the desired CDF, e.g. F_X^{-1}(x).
# Compute X = F_X^{-1}(u).

The computed random variable X has distribution F_X(x). Expressed differently, given a continuous uniform variable U in [0,1] and an invertible cumu ...
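
The procedure can be sketched in Python for one concrete case. The example assumes the exponential distribution with rate \lambda, whose CDF F(x) = 1 - e^{-\lambda x} has the inverse F^{-1}(u) = -\ln(1 - u) / \lambda; the function name `sample_exponential` is hypothetical.

```python
import math
import random


def sample_exponential(rate, rng):
    """Draw one exponential sample by inverting the CDF at a uniform value."""
    u = rng.random()                   # uniform on [0, 1)
    return -math.log(1.0 - u) / rate   # inverse CDF; 1 - u lies in (0, 1]


rng = random.Random(0)
rate = 2.0
samples = [sample_exponential(rate, rng) for _ in range(20000)]
mean = sum(samples) / len(samples)
print(f"sample mean = {mean:.3f}, theoretical mean = {1 / rate:.3f}")
```

The same pattern works for any distribution whose CDF can be inverted in closed form or numerically; only the line computing the inverse changes.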

Exponential Distribution

In probability theory and statistics, the exponential distribution is the probability distribution of the time between events in a Poisson point process, i.e., a process in which events occur continuously and independently at a constant average rate. It is a particular case of the gamma distribution. It is the continuous analogue of the geometric distribution, and it has the key property of being memoryless. In addition to being used for the analysis of Poisson point processes it is found in various other contexts. The exponential distribution is not the same as the class of exponential families of distributions. This is a large class of probability distributions that includes the exponential distribution as one of its members, but also includes many other distributions, like the normal, binomial, gamma, and Poisson distributions.

Definitions

Probability density function

The probability density function (pdf) of an exponential distribution is : f(x;\lambda) = \begin \lam ...

Universal Hashing

In mathematics and computing, universal hashing (in a randomized algorithm or data structure) refers to selecting a hash function at random from a family of hash functions with a certain mathematical property (see definition below). This guarantees a low number of collisions in expectation, even if the data is chosen by an adversary. Many universal families are known (for hashing integers, vectors, strings), and their evaluation is often very efficient. Universal hashing has numerous uses in computer science, for example in implementations of hash tables, randomized algorithms, and cryptography.

Introduction

Assume we want to map keys from some universe U into m bins (labelled [m] = \{0, \dots, m-1\}). The algorithm will have to handle some data set S \subseteq U of |S| = n keys, which is not known in advance. Usually, the goal of hashing is to obtain a low number of collisions (keys from S that land in the same bin). A deterministic hash function cannot offer any guarantee in an adversarial sett ...
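
A minimal Python sketch of the idea, assuming the classic family h(x) = ((ax + b) mod p) mod m for integer keys smaller than a prime p. The names `random_universal_hash` and `P` and the two fixed keys are illustrative assumptions. The loop estimates how often two fixed, distinct keys collide when the function is drawn at random.

```python
import random

P = (1 << 61) - 1  # a prime larger than any key used below


def random_universal_hash(m, rng):
    """Draw h(x) = ((a*x + b) mod P) mod m with random a != 0 and random b."""
    a = rng.randrange(1, P)
    b = rng.randrange(0, P)
    return lambda x: ((a * x + b) % P) % m


rng = random.Random(0)
m = 10
trials = 20000
collisions = 0
for _ in range(trials):
    h = random_universal_hash(m, rng)  # a fresh random function each trial
    if h(12345) == h(67890):
        collisions += 1

collision_rate = collisions / trials
print(f"observed collision rate = {collision_rate:.4f}, 1/m = {1 / m:.4f}")
```

The point of the experiment is that the guarantee holds over the random choice of function, not over the keys, so it applies even to keys picked by an adversary who does not know which function was drawn.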

Random Permutation

A random permutation is a random ordering of a set of objects, that is, a permutation-valued random variable. The use of random permutations is often fundamental to fields that use randomized algorithms such as coding theory, cryptography, and simulation. A good example of a random permutation is the shuffling of a deck of cards: this is ideally a random permutation of the 52 cards.

Generating random permutations

Entry-by-entry brute force method

One method of generating a random permutation of a set of size ''n'' uniformly at random (i.e., each of the ''n''! permutations is equally likely to appear) is to generate a sequence by taking a random number between 1 and ''n'' sequentially, ensuring that there is no repetition, and interpreting this sequence (x_1, ..., x_n) as the permutation

: \begin{pmatrix} 1 & 2 & 3 & \cdots & n \\ x_1 & x_2 & x_3 & \cdots & x_n \\ \end{pmatrix},

shown here in two-line notation. This brute-force method will require occasional retries whenever the ra ...
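
The brute-force method described above can be sketched in Python as follows. The function name `random_permutation_bruteforce` is hypothetical, and the sketch redraws whenever a number repeats, which is the retry step the text mentions.

```python
import random


def random_permutation_bruteforce(n, rng):
    """Draw numbers from 1..n one at a time, redrawing on any repetition."""
    seen = set()
    perm = []
    while len(perm) < n:
        x = rng.randint(1, n)  # inclusive on both ends
        if x in seen:
            continue           # repeated value: retry this position
        seen.add(x)
        perm.append(x)
    return perm


rng = random.Random(0)
perm = random_permutation_bruteforce(10, rng)
print(perm)  # bottom row x_1, ..., x_n of the two-line notation
```

Retries become frequent near the end of the sequence, when most values are already taken, which is why shuffling algorithms that avoid rejection are usually preferred in practice.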