
Mean Percentage Error

In statistics, the mean percentage error (MPE) is the computed average of the percentage errors by which the forecasts of a model differ from the actual values of the quantity being forecast. The formula for the mean percentage error is:

: \text{MPE} = \frac{100\%}{n}\sum_{t=1}^n \frac{a_t - f_t}{a_t}

where ''a_t'' is the actual value of the quantity being forecast, ''f_t'' is the forecast, and ''n'' is the number of different times for which the variable is forecast. Because signed rather than absolute values of the forecast errors are used in the formula, positive and negative forecast errors can offset each other; as a result, the formula can be used as a measure of the bias in the forecasts. A disadvantage of this measure is that it is undefined whenever any actual value is zero.

See also:
* Percentage error
* Mean absolute percentage error
* Mean squared error
* Mean squared prediction error
* Minimum mean-square error
* Squared deviations
* Peak signal-to-noise ratio
* Root mean square deviation
* Errors and residu ...
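The MPE definition can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `mean_percentage_error` and the sample numbers are assumptions made for the example, and the result is expressed in percent.

```python
def mean_percentage_error(actual, forecast):
    """Return the MPE in percent (hypothetical helper, for illustration only)."""
    if len(actual) != len(forecast) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    if any(a == 0 for a in actual):
        # The measure is undefined as soon as one actual value is zero.
        raise ValueError("MPE is undefined when an actual value is zero")
    n = len(actual)
    # Signed errors are summed, so over- and under-forecasts can cancel.
    return 100.0 / n * sum((a - f) / a for a, f in zip(actual, forecast))


# Made-up data: one forecast is 10% too high, the other 10% too low.
actual = [100.0, 200.0]
forecast = [110.0, 180.0]
mpe = mean_percentage_error(actual, forecast)
```

With these made-up numbers the two relative errors have equal size and opposite sign, so they offset each other. This is why the MPE indicates bias rather than overall accuracy.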

Statistics

Statistics (from German: ''Statistik'', "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006) ''The Oxford Dictionary of Statistical Terms'', Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling as ...

Bias (statistics)

Statistical bias is a systematic tendency that causes results to differ from the facts. Bias can arise at several stages of data analysis, including the source of the data, the estimator chosen, and the way the data are analyzed. Bias may have a serious impact on results. For example, in a survey investigating people's buying habits, if the sample size is not large enough, the results may not be representative of the buying habits of all the people. That is, there may be discrepancies between the survey results and the actual results. Therefore, understanding the source of statistical bias can help to assess whether the observed results are close to the real results. Bias can be differentiated from other mistakes such as inaccuracy (instrument failure/inadequacy), lack of data, or mistakes in transcription (typos). Bias implies that the data selection may have been skewed by the collection criteria. Bias does not preclude the existence of any other mistakes. One may have a poo ...

Percentage Error

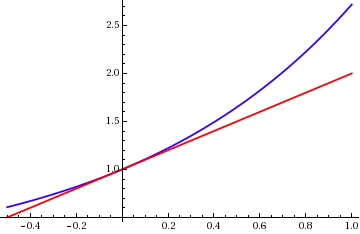

The approximation error in a data value is the discrepancy between an exact value and some ''approximation'' to it. This error can be expressed as an absolute error (the numerical amount of the discrepancy) or as a relative error (the absolute error divided by the data value). An approximation error can occur because of computing machine precision or measurement error (e.g. the length of a piece of paper is 4.53 cm but the ruler only allows you to estimate it to the nearest 0.1 cm, so you measure it as 4.5 cm). In the mathematical field of numerical analysis, the numerical stability of an algorithm indicates how the error is propagated by the algorithm.

Formal definition: One commonly distinguishes between the relative error and the absolute error. Given some value ''v'' and its approximation ''v''_approx, the absolute error is

: \epsilon = |v - v_\text{approx}|,

where the vertical bars denote the absolute value. If v \ne 0, the relative error is

: \eta = \frac{\epsilon}{|v|} = ...
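The two quantities can be sketched in Python using the paper-length example from the text (exact value 4.53 cm, measured as 4.5 cm). The function names are hypothetical and chosen only for this illustration.

```python
def absolute_error(v, v_approx):
    """Absolute error: the size of the discrepancy |v - v_approx|."""
    return abs(v - v_approx)


def relative_error(v, v_approx):
    """Relative error: the absolute error divided by |v|; requires v != 0."""
    if v == 0:
        raise ZeroDivisionError("relative error is undefined for v == 0")
    return absolute_error(v, v_approx) / abs(v)


# Paper length example: the exact length is 4.53 cm, the ruler reads 4.5 cm.
eps = absolute_error(4.53, 4.5)   # about 0.03 (cm)
eta = relative_error(4.53, 4.5)   # about 0.0066, i.e. roughly 0.66 %
```

The absolute error carries the unit of the measurement (cm here), while the relative error is dimensionless and can be read as a percentage.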

Mean Absolute Percentage Error

The mean absolute percentage error (MAPE), also known as mean absolute percentage deviation (MAPD), is a measure of prediction accuracy of a forecasting method in statistics. It usually expresses the accuracy as a ratio defined by the formula:

: \mbox{MAPE} = \frac{100\%}{n}\sum_{t=1}^n \left| \frac{A_t - F_t}{A_t} \right|

where A_t is the actual value and F_t is the forecast value. Their difference is divided by the actual value A_t. The absolute value of this ratio is summed for every forecasted point in time and divided by the number of fitted points n.

MAPE in regression problems: Mean absolute percentage error is commonly used as a loss function for regression problems and in model evaluation, because of its very intuitive interpretation in terms of relative error.

Definition: Consider a standard regression setting in which the data are fully described by a random pair Z=(X,Y) with values in \mathbb{R}^d\times\mathbb{R}, and i.i.d. copies (X_1, Y_1), ..., (X_n, Y_n) of (X,Y). Regression models aim at finding a good model ...
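As a rough sketch of the formula, the following Python function computes the MAPE in percent. The name `mean_absolute_percentage_error` and the sample data are assumptions for illustration; zero actual values are rejected because the ratio is undefined there.

```python
def mean_absolute_percentage_error(actual, forecast):
    """Return the MAPE in percent (hypothetical helper, for illustration only)."""
    if len(actual) != len(forecast) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    n = len(actual)
    # Taking the absolute value of each ratio prevents errors from cancelling.
    return 100.0 / n * sum(abs((a - f) / a) for a, f in zip(actual, forecast))


# Made-up data: one forecast is 10% too high, the other 10% too low.
mape = mean_absolute_percentage_error([100.0, 200.0], [110.0, 180.0])
```

Unlike a signed average of percentage errors, the two 10% misses here do not offset each other, so the result is about 10 percent.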

Mean Squared Error

In statistics, the mean squared error (MSE) or mean squared deviation (MSD) of an estimator (of a procedure for estimating an unobserved quantity) measures the average of the squares of the errors, that is, the average squared difference between the estimated values and the actual value. MSE is a risk function, corresponding to the expected value of the squared error loss. The fact that MSE is almost always strictly positive (and not zero) is because of randomness or because the estimator does not account for information that could produce a more accurate estimate. In machine learning, specifically empirical risk minimization, MSE may refer to the ''empirical'' risk (the average loss on an observed data set), as an estimate of the true MSE (the true risk: the average loss on the actual population distribution). The MSE is a measure of the quality of an estimator. As it is derived from the square of Euclidean distance, it is always a positive value that decreases as the error a ...
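The empirical version of this quantity (the average squared difference over an observed data set) can be sketched as follows. The function name and the numbers are illustrative assumptions.

```python
def mean_squared_error(actual, estimated):
    """Average of the squared differences between estimates and actual values."""
    if len(actual) != len(estimated) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((a - e) ** 2 for a, e in zip(actual, estimated)) / len(actual)


# Made-up data: two exact estimates and one that is off by 2.
mse = mean_squared_error([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])  # (0 + 0 + 4) / 3
```

Because every term is a square, the result is never negative, and it is zero only when all estimates match the actual values exactly.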

Mean Squared Prediction Error

In statistics, the mean squared prediction error or mean squared error of the predictions of a smoothing or curve fitting procedure is the expected value of the squared difference between the fitted values implied by the predictive function \widehat{g} and the values of the (unobservable) function ''g''. It is an inverse measure of the explanatory power of \widehat{g}, and can be used in the process of cross-validation of an estimated model. If the smoothing or fitting procedure has projection matrix (i.e., hat matrix) ''L'', which maps the observed values vector y to predicted values vector \hat{y} via \hat{y}=Ly, then

: \operatorname{MSPE}(L)=\operatorname{E}\left[\left( g(x_i)-\widehat{g}(x_i)\right)^2\right].

The MSPE can be decomposed into two terms: the mean of squared biases of the fitted values and the mean of variances of the fitted values:

: n\cdot\operatorname{MSPE}(L)=\sum_{i=1}^n\left(\operatorname{E}\left[\widehat{g}(x_i)\right]-g(x_i)\right)^2+\sum_{i=1}^n\operatorname{var}\left[\widehat{g}(x_i)\right].

Knowledge of ''g'' is r ...
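The split into squared bias and variance can be checked numerically at a single point x_i. The sketch below is an illustration under stated assumptions: it treats a small, made-up list of fitted values as the full distribution of the estimator at that point, so expectations become plain averages and the variance is the population variance. The function name is hypothetical.

```python
from statistics import fmean, pvariance


def decompose_squared_error(estimates, g_value):
    """Return (mean squared error, squared bias, variance) at one point.

    `estimates` plays the role of the distribution of the fitted value,
    `g_value` the role of the true function value g(x_i).
    """
    mean_est = fmean(estimates)
    mean_sq_error = fmean((g_value - e) ** 2 for e in estimates)
    squared_bias = (mean_est - g_value) ** 2
    variance = pvariance(estimates, mu=mean_est)
    return mean_sq_error, squared_bias, variance


# Made-up fitted values scattered around a true value of 2.0.
mse, bias2, var = decompose_squared_error([1.8, 2.1, 2.4], 2.0)
# Up to rounding, mse equals bias2 + var (about 0.07 = 0.01 + 0.06).
```

Summing this identity over the n points x_1, ..., x_n gives the two sums in the decomposition.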

Minimum Mean-square Error

In statistics and signal processing, a minimum mean square error (MMSE) estimator is an estimation method which minimizes the mean square error (MSE), which is a common measure of estimator quality, of the fitted values of a dependent variable. In the Bayesian setting, the term MMSE more specifically refers to estimation with quadratic loss function. In such a case, the MMSE estimator is given by the posterior mean of the parameter to be estimated. Since the posterior mean is cumbersome to calculate, the form of the MMSE estimator is usually constrained to be within a certain class of functions. Linear MMSE estimators are a popular choice since they are easy to use, easy to calculate, and very versatile. It has given rise to many popular estimators such as the Wiener–Kolmogorov filter and Kalman filter.

Motivation: The term MMSE more specifically refers to estimation in a Bayesian setting with quadratic cost function. The basic idea behind the Bayesian approach to estimation stems f ...
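The statement that the posterior mean minimizes the expected quadratic loss can be illustrated on a tiny discrete posterior. The distribution below is invented for the example, and the function names are hypothetical; the sketch only compares the posterior mean against a grid of alternative estimates.

```python
def posterior_mean(posterior):
    """Mean of a discrete posterior given as {parameter value: probability}."""
    return sum(theta * p for theta, p in posterior.items())


def expected_squared_loss(posterior, estimate):
    """Expected quadratic loss of `estimate` under the posterior."""
    return sum(p * (theta - estimate) ** 2 for theta, p in posterior.items())


# Made-up posterior over three parameter values (probabilities sum to 1).
posterior = {0.0: 0.2, 1.0: 0.5, 2.0: 0.3}
mmse_estimate = posterior_mean(posterior)
best_loss = expected_squared_loss(posterior, mmse_estimate)

# No candidate on a coarse grid achieves a lower expected loss.
candidates = [i / 10 for i in range(21)]
grid_losses = [expected_squared_loss(posterior, c) for c in candidates]
```

Any other estimate adds the squared distance to the posterior mean on top of `best_loss`, which is why the grid search cannot beat it.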

Squared Deviations

Squared deviations from the mean (SDM) result from squaring deviations. In probability theory and statistics, the definition of ''variance'' is either the expected value of the SDM (when considering a theoretical distribution) or its average value (for actual experimental data). Computations for ''analysis of variance'' involve the partitioning of a sum of SDM.

Background: An understanding of the computations involved is greatly enhanced by a study of the statistical value

: \operatorname{E}( X ^ 2 ),

where \operatorname{E} is the expected value operator. For a random variable X with mean \mu and variance \sigma^2,

: \sigma^2 = \operatorname{E}( X ^ 2 ) - \mu^2

(Mood & Graybill: ''An introduction to the Theory of Statistics'' (McGraw Hill)). Therefore,

: \operatorname{E}( X ^ 2 ) = \sigma^2 + \mu^2.

From the above, the following can be derived:

: \operatorname{E}\left( \sum\left( X ^ 2\right) \right) = n\sigma^2 + n\mu^2,

: \operatorname{E}\left( \left(\sum X \right)^ 2 \right) = n\sigma^2 + ...
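The relation between the second moment, the variance, and the mean can be checked on a small data set by treating it as a complete population, so that expectations become averages. The data and the function name are assumptions made for this sketch.

```python
from statistics import fmean, pvariance


def second_moment_check(data):
    """Return (mean of squares, variance + mean squared) for a population."""
    mu = fmean(data)
    sigma2 = pvariance(data, mu=mu)          # average squared deviation
    mean_of_squares = fmean(x * x for x in data)
    return mean_of_squares, sigma2 + mu ** 2


# Made-up population with mean 5 and variance 4.
lhs, rhs = second_moment_check([2, 4, 4, 4, 5, 5, 7, 9])
```

For this population both values come out as 29 (4 + 25), matching the identity.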

Peak Signal-to-noise Ratio

Peak signal-to-noise ratio (PSNR) is an engineering term for the ratio between the maximum possible power of a signal and the power of corrupting noise that affects the fidelity of its representation. Because many signals have a very wide dynamic range, PSNR is usually expressed as a logarithmic quantity using the decibel scale. PSNR is commonly used to quantify reconstruction quality for images and video subject to lossy compression.

Definition: PSNR is most easily defined via the mean squared error (''MSE''). Given a noise-free ''m''×''n'' monochrome image ''I'' and its noisy approximation ''K'', ''MSE'' is defined as

: \mathit{MSE} = \frac{1}{m\,n}\sum_{i=0}^{m-1}\sum_{j=0}^{n-1} [I(i,j) - K(i,j)]^2.

The PSNR (in dB) is defined as

: \begin{align} \mathit{PSNR} &= 10 \cdot \log_{10} \left( \frac{\mathit{MAX}_I^2}{\mathit{MSE}} \right) \\ &= 20 \cdot \log_{10} \left( \frac{\mathit{MAX}_I}{\sqrt{\mathit{MSE}}} \right) \\ &= 20 \cdot \log_{10}(\mathit{MAX}_I) - 10 \cdot \log_{10} (\mathit{MSE}). \end{align}

Here, ''MAX_I'' is the maximum possible pixel value of the image. When the pixels are represented using 8 bits per ...
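A minimal Python sketch of the definition, with images represented as nested lists of pixel values. The function names, the tiny 2×2 images, and the default `max_value=255` (the largest value an 8-bit pixel can hold) are assumptions for illustration; identical images are reported as infinite PSNR because the MSE is zero.

```python
import math


def image_mse(original, approx):
    """Mean squared error between two equally sized monochrome images."""
    m, n = len(original), len(original[0])
    total = sum(
        (original[i][j] - approx[i][j]) ** 2
        for i in range(m)
        for j in range(n)
    )
    return total / (m * n)


def psnr(original, approx, max_value=255):
    """PSNR in dB; `max_value` is the maximum possible pixel value."""
    mse = image_mse(original, approx)
    if mse == 0:
        return math.inf  # identical images: no noise power
    return 10 * math.log10(max_value ** 2 / mse)


# Made-up 2x2 images: two pixels differ by 5 grey levels.
I = [[0, 255], [255, 0]]
K = [[0, 250], [255, 5]]
value_db = psnr(I, K)
```

Smaller pixel differences reduce the MSE and therefore raise the PSNR, which is why higher values indicate a closer reconstruction.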

Root Mean Square Deviation

The root-mean-square deviation (RMSD) or root-mean-square error (RMSE) is a frequently used measure of the differences between values (sample or population values) predicted by a model or an estimator and the values observed. The RMSD represents the square root of the second sample moment of the differences between predicted values and observed values or the quadratic mean of these differences. These deviations are called ''residuals'' when the calculations are performed over the data sample that was used for estimation and are called ''errors'' (or prediction errors) when computed out-of-sample. The RMSD serves to aggregate the magnitudes of the errors in predictions for various data points into a single measure of predictive power. RMSD is a measure of accuracy used to compare forecasting errors of different models for a particular dataset, and not between datasets, as it is scale-dependent. RMSD is always non-negative, and a value of 0 (almost never achieved in practice) would ind ...
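As a sketch, the RMSD is the square root of the average squared difference between predicted and observed values. The function name and the data are assumptions made for this example.

```python
import math


def root_mean_square_deviation(predicted, observed):
    """Quadratic mean of the differences between predictions and observations."""
    if len(predicted) != len(observed) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_sq = sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(predicted)
    return math.sqrt(mean_sq)


# Made-up data: differences of 1, 0 and -2, so the mean square is 5/3.
rmsd = root_mean_square_deviation([2.0, 4.0, 6.0], [1.0, 4.0, 8.0])
```

The result is in the same units as the data, which is why it is scale-dependent and only comparable across models on the same dataset.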

Errors And Residuals In Statistics

In statistics and optimization, errors and residuals are two closely related and easily confused measures of the deviation of an observed value of an element of a statistical sample from its "true value" (not necessarily observable). The error of an observation is the deviation of the observed value from the true value of a quantity of interest (for example, a population mean). The residual is the difference between the observed value and the ''estimated'' value of the quantity of interest (for example, a sample mean). The distinction is most important in regression analysis, where the concepts are sometimes called the regression errors and regression residuals and where they lead to the concept of studentized residuals. In econometrics, "errors" are also called disturbances.

Introduction: Suppose there is a series of observations from a univariate distribution and we want to estimate the mean of that distribution (the so-called location model). In this case, the errors are th ...
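The distinction can be made concrete with the location model. In the sketch below, the population mean of 4.0 is a made-up value that would normally be unknown; the errors are measured against it, while the residuals are measured against the sample mean computed from the data.

```python
from statistics import fmean

# Hypothetical setup: in practice the true mean is not observable.
true_mean = 4.0
sample = [1.0, 2.0, 6.0]

sample_mean = fmean(sample)                       # estimate of the mean
errors = [x - true_mean for x in sample]          # deviations from the true value
residuals = [x - sample_mean for x in sample]     # deviations from the estimate
```

The residuals necessarily sum to zero because they are taken around the sample mean, whereas the errors carry no such constraint.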