Mark Carlson (engineer)

Mark A. Carlson (born 1955) is a software engineer known in the systems management industry for his work on management standards and technology. Carlson was the first employee of Redcape Policy Software, a small startup in Boulder, Colorado. Sun Microsystems acquired the company and its technology in 1998 and subsequently promoted it as Jiro, a common management framework based on Java and Jini. Carlson is probably best known for his work on the development of SMI-S (Storage Management Initiative Specification), a storage management standard of the Storage Networking Industry Association (SNIA); he chaired the group overseeing the specification for several years. The specification is now an ANSI and ISO standard. In addition to SMI-S, Carlson led the development of a reference implementation of the XAM standard, a next-generation storage interface with support for metadata, query, and compliance-based data retention of fixed content. Based on this work, he authored the Storage Industry Resource Domain Model, a model for Computer data s ...

Software Engineering

Software engineering is a systematic engineering approach to software development. A software engineer is a person who applies the principles of software engineering to design, develop, maintain, test, and evaluate computer software. The term "programmer" is sometimes used as a synonym, but may lack the connotations of engineering education or skills. Engineering techniques are used to inform the software development process, which involves the definition, implementation, assessment, measurement, management, change, and improvement of the software life cycle process itself. It relies heavily on software configuration management, which is about systematically controlling changes to the configuration and maintaining the integrity and traceability of the configuration and code throughout the system life cycle. Modern processes use software versioning.

Beginning in the 1960s, software engineering was seen as its own type of engineering. Additionally, the development of soft ...

Information Retrieval

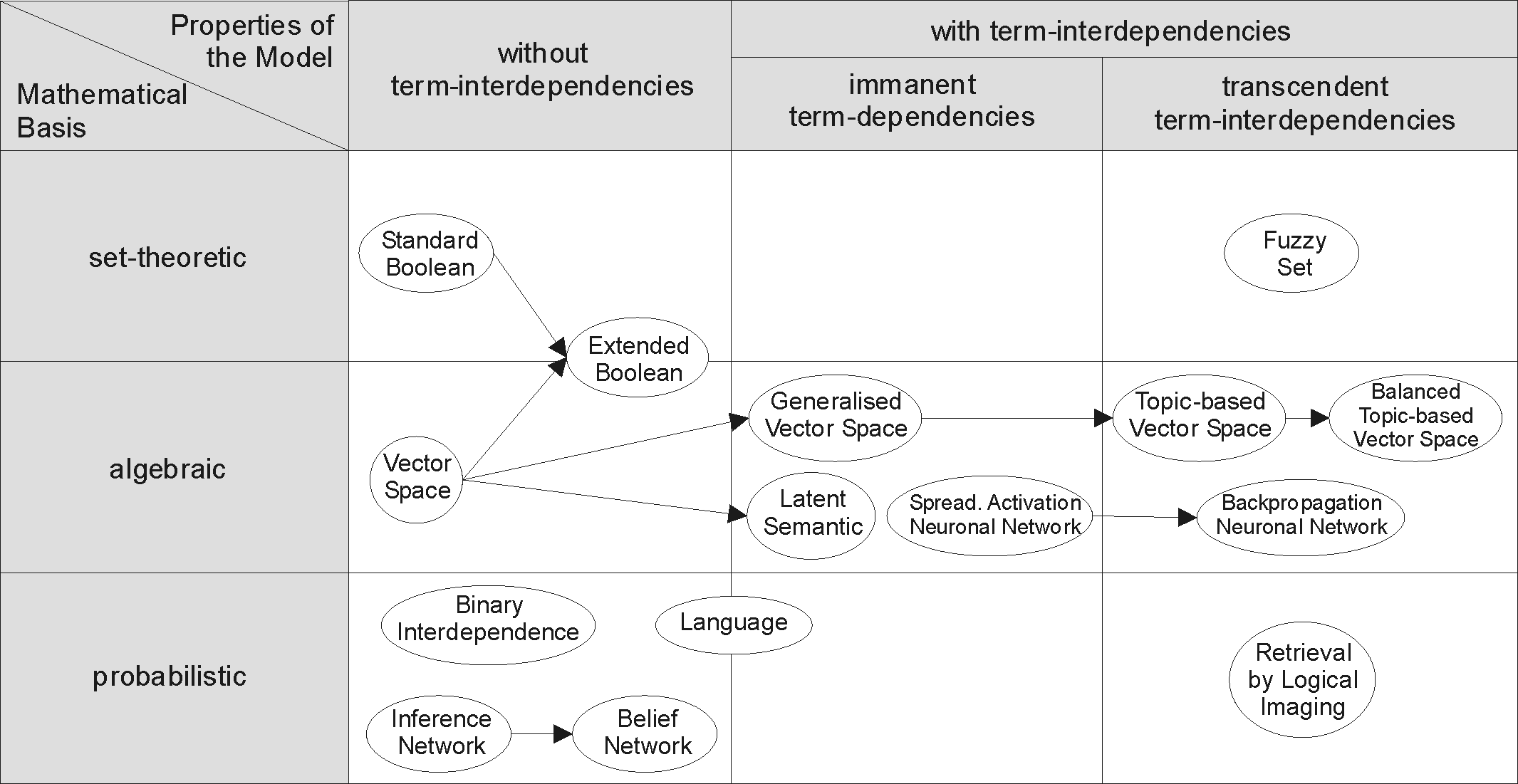

Information retrieval (IR) in computing and information science is the process of obtaining information system resources that are relevant to an information need from a collection of those resources. Searches can be based on full-text or other content-based indexing. Information retrieval is the science of searching for information in a document, searching for documents themselves, and also searching for the metadata that describes data, and for databases of texts, images or sounds. Automated information retrieval systems are used to reduce what has been called information overload. An IR system is a software system that provides access to books, journals and other documents, and that stores and manages those documents. Web search engines are the most visible IR applications.

An information retrieval process begins when a user or searcher enters a query into the system. Queries are formal statements of information needs, for example search strings in web search engines. In inf ...
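The query step described above can be illustrated with a toy inverted index. This is a minimal sketch under stated assumptions, not how any particular IR system or web search engine works: documents are tokenized by splitting on whitespace, and a query matches only the documents that contain every query term (boolean AND, with no ranking). The function names `build_index` and `search` and the sample documents are hypothetical.

```python
from collections import defaultdict


def build_index(docs: dict[str, str]) -> dict[str, set[str]]:
    """Map each lowercase term to the set of document IDs that contain it."""
    index: dict[str, set[str]] = defaultdict(set)
    for doc_id, text in docs.items():
        for term in text.lower().split():  # assumption: whitespace tokenization
            index[term].add(doc_id)
    return dict(index)


def search(index: dict[str, set[str]], query: str) -> set[str]:
    """Return IDs of documents containing every query term (boolean AND)."""
    terms = query.lower().split()
    if not terms:
        return set()
    # Copy the first posting set so the index itself is never modified.
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


docs = {
    "d1": "storage management standard",
    "d2": "metadata query and retention",
    "d3": "storage metadata model",
}
index = build_index(docs)
print(sorted(search(index, "storage metadata")))  # ['d3']
```

Real systems usually add ranking (for example by term frequency) rather than returning an unordered set; the boolean form is used here only because it is the shortest correct illustration of matching a query against an index.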

Living People

Related categories:
* Year of birth missing (living people) / Year of birth unknown
* Date of birth missing (living people) / Date of birth unknown
* Place of birth missing (living people) / Place of birth unknown
* Year of death missing / Year of death unknown
* Date of death missing / Date of death unknown
* Place of death missing / Place of death unknown
* Missing middle or first names

See also:
* Dead people
* Template:L, which generates this category or death years, and birth year and sort keys.

DMTF

Distributed Management Task Force (DMTF) is a 501(c)(6) nonprofit industry standards organization that creates open manageability standards spanning diverse emerging and traditional IT infrastructures, including cloud, virtualization, network, servers and storage. Member companies and alliance partners collaborate on standards to improve the interoperable management of information technologies. Based in Portland, Oregon, the DMTF is led by a board of directors representing technology companies including Broadcom Inc., Cisco, Dell Technologies, Hewlett Packard Enterprise, Intel Corporation, Lenovo, NetApp, Positivo Tecnologia S.A., and Verizon.

The organization was founded in 1992 as the Desktop Management Task Force; its first standard was the now-legacy Desktop Management Interface (DMI). As the organization evolved to address distributed management through additional standards, such as the Common Information Model (CIM), it changed its name to the Distributed Management Task ...

Cloud Storage

Cloud storage is a model of computer data storage in which digital data is stored in logical pools, said to be on "the cloud". The physical storage spans multiple servers (sometimes in multiple locations), and the physical environment is typically owned and managed by a hosting company. These cloud storage providers are responsible for keeping the data available and accessible, and the physical environment secured, protected, and running. People and organizations buy or lease storage capacity from the providers to store user, organization, or application data. Cloud storage services may be accessed through a colocated cloud computing service, a web service application programming interface (API), or by applications that use the API, such as cloud desktop storage, a cloud storage gateway or Web-based content management systems.

Cloud computing is believed to have been invented by Joseph Carl Robnett Licklider in the 1960s with his work on ARPANET to connect ...
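One way to picture a logical pool that spans several physical servers is to hash each object key to a starting server and store copies on that server and its successors. The sketch below assumes that simple hash-and-successor placement scheme, a fixed list of in-memory "servers", and a hypothetical `LogicalPool` class; actual cloud storage providers use their own, typically far more elaborate, placement and replication schemes.

```python
import hashlib


class LogicalPool:
    """Hypothetical logical pool that spreads objects over several servers."""

    def __init__(self, servers: list[str], replicas: int = 2):
        if not 1 <= replicas <= len(servers):
            raise ValueError("replicas must be between 1 and the number of servers")
        self.servers = servers
        self.replicas = replicas
        # Each "server" is modeled as an in-memory dict from object key to bytes.
        self.stores: dict[str, dict[str, bytes]] = {s: {} for s in servers}

    def _placement(self, key: str) -> list[str]:
        """Hash the key to a starting server, then take the next `replicas` servers."""
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        start = int.from_bytes(digest[:8], "big") % len(self.servers)
        return [self.servers[(start + i) % len(self.servers)] for i in range(self.replicas)]

    def put(self, key: str, data: bytes) -> None:
        # Write a copy to every server chosen for this key.
        for server in self._placement(key):
            self.stores[server][key] = data

    def get(self, key: str) -> bytes:
        # Try each replica in turn, so losing one copy does not lose the object.
        for server in self._placement(key):
            if key in self.stores[server]:
                return self.stores[server][key]
        raise KeyError(key)


pool = LogicalPool(["server-a", "server-b", "server-c"], replicas=2)
pool.put("reports/2024.csv", b"quarter,revenue\n")
print(pool.get("reports/2024.csv"))
```

The caller only ever names a key, never a server, which is the sense in which the pool is "logical": where the bytes physically live is decided by the placement function.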

Data Services

In the pursuit of knowledge, data is a collection of discrete values that convey information, describing quantity, quality, fact, statistics, other basic units of meaning, or simply sequences of symbols that may be further interpreted. A datum is an individual value in a collection of data. Data is usually organized into structures such as tables that provide additional context and meaning, and which may themselves be used as data in larger structures. Data may be used as variables in a computational process. Data may represent abstract ideas or concrete measurements. Data is commonly used in scientific research, economics, and virtually every other form of human organizational activity. Examples of data sets include price indices (such as the consumer price index), unemployment rates, literacy rates, and census data. In this context, data represents the raw facts and figures from which useful information can be extracted. Dat ...

Metadata

Metadata is "data that provides information about other data", but not the content of the data, such as the text of a message or the image itself. There are many distinct types of metadata, including:

* Descriptive metadata – the descriptive information about a resource. It is used for discovery and identification. It includes elements such as title, abstract, author, and keywords.
* Structural metadata – metadata about containers of data that indicates how compound objects are put together, for example, how pages are ordered to form chapters. It describes the types, versions, relationships, and other characteristics of digital materials.
* Administrative metadata – information to help manage a resource, such as resource type, permissions, and when and how it was created.
* Reference metadata – information about the contents and quality of statistical data.
* Statistical metadata – also called process data, may describe processes that collect, process, or produce st ...
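The separation between data and the metadata that describes it can be sketched as a record type. The class and field names below are hypothetical and cover only the descriptive, structural, and administrative types listed above; the `find_by_keyword` helper shows descriptive metadata being used for discovery without reading the content itself.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class DescriptiveMetadata:
    """Supports discovery and identification (hypothetical field set)."""
    title: str
    author: str
    keywords: list[str] = field(default_factory=list)


@dataclass
class StructuralMetadata:
    """Records how a compound object is put together, e.g. pages per chapter."""
    chapter_pages: dict[str, list[int]] = field(default_factory=dict)


@dataclass
class AdministrativeMetadata:
    """Helps manage the resource: its type, permissions, and creation date."""
    resource_type: str
    permissions: str
    created: date


@dataclass
class Resource:
    content: bytes  # the data itself; metadata describes it but is not part of it
    descriptive: DescriptiveMetadata
    structural: StructuralMetadata
    administrative: AdministrativeMetadata


def find_by_keyword(resources: list[Resource], keyword: str) -> list[str]:
    """Return titles of resources whose descriptive keywords include `keyword`."""
    return [r.descriptive.title for r in resources if keyword in r.descriptive.keywords]


book = Resource(
    content=b"...",
    descriptive=DescriptiveMetadata("Example Manual", "A. Author", ["storage", "standards"]),
    structural=StructuralMetadata({"Chapter 1": [1, 2, 3], "Chapter 2": [4, 5]}),
    administrative=AdministrativeMetadata("book", "read-only", date(2020, 1, 1)),
)
print(find_by_keyword([book], "storage"))  # ['Example Manual']
```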

Computer Data Storage

Computer data storage is a technology consisting of computer components and recording media that are used to retain digital data. It is a core function and fundamental component of computers. The central processing unit (CPU) of a computer is what manipulates data by performing computations. In practice, almost all computers use a memory hierarchy (also called a storage hierarchy), which puts fast but expensive and small storage options close to the CPU and slower but less expensive and larger options further away. Generally, fast volatile technologies (which lose data when powered off) are referred to as "memory", while slower persistent technologies are referred to as "storage". Even the first computer designs, Charles Babbage's Analytical Engine and Percy Ludgate's Analytical Machine, clearly distinguished between processing and memory (Babbage stored numbers as rotations of gears, while Ludgate stored numbers as displacements of rods in shuttles). Thi ...
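The idea behind a memory hierarchy, a small fast level consulted first and backed by a large slow level, can be modeled in miniature. The sketch below is a toy software model, not a description of how CPU caches or storage hardware actually work; it assumes a least-recently-used (LRU) eviction policy, and `TwoLevelStore` is a hypothetical name.

```python
from collections import OrderedDict


class TwoLevelStore:
    """Toy model: a small fast cache (LRU) in front of a large slow backing store."""

    def __init__(self, backing: dict[str, bytes], cache_capacity: int):
        self.backing = backing            # large, slow level
        self.capacity = cache_capacity    # small, fast level holds at most this many items
        self.cache: OrderedDict[str, bytes] = OrderedDict()
        self.hits = 0
        self.misses = 0

    def read(self, key: str) -> bytes:
        if key in self.cache:
            self.cache.move_to_end(key)   # mark as most recently used
            self.hits += 1
            return self.cache[key]
        self.misses += 1
        value = self.backing[key]         # slow path: fetch from the larger level
        self.cache[key] = value
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict the least recently used item
        return value


store = TwoLevelStore({"a": b"1", "b": b"2", "c": b"3"}, cache_capacity=2)
for key in ["a", "b", "a", "c", "b"]:
    store.read(key)
print(store.hits, store.misses)  # 1 4
```

The repeated read of `"a"` is served from the fast level, while the other reads fall through to the slow level; a hierarchy pays off when most accesses behave like that repeated read.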

Data Retention

Data retention defines the policies of persistent data and records management for meeting legal and business data archival requirements. Although the two are sometimes used interchangeably, data retention is not to be confused with the Data Protection Act 1998. The different data retention policies weigh legal and privacy concerns against economics and need-to-know concerns to determine the retention time, archival rules, data formats, and the permissible means of storage, access, and encryption.

In the field of telecommunications, data retention generally refers to the storage of call detail records (CDRs) of telephony and internet traffic and transaction data (IPDRs) by governments and commercial organisations. In the case of government data retention, the data that is stored is usually of telephone calls made and received, emails sent and received, and websites visited. Location data is also collected. The primary objective in government data retention is traffic analysis and mass surveillance. By a ...
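A retention policy ultimately has to answer a concrete question for each record: may it be disposed of yet? The sketch below assumes a hypothetical per-category table of retention periods and a legal-hold flag; the category names and periods are illustrative only and do not reflect any actual law or regulation.

```python
from datetime import date, timedelta

# Hypothetical retention periods per record category (illustrative values only).
RETENTION_PERIODS: dict[str, timedelta] = {
    "call_detail_record": timedelta(days=365),
    "email_log": timedelta(days=180),
}


def may_dispose(category: str, created: date, today: date, legal_hold: bool = False) -> bool:
    """Return True once a record's retention period has fully elapsed.

    Records under legal hold, and records in categories with no defined
    policy, are conservatively kept.
    """
    if legal_hold:
        return False
    period = RETENTION_PERIODS.get(category)
    if period is None:
        return False  # no policy defined: keep the record
    return today >= created + period


print(may_dispose("call_detail_record", date(2023, 1, 1), date(2024, 1, 1)))  # True
print(may_dispose("email_log", date(2023, 1, 1), date(2023, 3, 1)))  # False
```

Defaulting to "keep" for unknown categories is a design choice for the sketch; a real policy would also have to weigh the privacy concerns mentioned above, which can argue for deleting sooner rather than later.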

Systems Management

Systems management refers to enterprise-wide administration of distributed systems, including (and commonly in practice) computer systems. Systems management is strongly influenced by network management initiatives in telecommunications. Application performance management (APM) technologies are now a subset of systems management. Maximum productivity can be achieved more efficiently through event correlation, system automation and predictive analysis, all of which are now part of APM. Centralized management has a time and effort trade-off that is related to the size of the company, the expertise of the IT staff, and the amount of technology being used:

* For a small business startup with ten computers, automated centralized processes may take more time to learn and implement than simply doing the management work manually on each computer.
* A very large business with thousands of similar employee computers may clearly be able to save time and money by having IT staff ...
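The size-dependent trade-off above can be made concrete with a simple linear cost model: centralized management costs a fixed setup effort plus a small per-computer effort, while manual management costs a larger per-computer effort and no setup. The model and the figures in the usage example are assumptions for illustration, not measurements; `break_even_computers` and `cheaper_option` are hypothetical helpers.

```python
import math
from typing import Optional


def break_even_computers(setup_hours: float,
                         manual_hours_per_computer: float,
                         central_hours_per_computer: float) -> Optional[int]:
    """Smallest number of computers for which centralized management is cheaper.

    Assumed cost model: manual = n * m; centralized = setup + n * c.
    Centralized wins when n * (m - c) > setup. Returns None if it never wins.
    """
    saving = manual_hours_per_computer - central_hours_per_computer
    if saving <= 0:
        return None  # centralization never saves effort per computer
    return math.floor(setup_hours / saving) + 1


def cheaper_option(n: int, setup_hours: float, manual: float, central: float) -> str:
    """Compare total effort for n computers under the same assumed model."""
    manual_total = n * manual
    central_total = setup_hours + n * central
    return "centralized" if central_total < manual_total else "manual"


# Assumed figures: 40 h setup, 2 h per computer manually, 0.5 h per computer centralized.
print(break_even_computers(40, 2, 0.5))  # 27
print(cheaper_option(10, 40, 2, 0.5))    # manual
print(cheaper_option(1000, 40, 2, 0.5))  # centralized
```

Under these assumed figures the ten-computer startup is better off managing machines by hand, while the thousand-computer business saves effort by centralizing, matching the two cases described above.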