
List Of Runge–Kutta Methods

Runge–Kutta methods are methods for the numerical solution of the ordinary differential equation
: \frac{dy}{dt} = f(t, y).
Explicit Runge–Kutta methods take the form
: \begin{aligned} y_{n+1} &= y_n + h \sum_{i=1}^{s} b_i k_i \\ k_1 &= f(t_n, y_n), \\ k_2 &= f(t_n + c_2 h, y_n + h(a_{21} k_1)), \\ k_3 &= f(t_n + c_3 h, y_n + h(a_{31} k_1 + a_{32} k_2)), \\ &\;\;\vdots \\ k_i &= f\left(t_n + c_i h, y_n + h \sum_{j=1}^{i-1} a_{ij} k_j\right). \end{aligned}
Implicit methods of s stages take the more general form, with the solution to be found over all s stages:
: k_i = f\left(t_n + c_i h, y_n + h \sum_{j=1}^{s} a_{ij} k_j\right).
Each method listed on this page is defined by its Butcher tableau, which puts the coefficients of the method in a table as follows:
: \begin{array}{c|cccc} c_1 & a_{11} & a_{12} & \dots & a_{1s} \\ c_2 & a_{21} & a_{22} & \dots & a_{2s} \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ c_s & a_{s1} & a_{s2} & \dots & a_{ss} \\ \hline & b_1 & b_2 & \dots & b_s \end{array}
For ...
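To make the tableau notation concrete, here is a minimal Python sketch of one explicit Runge–Kutta step driven directly by the coefficients a_{ij}, b_i and c_i. The function name `explicit_rk_step` and the list-of-lists tableau layout are illustrative assumptions; the sketch assumes A is strictly lower triangular (an explicit method) and a scalar state y.

```python
def explicit_rk_step(f, t, y, h, A, b, c):
    """Advance y' = f(t, y) by one step of size h using an explicit Butcher tableau.

    A is a strictly lower-triangular s-by-s list of lists; b and c are length-s lists.
    """
    s = len(b)
    k = []
    for i in range(s):
        # Stage i only uses the already computed slopes k_1, ..., k_{i-1}.
        y_stage = y + h * sum(A[i][j] * k[j] for j in range(i))
        k.append(f(t + c[i] * h, y_stage))
    # Combine the stage slopes with the weights b_i.
    return y + h * sum(b_i * k_i for b_i, k_i in zip(b, k))


# Usage: the explicit midpoint method, c = (0, 1/2), a_21 = 1/2, b = (0, 1),
# applied to y' = y with y(0) = 1 and h = 0.1.
A_mid = [[0.0, 0.0], [0.5, 0.0]]
b_mid = [0.0, 1.0]
c_mid = [0.0, 0.5]
y1 = explicit_rk_step(lambda t, y: y, 0.0, 1.0, 0.1, A_mid, b_mid, c_mid)
print(y1)
```

Any explicit tableau plugs into the same loop; for example, forward Euler is the one-stage tableau A = [[0]], b = [1], c = [0].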

Rehuel Lobatto

Rehuel Lobatto (6 June 1797 – 9 February 1866) was a Dutch mathematician. The Gauss–Lobatto quadrature method is named after him, as are his variants on the Runge–Kutta methods for solving ODEs, and the Lobatto polynomials. He was the author of a great number of articles in scientific periodicals, as well as various schoolbooks. Lobatto was born in Amsterdam to a Portuguese Jewish family. As a schoolboy Lobatto already displayed remarkable talent for mathematics (Gotthard Deutsch, E. Slijper (1906), "LOBATTO, REHUEL", The Jewish Encyclopedia). He studied mathematics under Jean Henri van Swinden at the Athenaeum Illustre of Amsterdam, earning his BA in 1812, and then with Adolphe Quetelet (coediting a volume of "Correspondance Mathématique et Physique"). Working for the Dutch government, initially for the Ministry of the Interior, he became secretary of a statistical commission in 1831. From 1826 to 1849 he was editor of the "Jaarboekje van Lobatto" ...

Runge–Kutta Methods

In numerical analysis, the Runge–Kutta methods are a family of implicit and explicit iterative methods, which include the Euler method, used in temporal discretization for the approximate solutions of simultaneous nonlinear equations. These methods were developed around 1900 by the German mathematicians Carl Runge and Wilhelm Kutta.

The most widely known member of the Runge–Kutta family is generally referred to as "RK4", the "classic Runge–Kutta method" or simply as "the Runge–Kutta method". Let an initial value problem be specified as follows:
: \frac{dy}{dt} = f(t, y), \quad y(t_0) = y_0.
Here y is an unknown function (scalar or vector) of time t, which we would like to approximate; we are told that \frac{dy}{dt}, the rate at which y changes, is a function of t and of y itself. At the initial time t_0 the corresponding y value is y_0. The function f and the initial conditions t_0, y_0 are ...
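The snippet breaks off before stating the RK4 update, so the sketch below uses the standard classic RK4 coefficients (c = 0, 1/2, 1/2, 1 and weights 1/6, 1/3, 1/3, 1/6). It is a minimal scalar illustration, not a library routine; `rk4_step` and `solve_rk4` are hypothetical names chosen here.

```python
import math


def rk4_step(f, t, y, h):
    """One step of the classic fourth-order Runge–Kutta method (RK4)."""
    k1 = f(t, y)
    k2 = f(t + h / 2, y + h * k1 / 2)
    k3 = f(t + h / 2, y + h * k2 / 2)
    k4 = f(t + h, y + h * k3)
    return y + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)


def solve_rk4(f, t0, y0, t_end, n_steps):
    """Integrate from t0 to t_end using n_steps equal RK4 steps."""
    h = (t_end - t0) / n_steps
    t, y = t0, y0
    for _ in range(n_steps):
        y = rk4_step(f, t, y, h)
        t += h
    return y


# Usage: y' = y, y(0) = 1, whose exact solution at t = 1 is e.
for n in (10, 20, 40):
    err = abs(solve_rk4(lambda t, y: y, 0.0, 1.0, 1.0, n) - math.e)
    print(n, err)
```

Halving the step size should shrink the error by roughly a factor of 2^4 = 16, which is what fourth-order accuracy predicts.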

Gauss–Legendre Method

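In numerical analysis and scientific computing, the Gauss–Legendre methods are a family of numerical methods for ordinary differential equations. Gauss–Legendre methods are implicit Runge–Kutta methods. More specifically, they are collocation methods based on the points of Gauss–Legendre quadrature. The Gauss–Legendre method based on s points has order 2s. All Gauss–Legendre methods are A-stable.
The Gauss–Legendre method of order two is the implicit midpoint rule. Its Butcher tableau is:
: \begin{array}{c|c} \tfrac12 & \tfrac12 \\ \hline & 1 \end{array}
The Gauss–Legendre method of order four has Butcher tableau:
: \begin{array}{c|cc} \tfrac12 - \tfrac{\sqrt3}{6} & \tfrac14 & \tfrac14 - \tfrac{\sqrt3}{6} \\ \tfrac12 + \tfrac{\sqrt3}{6} & \tfrac14 + \tfrac{\sqrt3}{6} & \tfrac14 \\ \hline & \tfrac12 & \tfrac12 \end{array}
The Gauss–Legendre method of order six has Butcher tableau:
: \begin{array}{c|ccc} \tfrac12 - \tfrac{\sqrt{15}}{10} & \tfrac{5}{36} & \tfrac29 - \tfrac{\sqrt{15}}{15} & \tfrac{5}{36} - \tfrac{\sqrt{15}}{30} \\ \tfrac12 & \tfrac{5}{36} + \tfrac{\sqrt{15}}{24} & \tfrac29 & \tfrac{5}{36} - \tfrac{\sqrt{15}}{24} \\ \tfrac12 + \tfrac{\sqrt{15}}{10} & \tfrac{5}{36} + \tfrac{\sqrt{15}}{30} & \tfrac29 + \tfrac{\sqrt{15}}{15} & \tfrac{5}{36} \\ \hline & \tfrac{5}{18} & \tfrac49 & \tfrac{5}{18} \end{array}
The computational cost of higher-order Gauss–Legendre methods is usually excessive, and thus they are rarely used.

Gauss–Legendre Runge–Kutta (GLRK) methods solve an ordinary differential equation \dot{x} = f(x) with x(0) = x_0. The distinguishing feature of GLRK is the estimation of x(h) - x_0 = \int_0^h f(x(t))\, dt wi ...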
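As an illustration of how an implicit tableau is used, here is a minimal sketch of the two-stage, order-four Gauss–Legendre method for a scalar ODE. It assumes a non-stiff problem: the coupled stage equations are solved by plain fixed-point iteration, which converges only when h times the Lipschitz constant of f is small. Practical implicit solvers usually use Newton-type iterations instead. The names `gauss_legendre4_step` and `solve_gl4` are hypothetical.

```python
import math

# Butcher tableau of the two-stage, order-four Gauss–Legendre method.
S3 = math.sqrt(3.0)
A = [[0.25, 0.25 - S3 / 6.0],
     [0.25 + S3 / 6.0, 0.25]]
B = [0.5, 0.5]
C = [0.5 - S3 / 6.0, 0.5 + S3 / 6.0]


def gauss_legendre4_step(f, t, y, h, tol=1e-14, max_iter=100):
    """One step of the 2-stage Gauss–Legendre method for a scalar ODE y' = f(t, y).

    The implicit stage equations k_i = f(t + c_i h, y + h * sum_j a_ij k_j)
    are solved by fixed-point iteration (assumed to converge for small h).
    """
    k = [f(t, y), f(t, y)]  # initial guess: the slope at the start of the step
    for _ in range(max_iter):
        k_new = [f(t + C[i] * h, y + h * sum(A[i][j] * k[j] for j in range(2)))
                 for i in range(2)]
        converged = max(abs(k_new[i] - k[i]) for i in range(2)) < tol
        k = k_new
        if converged:
            break
    return y + h * sum(B[i] * k[i] for i in range(2))


def solve_gl4(f, t0, y0, t_end, n_steps):
    """Integrate from t0 to t_end with n_steps equal Gauss–Legendre steps."""
    h = (t_end - t0) / n_steps
    t, y = t0, y0
    for _ in range(n_steps):
        y = gauss_legendre4_step(f, t, y, h)
        t += h
    return y


# Usage: y' = -y, y(0) = 1; the exact value at t = 1 is exp(-1).
print(abs(solve_gl4(lambda t, y: -y, 0.0, 1.0, 1.0, 10) - math.exp(-1)))
```

Halving h should reduce the error by about 16, matching order 2s = 4 for s = 2 stages.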

Numerical Differential Equations


Numerical may refer to:
* Number
* Numerical digit
* Numerical analysis, the study of algorithms that use numerical approximation (as opposed to symbolic manipulations) for the problems of mathematical analysis (as distinguished from discrete mathematics). It is the study of ...

Springer-Verlag

Springer Science+Business Media, commonly known as Springer, is a German multinational publishing company of books, e-books and peer-reviewed journals in science, humanities, technical and medical (STM) publishing. Originally founded in 1842 in Berlin, it expanded internationally in the 1960s, and through mergers in the 1990s and a sale to venture capitalists it fused with Wolters Kluwer and eventually became part of Springer Nature in 2015. Springer has major offices in Berlin, Heidelberg, Dordrecht, and New York City. Julius Springer founded Springer-Verlag in Berlin in 1842, and his son Ferdinand Springer grew it from a small firm of 4 employees into Germany's then second-largest academic publisher with 65 staff in 1872 ("Chronology", Springer Science+Business Media). In 1964, Springer expanded its business internationally, ...

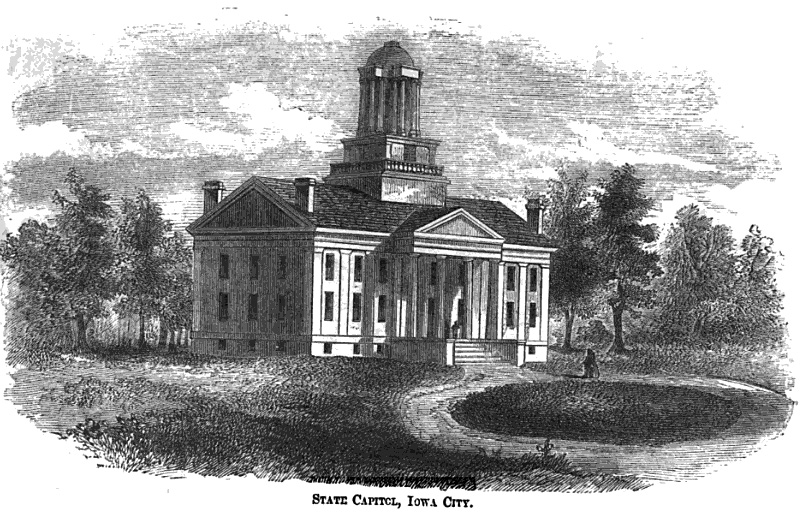

University Of Iowa

The University of Iowa (U of I, UIowa, or Iowa) is a public research university in Iowa City, Iowa, United States. Founded in 1847, it is the oldest and largest university in the state. The University of Iowa is organized into 12 colleges offering more than 200 areas of study and 7 professional degrees. On an urban 1,880-acre campus on the banks of the Iowa River, the University of Iowa is classified by the Carnegie Classification of Institutions of Higher Education among "R1: Doctoral Universities – Very high research activity". In fiscal year 2021, research expenditures at Iowa totaled $818 million. The university was the original developer of the Master of Fine Arts degree, and it operates the Iowa Writers' Workshop, whose alumni include 17 of the university's 46 Pulitzer Prize winners. Iowa is a member of the Association of American Universities and the Universities Research Association. Among public universities in the United States, UI was the first to beco ...

Discontinuous Collocation Method

Continuous functions are of utmost importance in mathematics, functions and applications. However, not all functions are continuous. If a function is not continuous at a limit point (also called "accumulation point" or "cluster point") of its domain, one says that it has a discontinuity there. The set of all points of discontinuity of a function may be a discrete set, a dense set, or even the entire domain of the function. The oscillation of a function at a point quantifies these discontinuities as follows:
* in a removable discontinuity, the distance that the value of the function is off by is the oscillation;
* in a jump discontinuity, the size of the jump is the oscillation (assuming that the value at the point lies between these limits of the two sides);
* in an essential discontinuity (a.k.a. infinite discontinuity), oscillation measures the failure of a limit to exist ...
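The oscillation at x_0 is the limit, as the neighbourhood shrinks, of the spread between the largest and smallest function values near x_0. The sketch below only approximates that limit: it samples a single small neighbourhood of assumed radius `eps` on a finite grid, so it can miss features narrower than the grid spacing. The function names are hypothetical and chosen for illustration.

```python
def oscillation(f, x0, eps=1e-6, n=1001):
    """Estimate the oscillation of f at x0 as max - min of f on a sampled
    neighbourhood [x0 - eps, x0 + eps]; n is odd so that x0 itself is sampled."""
    xs = [x0 + eps * (2 * k / (n - 1) - 1) for k in range(n)]
    values = [f(x) for x in xs]
    return max(values) - min(values)


def removable(x):
    # x**2 everywhere except a misplaced value 1 at x = 0 (the limit there is 0).
    return 1.0 if x == 0 else x * x


def jump(x):
    # Unit step: left limit 0, right limit 1, value 1 at the point itself.
    return 0.0 if x < 0 else 1.0


print(oscillation(removable, 0.0))  # distance the value is off by
print(oscillation(jump, 0.0))       # size of the jump
print(oscillation(lambda x: x, 0.0))  # continuous: shrinks with eps
```

For the removable case the estimate reflects how far f(0) = 1 is from the limit 0; for the step it reflects the jump of size 1; for a continuous function it tends to 0 as `eps` shrinks.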

Trapezoidal Rule (Differential Equations)

In numerical analysis and scientific computing, the trapezoidal rule is a numerical method to solve ordinary differential equations derived from the trapezoidal rule for computing integrals. The trapezoidal rule is an implicit second-order method, which can be considered as both a Runge–Kutta method and a linear multistep method.

Suppose that we want to solve the differential equation y' = f(t, y). The trapezoidal rule is given by the formula
: y_{n+1} = y_n + \tfrac12 h \Big( f(t_n, y_n) + f(t_{n+1}, y_{n+1}) \Big),
where h = t_{n+1} - t_n is the step size.
This is an implicit method: the value y_{n+1} appears on both sides of the equation, and to actually calculate it, we have to solve an equation which will usually be nonlinear. One possible method for solving this equation is Newton's method. We can use the Euler method to get a fairly good estimate for the solution, which can be used as the initial guess of Newton's method. Cutting short, using only the guess from Euler's method is ...
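The Newton-plus-Euler-predictor procedure described above can be sketched for a scalar ODE as follows. This is a minimal illustration under stated assumptions: the caller supplies the partial derivative `dfdy` of f with respect to y, and the names `trapezoidal_step` and `solve_trapezoidal` are hypothetical.

```python
def trapezoidal_step(f, dfdy, t, y, h, tol=1e-12, max_iter=50):
    """One step of the trapezoidal rule for a scalar ODE y' = f(t, y).

    The implicit equation is solved with Newton's method, started from an
    explicit Euler prediction. dfdy(t, y) is the partial derivative of f in y.
    """
    t_next = t + h
    f_n = f(t, y)
    z = y + h * f_n  # explicit Euler predictor, used as Newton's initial guess
    for _ in range(max_iter):
        # Residual G(z) = z - y - h/2 * (f(t_n, y_n) + f(t_{n+1}, z)); we want G(z) = 0.
        g = z - y - 0.5 * h * (f_n + f(t_next, z))
        dg = 1.0 - 0.5 * h * dfdy(t_next, z)  # G'(z)
        step = g / dg
        z -= step
        if abs(step) < tol:
            break
    return z


def solve_trapezoidal(f, dfdy, t0, y0, t_end, n_steps):
    """Integrate from t0 to t_end with n_steps equal trapezoidal steps."""
    h = (t_end - t0) / n_steps
    t, y = t0, y0
    for _ in range(n_steps):
        y = trapezoidal_step(f, dfdy, t, y, h)
        t += h
    return y


# Usage: y' = -y^2, y(0) = 1, exact solution y(t) = 1 / (1 + t), so y(1) = 0.5.
y_end = solve_trapezoidal(lambda t, y: -y * y, lambda t, y: -2.0 * y, 0.0, 1.0, 1.0, 100)
print(y_end)
```

For a linear f, Newton's method converges in a single iteration, so the step reproduces the closed form y_{n+1} = y_n (1 + h\lambda/2) / (1 - h\lambda/2) for y' = \lambda y.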

Collocation Method

In mathematics, a collocation method is a method for the numerical solution of ordinary differential equations, partial differential equations and integral equations. The idea is to choose a finite-dimensional space of candidate solutions (usually polynomials up to a certain degree) and a number of points in the domain (called collocation points), and to select that solution which satisfies the given equation at the collocation points.

Suppose that the ordinary differential equation
: y'(t) = f(t, y(t)), \quad y(t_0) = y_0,
is to be solved over the interval [t_0, t_0 + h]. Choose n points 0 \le c_1 < c_2 < \dots < c_n \le 1. The corresponding (polynomial) collocation method approximates the solution y by the polynomial p of degree n which satisfies the initial condition p(t_0) = y_0, and the differential equation p'(t_k) = f(t_k, p(t_k)) at all collocation points t_k = t_0 + c_k h for k = 1, \dots, n.
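For the linear test equation y' = \lambda y the collocation conditions above become a small linear system for the polynomial coefficients. The sketch below assumes t_0 = 0 and writes p(t) = y_0 + \sum_{j=1}^{n} a_j t^j, so the initial condition holds automatically; `collocation_step_linear` is a hypothetical name. With the single point c_1 = 1/2 it reproduces the implicit midpoint rule, and with the two Gauss points it reproduces the order-four Gauss–Legendre method, whose stability function is the (2,2) Padé approximant of e^z.

```python
import numpy as np


def collocation_step_linear(lam, y0, h, c):
    """Collocation step for y' = lam * y on [0, h] with t_0 = 0 (assumed).

    Returns p(h), where p(t) = y0 + sum_j a_j t^j satisfies
    p'(t_k) = lam * p(t_k) at the collocation points t_k = c_k * h.
    """
    n = len(c)
    t = np.asarray(c, dtype=float) * h     # collocation points t_k
    powers = np.arange(1, n + 1)           # exponents j = 1, ..., n
    # Row k: sum_j a_j (j t_k^(j-1) - lam t_k^j) = lam * y0.
    M = powers * t[:, None] ** (powers - 1) - lam * t[:, None] ** powers
    rhs = np.full(n, lam * y0)
    a = np.linalg.solve(M, rhs)
    return y0 + np.sum(a * h ** powers)


# Usage: compare against the known stability functions with z = lam * h.
lam, h = -1.0, 0.5
z = lam * h
midpoint = collocation_step_linear(lam, 1.0, h, [0.5])
gauss2 = collocation_step_linear(lam, 1.0, h, [0.5 - np.sqrt(3) / 6, 0.5 + np.sqrt(3) / 6])
print(midpoint, (1 + z / 2) / (1 - z / 2))
print(gauss2, (1 + z / 2 + z**2 / 12) / (1 - z / 2 + z**2 / 12))
```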

Gaussian Quadrature

In numerical analysis, an n-point Gaussian quadrature rule, named after Carl Friedrich Gauss, is a quadrature rule constructed to yield an exact result for polynomials of degree 2n - 1 or less by a suitable choice of the nodes x_i and weights w_i for i = 1, \dots, n. The modern formulation using orthogonal polynomials was developed by Carl Gustav Jacobi in 1826. The most common domain of integration for such a rule is taken as [-1, 1], so the rule is stated as
: \int_{-1}^{1} f(x)\,dx \approx \sum_{i=1}^{n} w_i f(x_i),
which is exact for polynomials of degree 2n - 1 or less. This exact rule is known as the Gauss–Legendre quadrature rule. The quadrature rule will only be an accurate approximation to the integral above if f(x) is well-approximated by a polynomial of degree 2n - 1 or less on [-1, 1].

The Gauss–Legendre quadrature rule is not typically used for integrable functions with endpoint singularities. Instead, if the integrand can be written as f(x) = \left(1 - x\right)^\alpha \left(1 + x\right) ...
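The exactness property can be checked numerically. The sketch below takes nodes and weights from NumPy's `numpy.polynomial.legendre.leggauss` and maps them from [-1, 1] to a general interval [a, b] with the usual affine change of variables; the wrapper name `gauss_legendre_integrate` is a hypothetical helper, not a NumPy function.

```python
import numpy as np
from numpy.polynomial.legendre import leggauss


def gauss_legendre_integrate(f, a, b, n):
    """Approximate the integral of f over [a, b] with the n-point Gauss–Legendre rule.

    f must accept a NumPy array of evaluation points.
    """
    x, w = leggauss(n)                       # nodes and weights on [-1, 1]
    # Affine map t = (b - a)/2 * x + (a + b)/2 from [-1, 1] onto [a, b].
    t = 0.5 * (b - a) * x + 0.5 * (a + b)
    return 0.5 * (b - a) * np.sum(w * f(t))


# Usage: with n = 3 the rule is exact up to degree 2n - 1 = 5, but not for x^6.
print(gauss_legendre_integrate(lambda x: x**4, -1.0, 1.0, 3), 2 / 5)
print(gauss_legendre_integrate(lambda x: x**6, -1.0, 1.0, 3), 2 / 7)
print(gauss_legendre_integrate(np.sin, 0.0, np.pi, 5), 2.0)
```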

L-stability

Within mathematics regarding differential equations, L-stability is a special case of A-stability, a property of Runge–Kutta methods for solving ordinary differential equations. A method is L-stable if it is A-stable and \phi(z) \to 0 as z \to \infty, where \phi is the stability function of the method (the stability function of a Runge–Kutta method is a rational function and thus the limit as z \to +\infty is the same as the limit as z \to -\infty). L-stable methods are in general very good at integrating stiff equations, that is, differential equations for which certain numerical methods for solving the equation are numerically unstable unless the step size is taken to be extremely small.
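A quick numerical comparison shows why the limit matters. The sketch uses two standard one-step methods not discussed in the text above: backward Euler, with stability function \phi(z) = 1/(1 - z), which is L-stable, and the trapezoidal rule (equivalently the implicit midpoint rule on linear problems), with \phi(z) = (1 + z/2)/(1 - z/2), which is A-stable but tends to -1. The helper `stiff_decay` and its default parameters are illustrative assumptions.

```python
def r_backward_euler(z):
    """Stability function of backward Euler: tends to 0 as |z| grows (L-stable)."""
    return 1.0 / (1.0 - z)


def r_trapezoidal(z):
    """Stability function of the trapezoidal rule: tends to -1 (A-stable, not L-stable)."""
    return (1.0 + z / 2.0) / (1.0 - z / 2.0)


def stiff_decay(R, lam=-1000.0, h=0.1, n_steps=10, y0=1.0):
    """Apply a one-step method with stability function R to the stiff problem y' = lam * y."""
    y = y0
    for _ in range(n_steps):
        y = R(lam * h) * y
    return y


for z in (-1.0, -100.0, -1e6):
    print(z, r_backward_euler(z), r_trapezoidal(z))

# The exact solution e^(lam * t) is essentially 0 at t = 1.
print(stiff_decay(r_backward_euler), stiff_decay(r_trapezoidal))
```

Backward Euler damps the stiff component almost immediately, while the trapezoidal rule keeps a slowly decaying, sign-alternating error because |\phi(z)| stays close to 1 for large negative z.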

Gauss–Legendre Quadrature

In numerical analysis, Gauss–Legendre quadrature is a form of Gaussian quadrature for approximating the definite integral of a function. For integrating over the interval [-1, 1], the rule takes the form:
: \int_{-1}^{1} f(x)\,dx \approx \sum_{i=1}^{n} w_i f(x_i)
where
* n is the number of sample points used,
* w_i are quadrature weights, and
* x_i are the roots of the nth Legendre polynomial.
This choice of quadrature weights w_i and quadrature nodes x_i is the unique choice that allows the quadrature rule to integrate degree 2n - 1 polynomials exactly.

Many algorithms have been developed for computing Gauss–Legendre quadrature rules. The Golub–Welsch algorithm presented in 1969 reduces the computation of the nodes and weights to an eigenvalue problem which is solved by the QR algorithm. This algorithm was popular, but significantly more efficient algorithms exist. Algorithms based on the Newton–Raphson method are able to compute quadrature rules for signific ...
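The Golub–Welsch idea can be sketched in a few lines: the nodes are the eigenvalues of the symmetric tridiagonal Jacobi matrix built from the Legendre three-term recurrence, and each weight is 2 times the square of the first component of the corresponding normalized eigenvector. As a plainly named substitution, this sketch calls NumPy's dense symmetric eigensolver `numpy.linalg.eigh` rather than a hand-written QR iteration, and it is an O(n^3) illustration rather than an efficient implementation. `golub_welsch_legendre` is a hypothetical name.

```python
import numpy as np
from numpy.polynomial.legendre import leggauss


def golub_welsch_legendre(n):
    """Nodes and weights of the n-point Gauss–Legendre rule via the Golub–Welsch idea."""
    k = np.arange(1, n)
    # Off-diagonal entries of the Jacobi matrix for the orthonormal Legendre polynomials.
    beta = k / np.sqrt(4.0 * k**2 - 1.0)
    J = np.diag(beta, 1) + np.diag(beta, -1)
    nodes, vectors = np.linalg.eigh(J)       # eigenvalues in ascending order
    # Weight = (integral of 1 over [-1, 1]) * (first eigenvector component)^2.
    weights = 2.0 * vectors[0, :] ** 2
    return nodes, weights


# Usage: cross-check against NumPy's own Gauss–Legendre rule.
for n in (2, 5, 10):
    x, w = golub_welsch_legendre(n)
    x_ref, w_ref = leggauss(n)
    print(n, np.max(np.abs(x - x_ref)), np.max(np.abs(w - w_ref)))
```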