
Li Cai (psychometrician)

Li Cai (born c. 1980) is a statistician and quantitative psychologist. He is a professor of Advanced Quantitative Methodology at the UCLA Graduate School of Education and Information Studies, with a joint appointment in the quantitative area of the UCLA Department of Psychology. He is also Director of the National Center for Research on Evaluation, Standards, and Student Testing and Managing Partner at Vector Psychometric Group. He invented the Metropolis–Hastings Robbins–Monro algorithm for inference in high-dimensional latent variable models that had been intractable with existing methods. The algorithm was recognized as a mathematically rigorous breakthrough against the "curse of dimensionality" and led to numerous top-tier publications and national awards.

Career

A native of Nanjing, China, and an alumnus of the Nanjing Foreign Language School, Cai earned a bachelor's degree with distinction from Nanjing University in 2001. He completed his undergraduate studies in three year ...
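The entry names the Metropolis–Hastings Robbins–Monro (MH-RM) algorithm without describing it. As a rough sketch only, and not Cai's published implementation, the toy Python below shows the general pattern of alternating a Metropolis–Hastings imputation of latent variables with a Robbins–Monro update of a parameter. The model (z_i ~ N(mu, 1), y_i | z_i ~ N(z_i, 1)), the function name `mh_rm_toy`, the gain schedule, and the scaling of the score by the complete-data information n are all illustrative assumptions; the published method is far more general (for example, multidimensional latent traits and a matrix-valued gain).

```python
import math
import random


def mh_rm_toy(y, n_iter=2000, burn_in=200, step=1.0, seed=0):
    """Toy MH-RM-style estimate of mu in: z_i ~ N(mu, 1), y_i | z_i ~ N(z_i, 1)."""
    rng = random.Random(seed)
    n = len(y)
    mu = 0.0          # starting value for the structural parameter
    z = list(y)       # start each latent variable at its observation

    def log_post(zi, yi, m):
        # log p(z_i | y_i, mu) up to a constant: N(mu, 1) prior times N(z_i, 1) likelihood
        return -0.5 * (zi - m) ** 2 - 0.5 * (yi - zi) ** 2

    for k in range(1, n_iter + 1):
        # Imputation: one random-walk Metropolis-Hastings move per latent variable
        for i in range(n):
            proposal = z[i] + rng.gauss(0.0, step)
            log_ratio = log_post(proposal, y[i], mu) - log_post(z[i], y[i], mu)
            if rng.random() < math.exp(min(0.0, log_ratio)):
                z[i] = proposal
        # Complete-data score for mu, divided by the complete-data information (n)
        score = sum(zi - mu for zi in z) / n
        # Robbins-Monro update: constant gain during burn-in, then gain 1 / (k - burn_in)
        gain = 1.0 if k <= burn_in else 1.0 / (k - burn_in)
        mu += gain * score
    return mu


data_rng = random.Random(42)
y = [data_rng.gauss(2.0, math.sqrt(2.0)) for _ in range(200)]
print(round(mh_rm_toy(y), 3), round(sum(y) / len(y), 3))  # the two numbers should be close
```

In this toy model the marginal distribution is y_i ~ N(mu, 2), so the maximum-likelihood estimate of mu is simply the sample mean of y, which gives an easy check on the stochastic iteration.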

Nanjing

Nanjing, alternately romanized as Nanking, is the capital of Jiangsu province of the People's Republic of China. It is a sub-provincial city, a megacity, and the second-largest city in the East China region. The city has 11 districts and a total recorded population of 9,314,685. Situated in the Yangtze River Delta region, Nanjing has a prominent place in Chinese history and culture, having served as the capital of various Chinese dynasties, kingdoms and republican governments from the 3rd century to 1949. It has thus long been a major center of culture, education, research, politics, economy, transport networks and tourism, and it is home to one of the world's largest inland ports. As one of the fifteen sub-provincial cities in the People's Republic of China's administrative structure, it enjoys jurisdictional and economic autonomy only slightly less than that of a province. Nanjing has be ...

National Science Foundation

The National Science Foundation (NSF) is an independent agency of the United States government that supports fundamental research and education in all the non-medical fields of science and engineering. Its medical counterpart is the National Institutes of Health. With an annual budget of about $8.3 billion (fiscal year 2020), the NSF funds approximately 25% of all federally supported basic research conducted by the United States' colleges and universities. In some fields, such as mathematics, computer science, economics, and the social sciences, the NSF is the major source of federal backing. The NSF's director and deputy director are appointed by the President of the United States and confirmed by the United States Senate, whereas the 24 president-appointed members of the National Science Board (NSB) do not require Senate confirmation. The director and deputy director are responsible for administration, planning, budgeting and day-to-day operations of the foundation, while t ...

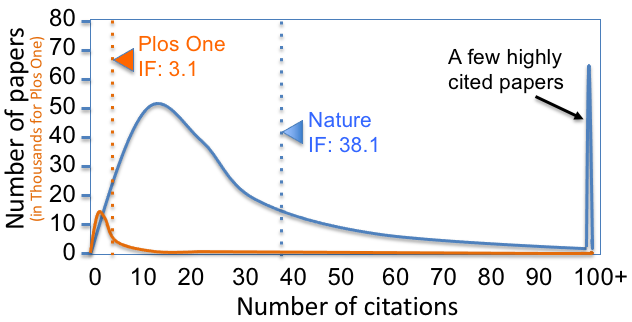

Impact Factor

The impact factor (IF) or journal impact factor (JIF) of an academic journal is a scientometric index calculated by Clarivate that reflects the yearly mean number of citations of articles published in the last two years in a given journal, as indexed by Clarivate's Web of Science. As a journal-level metric, it is frequently used as a proxy for the relative importance of a journal within its field; journals with higher impact factor values are regarded as more important, or as carrying more prestige in their respective fields, than those with lower values. Although it is frequently used by universities and funding bodies to decide on promotions and research proposals, it has come under attack for distorting good scientific practices.

History

The impact factor was devised by Eugene Garfield, the founder of the Institute for Scientific Information (ISI) in Philadelphia. Impact factors began to be calculated yearly starting from 1975 for journals listed in the Journal Citation Rep ...
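As a worked illustration of the two-year calculation, assuming the usual definition (citations received in year Y by items the journal published in Y-1 and Y-2, divided by the number of citable items it published in those two years), the small helper below computes the ratio. The function name and the journal figures are hypothetical.

```python
def two_year_impact_factor(citations_in_year: int, citable_items: int) -> float:
    """Citations received in year Y by items published in Y-1 and Y-2,
    divided by the number of citable items published in Y-1 and Y-2."""
    if citable_items <= 0:
        raise ValueError("citable_items must be positive")
    return citations_in_year / citable_items


# Hypothetical journal: 120 citable items in Y-2, 80 in Y-1, cited 500 times during year Y
print(two_year_impact_factor(500, 120 + 80))  # 2.5
```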

Curse of Dimensionality

The curse of dimensionality refers to various phenomena that arise when analyzing and organizing data in high-dimensional spaces that do not occur in low-dimensional settings such as the three-dimensional physical space of everyday experience. The expression was coined by Richard E. Bellman when considering problems in dynamic programming. Dimensionally cursed phenomena occur in domains such as numerical analysis, sampling, combinatorics, machine learning, data mining and databases. The common theme of these problems is that when the dimensionality increases, the volume of the space increases so fast that the available data become sparse. In order to obtain a reliable result, the amount of data needed often grows exponentially with the dimensionality. Also, organizing and searching data often relies on detecting areas where objects form groups with similar properties; in high dimensional data, however, all objects appear to be sparse and dissimilar in many ways, which prevents co ...
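Two of the effects described above can be seen numerically in a short sketch: the number of cells in a grid of fixed per-axis resolution grows exponentially with dimension, and distances from a query point to random points become nearly equal, so "near" and "far" lose their contrast. The function names, the uniform unit-hypercube data, and the sample sizes are illustrative assumptions.

```python
import math
import random


def cells_needed(bins_per_axis: int, dim: int) -> int:
    # A grid with a fixed resolution per axis has bins_per_axis ** dim cells, so even
    # one sample per cell requires a number of samples exponential in dim.
    return bins_per_axis ** dim


def relative_contrast(dim: int, n_points: int = 200, seed: int = 0) -> float:
    # (farthest - nearest) / nearest Euclidean distance from a random query point to
    # uniform random points in the unit hypercube; it shrinks as dim grows.
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    dists = [math.dist(query, [rng.random() for _ in range(dim)]) for _ in range(n_points)]
    return (max(dists) - min(dists)) / min(dists)


for d in (2, 10, 100, 300):
    print(f"dim={d}: cells at 10 bins/axis = {cells_needed(10, d):.1e}, "
          f"relative contrast = {relative_contrast(d):.2f}")
```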

Rigour

Rigour (British English) or rigor (American English; see spelling differences) describes a condition of stiffness or strictness. These constraints may be environmentally imposed, such as "the rigours of famine"; logically imposed, such as mathematical proofs which must maintain consistent answers; or socially imposed, such as the process of defining ethics and law.

Etymology

"Rigour" comes to English through Old French (13th c., Modern French rigueur) meaning "stiffness", which itself is based on the Latin rigorem (nominative rigor) "numbness, stiffness, hardness, firmness; roughness, rudeness", from the verb rigere "to be stiff". The noun was frequently used to describe a condition of strictness or stiffness, which arises from a situation or constraint either chosen or experienced passively. For example, the title of the book ...

Expectation–maximization Algorithm

In statistics, an expectation–maximization (EM) algorithm is an iterative method to find (local) maximum likelihood or maximum a posteriori (MAP) estimates of parameters in statistical models, where the model depends on unobserved latent variables. The EM iteration alternates between performing an expectation (E) step, which creates a function for the expectation of the log-likelihood evaluated using the current estimate for the parameters, and a maximization (M) step, which computes parameters maximizing the expected log-likelihood found on the E step. These parameter estimates are then used to determine the distribution of the latent variables in the next E step.

History

The EM algorithm was explained and given its name in a classic 1977 paper by Arthur Dempster, Nan Laird, and Donald Rubin. They pointed out that the method had been "proposed many times in special circumstances" by earlier authors. One of the earliest is the gene-counting method for estimating allele ...
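To make the E and M steps concrete, here is a minimal sketch for one common special case, a two-component univariate Gaussian mixture, where the latent variable is each point's unobserved component label. The function names, starting values, and fixed iteration count are illustrative assumptions rather than part of the general algorithm.

```python
import math
import random


def normal_pdf(x, mean, sd):
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2.0 * math.pi))


def em_two_gaussians(xs, n_iter=100):
    """EM for a two-component 1-D Gaussian mixture; returns (w, m1, m2, s1, s2)."""
    # Starting values: equal weights, means at the data extremes, unit spreads
    w, m1, m2, s1, s2 = 0.5, min(xs), max(xs), 1.0, 1.0
    n = len(xs)
    for _ in range(n_iter):
        # E step: responsibility r_i = P(component 1 | x_i) under the current parameters
        r = []
        for x in xs:
            a = w * normal_pdf(x, m1, s1)
            b = (1.0 - w) * normal_pdf(x, m2, s2)
            r.append(a / (a + b))
        # M step: weighted maximum-likelihood updates that maximize the expected log-likelihood
        n1 = sum(r)
        n2 = n - n1
        w = n1 / n
        m1 = sum(ri * x for ri, x in zip(r, xs)) / n1
        m2 = sum((1.0 - ri) * x for ri, x in zip(r, xs)) / n2
        s1 = math.sqrt(sum(ri * (x - m1) ** 2 for ri, x in zip(r, xs)) / n1)
        s2 = math.sqrt(sum((1.0 - ri) * (x - m2) ** 2 for ri, x in zip(r, xs)) / n2)
    return w, m1, m2, s1, s2


rng = random.Random(1)
xs = [rng.gauss(-2.0, 1.0) for _ in range(600)] + [rng.gauss(3.0, 1.0) for _ in range(400)]
w, m1, m2, s1, s2 = em_two_gaussians(xs)
print(round(w, 2), round(m1, 2), round(m2, 2))  # roughly 0.6, -2, 3
```

Because EM only guarantees a local maximum, the starting values matter; placing the initial means at the data extremes is one simple heuristic for well-separated clusters.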

Computational Complexity Theory

In theoretical computer science and mathematics, computational complexity theory focuses on classifying computational problems according to their resource usage, and relating these classes to each other. A computational problem is a task solved by a computer. Such a problem is solvable by mechanical application of mathematical steps, such as an algorithm. A problem is regarded as inherently difficult if its solution requires significant resources, whatever the algorithm used. The theory formalizes this intuition by introducing mathematical models of computation to study these problems and quantifying their computational complexity, i.e., the amount of resources needed to solve them, such as time and storage. Other measures of complexity are also used, such as the amount of communication (used in communication complexity), the number of gates in a circuit (used in circuit complexity) and the number of processors (used in parallel computing). One of the roles of computationa ...

High-dimensional Statistics

In statistical theory, the field of high-dimensional statistics studies data whose dimension is larger than typically considered in classical multivariate analysis. The area arose owing to the emergence of many modern data sets in which the dimension of the data vectors may be comparable to, or even larger than, the sample size, so that justification for the use of traditional techniques, often based on asymptotic arguments with the dimension held fixed as the sample size increased, was lacking.

Examples

Parameter estimation in linear models

The most basic statistical model for the relationship between a covariate vector $x \in \mathbb{R}^p$ and a response variable $y \in \mathbb{R}$ is the linear model

$$y = x^\top \beta + \epsilon,$$

where $\beta \in \mathbb{R}^p$ is an unknown parameter vector, and $\epsilon$ is random noise with mean zero and variance $\sigma^2$. Given independent responses $Y_1, \ldots, Y_n$, with corresponding covariates $x_1, \ldots, x_n$, from this model, we can form the r ...
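For the linear model above, the classical least-squares estimate solves the normal equations $(X^\top X)\hat\beta = X^\top Y$, where $X$ stacks the covariates $x_i^\top$ as rows. The plain-Python sketch below (the name `ols` and the tiny data set are hypothetical) solves them directly and refuses when $p > n$, since $X^\top X$ is then singular; this is one concrete way the classical approach breaks down in the high-dimensional regime.

```python
def ols(X, Y):
    """Least-squares estimate: solve (X^T X) beta = X^T Y by Gauss-Jordan elimination."""
    n, p = len(X), len(X[0])
    if p > n:
        raise ValueError("p > n: X^T X is singular, so beta is not uniquely determined")
    # Augmented normal-equation matrix [X^T X | X^T Y], one row per coefficient
    A = [[sum(X[i][j] * X[i][k] for i in range(n)) for k in range(p)]
         + [sum(X[i][j] * Y[i] for i in range(n))]
         for j in range(p)]
    for col in range(p):
        # Partial pivoting: bring the largest remaining entry in this column to the diagonal
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        if abs(A[pivot][col]) < 1e-12:
            raise ValueError("X^T X is numerically singular (collinear covariates)")
        A[col], A[pivot] = A[pivot], A[col]
        # Eliminate this column from every other row
        for r in range(p):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[j][p] / A[j][j] for j in range(p)]


X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
Y = [1.0, 2.0, 3.0]            # generated exactly by beta = (1, 2) with no noise
print(ols(X, Y))               # approximately [1.0, 2.0]
```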

Statistical Inference

Statistical inference is the process of using data analysis to infer properties of an underlying probability distribution (Upton, G., Cook, I. (2008) Oxford Dictionary of Statistics, OUP). Inferential statistical analysis infers properties of a population, for example by testing hypotheses and deriving estimates. It is assumed that the observed data set is sampled from a larger population. Inferential statistics can be contrasted with descriptive statistics. Descriptive statistics is solely concerned with properties of the observed data, and it does not rest on the assumption that the data come from a larger population. In machine learning, the term inference is sometimes used instead to mean "make a prediction, by evaluating an already trained model"; in this context, inferring properties of the model is referred to as training or learning (rather than inference), and using a model for ...

Stochastic Approximation

Stochastic approximation methods are a family of iterative methods typically used for root-finding problems or for optimization problems. The recursive update rules of stochastic approximation methods can be used, among other things, for solving linear systems when the collected data is corrupted by noise, or for approximating extreme values of functions which cannot be computed directly, but only estimated via noisy observations. In a nutshell, stochastic approximation algorithms deal with a function of the form $f(\theta) = \operatorname{E}_{\xi}[F(\theta,\xi)]$, which is the expected value of a function depending on a random variable $\xi$. The goal is to recover properties of such a function $f$ without evaluating it directly. Instead, stochastic approximation algorithms use random samples of $F(\theta,\xi)$ to efficiently approximate properties of $f$ such as zeros or extrema. Recently, stochastic approximations have found extensive applications in the fields of statistics and machine lea ...
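As a small illustration of the root-finding case, a Robbins–Monro recursion $\theta_{n+1} = \theta_n + a_n F(\theta_n, \xi_n)$ can estimate a quantile: with $F(\theta,\xi) = \alpha - \mathbf{1}\{\xi \le \theta\}$, the root of $f(\theta) = \alpha - P(\xi \le \theta)$ is the $\alpha$-quantile of $\xi$, which the loop below approaches using only samples of $\xi$, never $f$ itself. The function name, the N(3, 1) distribution, and the gain $a_n = c/(n + n_0)$ with its constants are assumptions chosen for this example.

```python
import random
from statistics import NormalDist


def rm_quantile(sample, alpha, n_steps=20000, c=5.0, n0=10, theta0=0.0, seed=0):
    """Robbins-Monro estimate of the alpha-quantile, i.e. the root of
    f(theta) = alpha - P(xi <= theta), using one noisy draw per step."""
    rng = random.Random(seed)
    theta = theta0
    for n in range(1, n_steps + 1):
        xi = sample(rng)
        noisy_f = alpha - (1.0 if xi <= theta else 0.0)   # F(theta, xi), unbiased for f(theta)
        theta += c / (n + n0) * noisy_f                    # decreasing gain a_n = c / (n + n0)
    return theta


# Example: 0.9-quantile of N(3, 1), compared with the exact value
estimate = rm_quantile(lambda r: r.gauss(3.0, 1.0), alpha=0.9)
exact = NormalDist(mu=3.0, sigma=1.0).inv_cdf(0.9)
print(round(estimate, 3), round(exact, 3))
```

The gains satisfy the usual conditions (their sum diverges while the sum of their squares converges); the offset $n_0$ merely keeps the first few steps from overshooting.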

Metropolis–Hastings Algorithm

In statistics and statistical physics, the Metropolis–Hastings algorithm is a Markov chain Monte Carlo (MCMC) method for obtaining a sequence of random samples from a probability distribution from which direct sampling is difficult. This sequence can be used to approximate the distribution (e.g. to generate a histogram) or to compute an integral (e.g. an expected value). Metropolis–Hastings and other MCMC algorithms are generally used for sampling from multi-dimensional distributions, especially when the number of dimensions is high. For single-dimensional distributions, there are usually other methods (e.g. adaptive rejection sampling) that can directly return independent samples from the distribution, and these are free from the problem of autocorrelated samples that is inherent in MCMC methods.

History

The algorithm is named after Nicholas Metropolis and W. K. Hastings. Metropolis was the first author to appear on the list of authors of the 1953 article Equation of ...
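A minimal random-walk Metropolis sketch is shown below; it is the special case of Metropolis–Hastings with a symmetric Gaussian proposal, so the Hastings proposal-ratio term cancels. It needs only an unnormalized log density. The function name, the N(3, 2^2) target, the proposal scale, and the burn-in length are assumptions for illustration.

```python
import math
import random


def random_walk_metropolis(log_target, x0, n_samples, step, burn_in=1000, seed=0):
    """Draw n_samples from the density proportional to exp(log_target(x))."""
    rng = random.Random(seed)
    x, lp = x0, log_target(x0)
    samples = []
    for t in range(burn_in + n_samples):
        proposal = x + rng.gauss(0.0, step)       # symmetric proposal: q(x' | x) = q(x | x')
        lp_prop = log_target(proposal)
        # Accept with probability min(1, pi(x') / pi(x)); otherwise keep the current state
        if rng.random() < math.exp(min(0.0, lp_prop - lp)):
            x, lp = proposal, lp_prop
        if t >= burn_in:
            samples.append(x)                     # successive samples are autocorrelated
    return samples


# Unnormalized log density of N(3, 2^2): the normalizing constant is never needed
draws = random_walk_metropolis(lambda x: -((x - 3.0) ** 2) / 8.0, x0=0.0, n_samples=50000, step=5.0)
mean = sum(draws) / len(draws)
var = sum((d - mean) ** 2 for d in draws) / len(draws)
print(round(mean, 2), round(var, 2))   # should be near 3 and 4
```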

UCLA Graduate School of Education and Information Studies

The UCLA School of Education and Information Studies (UCLA Ed & IS) is one of the academic and professional schools at the University of California, Los Angeles. Located in Los Angeles, California, the school combines two distinguished departments whose research and doctoral training programs are committed to expanding the range of knowledge in education, information science, and associated disciplines. Established in 1881, the school is the oldest unit at UCLA, having been founded as a normal school before the university was established. It was incorporated into the University of California in 1919. The school offers a wide variety of doctoral and master's degrees, including the MA, MEd, MLIS, EdD, and PhD, as well as professional certificates and credentials in education and information studies. It also hosts visiting scholars and a number of research centers, institutes, and programs. Ed & IS recently initiated an undergraduate major in Education & Social Transformatio ...