
Least-squares Spectral Analysis

Least-squares spectral analysis (LSSA) is a method of estimating a frequency spectrum, based on a least squares fit of sinusoids to data samples, similar to Fourier analysis. Fourier analysis, the most used spectral method in science, generally boosts long-periodic noise in long gapped records; LSSA mitigates such problems. Unlike with Fourier analysis, data need not be equally spaced to use LSSA. LSSA is also known as the Vaníček method or the Gauss-Vaníček method after Petr Vaníček, and as the Lomb method or the Lomb–Scargle periodogram, based on the contributions of Nicholas R. Lomb and, independently, Jeffrey D. Scargle.

Historical background: The close connections between Fourier analysis, the periodogram, and least-squares fitting of sinusoids have long been known. Most developments, however, are restricted to complete data sets of equally spaced samples. In 1963, Freek J. M. Barning of Mathematisch Centrum, Amsterdam, handled unequally spaced data by similar tec ...
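The idea of fitting sinusoids to unequally spaced samples can be illustrated with a minimal sketch. It is not the Vaníček or Lomb–Scargle formulation itself: it simply fits `a*cos + b*sin` at each trial frequency by solving the 2×2 normal equations and reports the fraction of the data's sum of squares that the fit explains. The function name `lssa_spectrum`, the sample times, and the assumption that the data are already zero-mean are all illustrative choices.

```python
import math

def lssa_spectrum(times, values, freqs):
    """For each trial frequency, least-squares fit a*cos + b*sin to the data
    and return the fraction of the data's sum of squares explained by the fit.
    Assumes the data are zero-mean (no constant term is fitted)."""
    total = sum(v * v for v in values)
    powers = []
    for f in freqs:
        c = [math.cos(2 * math.pi * f * t) for t in times]
        s = [math.sin(2 * math.pi * f * t) for t in times]
        cc = sum(ci * ci for ci in c)
        ss = sum(si * si for si in s)
        cs = sum(ci * si for ci, si in zip(c, s))
        yc = sum(v * ci for v, ci in zip(values, c))
        ys = sum(v * si for v, si in zip(values, s))
        det = cc * ss - cs * cs
        if abs(det) < 1e-12:          # degenerate basis at this frequency
            powers.append(0.0)
            continue
        a = (yc * ss - ys * cs) / det  # solve the 2x2 normal equations
        b = (ys * cc - yc * cs) / det
        powers.append((a * yc + b * ys) / total)
    return powers

# Unequally spaced sample times (hypothetical) of a 1.5 Hz sinusoid.
times = [0.0, 0.13, 0.31, 0.38, 0.62, 0.71, 0.99, 1.2, 1.34, 1.58,
         1.77, 1.9, 2.15, 2.4, 2.52, 2.81, 3.05, 3.3, 3.41, 3.76]
values = [math.sin(2 * math.pi * 1.5 * t) for t in times]
freqs = [0.5, 1.0, 1.5, 2.0, 2.5]

powers = lssa_spectrum(times, values, freqs)
best = freqs[powers.index(max(powers))]
print(best, powers)
```

No resampling onto a regular grid is needed; the irregular times enter only through the cosine and sine columns.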

Linear Least Squares

Linearity is the property of a mathematical relationship (''function'') that can be graphically represented as a straight line. Linearity is closely related to ''proportionality''. Examples in physics include rectilinear motion, the linear relationship of voltage and current in an electrical conductor (Ohm's law), and the relationship of mass and weight. By contrast, more complicated relationships are ''nonlinear''. Generalized for functions in more than one dimension, linearity means the property of a function of being compatible with addition and scaling, also known as the superposition principle. The word linear comes from Latin ''linearis'', "pertaining to or resembling a line".

In mathematics, a linear map or linear function ''f''(''x'') is a function that satisfies the two properties:

* Additivity: ''f''(''x'' + ''y'') = ''f''(''x'') + ''f''(''y'').
* Homogeneity of degree 1: ''f''(α''x'') = α''f''(''x'') for all α.

These properties are known as the superposition principle. In this definition, ''x'' is not necessarily a real n ...
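Additivity and homogeneity of degree 1 can be spot-checked numerically. The sketch below is only a finite test on chosen sample points, not a proof of linearity; the helper name `is_linear` and the sample values are illustrative.

```python
def is_linear(f, samples, scalars, tol=1e-9):
    """Spot-check additivity and homogeneity of f on the given points."""
    additive = all(
        abs(f(x + y) - (f(x) + f(y))) <= tol
        for x in samples for y in samples
    )
    homogeneous = all(
        abs(f(a * x) - a * f(x)) <= tol
        for a in scalars for x in samples
    )
    return additive and homogeneous

samples = [-2.0, 0.5, 1.0, 4.0]
scalars = [-1.0, 0.0, 2.0, 3.5]

proportional = is_linear(lambda x: 3 * x, samples, scalars)   # passes both checks
affine = is_linear(lambda x: 3 * x + 1, samples, scalars)     # fails additivity
print(proportional, affine)
```

The second function graphs as a straight line, but the constant offset breaks the superposition principle, so it is not linear in this stricter sense.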

Dot Product

In mathematics, the dot product or scalar product is an algebraic operation that takes two equal-length sequences of numbers (usually coordinate vectors), and returns a single number. (The term ''scalar product'' means literally "product with a scalar as a result". It is also used sometimes for other symmetric bilinear forms, for example in a pseudo-Euclidean space.) In Euclidean geometry, the dot product of the Cartesian coordinates of two vectors is widely used. It is often called the inner product (or rarely projection product) of Euclidean space, even though it is not the only inner product that can be defined on Euclidean space (see Inner product space for more). Algebraically, the dot product is the sum of the products of the corresponding entries of the two sequences of numbers. Geometrically, it is the product of the Euclidean magnitudes of the two vectors and the cosine of the angle between them. These definitions are equivalent when using Cartesian coordinates. In mo ...
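The algebraic and geometric definitions can be connected in a few lines. This is a minimal sketch with illustrative function names: `dot` implements the sum of products, and `angle_between` recovers the angle from the geometric form.

```python
import math

def dot(a, b):
    """Algebraic definition: sum of products of corresponding entries."""
    if len(a) != len(b):
        raise ValueError("sequences must have equal length")
    return sum(x * y for x, y in zip(a, b))

def angle_between(a, b):
    """Geometric definition rearranged: cos(theta) = a.b / (|a| |b|)."""
    norm_a = math.sqrt(dot(a, a))
    norm_b = math.sqrt(dot(b, b))
    return math.acos(dot(a, b) / (norm_a * norm_b))

product = dot([1, 2, 3], [4, 5, 6])        # 1*4 + 2*5 + 3*6
right_angle = angle_between([1, 0], [0, 1])
print(product, right_angle)
```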

Decibel

The decibel (symbol: dB) is a relative unit of measurement equal to one tenth of a bel (B). It expresses the ratio of two values of a power or root-power quantity on a logarithmic scale. Two signals whose levels differ by one decibel have a power ratio of 10^{1/10} (approximately 1.26) or root-power ratio of 10^{1/20} (approximately 1.12). The unit expresses a relative change or an absolute value. In the latter case, the numeric value expresses the ratio of a value to a fixed reference value; when used in this way, the unit symbol is often suffixed with letter codes that indicate the reference value. For example, for the reference value of 1 volt, a common suffix is "V" (e.g., "20 dBV"). Two principal types of scaling of the decibel are in common use. When expressing a power ratio, it is defined as ten times the logarithm in base 10. That is, a change in ''power'' by a factor of 10 corresponds to a 10 dB change in level. When expressing root-power quantities, a change in ''ampl ...
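The two scalings can be written out directly. In this sketch the function names are illustrative; the factor of 20 for root-power (amplitude) quantities follows from power being proportional to the square of amplitude.

```python
import math

def power_ratio_to_db(ratio):
    """Level difference in dB for a ratio of two power quantities."""
    return 10 * math.log10(ratio)

def amplitude_ratio_to_db(ratio):
    """Level difference in dB for a ratio of two root-power quantities."""
    return 20 * math.log10(ratio)

def db_to_power_ratio(db):
    """Inverse of power_ratio_to_db."""
    return 10 ** (db / 10)

tenfold_power = power_ratio_to_db(10)          # factor of 10 in power
tenfold_amplitude = amplitude_ratio_to_db(10)  # factor of 10 in amplitude
one_db = db_to_power_ratio(1)                  # power ratio for a 1 dB step
print(tenfold_power, tenfold_amplitude, one_db)
```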

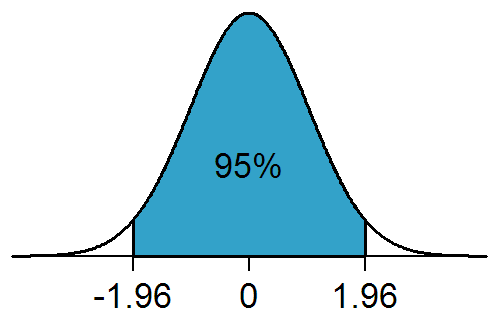

Significance Level

In statistical hypothesis testing, a result has statistical significance when it is very unlikely to have occurred given the null hypothesis (simply by chance alone). More precisely, a study's defined significance level, denoted by \alpha, is the probability of the study rejecting the null hypothesis, given that the null hypothesis is true; and the ''p''-value of a result, ''p'', is the probability of obtaining a result at least as extreme, given that the null hypothesis is true. The result is statistically significant, by the standards of the study, when p \le \alpha. The significance level for a study is chosen before data collection, and is typically set to 5% or much lower, depending on the field of study. In any experiment or observation that involves drawing a sample from a population, there is always the possibility that an observed effect would have occurred due to sampling error alone. But if the ''p''-value of an observed effect is less than (or equal to) the significa ...
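A small worked case shows how p \le \alpha is applied. The sketch below assumes a hypothetical coin-flip experiment with the null hypothesis of a fair coin, and computes a one-sided exact binomial ''p''-value; the function name `binomial_p_value` and the counts are illustrative.

```python
from math import comb

def binomial_p_value(k, n, p0=0.5):
    """One-sided p-value: probability of at least k successes in n trials
    when the null hypothesis (success probability p0) is true."""
    return sum(comb(n, i) * p0**i * (1 - p0) ** (n - i) for i in range(k, n + 1))

alpha = 0.05                         # chosen before looking at the data

p_nine = binomial_p_value(9, 10)     # 9 heads in 10 flips
p_seven = binomial_p_value(7, 10)    # 7 heads in 10 flips

significant_nine = p_nine <= alpha
significant_seven = p_seven <= alpha
print(p_nine, significant_nine, p_seven, significant_seven)
```

Nine heads in ten flips gives a ''p''-value of 11/1024, which is below 0.05; seven heads gives 176/1024, which is not.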

Time Series

In mathematics, a time series is a series of data points indexed (or listed or graphed) in time order. Most commonly, a time series is a sequence taken at successive equally spaced points in time. Thus it is a sequence of discrete-time data. Examples of time series are heights of ocean tides, counts of sunspots, and the daily closing value of the Dow Jones Industrial Average. A time series is very frequently plotted via a run chart (which is a temporal line chart). Time series are used in statistics, signal processing, pattern recognition, econometrics, mathematical finance, weather forecasting, earthquake prediction, electroencephalography, control engineering, astronomy, communications engineering, and largely in any domain of applied science and engineering which involves temporal measurements. Time series ''analysis'' comprises methods for analyzing time series data in order to extract meaningful statistics and other characteristics of the data. Time series ''forecasting' ...

Variance

In probability theory and statistics, variance is the expectation of the squared deviation of a random variable from its population mean or sample mean. Variance is a measure of dispersion, meaning it is a measure of how far a set of numbers is spread out from their average value. Variance has a central role in statistics, where some ideas that use it include descriptive statistics, statistical inference, hypothesis testing, goodness of fit, and Monte Carlo sampling. Variance is an important tool in the sciences, where statistical analysis of data is common. The variance is the square of the standard deviation, the second central moment of a distribution, and the covariance of the random variable with itself, and it is often represented by \sigma^2, s^2, \operatorname{Var}(X), V(X), or \mathbb{V}(X). An advantage of variance as a measure of dispersion is that it is more amenable to algebraic manipulation than other measures of dispersion such as the expected absolute deviation; for e ...
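The definition as a mean squared deviation translates directly into code. This sketch uses an illustrative data set and helper names, and distinguishes the population form (divide by ''n'') from the sample form (divide by ''n'' − 1).

```python
import math

def population_variance(xs):
    """Mean of squared deviations from the mean (divide by n)."""
    mean = sum(xs) / len(xs)
    return sum((x - mean) ** 2 for x in xs) / len(xs)

def sample_variance(xs):
    """Unbiased estimate from a sample (divide by n - 1)."""
    mean = sum(xs) / len(xs)
    return sum((x - mean) ** 2 for x in xs) / (len(xs) - 1)

data = [2, 4, 4, 4, 5, 5, 7, 9]          # mean is 5

sigma_squared = population_variance(data)
s_squared = sample_variance(data)
std_dev = math.sqrt(sigma_squared)        # standard deviation is the square root
print(sigma_squared, s_squared, std_dev)
```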

Magnitude (mathematics)

In mathematics, the magnitude or size of a mathematical object is a property which determines whether the object is larger or smaller than other objects of the same kind. More formally, an object's magnitude is the displayed result of an ordering (or ranking) of the class of objects to which it belongs. In physics, magnitude can be defined as quantity or distance.

History: The Greeks distinguished between several types of magnitude, including:

* Positive fractions
* Line segments (ordered by length)
* Plane figures (ordered by area)
* Solids (ordered by volume)
* Angles (ordered by angular magnitude)

They proved that the first two could not be the same, or even isomorphic systems of magnitude. They did not consider negative magnitudes to be meaningful, and ''magnitude'' is still primarily used in contexts in which zero is either the smallest size or less than all possible sizes.

Numbers: Th ...

Data Manipulation

Statistics, when used in a misleading fashion, can trick the casual observer into believing something other than what the data shows. That is, a misuse of statistics occurs when a statistical argument asserts a falsehood. In some cases, the misuse may be accidental. In others, it is purposeful and for the gain of the perpetrator. When the statistical reason involved is false or misapplied, this constitutes a statistical fallacy. The false statistics trap can be quite damaging for the quest for knowledge. For example, in medical science, correcting a falsehood may take decades and cost lives. Misuses can be easy to fall into. Professional scientists, even mathematicians and professional statisticians, can be fooled by even some simple methods, even if they are careful to check everything. Scientists have been known to fool themselves with statistics due to lack of knowledge of probability theory and lack of standardization of their tests.

Definition, limitations and context: One ...

Least-squares Analysis

The method of least squares is a standard approach in regression analysis to approximate the solution of overdetermined systems (sets of equations in which there are more equations than unknowns) by minimizing the sum of the squares of the residuals (a residual being the difference between an observed value and the fitted value provided by a model) made in the results of each individual equation. The most important application is in data fitting. When the problem has substantial uncertainties in the independent variable (the ''x'' variable), then simple regression and least-squares methods have problems; in such cases, the methodology required for fitting errors-in-variables models may be considered instead of that for least squares. Least squares problems fall into two categories: linear or ordinary least squares and nonlinear least squares, depending on whether or not the residuals are linear in all unknowns. The linear least-squares problem occurs in statistical regression ...
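For the simplest linear case, a straight line fitted to more points than unknowns, the minimizer has a closed form. The sketch below uses made-up data and illustrative names (`fit_line`, `residual_sum_of_squares`); it is one instance of ordinary least squares, not a general solver.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def residual_sum_of_squares(xs, ys, slope, intercept):
    """Sum of squared differences between observed and fitted values."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))

# Four equations (data points), two unknowns: an overdetermined system.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.1, 2.9, 5.2, 6.8]

slope, intercept = fit_line(xs, ys)
rss = residual_sum_of_squares(xs, ys, slope, intercept)
print(slope, intercept, rss)
```

Any other choice of slope and intercept gives a larger residual sum of squares on this data.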

Fast Fourier Transform

A fast Fourier transform (FFT) is an algorithm that computes the discrete Fourier transform (DFT) of a sequence, or its inverse (IDFT). Fourier analysis converts a signal from its original domain (often time or space) to a representation in the frequency domain and vice versa. The DFT is obtained by decomposing a sequence of values into components of different frequencies. This operation is useful in many fields, but computing it directly from the definition is often too slow to be practical. An FFT rapidly computes such transformations by factorizing the DFT matrix into a product of sparse (mostly zero) factors. As a result, it manages to reduce the complexity of computing the DFT from O\left(N^2\right), which arises if one simply applies the definition of DFT, to O(N \log N), where N is the data size. The difference in speed can be enormous, especially for long data sets where ''N'' may be in the thousands or millions. In the presence of round-off error, many FFT algorithm ...
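The contrast between the O(N^2) definition and an O(N \log N) algorithm can be sketched side by side. The recursive radix-2 scheme below is one common FFT variant, chosen here for brevity and restricted to power-of-two lengths; the function names and test signal are illustrative.

```python
import cmath

def dft(x):
    """Direct application of the DFT definition: O(N^2) operations."""
    n = len(x)
    return [
        sum(x[k] * cmath.exp(-2j * cmath.pi * m * k / n) for k in range(n))
        for m in range(n)
    ]

def fft(x):
    """Recursive radix-2 FFT: O(N log N), length must be a power of two."""
    n = len(x)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    if n == 1:
        return list(x)
    even = fft(x[0::2])               # DFT of even-indexed samples
    odd = fft(x[1::2])                # DFT of odd-indexed samples
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out

signal = [1, 2, 3, 4, 0, -1, -2, -3]
slow = dft(signal)
fast = fft(signal)
max_difference = max(abs(a - b) for a, b in zip(slow, fast))
print(max_difference)
```

Both functions compute the same transform up to round-off; only the operation count differs.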

Sparse Matrix

In numerical analysis and scientific computing, a sparse matrix or sparse array is a matrix in which most of the elements are zero. There is no strict definition regarding the proportion of zero-value elements for a matrix to qualify as sparse but a common criterion is that the number of non-zero elements is roughly equal to the number of rows or columns. By contrast, if most of the elements are non-zero, the matrix is considered dense. The number of zero-valued elements divided by the total number of elements (e.g., ''m'' × ''n'' for an ''m'' × ''n'' matrix) is sometimes referred to as the sparsity of the matrix. Conceptually, sparsity corresponds to systems with few pairwise interactions. For example, consider a line of balls connected by springs from one to the next: this is a sparse system as only adjacent balls are coupled. By contrast, if the same line of balls were to have springs connecting each ball to all other balls, the system would correspond to a dense matrix. The ...
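The line-of-balls example corresponds to a tridiagonal matrix, where only adjacent entries are coupled. The sketch below stores just the non-zero entries in a dictionary keyed by (row, column); this is one simple storage scheme among several, and the function names and the 4 × 4 matrix are illustrative.

```python
def to_sparse(dense):
    """Keep only non-zero entries, keyed by (row, column)."""
    return {
        (i, j): v
        for i, row in enumerate(dense)
        for j, v in enumerate(row)
        if v != 0
    }

def sparsity(dense):
    """Number of zero-valued elements divided by the total number of elements."""
    total = sum(len(row) for row in dense)
    zeros = sum(1 for row in dense for v in row if v == 0)
    return zeros / total

def sparse_matvec(entries, x, n_rows):
    """Matrix-vector product that touches only the stored entries."""
    y = [0] * n_rows
    for (i, j), v in entries.items():
        y[i] += v * x[j]
    return y

# Four balls in a line, each coupled only to its neighbours.
chain = [
    [2, -1, 0, 0],
    [-1, 2, -1, 0],
    [0, -1, 2, -1],
    [0, 0, -1, 2],
]

entries = to_sparse(chain)
fraction_zero = sparsity(chain)
product = sparse_matvec(entries, [1, 1, 1, 1], len(chain))
print(len(entries), fraction_zero, product)
```

For a longer chain the number of stored entries grows linearly with the number of balls, while the dense matrix grows quadratically.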

Cholesky Decomposition

In linear algebra, the Cholesky decomposition or Cholesky factorization is a decomposition of a Hermitian, positive-definite matrix into the product of a lower triangular matrix and its conjugate transpose, which is useful for efficient numerical solutions, e.g., Monte Carlo simulations. It was discovered by André-Louis Cholesky for real matrices, and posthumously published in 1924. When it is applicable, the Cholesky decomposition is roughly twice as efficient as the LU decomposition for solving systems of linear equations.

Statement: The Cholesky decomposition of a Hermitian positive-definite matrix A is a decomposition of the form \mathbf{A} = \mathbf{L}\mathbf{L}^*, where L is a lower triangular matrix with real and positive diagonal entries, and L* denotes the conjugate transpose of L. Every Hermitian positive-definite matrix (and thus also every real-valued symmetric positive-definite matrix) has a unique Cholesky decomposition. The converse holds trivially: if A can be ...
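For a real symmetric positive-definite matrix, the factor L can be computed row by row. The sketch below is a plain-Python version of that row-wise scheme for the real case only (so the conjugate transpose reduces to the ordinary transpose); the function name and the 3 × 3 example matrix are illustrative.

```python
import math

def cholesky(a):
    """Return lower-triangular L with L times its transpose equal to a,
    for a real symmetric positive-definite matrix a (list of lists)."""
    n = len(a)
    lower = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            partial = sum(lower[i][k] * lower[j][k] for k in range(j))
            if i == j:
                diag = a[i][i] - partial
                if diag <= 0:
                    raise ValueError("matrix is not positive definite")
                lower[i][j] = math.sqrt(diag)
            else:
                lower[i][j] = (a[i][j] - partial) / lower[j][j]
    return lower

matrix = [
    [4.0, 12.0, -16.0],
    [12.0, 37.0, -43.0],
    [-16.0, -43.0, 98.0],
]

factor = cholesky(matrix)

# Rebuild the matrix from the factor to confirm the decomposition.
rebuilt = [
    [sum(factor[i][k] * factor[j][k] for k in range(3)) for j in range(3)]
    for i in range(3)
]
print(factor)
```

The diagonal entries come out real and positive, as the statement requires; a non-positive value under the square root signals that the input is not positive definite.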
