
LIDA (Cognitive Architecture)

The LIDA (Learning Intelligent Distribution Agent) cognitive architecture is an integrated artificial cognitive system that attempts to model a broad spectrum of cognition in biological systems, from low-level perception and action to high-level reasoning. Developed primarily by Stan Franklin and colleagues at the University of Memphis, the LIDA architecture is empirically grounded in cognitive science and cognitive neuroscience. In addition to providing hypotheses to guide further research, the architecture can provide control structures for software agents and robots. Because it offers plausible explanations for many cognitive processes, the LIDA conceptual model is also intended as a tool for thinking about how minds work. Two hypotheses underlie the LIDA architecture and its corresponding conceptual model: 1) much of human cognition functions by means of frequently iterated (~10 Hz) interactions, called cognitive cycles, between conscious contents, the various memory systems a ...
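To make the idea of a cognitive cycle more concrete, here is a minimal Python sketch of one heavily simplified cycle in the spirit of the global-workspace account: attention codelets form coalitions from workspace content, the most active coalition wins and is broadcast, and a procedural-memory scheme whose context matches the broadcast is selected as the action. All names (`AttentionCodelet`, `Scheme`, `cognitive_cycle`) and the activation formula are hypothetical illustrations, not the LIDA Framework's API, and perception, episodic memory, and learning are omitted. In the model, a cycle like this would repeat roughly ten times per second.

```python
from dataclasses import dataclass
from typing import FrozenSet, List

@dataclass(frozen=True)
class AttentionCodelet:
    concern: FrozenSet[str]  # hypothetical: workspace content this codelet cares about
    salience: float          # how strongly it pushes that content toward the workspace

@dataclass(frozen=True)
class Scheme:
    context: FrozenSet[str]  # content that must be present in the conscious broadcast
    action: str
    activation: float        # base strength used during action selection

def cognitive_cycle(workspace: FrozenSet[str],
                    codelets: List[AttentionCodelet],
                    schemes: List[Scheme]) -> str:
    """Run one simplified cycle and return the selected action (or "idle")."""
    # Attention: each codelet gathers the part of its concern present in the
    # workspace into a coalition; activation scales with how much is present.
    coalitions = []
    for c in codelets:
        if not c.concern:
            continue
        content = c.concern & workspace
        coalitions.append((c.salience * len(content) / len(c.concern), content))
    if not coalitions:
        return "idle"
    # Competition: the most active coalition wins and its content is broadcast.
    _, broadcast = max(coalitions, key=lambda pair: pair[0])
    # Action: schemes whose context is satisfied by the broadcast become
    # candidates, and the strongest candidate is selected.
    candidates = [s for s in schemes if s.context <= broadcast]
    if not candidates:
        return "idle"
    return max(candidates, key=lambda s: s.activation).action

codelets = [AttentionCodelet(frozenset({"red_light", "car_ahead"}), 0.9),
            AttentionCodelet(frozenset({"music_on"}), 0.3)]
schemes = [Scheme(frozenset({"red_light"}), "brake", 0.8),
           Scheme(frozenset({"music_on"}), "change_station", 0.5)]
print(cognitive_cycle(frozenset({"red_light", "car_ahead", "music_on"}), codelets, schemes))  # brake
```

Choosing a single winner per cycle mirrors the idea that only one coalition's content is broadcast at a time; everything else about how coalitions are built here is an assumption made for brevity.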

Cognitive Architecture

A cognitive architecture refers both to a theory about the structure of the human mind and to a computational instantiation of such a theory used in the fields of artificial intelligence (AI) and computational cognitive science. The formalized models can be used to further refine a comprehensive theory of cognition and as useful artificial intelligence programs. Successful cognitive architectures include ACT-R (Adaptive Control of Thought-Rational) and SOAR. Research on cognitive architectures as software instantiations of cognitive theories was initiated by Allen Newell in 1990. The Institute for Creative Technologies defines a cognitive architecture as a "hypothesis about the fixed structures that provide a mind, whether in natural or artificial systems, and how they work together – in conjunction with knowledge and skills embodied within the architecture – to yield intelligent behavior in a diversity of complex environments." Herbert A. Simon, one of the ...

Copycat (software)

Copycat is a model of analogy making and human cognition based on the concept of the parallel terraced scan, developed in 1988 by Douglas Hofstadter, Melanie Mitchell, and others at the Center for Research on Concepts and Cognition, Indiana University Bloomington. The original Copycat was written in Common Lisp and has succumbed to bit rot, as it relies on now-outdated graphics libraries for Lucid Common Lisp; however, Java and Python ports exist. The latest version, from 2018, is a Python 3 port by Lucas Saldyt and J. Alan Brogan. Copycat produces answers to problems such as "abc is to abd as ijk is to what?" (abc:abd :: ijk:?). Hofstadter and Mitchell consider analogy making the core of high-level cognition, or high-level perception, as Hofstadter calls it, which is basic to recognition and categorization. High-level perception emerges from the spreading activity of many independent processes, called codelets, running in parallel, competing or cooperating. They create and destroy ...
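The codelet idea can be illustrated with a toy scheduler. In this Python sketch, waiting codelets sit in a shared pool (Copycat's design calls it the coderack) and one is picked at random with probability proportional to its urgency, so many lines of exploration advance in interleaved fashion at speeds set by how promising they look. `Codelet`, `Coderack`, and the scout/builder example are hypothetical simplifications, not Copycat's code, and every other component of the model is left out.

```python
import random
from dataclasses import dataclass, field
from typing import Callable, List

@dataclass
class Codelet:
    name: str
    urgency: float                        # relative chance of being run next
    action: Callable[["Coderack"], None]  # may post follow-up codelets

@dataclass
class Coderack:
    rng: random.Random
    codelets: List[Codelet] = field(default_factory=list)
    history: List[str] = field(default_factory=list)

    def post(self, codelet: Codelet) -> None:
        self.codelets.append(codelet)

    def step(self) -> bool:
        """Run one waiting codelet chosen with probability proportional to urgency."""
        if not self.codelets:
            return False
        weights = [c.urgency for c in self.codelets]
        index = self.rng.choices(range(len(self.codelets)), weights=weights, k=1)[0]
        codelet = self.codelets.pop(index)
        self.history.append(codelet.name)
        codelet.action(self)
        return True

    def run(self, max_steps: int = 1000) -> int:
        steps = 0
        while steps < max_steps and self.step():
            steps += 1
        return steps

def make_scout(i: int) -> Codelet:
    # Hypothetical scout: when it runs, it posts a more urgent builder to follow up.
    def explore(rack: Coderack) -> None:
        rack.post(Codelet(f"builder-{i}", urgency=5.0, action=lambda r: None))
    return Codelet(f"scout-{i}", urgency=1.0, action=explore)

rack = Coderack(random.Random(42))
for i in range(3):
    rack.post(make_scout(i))
print(rack.run(), rack.history)
```

Because selection is stochastic rather than strictly ordered, low-urgency codelets still get occasional turns, which is the intuition behind exploring many possibilities at different rates.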

Bernard Baars

Bernard J. Baars (born 1946 in Amsterdam) is a former Senior Fellow in Theoretical Neurobiology at The Neurosciences Institute in San Diego, California, and is currently an Affiliated Fellow there. He is best known as the originator of the global workspace theory, a theory of human cognitive architecture and consciousness. He previously served as a professor of psychology at the State University of New York, Stony Brook, where he conducted research into the causation of human errors and the Freudian slip, and as a faculty member at the Wright Institute. Baars co-founded the Association for the Scientific Study of Consciousness and the Academic Press journal Consciousness and Cognition, which he also edited, with William P. Banks, for "mo ...

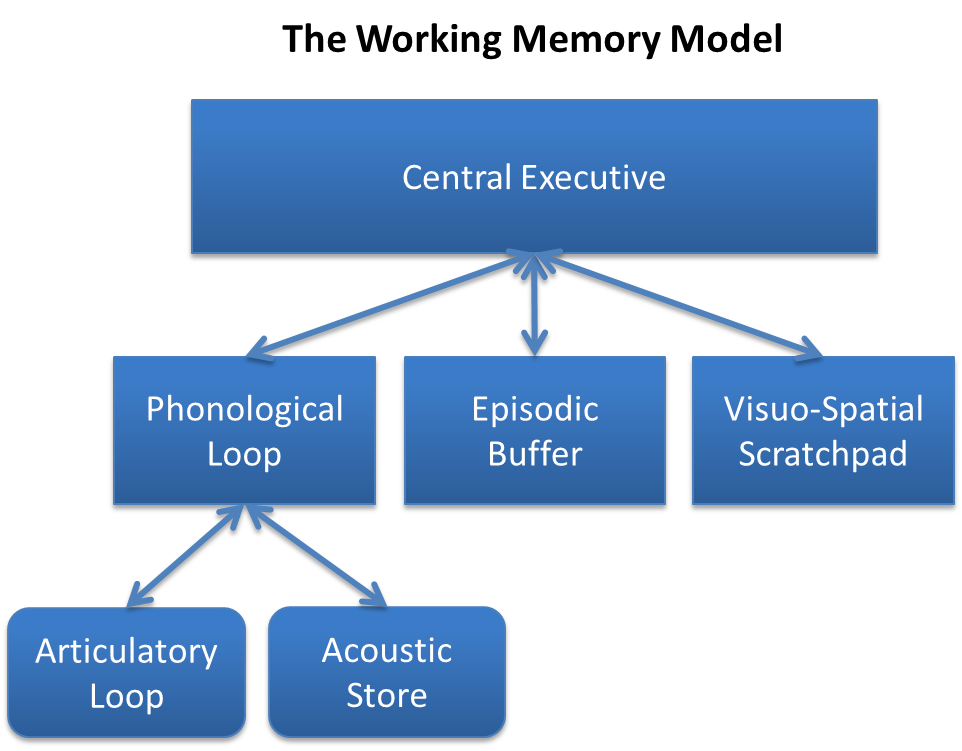

Working Memory

Working memory is a cognitive system with a limited capacity that can hold information temporarily. It is important for reasoning and for the guidance of decision-making and behavior. Working memory is often used synonymously with short-term memory, but some theorists consider the two forms of memory distinct, assuming that working memory allows for the manipulation of stored information, whereas short-term memory refers only to the short-term storage of information. Working memory is a theoretical concept central to cognitive psychology, neuropsychology, and neuroscience. The term "working memory" was coined by Miller, Galanter, and Pribram, and was used in the 1960s in the context of theories that likened the mind to a computer. In 1968, Atkinson and Shiffrin used the term to describe their "short-term store". What we now call working memory was formerly referred to variously as a "short-term store" or short-term memory, primary memory, immediate memory, operant mem ...

Situated Cognition

Situated cognition is a theory that posits that knowing is inseparable from doing, arguing that all knowledge is situated in activity bound to social, cultural, and physical contexts. Under this assumption, which requires an epistemological shift from empiricism, situativity theorists suggest a model of knowledge and learning that requires thinking on the fly rather than the storage and retrieval of conceptual knowledge. In essence, cognition cannot be separated from the context. Instead, knowing exists in situ, inseparable from context, activity, people, culture, and language. Therefore, learning is seen in terms of an individual's increasingly effective performance across situations rather than in terms of an accumulation of knowledge, since what is known is co-determined by the agent and the context. While situated cognition gained recognition in the field of educational psychology in the late twentieth century (Brown, Collins, & Duguid, 1989), it shares many principle ...

Subsumption Architecture

Subsumption architecture is a reactive robotic architecture heavily associated with behavior-based robotics, which was very popular in the 1980s and 1990s. The term was introduced by Rodney Brooks and colleagues in 1986 (Brooks, R. A., "A Robust Programming Scheme for a Mobile Robot", Proceedings of NATO Advanced Research Workshop on Languages for Sensor-Based Control in Robotics, Castelvecchio Pascoli, Italy, September 1986). Subsumption has been widely influential in autonomous robotics and elsewhere in real-time AI. Subsumption architecture is a control architecture that was proposed in opposition to traditional AI, or GOFAI. Instead of guiding behavior by symbolic mental representations of the world, subsumption architecture couples sensory information to action selection in an intimate and bottom-up fashion. It does this by decomposing the complete behavior into sub-behaviors. These sub-behaviors are organized into a hierarchy of layers. Each layer implements a par ...
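A minimal Python sketch of layered arbitration, assuming each layer is a function from sensor readings to an optional motor command and that an active higher layer simply suppresses every layer below it. Brooks' robots wired layers together with suppression and inhibition links between augmented finite-state machines; this fixed-priority loop is only a simplification of that idea, and the layer names, the `front_distance` sensor key, and the 0.5 threshold are hypothetical.

```python
from typing import Callable, Dict, List, Optional

Sensors = Dict[str, float]
# A layer returns a motor command, or None when it has nothing to say.
Layer = Callable[[Sensors], Optional[str]]

def wander(sensors: Sensors) -> Optional[str]:
    # Hypothetical lowest layer: a default behavior that is always active.
    return "forward"

def avoid_obstacles(sensors: Sensors) -> Optional[str]:
    # Hypothetical higher layer: only speaks up when something is close ahead.
    if sensors["front_distance"] < 0.5:
        return "turn_left"
    return None

def arbitrate(layers: List[Layer], sensors: Sensors) -> str:
    """layers[0] is the lowest layer; an active higher layer suppresses all below it."""
    for layer in reversed(layers):
        command = layer(sensors)
        if command is not None:
            return command
    return "stop"  # no layer produced a command

layers = [wander, avoid_obstacles]
print(arbitrate(layers, {"front_distance": 2.0}))  # forward
print(arbitrate(layers, {"front_distance": 0.3}))  # turn_left
```

No layer consults a world model; each maps the current sensor readings directly to a command, which is the bottom-up coupling the paragraph describes.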

Sparse Distributed Memory

Sparse distributed memory (SDM) is a mathematical model of human long-term memory introduced by Pentti Kanerva in 1988 while he was at NASA Ames Research Center. It is a generalized random-access memory (RAM) for long (e.g., 1,000-bit) binary words. These words serve as both addresses to and data for the memory. The main attribute of the memory is sensitivity to similarity, meaning that a word can be read back not only by giving the original write address but also by giving one close to it, as measured by the number of mismatched bits (i.e., the Hamming distance between memory addresses). SDM implements the transformation from logical space to physical space using distributed data representation and storage, similar to encoding processes in human memory. A value corresponding to a logical address is stored into many physical addresses. This way of storing is robust and not deterministic. A memory cell is not addressed directly. If input data (logical addresses) are partially damaged a ...
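The read and write behavior described above can be sketched in a few dozen lines of Python. The sketch assumes 256-bit words (shorter than the 1,000-bit example, to keep it fast), 2,000 randomly placed hard locations, and an activation radius of 111 bits; these parameters are illustrative choices, not Kanerva's. Writing adds the word into signed counters at every hard location within the radius of the address, and reading sums those counters over the locations activated by a possibly noisy address and thresholds each bit at zero.

```python
import random
from typing import List

class SparseDistributedMemory:
    """Minimal Kanerva-style SDM over n-bit words stored as Python ints."""

    def __init__(self, n_bits: int = 256, n_locations: int = 2000,
                 radius: int = 111, seed: int = 0) -> None:
        self.n_bits = n_bits
        self.radius = radius
        rng = random.Random(seed)
        # Hard locations: a fixed random sample of addresses from the 2**n_bits space.
        self.addresses: List[int] = [rng.getrandbits(n_bits) for _ in range(n_locations)]
        # One signed counter per bit at every hard location.
        self.counters: List[List[int]] = [[0] * n_bits for _ in range(n_locations)]

    def _active(self, address: int) -> List[int]:
        # Every hard location within `radius` Hamming distance of the address takes part.
        return [i for i, a in enumerate(self.addresses)
                if bin(a ^ address).count("1") <= self.radius]

    def write(self, address: int, data: int) -> None:
        # Distributed storage: the same word is added into many hard locations.
        for i in self._active(address):
            row = self.counters[i]
            for bit in range(self.n_bits):
                row[bit] += 1 if (data >> bit) & 1 else -1

    def read(self, address: int) -> int:
        # Pool the counters of all active locations and threshold each bit at zero.
        active = self._active(address)
        word = 0
        for bit in range(self.n_bits):
            if sum(self.counters[i][bit] for i in active) > 0:
                word |= 1 << bit
        return word

def hamming(a: int, b: int) -> int:
    return bin(a ^ b).count("1")

rng = random.Random(1)
memory = SparseDistributedMemory()
patterns = [rng.getrandbits(256) for _ in range(3)]
for p in patterns:
    memory.write(p, p)  # autoassociative: each word is stored at its own address
cue = patterns[0]
for bit in rng.sample(range(256), 10):  # corrupt 10 of the 256 bits
    cue ^= 1 << bit
recalled = memory.read(cue)
print(hamming(cue, patterns[0]), hamming(recalled, patterns[0]))
```

With only a few words stored, the corrupted cue should typically read back a word much closer to the original than the cue itself, which is the sensitivity to similarity the paragraph describes.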

Connectionism

Connectionism refers both to an approach in the field of cognitive science that hopes to explain mental phenomena using artificial neural networks (ANNs) and to a wide range of techniques and algorithms using ANNs in the context of artificial intelligence to build more intelligent machines. Connectionism presents a cognitive theory based on simultaneously occurring, distributed signal activity via connections that can be represented numerically, where learning occurs by modifying connection strengths based on experience. Some advantages of the connectionist approach include its applicability to a broad array of functions, structural approximation to biological neurons, low requirements for innate structure, and capacity for graceful degradation. Some disadvantages include the difficulty of deciphering how ANNs process information or of accounting for the compositionality of mental representations, and a resultant difficulty explaining phenomena at a higher level. The success of deep ...
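As one concrete instance of learning by modifying connection strengths based on experience, the classic perceptron learning rule trains a single threshold unit. This Python sketch, chosen for brevity rather than drawn from the text, learns logical AND from four examples using integer weights and a learning rate of 1, so all arithmetic is exact.

```python
from typing import List, Tuple

Sample = Tuple[Tuple[int, int], int]

def predict(w: List[int], b: int, x: Tuple[int, int]) -> int:
    # Threshold unit: output 1 when the weighted input sum exceeds zero.
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0

def train(samples: List[Sample], epochs: int = 20) -> Tuple[List[int], int]:
    w, b = [0, 0], 0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(w, b, x)
            # Perceptron rule: adjust each connection strength in proportion
            # to its input and the output error (learning rate 1).
            w[0] += error * x[0]
            w[1] += error * x[1]
            b += error
    return w, b

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train(AND)
print([predict(w, b, x) for x, _ in AND])  # [0, 0, 0, 1]
```

The learned behavior lives entirely in the numeric weights `w` and bias `b`; no explicit rule for AND is ever written down.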

Cognitive System

Artificial intelligence (AI) is intelligence (perceiving, synthesizing, and inferring information) demonstrated by machines, as opposed to intelligence displayed by animals and humans. Example tasks in which this is done include speech recognition, computer vision, translation between (natural) languages, and other mappings of inputs. The Oxford English Dictionary of Oxford University Press defines artificial intelligence as "the theory and development of computer systems able to perform tasks that normally require human intelligence, such as visual perception, speech recognition, decision-making, and translation between languages." AI applications include advanced web search engines (e.g., Google), recommendation systems (used by YouTube, Amazon, and Netflix), understanding human speech (such as Siri and Alexa), self-driving cars (e.g., Tesla), automated decision-making, and competing at the highest level in strategic game systems (such as chess and Go). ...

Action Selection

Action selection is a way of characterizing the most basic problem of intelligent systems: what to do next. In artificial intelligence and computational cognitive science, "the action selection problem" is typically associated with intelligent agents and animats, artificial systems that exhibit complex behaviour in an agent environment. The term is also sometimes used in ethology or animal behavior. One problem for understanding action selection is determining the level of abstraction used for specifying an "act". At the most basic level of abstraction, an atomic act could be anything from contracting a muscle cell to provoking a war. Typically, for any one action-selection mechanism, the set of possible actions is predefined and fixed. Most researchers working in this field place high demands on their agents:
* The acting agent typically must select its action in dynamic and unpredictable environments.
* The agents typically act in real time; therefore they must make de ...
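A minimal Python sketch of one simple action-selection mechanism, assuming a fixed, predefined action set in which each action scores its own relevance to the current state and the highest-scoring action wins. The actions, state variables, and utility functions are hypothetical; real mechanisms in this field must also meet the real-time and unpredictability demands listed above, which this sketch ignores.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

State = Dict[str, float]

@dataclass(frozen=True)
class Action:
    name: str
    utility: Callable[[State], float]  # how worthwhile this act looks in the given state

def select_action(actions: List[Action], state: State) -> str:
    # Winner-take-all over the fixed action set; an agent would re-run this each tick.
    return max(actions, key=lambda a: a.utility(state)).name

# Hypothetical repertoire for a simple foraging animat.
ACTIONS = [
    Action("eat", lambda s: s["hunger"] * s["food_nearby"]),
    Action("flee", lambda s: 2.0 * s["predator_nearby"]),
    Action("explore", lambda s: 0.2),
]

print(select_action(ACTIONS, {"hunger": 0.9, "food_nearby": 1.0, "predator_nearby": 0.0}))  # eat
print(select_action(ACTIONS, {"hunger": 0.9, "food_nearby": 1.0, "predator_nearby": 1.0}))  # flee
```

Here an "act" is defined at the level of whole behaviors like eating or fleeing; choosing that level of abstraction is exactly the design decision the paragraph highlights.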