Lossless Compression

Lossless compression is a class of data compression that allows the original data to be perfectly reconstructed from the compressed data with no loss of information. Lossless compression is possible because most real-world data exhibits statistical redundancy. By contrast, lossy compression permits reconstruction only of an approximation of the original data, though usually with greatly improved compression rates (and therefore reduced media sizes). By operation of the pigeonhole principle, no lossless compression algorithm can shrink the size of all possible data: some data will get longer by at least one symbol or bit. Compression algorithms are usually effective for human- and machine-readable documents and cannot shrink the size of random data that contain no redundancy. Different algorithms exist that are designed either with a specific type of input data in mind or with specific assumptions about what kinds of redundancy the uncompressed data are likely to contain. Lo ...
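The contrast between redundant and random input can be checked with a minimal sketch. It assumes Python's standard `zlib` module as a stand-in for "a lossless compressor"; the helper name `compressed_size` and the sample inputs are illustrative only.

```python
import os
import zlib


def compressed_size(data: bytes) -> int:
    """Return the length in bytes of the zlib-compressed form of data."""
    return len(zlib.compress(data, 9))


# Highly repetitive input: plenty of statistical redundancy to exploit.
redundant = b"abcabcabc" * 1000
# Random input: with overwhelming probability, no exploitable redundancy.
random_bytes = os.urandom(9000)

# Lossless round trip: decompression restores the input exactly.
assert zlib.decompress(zlib.compress(redundant)) == redundant
assert zlib.decompress(zlib.compress(random_bytes)) == random_bytes

print("redundant:", len(redundant), "->", compressed_size(redundant))
print("random:   ", len(random_bytes), "->", compressed_size(random_bytes))
```

The repetitive input shrinks to a small fraction of its size, while the random input typically comes out slightly larger than it went in because of container overhead, which is the pigeonhole argument in practice.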

Data Compression

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder. The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding: encoding is done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, for error detection and correction or line coding, the means for mapping data onto a sig ...

Information Entropy

In information theory, the entropy of a random variable quantifies the average level of uncertainty or information associated with the variable's potential states or possible outcomes. This measures the expected amount of information needed to describe the state of the variable, considering the distribution of probabilities across all potential states. Given a discrete random variable $X$, which may be any member $x$ within the set $\mathcal{X}$ and is distributed according to $p\colon \mathcal{X}\to[0,1]$, the entropy is $\mathrm{H}(X) := -\sum_{x\in\mathcal{X}} p(x) \log p(x)$, where $\Sigma$ denotes the sum over the variable's possible values. The choice of base for $\log$, the logarithm, varies for different applications. Base 2 gives the unit of bits (or "shannons"), while base ''e'' gives "natural units" (nats), and base 10 gives units of "dits", "bans", or "hartleys". An equivalent definition of entropy is the expected value of the self-information of a variable. The concept of information entropy was ...
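As a small illustration of the definition, the sketch below estimates entropy from the empirical symbol frequencies of a sequence. The function name `shannon_entropy` is hypothetical, and treating observed frequencies as the distribution p is an assumption made for the example.

```python
import math
from collections import Counter


def shannon_entropy(symbols, base=2.0):
    """Entropy of the empirical distribution of `symbols`.

    base=2 gives bits, base=math.e gives nats, base=10 gives hartleys.
    """
    counts = Counter(symbols)
    total = sum(counts.values())
    # -sum over observed outcomes of p(x) * log p(x); unseen outcomes contribute 0.
    return -sum((c / total) * math.log(c / total, base) for c in counts.values())


print(shannon_entropy("aabb"))          # two equally likely symbols
print(shannon_entropy("aaaa"))          # a single certain symbol
print(shannon_entropy("abcd", math.e))  # four equally likely symbols, in nats
```

Two equally likely symbols carry one bit per symbol, and a source that always emits the same symbol carries none.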

Delta (letter)

Delta (uppercase Δ, lowercase δ; ''délta'') is the fourth letter of the Greek alphabet. In the system of Greek numerals, it has a value of four. It was derived from the Phoenician letter dalet 𐤃. Letters that come from delta include the Latin D and the Cyrillic Д. A river delta (originally, the delta of the Nile River) is named so because its shape approximates the triangular uppercase letter delta. Contrary to a popular legend, this use of the word ''delta'' was not coined by Herodotus. Pronunciation: In Ancient Greek, delta represented a voiced dental plosive. In Modern Greek, it represents a voiced dental fricative, like the "''th''" in "''that''" or "''this''" (while the plosive in foreign words is instead commonly transcribed as ντ). Delta is romanized as ''d'' or ''dh''. Uppercase: The uppercase letter Δ is used to denote: * Change of any changeable ...

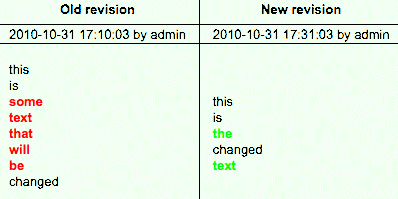

Delta Encoding

Delta encoding is a way of storing or transmitting data in the form of ''differences'' (deltas) between sequential data rather than complete files; more generally this is known as data differencing. Delta encoding is sometimes called delta compression, particularly where archival histories of changes are required (e.g., in revision control software). The differences are recorded in discrete files called "deltas" or "diffs". In situations where differences are small (for example, the change of a few words in a large document or the change of a few records in a large table), delta encoding greatly reduces data redundancy. Collections of unique deltas are substantially more space-efficient than their non-encoded equivalents. From a logical point of view, the difference between two data values is the information required to obtain one value from the other; see relative entropy. The difference between identical values (under some equivalence) is often called ''0'' or the ...
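For numeric sequences, the idea can be sketched in a few lines. This is an illustrative example on a list of integers, not the file-level diffing used by revision control tools; the names `delta_encode` and `delta_decode` are hypothetical.

```python
from itertools import accumulate


def delta_encode(values):
    """Keep the first value, then store each value as the difference from its predecessor."""
    if not values:
        return []
    return [values[0]] + [b - a for a, b in zip(values, values[1:])]


def delta_decode(deltas):
    """Rebuild the original values as the running sum of the deltas."""
    return list(accumulate(deltas))


readings = [100, 101, 103, 103, 110]
deltas = delta_encode(readings)
print(deltas)  # [100, 1, 2, 0, 7]
assert delta_decode(deltas) == readings
```

When neighbouring values are close, the deltas are small numbers clustered around zero, which a later entropy coder can represent in fewer bits than the raw values.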

Video Compression

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder. The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding: encoding is done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, for error detection and correction or line coding, the means ...

Autoregressive

In statistics, econometrics, and signal processing, an autoregressive (AR) model is a representation of a type of random process; as such, it can be used to describe certain time-varying processes in nature, economics, behavior, etc. The autoregressive model specifies that the output variable depends linearly on its own previous values and on a stochastic term (an imperfectly predictable term); thus the model is in the form of a stochastic difference equation (or recurrence relation) which should not be confused with a differential equation. Together with the moving-average (MA) model, it is a special case and key component of the more general autoregressive–moving-average (ARMA) and autoregressive integrated moving average (ARIMA) models of time series, which have a more complicated stochastic structure; it is also a special case of the vector autoregressive model (VAR), which consists of a system of more than one interlocking stochastic difference equation in more than one ev ...
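The recurrence can be made concrete with a short simulation. The sketch below assumes the common AR(p) form X_t = c + φ_1 X_{t-1} + ... + φ_p X_{t-p} + ε_t with Gaussian noise and zero initial conditions; the function name `simulate_ar` and its parameters are hypothetical choices for the example.

```python
import random


def simulate_ar(phi, n, c=0.0, sigma=1.0, seed=0):
    """Simulate n steps of X_t = c + sum_i phi[i] * X_{t-1-i} + eps_t.

    phi[0] multiplies the most recent value. Initial values are assumed to be zero,
    and eps_t is drawn from a Gaussian with standard deviation sigma.
    """
    rng = random.Random(seed)
    p = len(phi)
    x = [0.0] * p  # assumed zero history before the first step
    for _ in range(n):
        past = sum(phi[i] * x[-1 - i] for i in range(p))
        x.append(c + past + rng.gauss(0.0, sigma))
    return x[p:]


# With the noise switched off, the linear dependence on past values is visible directly.
print(simulate_ar([0.5], 3, c=1.0, sigma=0.0))  # [1.0, 1.5, 1.75]
# With noise, each run is one realisation of the random process.
print(simulate_ar([0.6, -0.2], 5))
```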

Indexed Image

In computing, indexed color is a technique to manage digital images' colors in a limited fashion, in order to save computer memory and file storage, while speeding up display refresh and file transfers. It is a form of vector quantization compression. When an image is encoded in this way, color information is not directly carried by the image pixel data, but is stored in a separate piece of data called a color lookup table (CLUT) or palette: an array of color specifications. Every element in the array represents a color, indexed by its position within the array. Each image pixel does not contain the full specification of its color, but only its index into the ''palette''. This technique is sometimes referred to as pseudocolor or indirect color, as colors are addressed indirectly. History: Early graphics display systems that used 8-bit indexed color with frame buffers and color lookup tables include Shoup's SuperPaint (1973) and the video frame buffer described in 1975 by Kaj ...
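The palette-plus-indices layout can be sketched as follows. This toy example builds the palette from the exact colors present, so it performs no quantization; a real encoder would first reduce the image to a limited number of colors. The names `to_indexed` and `from_indexed` are hypothetical.

```python
def to_indexed(pixels):
    """Split a list of (r, g, b) pixels into a palette and a list of palette indices."""
    palette = []   # array of color specifications
    index_of = {}  # color -> position in the palette
    indices = []
    for color in pixels:
        if color not in index_of:
            index_of[color] = len(palette)
            palette.append(color)
        indices.append(index_of[color])
    return palette, indices


def from_indexed(palette, indices):
    """Look each index up in the palette to recover the full colors."""
    return [palette[i] for i in indices]


red, white = (255, 0, 0), (255, 255, 255)
pixels = [red, red, white, red, white, white]
palette, indices = to_indexed(pixels)
print(palette)  # [(255, 0, 0), (255, 255, 255)]
print(indices)  # [0, 0, 1, 0, 1, 1]
assert from_indexed(palette, indices) == pixels
```

With at most 256 palette entries, each pixel needs one byte instead of three, at the cost of storing the palette once.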

LZ77 And LZ78

LZ77 and LZ78 are the two lossless data compression algorithms published in papers by Abraham Lempel and Jacob Ziv in 1977 and 1978. They are also known as Lempel-Ziv 1 (LZ1) and Lempel-Ziv 2 (LZ2) respectively. These two algorithms form the basis for many variations including LZW, LZSS, LZMA and others. Besides their academic influence, these algorithms formed the basis of several ubiquitous compression schemes, including GIF and the DEFLATE algorithm used in PNG and ZIP. They are both theoretically dictionary coders. LZ77 maintains a sliding window during compression. This was later shown to be equivalent to the ''explicit dictionary'' constructed by LZ78; however, they are only equivalent when the entire data is intended to be decompressed. Since LZ77 encodes and decodes from a sliding window over previously seen characters, decompression must always start at the beginning of the input. Conceptually, LZ78 decompression could allow random access to the input if the en ...
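The sliding-window idea can be illustrated with a deliberately naive sketch. It assumes the textbook (offset, length, next character) triple format and a brute-force match search; the window size, the function names `lz77_encode` and `lz77_decode`, and the string-based interface are choices made for the example rather than details of any particular implementation.

```python
def lz77_encode(data: str, window: int = 32):
    """Encode data as (offset, length, next_char) triples using a sliding window."""
    out = []
    i = 0
    while i < len(data):
        best_off, best_len = 0, 0
        # Only positions inside the window of previously seen characters are candidates.
        for j in range(max(0, i - window), i):
            length = 0
            # A match may overlap position i; always leave one character for the literal.
            while i + length < len(data) - 1 and data[j + length] == data[i + length]:
                length += 1
            if length > best_len:
                best_off, best_len = i - j, length
        out.append((best_off, best_len, data[i + best_len]))
        i += best_len + 1
    return out


def lz77_decode(triples):
    """Replay the triples from the start, copying from already decoded output."""
    buf = []
    for off, length, ch in triples:
        for _ in range(length):
            buf.append(buf[-off])  # copy one character at a time so overlaps work
        buf.append(ch)
    return "".join(buf)


text = "abracadabra abracadabra"
triples = lz77_encode(text)
assert lz77_decode(triples) == text
print(triples)
```

Because every triple refers back into output that has already been decoded, the decoder has to begin at the first triple, which matches the point about decompression starting at the beginning of the input.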

Portable Network Graphics

Portable Network Graphics (PNG) is a raster-graphics file format that supports lossless data compression. PNG was developed as an improved, non-patented replacement for Graphics Interchange Format (GIF). PNG supports palette-based images (with palettes of 24-bit RGB or 32-bit RGBA colors), grayscale images (with or without an alpha channel for transparency), and full-color non-palette-based RGB or RGBA images. The PNG working group designed the format for transferring images on the Internet, not for professional-quality print graphics; therefore, non-RGB color spaces such as CMYK are not supported. A PNG file contains a single image in an extensible structure of ''chunks'', encoding the basic pixels and other information such as textual comments and integrity checks documented in Request for Comments ...

Graphics Interchange Format

The Graphics Interchange Format (GIF) is a bitmap image format that was developed by a team at the online services provider CompuServe led by American computer scientist Steve Wilhite and released on June 15, 1987. The format can contain up to 8 bits per pixel, allowing a single image to reference its own palette of up to 256 different colors chosen from the 24-bit RGB color space. It can also represent multiple images in a file, which can be used for animations, and allows a separate palette of up to 256 colors for each frame. These palette limitations make GIF less suitable for reproducing color photographs and other images with color gradients but well-suited for simpler images such as graphics or logos with solid areas of color. GIF images are compressed using the Lempel–Ziv–Welch (LZW) lossless data compression technique to reduce the file size without degrading the visual quality. While once in widespread usage on the World Wide Web because of i ...

Lempel–Ziv–Welch

Lempel–Ziv–Welch (LZW) is a universal lossless data compression algorithm created by Abraham Lempel, Jacob Ziv, and Terry Welch. It was published by Welch in 1984 as an improved implementation of the LZ78 algorithm published by Lempel and Ziv in 1978. The algorithm is simple to implement and has the potential for very high throughput in hardware implementations. It is the algorithm of the Unix file compression utility compress and is used in the GIF image format. Algorithm: The scenario described by Welch's 1984 paper encodes sequences of 8-bit data as fixed-length 12-bit codes. The codes from 0 to 255 represent 1-character sequences consisting of the corresponding 8-bit character, and the codes 256 through 4095 are created in a dictionary for sequences encountered in the data as it is encoded. At each stage in compression, input bytes are gathered into a sequence until the next character would make a sequence with no code yet in the dictionary. The code for th ...
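The dictionary-building loop described above can be sketched as follows. This is a minimal illustration of the textbook scheme with codes 0 to 255 preassigned and up to 4096 codes in total; it returns a list of integer codes rather than packing them into 12-bit fields, simply stops adding entries once the table is full, and uses the hypothetical names `lzw_encode` and `lzw_decode`.

```python
def lzw_encode(data: bytes, max_codes: int = 4096):
    """Return the list of LZW codes for data (codes 0-255 are single bytes)."""
    table = {bytes([i]): i for i in range(256)}
    seq = b""
    codes = []
    for byte in data:
        candidate = seq + bytes([byte])
        if candidate in table:
            seq = candidate  # keep gathering bytes while the sequence is known
        else:
            codes.append(table[seq])
            if len(table) < max_codes:
                table[candidate] = len(table)  # next free code, starting at 256
            seq = bytes([byte])
    if seq:
        codes.append(table[seq])
    return codes


def lzw_decode(codes, max_codes: int = 4096) -> bytes:
    """Rebuild the same dictionary on the fly and invert lzw_encode."""
    if not codes:
        return b""
    table = {i: bytes([i]) for i in range(256)}
    prev = table[codes[0]]
    out = [prev]
    for code in codes[1:]:
        # A code not yet in the table can only be prev plus its own first byte.
        entry = table[code] if code in table else prev + prev[:1]
        out.append(entry)
        if len(table) < max_codes:
            table[len(table)] = prev + entry[:1]
        prev = entry
    return b"".join(out)


message = b"TOBEORNOTTOBEORTOBEORNOT"
codes = lzw_encode(message)
assert lzw_decode(codes) == message
print(len(message), "bytes ->", len(codes), "codes")
```

The decoder never receives the dictionary; it reconstructs each entry one step behind the encoder, which is why the special case for a not-yet-known code is needed.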

United States

The United States of America (USA), also known as the United States (U.S.) or America, is a country primarily located in North America. It is a federal republic of 50 states and a federal capital district, Washington, D.C. The 48 contiguous states border Canada to the north and Mexico to the south, with the semi-exclave of Alaska in the northwest and the archipelago of Hawaii in the Pacific Ocean. The United States asserts sovereignty over five major island territories and various uninhabited islands in Oceania and the Caribbean. It is a megadiverse country, with the world's third-largest land area and third-largest population, exceeding 340 million. Its three largest metropolitan areas are New York, Los Angel ...