Linear Dynamical System

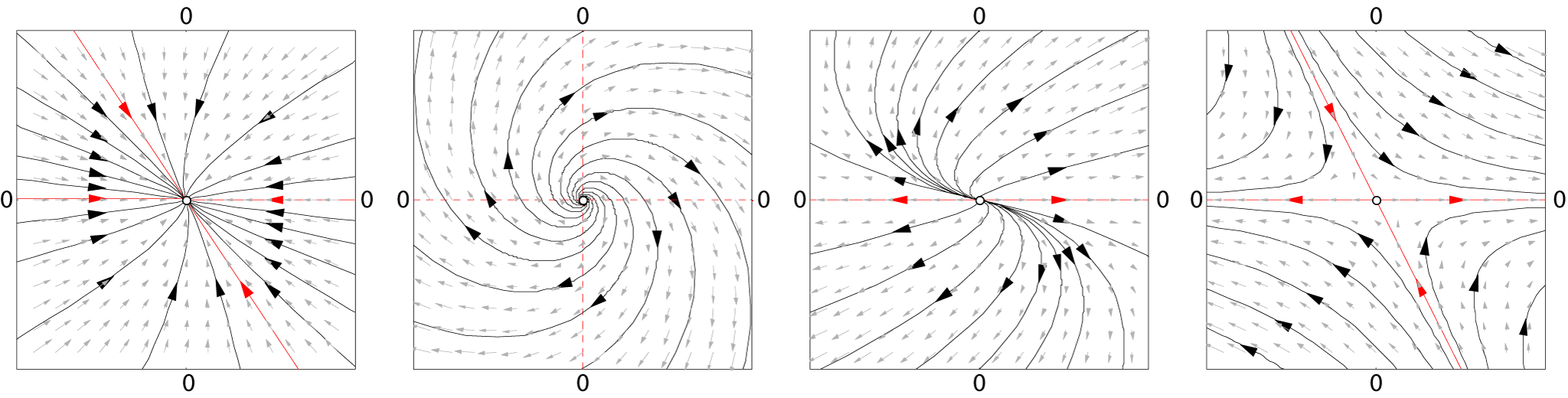

Linear dynamical systems are dynamical systems whose evolution functions are linear. While dynamical systems, in general, do not have closed-form solutions, linear dynamical systems can be solved exactly, and they have a rich set of mathematical properties. Linear systems can also be used to understand the qualitative behavior of general dynamical systems, by calculating the equilibrium points of the system and approximating it as a linear system around each such point.

Introduction. In a linear dynamical system, the variation of a state vector (an $N$-dimensional vector denoted $\mathbf{x}$) equals a constant matrix (denoted $\mathbf{A}$) multiplied by $\mathbf{x}$. This variation can take two forms: either as a flow, in which $\mathbf{x}$ varies continuously with time, $\frac{d}{dt}\mathbf{x}(t) = \mathbf{A}\mathbf{x}(t)$, or as a mapping, in which $\mathbf{x}$ varies in discrete steps, $\mathbf{x}_{m+1} = \mathbf{A}\mathbf{x}_m$. These equations are linear in the following sense: if $\mathbf{x}(t)$ and $\mathbf{y}(t)$ are two v ...
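The discrete-step form of the system can be illustrated with a small sketch. The matrix `A`, the starting vectors, and the helper names `mat_vec` and `iterate_map` are assumptions chosen for illustration; the code checks, for one example, that evolving a linear combination of two starting states gives the same linear combination of the evolved states.

```python
def mat_vec(A, x):
    """Multiply matrix A (a list of rows) by vector x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def iterate_map(A, x0, steps):
    """Apply the mapping x_{m+1} = A x_m for the given number of steps."""
    x = list(x0)
    for _ in range(steps):
        x = mat_vec(A, x)
    return x


# Illustrative 2x2 system and two starting states (hypothetical values).
A = [[0.5, 1.0],
     [0.0, 0.5]]
x0 = [1.0, 2.0]
y0 = [-3.0, 0.5]
a, b = 2.0, -1.0

# Evolve the combination a*x0 + b*y0 ...
combined_start = [a * xi + b * yi for xi, yi in zip(x0, y0)]
lhs = iterate_map(A, combined_start, 5)

# ... and compare with the same combination of the separately evolved states.
rhs = [a * xi + b * yi
       for xi, yi in zip(iterate_map(A, x0, 5), iterate_map(A, y0, 5))]

linearity_holds = all(abs(l - r) < 1e-12 for l, r in zip(lhs, rhs))
```

A single numerical check like this can only illustrate the property for the chosen inputs; it is not a proof.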

Dynamical Systems

In mathematics, a dynamical system is a system in which a function describes the time dependence of a point in an ambient space. Examples include the mathematical models that describe the swinging of a clock pendulum, the flow of water in a pipe, the random motion of particles in the air, and the number of fish each springtime in a lake. The most general definition unifies several concepts in mathematics such as ordinary differential equations and ergodic theory by allowing different choices of the space and how time is measured. Time can be measured by integers, by real or complex numbers or can be a more general algebraic object, losing the memory of its physical origin, and the space may be a manifold or simply a set, without the need of a smooth space-time structure defined on it. At any given time, a dynamical system has a state representing a point in an appropriate state space. This state is often given by a tuple of real numbers or by a vector in a geometr ...

Eigenvalue

In linear algebra, an eigenvector or characteristic vector of a linear transformation is a nonzero vector that changes at most by a scalar factor when that linear transformation is applied to it. The corresponding eigenvalue, often denoted by $\lambda$, is the factor by which the eigenvector is scaled. Geometrically, an eigenvector, corresponding to a real nonzero eigenvalue, points in a direction in which it is stretched by the transformation and the eigenvalue is the factor by which it is stretched. If the eigenvalue is negative, the direction is reversed. Loosely speaking, in a multidimensional vector space, the eigenvector is not rotated.

Formal definition. If $T$ is a linear transformation from a vector space $V$ over a field $F$ into itself and $\mathbf{v}$ is a nonzero vector in $V$, then $\mathbf{v}$ is an eigenvector of $T$ if $T(\mathbf{v})$ is a scalar multiple of $\mathbf{v}$. This can be written as $T(\mathbf{v}) = \lambda \mathbf{v}$, where $\lambda$ is a scalar in $F$, known as the eigenvalue, characteristic value, or characteristic roo ...
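As a minimal sketch of the defining relation, the following checks whether a given vector and scalar form an eigenpair of a small matrix. The matrix `A` and the helper `is_eigenpair` are hypothetical, chosen only to illustrate the definition.

```python
def mat_vec(A, v):
    """Multiply matrix A (a list of rows) by vector v."""
    return [sum(a * vi for a, vi in zip(row, v)) for row in A]


def is_eigenpair(A, v, lam, tol=1e-12):
    """Return True if v is nonzero and A v equals lam * v within tol."""
    if all(abs(c) <= tol for c in v):
        return False  # the zero vector is excluded by definition
    Av = mat_vec(A, v)
    return all(abs(a - lam * c) <= tol for a, c in zip(Av, v))


# Illustrative symmetric matrix (an assumption, not from the text).
A = [[2, 1],
     [1, 2]]

stretched = is_eigenpair(A, [1, 1], 3)    # A maps (1, 1) to (3, 3)
unchanged = is_eigenpair(A, [1, -1], 1)   # A maps (1, -1) to itself
rotated = is_eigenpair(A, [1, 0], 2)      # A maps (1, 0) to (2, 1)
```

Here `stretched` and `unchanged` are true, while `rotated` is false because $(2, 1)$ is not a scalar multiple of $(1, 0)$.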

List Of Dynamical System Topics

This is a list of dynamical system and differential equation topics, by Wikipedia page. See also list of partial differential equation topics, list of equations.

Dynamical systems, in general

* Deterministic system (mathematics)
* Linear system
* Partial differential equation
* Dynamical systems and chaos theory
* Chaos theory
  * Chaos argument
  * Butterfly effect
  * 0-1 test for chaos
* Bifurcation diagram
* Feigenbaum constant
* Sharkovskii's theorem
* Attractor
  * Strange nonchaotic attractor
* Stability theory
  * Mechanical equilibrium
  * Astable
  * Monostable
  * Bistability
  * Metastability
* Feedback
  * Negative feedback
  * Positive feedback
  * Homeostasis
* Damping ratio
* Dissipative system
* Spontaneous symmetry breaking
* Turbulence
* Perturbation theory
* Control theory
  * Non-linear control
  * Adaptive control
  * Hierarchical control
  * Intelligent control
  * Optimal control
  * Dynamic programming
  * Robust control
  * Stochastic control
* System dynamics, system analysis
* Takens' theorem
* Exponen ...

Linear System

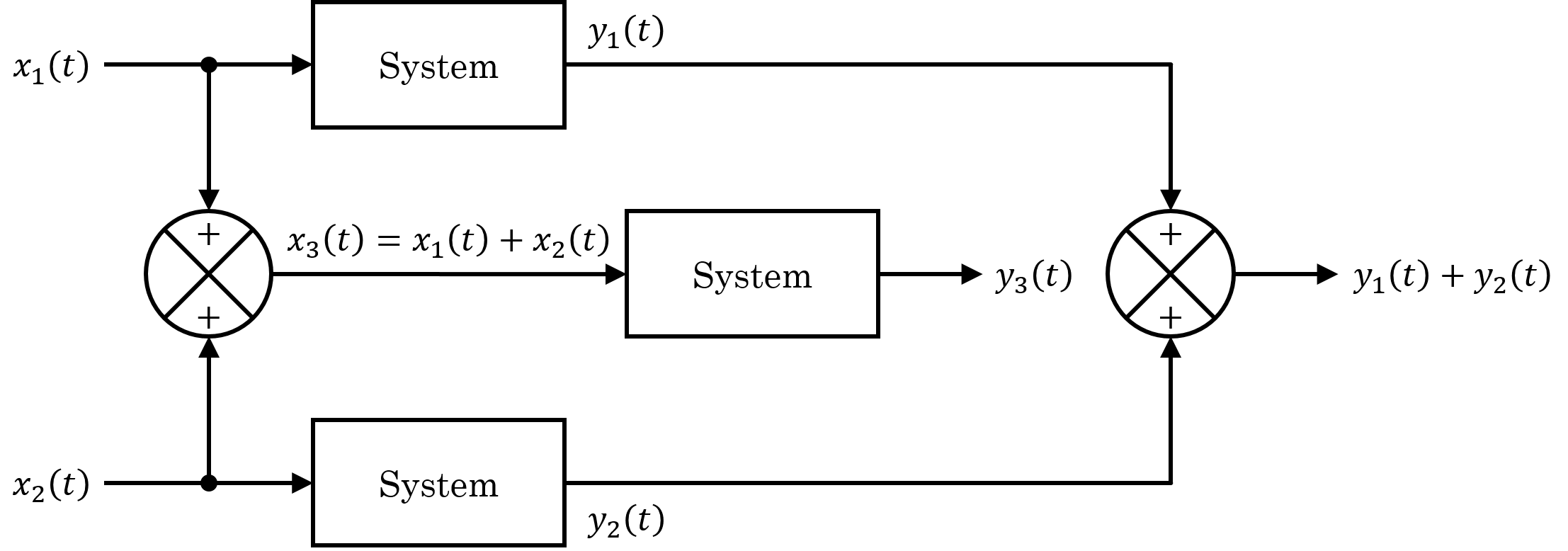

In systems theory, a linear system is a mathematical model of a system based on the use of a linear operator. Linear systems typically exhibit features and properties that are much simpler than the nonlinear case. As a mathematical abstraction or idealization, linear systems find important applications in automatic control theory, signal processing, and telecommunications. For example, the propagation medium for wireless communication systems can often be modeled by linear systems.

Definition. A general deterministic system can be described by an operator, $H$, that maps an input, $x(t)$, as a function of $t$ to an output, $y(t)$, a type of black box description. A system is linear if and only if it satisfies the superposition principle, or equivalently both the additivity and homogeneity properties, without restrictions (that is, for all inputs, all scaling constants and all time). The superposition principle means that a linear combination of inputs to the system produces a linear combin ...
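The superposition principle can be probed numerically. The sketch below uses two hypothetical discrete-time systems, `scale_and_delay` and `square`, which are assumptions made for illustration. A passing check on one pair of inputs does not prove linearity, but a failing check is enough to show a system is nonlinear.

```python
def scale_and_delay(x):
    """Hypothetical linear system: y[n] = 2*x[n] + x[n-1], with x[-1] = 0."""
    return [2 * x[n] + (x[n - 1] if n > 0 else 0) for n in range(len(x))]


def square(x):
    """Hypothetical nonlinear system: y[n] = x[n] ** 2."""
    return [v * v for v in x]


def satisfies_superposition(H, x1, x2, a, b, tol=1e-12):
    """Check H(a*x1 + b*x2) == a*H(x1) + b*H(x2) for one pair of inputs."""
    combo = [a * u + b * v for u, v in zip(x1, x2)]
    lhs = H(combo)
    rhs = [a * u + b * v for u, v in zip(H(x1), H(x2))]
    return all(abs(l - r) <= tol for l, r in zip(lhs, rhs))


x1 = [1, 2, 3]
x2 = [0, -1, 4]

linear_ok = satisfies_superposition(scale_and_delay, x1, x2, 2, -3)
square_ok = satisfies_superposition(square, x1, x2, 2, -3)
```

With these inputs, `linear_ok` is true and `square_ok` is false.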

Discriminant

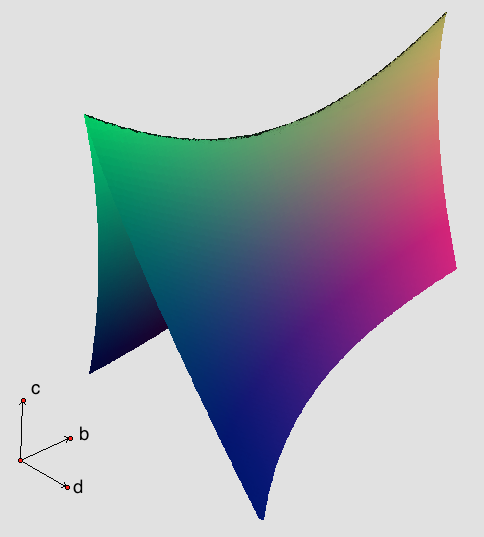

In mathematics, the discriminant of a polynomial is a quantity that depends on the coefficients and allows deducing some properties of the roots without computing them. More precisely, it is a polynomial function of the coefficients of the original polynomial. The discriminant is widely used in polynomial factoring, number theory, and algebraic geometry. The discriminant of the quadratic polynomial $ax^2+bx+c$ is $b^2-4ac$, the quantity which appears under the square root in the quadratic formula. If $a \ne 0$, this discriminant is zero if and only if the polynomial has a double root. In the case of real coefficients, it is positive if the polynomial has two distinct real roots, and negative if it has two distinct complex conjugate roots. Similarly, the discriminant of a cubic polynomial is zero if and only if the polynomial has a multiple root. In the case of a cubic with real coefficients, the discriminant is positive if the polynomial has three distinct real roots, and negati ...
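The quadratic case described above can be sketched in a few lines. The function names `discriminant` and `classify_roots` are hypothetical, and the classification assumes real coefficients with a nonzero leading coefficient.

```python
def discriminant(a, b, c):
    """Discriminant b^2 - 4ac of the quadratic a*x^2 + b*x + c."""
    return b * b - 4 * a * c


def classify_roots(a, b, c):
    """Describe the roots of a real quadratic using only its discriminant."""
    if a == 0:
        raise ValueError("not a quadratic: leading coefficient is zero")
    d = discriminant(a, b, c)
    if d > 0:
        return "two distinct real roots"
    if d == 0:
        return "double root"
    return "two distinct complex conjugate roots"


real_case = classify_roots(1, -3, 2)     # x^2 - 3x + 2 = (x - 1)(x - 2)
double_case = classify_roots(1, 2, 1)    # x^2 + 2x + 1 = (x + 1)^2
complex_case = classify_roots(1, 0, 1)   # x^2 + 1
```

Exact integer or rational coefficients are the safest inputs here, since the `d == 0` test is sensitive to floating-point rounding.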

Determinant

In mathematics, the determinant is a scalar value that is a function of the entries of a square matrix. It characterizes some properties of the matrix and the linear map represented by the matrix. In particular, the determinant is nonzero if and only if the matrix is invertible and the linear map represented by the matrix is an isomorphism. The determinant of a product of matrices is the product of their determinants (the preceding property is a corollary of this one). The determinant of a matrix $A$ is denoted $\det(A)$, $\det A$, or $|A|$. The determinant of a $2 \times 2$ matrix is $\begin{vmatrix} a & b \\ c & d \end{vmatrix} = ad - bc$, and the determinant of a $3 \times 3$ matrix is $\begin{vmatrix} a & b & c \\ d & e & f \\ g & h & i \end{vmatrix} = aei + bfg + cdh - ceg - bdi - afh$. The determinant of an $n \times n$ matrix can be defined in several equivalent ways. The Leibniz formula expresses the determinant as a sum of signed products of matrix entries such that each summand is the product of different entries, and the number of these summands is $n!$, the factorial o ...
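The $2 \times 2$ and $3 \times 3$ formulas translate directly into code. In this sketch the helper names `det2`, `det3`, and `matmul` and the sample matrices are assumptions for illustration; the last lines check the product property on one example.

```python
def det2(m):
    """Determinant of a 2x2 matrix: ad - bc."""
    (a, b), (c, d) = m
    return a * d - b * c


def det3(m):
    """Determinant of a 3x3 matrix: aei + bfg + cdh - ceg - bdi - afh."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * e * i + b * f * g + c * d * h - c * e * g - b * d * i - a * f * h


def matmul(X, Y):
    """Product of matrices X and Y given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


X = [[1, 2],
     [3, 4]]
Y = [[0, 1],
     [5, 2]]
M = [[2, 0, 1],
     [1, 3, 2],
     [1, 1, 2]]

# The determinant of a product equals the product of the determinants.
product_rule_holds = det2(matmul(X, Y)) == det2(X) * det2(Y)
```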

Trace (linear Algebra)

In linear algebra, the trace of a square matrix $\mathbf{A}$, denoted $\operatorname{tr}(\mathbf{A})$, is defined to be the sum of elements on the main diagonal (from the upper left to the lower right) of $\mathbf{A}$. The trace is only defined for a square matrix ($n \times n$). It can be proved that the trace of a matrix is the sum of its (complex) eigenvalues (counted with multiplicities). It can also be proved that $\operatorname{tr}(\mathbf{AB}) = \operatorname{tr}(\mathbf{BA})$ for any two matrices $\mathbf{A}$ and $\mathbf{B}$ of appropriate sizes. This implies that similar matrices have the same trace. As a consequence one can define the trace of a linear operator mapping a finite-dimensional vector space into itself, since all matrices describing such an operator with respect to a basis are similar. The trace is related to the derivative of the determinant (see Jacobi's formula).

Definition. The trace of an $n \times n$ square matrix $\mathbf{A}$ is defined as $\operatorname{tr}(\mathbf{A}) = \sum_{i=1}^n a_{ii} = a_{11} + a_{22} + \dots + a_{nn}$, where $a_{ii}$ denotes the entry on the $i$th row and $i$th column of $\mathbf{A}$. The entries of $\mathbf{A}$ can be real numbers or (more generally) complex numbers. The trace is ...
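A short sketch of the definition and of the cyclic property follows. The helper names `trace` and `matmul` and the sample matrices are assumptions for illustration; the example deliberately uses non-square factors whose products in both orders are square.

```python
def trace(M):
    """Sum of the main-diagonal entries of a square matrix."""
    if any(len(row) != len(M) for row in M):
        raise ValueError("trace is only defined for square matrices")
    return sum(M[i][i] for i in range(len(M)))


def matmul(X, Y):
    """Product of matrices X and Y given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


A = [[1, 2, 3],
     [4, 5, 6]]          # 2x3
B = [[1, 0],
     [0, 1],
     [2, 2]]             # 3x2

trace_AB = trace(matmul(A, B))   # trace of a 2x2 matrix
trace_BA = trace(matmul(B, A))   # trace of a 3x3 matrix
```

Although `matmul(A, B)` and `matmul(B, A)` have different shapes, their traces agree.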

Characteristic Polynomial

In linear algebra, the characteristic polynomial of a square matrix is a polynomial which is invariant under matrix similarity and has the eigenvalues as roots. It has the determinant and the trace of the matrix among its coefficients. The characteristic polynomial of an endomorphism of a finite-dimensional vector space is the characteristic polynomial of the matrix of that endomorphism over any basis (that is, the characteristic polynomial does not depend on the choice of a basis). The characteristic equation, also known as the determinantal equation, is the equation obtained by equating the characteristic polynomial to zero. In spectral graph theory, the characteristic polynomial of a graph is the characteristic polynomial of its adjacency matrix.

Motivation. In linear algebra, eigenvalues and eigenvectors play a fundamental role, since, given a linear transformation, an eigenvector is a vector whose direction is not changed by the transformation, and the corresponding ...
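For a $2 \times 2$ matrix the characteristic polynomial is $\lambda^2 - \operatorname{tr}(A)\,\lambda + \det(A)$, which makes the role of the trace and determinant explicit. The sketch below is restricted to that case; the function names and sample matrices are assumptions for illustration.

```python
import cmath


def char_poly_2x2(A):
    """Coefficients (1, -trace, det) of lambda^2 - tr(A)*lambda + det(A)."""
    (a, b), (c, d) = A
    return (1, -(a + d), a * d - b * c)


def eigenvalues_2x2(A):
    """Roots of the characteristic polynomial via the quadratic formula."""
    _, p, q = char_poly_2x2(A)
    s = cmath.sqrt(p * p - 4 * q)   # complex square root handles negative values
    return ((-p + s) / 2, (-p - s) / 2)


symmetric = [[2, 1],
             [1, 2]]
rotation = [[0, -1],
            [1, 0]]     # quarter-turn rotation, no real eigenvalues

coeffs = char_poly_2x2(symmetric)
real_roots = eigenvalues_2x2(symmetric)
complex_roots = eigenvalues_2x2(rotation)
```

For the symmetric example the roots are real, while the rotation matrix has a purely imaginary conjugate pair.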

Left Eigenvector

In linear algebra, an eigenvector or characteristic vector of a linear transformation is a nonzero vector that changes at most by a scalar factor when that linear transformation is applied to it. The corresponding eigenvalue, often denoted by $\lambda$, is the factor by which the eigenvector is scaled. Geometrically, an eigenvector, corresponding to a real nonzero eigenvalue, points in a direction in which it is stretched by the transformation and the eigenvalue is the factor by which it is stretched. If the eigenvalue is negative, the direction is reversed. Loosely speaking, in a multidimensional vector space, the eigenvector is not rotated.

Formal definition. If $T$ is a linear transformation from a vector space $V$ over a field $F$ into itself and $\mathbf{v}$ is a nonzero vector in $V$, then $\mathbf{v}$ is an eigenvector of $T$ if $T(\mathbf{v})$ is a scalar multiple of $\mathbf{v}$. This can be written as $T(\mathbf{v}) = \lambda \mathbf{v}$, where $\lambda$ is a scalar in $F$, known as the eigenvalue, characteristic value, or characteristic root assoc ...

Diagonalizable Matrix

In linear algebra, a square matrix $A$ is called diagonalizable or non-defective if it is similar to a diagonal matrix, i.e., if there exists an invertible matrix $P$ and a diagonal matrix $D$ such that $P^{-1}AP = D$, or equivalently $A = PDP^{-1}$. (Such $P$ and $D$ are not unique.) For a finite-dimensional vector space $V$, a linear map $T: V \to V$ is called diagonalizable if there exists an ordered basis of $V$ consisting of eigenvectors of $T$. These definitions are equivalent: if $T$ has a matrix representation $A = PDP^{-1}$ as above, then the column vectors of $P$ form a basis consisting of eigenvectors of $T$, and the diagonal entries of $D$ are the corresponding eigenvalues of $T$; with respect to this eigenvector basis, $T$ is represented by $D$. Diagonalization is the process of finding the above $P$ and $D$. Diagonalizable matrices and maps are especially easy for computations, once their eigenvalues and eigenvectors are known. One can raise a diagonal matrix $D$ to a power by simply raising the diagonal entries to that power, and the determi ...
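The computational convenience mentioned above can be sketched for the $2 \times 2$ case: once $P$ and the eigenvalues are known, $A^k = P D^k P^{-1}$ only requires powers of the diagonal entries. The helper names and the example matrices are assumptions for illustration, and exact rational arithmetic is used to avoid rounding.

```python
from fractions import Fraction


def matmul(X, Y):
    """Product of matrices X and Y given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


def inverse_2x2(P):
    """Inverse of an invertible 2x2 matrix."""
    (a, b), (c, d) = P
    det = a * d - b * c
    if det == 0:
        raise ValueError("P must be invertible")
    return [[d / det, -b / det], [-c / det, a / det]]


def power_via_diagonalization(P, eigenvalues, k):
    """Compute P D^k P^{-1}, where D = diag(eigenvalues)."""
    D_k = [[eigenvalues[0] ** k, 0],
           [0, eigenvalues[1] ** k]]
    return matmul(matmul(P, D_k), inverse_2x2(P))


# Columns of P are eigenvectors (1, 1) and (1, -1) with eigenvalues 3 and 1.
P = [[Fraction(1), Fraction(1)],
     [Fraction(1), Fraction(-1)]]
eigenvalues = [Fraction(3), Fraction(1)]

A = power_via_diagonalization(P, eigenvalues, 1)        # recovers A itself
A_cubed = power_via_diagonalization(P, eigenvalues, 3)  # A^3 without repeated products
```

Comparing `A_cubed` with `matmul(matmul(A, A), A)` is a quick way to confirm the two routes agree.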