
Leave One Out Error

For mathematical analysis and statistics, leave-one-out error can refer to the following:

* Leave-one-out cross-validation stability (CVloo, for *stability of cross-validation with leave one out*): an algorithm $f$ has CVloo stability $\beta_{CV}$ with respect to the loss function $V$ if the following holds:

  $$\forall i \in \{1, \dots, m\},\quad \mathbb{P}_S\left\{ \left| V(f_S, z_i) - V(f_{S^{|i}}, z_i) \right| \leq \beta_{CV} \right\} \geq 1 - \delta_{CV}$$

* Expected-to-leave-one-out error stability ($Eloo_{err}$, for *expected error from leaving one out*): an algorithm $f$ has $Eloo_{err}$ stability if for each $m$ there exist $\beta_{EL}^m$ and $\delta_{EL}^m$ such that:

  $$\forall i \in \{1, \dots, m\},\quad \mathbb{P}_S\left\{ \left| I[f_S] - \frac{1}{m} \sum_{j=1}^{m} V(f_{S^{|j}}, z_j) \right| \leq \beta_{EL}^m \right\} \geq 1 - \delta_{EL}^m,$$

  with $\beta_{EL}^m$ and $\delta_{EL}^m$ going to zero as $m \rightarrow \infty$.

Here $f_S$ is the function learned from $S$, $S^{|i}$ is $S$ with the point $z_i$ removed, and $I[f_S]$ is the expected error of $f_S$.

Preliminary notations

Let $X, Y \subset \mathbb{R}$ be, respectively, an input space and an output space. We consider a training set $S = \{z_1 = (x_1, y_1), \dots, z_m = (x_m, y_m)\}$ of size $m$ in $Z = X \times Y$, drawn independently and identically distributed (i.i.d.) from an unknown distribution, here called $D$. Then a learning algorithm is a function ...
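
The leave-one-out quantities above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the toy learner `mean_learner` (which ignores $x$ and predicts the mean of the $y$ values) and the squared loss are hypothetical choices. The sketch computes the leave-one-out error $\frac{1}{m}\sum_{i} V(f_{S^{|i}}, z_i)$, where $S^{|i}$ is $S$ with $z_i$ removed, together with the largest per-point gap $|V(f_S, z_i) - V(f_{S^{|i}}, z_i)|$, the quantity that CVloo stability bounds (with high probability) for each $i$.

```python
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]            # z = (x, y)
Predictor = Callable[[float], float]   # f_S : X -> Y
Learner = Callable[[Sequence[Point]], Predictor]
Loss = Callable[[Predictor, Point], float]


def mean_learner(S: Sequence[Point]) -> Predictor:
    """Hypothetical toy algorithm: ignore x and always predict the mean of y over S."""
    y_bar = sum(y for _, y in S) / len(S)
    return lambda x: y_bar


def squared_loss(f: Predictor, z: Point) -> float:
    """V(f, z) = (f(x) - y)^2."""
    x, y = z
    return (f(x) - y) ** 2


def leave_one_out(S: Sequence[Point], learn: Learner, loss: Loss) -> Tuple[float, float]:
    """Return (leave-one-out error, max_i |V(f_S, z_i) - V(f_{S^{|i}}, z_i)|)."""
    f_S = learn(S)
    total, worst_gap = 0.0, 0.0
    for i, z_i in enumerate(S):
        S_minus_i: List[Point] = list(S[:i]) + list(S[i + 1:])  # S^{|i}: S without z_i
        f_minus_i = learn(S_minus_i)                          # retrain on m - 1 points
        held_out_loss = loss(f_minus_i, z_i)                  # z_i was not seen in training
        total += held_out_loss
        worst_gap = max(worst_gap, abs(loss(f_S, z_i) - held_out_loss))
    return total / len(S), worst_gap


S = [(0.0, 1.0), (1.0, 2.0), (2.0, 4.0), (3.0, 5.0)]
loo_error, gap = leave_one_out(S, mean_learner, squared_loss)
```

For this toy learner the leave-one-out error works out to 40/9 (about 4.44), larger than the training error of 2.5, because each held-out point is predicted by a mean that excludes it.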

Mathematical Analysis

Analysis is the branch of mathematics dealing with continuous functions, limits, and related theories, such as differentiation, integration, measure, infinite sequences, series, and analytic functions. These theories are usually studied in the context of real and complex numbers and functions. Analysis evolved from calculus, which involves the elementary concepts and techniques of analysis. Analysis may be distinguished from geometry; however, it can be applied to any space of mathematical objects that has a definition of nearness (a topological space) or specific distances between objects (a metric space).

History

Ancient

Mathematical analysis formally developed in the 17th century during the Scientific Revolution, but many of its ideas can be traced back to earlier mathematicians. Early results in analysis were i ...

Deterministic Algorithm

In computer science, a deterministic algorithm is an algorithm that, given a particular input, will always produce the same output, with the underlying machine always passing through the same sequence of states. Deterministic algorithms are by far the most studied and familiar kind of algorithm, as well as one of the most practical, since they can be run on real machines efficiently. Formally, a deterministic algorithm computes a mathematical function; a function has a unique value for any input in its domain, and the algorithm is a process that produces this particular value as output.

Formal definition

Deterministic algorithms can be defined in terms of a state machine: a *state* describes what a machine is doing at a particular instant in time. State machines pass in a discrete manner from one state to another. Just after we enter the input, the machine is in its *initial state* or *start state*. If the machine is deterministic, this means that from this point onward ...
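
To make the state-machine view concrete, here is a small sketch. The machine is a hypothetical example (it tracks whether an even or odd number of 1s has been read); what makes it deterministic is that the transition table maps every (state, input symbol) pair to exactly one next state, so the same input always drives it through the same sequence of states.

```python
from typing import Dict, List, Tuple

# Hypothetical example machine: tracks the parity of the number of '1' symbols read.
TRANSITIONS: Dict[Tuple[str, str], str] = {
    ("even", "0"): "even",
    ("even", "1"): "odd",
    ("odd", "0"): "odd",
    ("odd", "1"): "even",
}


def run(input_string: str, start_state: str = "even") -> List[str]:
    """Return every state the machine passes through, beginning with the start state."""
    states = [start_state]
    for symbol in input_string:
        # Exactly one successor per (state, symbol): no choices, no randomness.
        states.append(TRANSITIONS[(states[-1], symbol)])
    return states


trace = run("1101")
```

Calling `run("1101")` repeatedly always yields the same trace, `['even', 'odd', 'even', 'even', 'odd']`; the final state is the computed output.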

Statistical Classification

In statistics, classification is the problem of identifying which of a set of categories (sub-populations) an observation (or observations) belongs to. Examples are assigning a given email to the "spam" or "non-spam" class, and assigning a diagnosis to a given patient based on observed characteristics of the patient (sex, blood pressure, presence or absence of certain symptoms, etc.). Often, the individual observations are analyzed into a set of quantifiable properties, known variously as explanatory variables or *features*. These properties may variously be categorical (e.g. "A", "B", "AB" or "O", for blood type), ordinal (e.g. "large", "medium" or "small"), integer-valued (e.g. the number of occurrences of a particular word in an email) or real-valued (e.g. a measurement of blood pressure). Other classifiers work by comparing observations to previous observations by means of a similarity or distance function. An algorithm that implements classification, especially in a ...
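
The distance-based approach described above can be illustrated with a one-nearest-neighbour rule. This is a sketch under stated assumptions: the two numeric features (hypothetically, occurrences of a trigger word and number of links in an email) and the training examples are invented for illustration, and Euclidean distance is only one possible choice of distance function.

```python
import math
from typing import List, Sequence, Tuple

Example = Tuple[Sequence[float], str]  # (feature vector, class label)


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def classify_1nn(train: List[Example], x: Sequence[float]) -> str:
    """Give x the label of the closest previously seen observation."""
    _, label = min(train, key=lambda example: euclidean(example[0], x))
    return label


# Hypothetical features: (occurrences of a trigger word, number of links).
train = [
    ((5.0, 3.0), "spam"),
    ((4.0, 4.0), "spam"),
    ((0.0, 1.0), "non-spam"),
    ((1.0, 0.0), "non-spam"),
]
```

Ties are broken by list order, since `min` returns the first minimal element it encounters.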

Jackknife Resampling

In statistics, the jackknife (jackknife cross-validation) is a cross-validation technique and, therefore, a form of resampling. It is especially useful for bias and variance estimation. The jackknife pre-dates other common resampling methods such as the bootstrap. Given a sample of size $n$, a jackknife estimator can be built by aggregating the parameter estimates from each subsample of size $n-1$ obtained by omitting one observation. The jackknife technique was developed by Maurice Quenouille (1924–1973) from 1949 and refined in 1956. John Tukey expanded on the technique in 1958 and proposed the name "jackknife" because, like a physical jack-knife (a compact folding knife), it is a rough-and-ready tool that can improvise a solution for a variety of problems, even though specific problems may be more efficiently solved with a purpose-designed tool. The jackknife is a linear approximation of the bootstrap.

A simple example: Mean estimation

The jackknife estimator of a parameter ...
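
A minimal sketch of the leave-one-out recipe described above, using the standard jackknife bias estimate $(n-1)(\bar\theta_{(\cdot)} - \hat\theta)$ and variance estimate $\frac{n-1}{n}\sum_i (\hat\theta_{(i)} - \bar\theta_{(\cdot)})^2$, where $\hat\theta_{(i)}$ is the estimate with observation $i$ omitted and $\bar\theta_{(\cdot)}$ is their average. The function name and the sample data are illustrative assumptions.

```python
from statistics import mean
from typing import Callable, List, Sequence, Tuple

Estimator = Callable[[Sequence[float]], float]


def jackknife(data: Sequence[float], estimator: Estimator) -> Tuple[float, float]:
    """Return the jackknife (bias, variance) estimates for `estimator` on `data`."""
    n = len(data)
    theta_hat = estimator(data)
    # Leave-one-out estimates: recompute the statistic on each subsample of size n - 1.
    loo: List[float] = [estimator(list(data[:i]) + list(data[i + 1:])) for i in range(n)]
    theta_bar = sum(loo) / n
    bias = (n - 1) * (theta_bar - theta_hat)
    variance = (n - 1) / n * sum((t - theta_bar) ** 2 for t in loo)
    return bias, variance


sample = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
bias, variance = jackknife(sample, mean)
```

For the sample mean, the jackknife bias is zero (up to rounding) and the variance estimate equals $s^2/n$, the familiar squared standard error; here $s^2 = 32/7$ and $n = 8$, giving $4/7 \approx 0.571$.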

Hypercomplex Analysis

In mathematics, hypercomplex analysis is the basic extension of real analysis and complex analysis to the study of functions where the argument is a hypercomplex number. The first instance is functions of a quaternion variable, where the argument is a quaternion (in this case, the sub-field of hypercomplex analysis is called quaternionic analysis). A second instance involves functions of a motor variable where arguments are split-complex numbers. In mathematical physics, there are hypercomplex systems called Clifford algebras. The study of functions with arguments from a Clifford algebra is called Clifford analysis. A matrix may be considered a hypercomplex number. For example, the study of functions of 2 × 2 real matrices shows that the topology of the space of hypercomplex numbers determines the function theory. Functions such as square root of a matrix, matrix exponential, and logarithm of a matrix are basic examples of hypercomplex analysis. The function theory ...
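
Matrix functions of the kind mentioned above can be explored numerically. The sketch below (helper names are hypothetical) approximates the matrix exponential $e^A = \sum_{k \ge 0} A^k / k!$ of a $2 \times 2$ real matrix by truncating its power series; 30 terms is an assumption adequate only for matrices of modest size. The complex unit $i$ can be represented by $\begin{pmatrix}0&-1\\1&0\end{pmatrix}$, whose square is $-I$, and the split-complex unit $j$ by $\begin{pmatrix}0&1\\1&0\end{pmatrix}$, whose square is $+I$. Exponentiating $t$ times the first gives a rotation ($\cos t$, $\sin t$ entries), while the second gives $\cosh t$ and $\sinh t$: a small illustration of how the two number systems lead to different function theories.

```python
import math
from typing import List

Matrix = List[List[float]]
IDENTITY: Matrix = [[1.0, 0.0], [0.0, 1.0]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Product of two 2x2 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def expm_2x2(a: Matrix, terms: int = 30) -> Matrix:
    """Approximate exp(A) = sum_{k>=0} A^k / k! by its first `terms` terms."""
    result = [row[:] for row in IDENTITY]  # k = 0 term
    term = [row[:] for row in IDENTITY]
    for k in range(1, terms):
        # Turn A^(k-1)/(k-1)! into A^k/k!.
        term = [[v / k for v in row] for row in matmul(term, a)]
        result = [[result[i][j] + term[i][j] for j in range(2)] for i in range(2)]
    return result


t = 0.5
t_times_i = [[0.0, -t], [t, 0.0]]   # t times the matrix form of the complex unit i
t_times_j = [[0.0, t], [t, 0.0]]    # t times the matrix form of the split-complex unit j
rotation = expm_2x2(t_times_i)      # approx [[cos t, -sin t], [sin t, cos t]]
hyperbolic = expm_2x2(t_times_j)    # approx [[cosh t, sinh t], [sinh t, cosh t]]
```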

History Of Calculus

Calculus, originally called infinitesimal calculus, is a mathematical discipline focused on limits, continuity, derivatives, integrals, and infinite series. Many elements of calculus appeared in ancient Greece, then in China and the Middle East, and still later again in medieval Europe and in India. Infinitesimal calculus was developed in the late 17th century by Isaac Newton and Gottfried Wilhelm Leibniz independently of each other. An argument over priority led to the Leibniz–Newton calculus controversy, which continued until the death of Leibniz in 1716. The development of calculus and its uses within the sciences have continued to the present day.

Etymology

In mathematics education, *calculus* denotes courses of elementary mathematical analysis, which are mainly devoted to the study of functions and limits. The word *calculus* is Latin for "small pebble" (the diminutive of *calx*, meaning "stone"), a meaning which still persists in medicine. Because such pebbles were ...

Constructive Analysis

In mathematics, constructive analysis is mathematical analysis done according to some principles of constructive mathematics. This contrasts with *classical analysis*, which (in this context) simply means analysis done according to the (more common) principles of classical mathematics. Generally speaking, constructive analysis can reproduce theorems of classical analysis, but only in application to separable spaces; also, some theorems may need to be approached by approximations. Furthermore, many classical theorems can be stated in ways that are logically equivalent according to classical logic, but not all of these forms will be valid in constructive analysis, which uses intuitionistic logic.

Examples

The intermediate value theorem

For a simple example, consider the intermediate value theorem (IVT). In classical analysis, IVT implies that, given any continuous function *f* from a closed interval [*a*, *b*] to the real line *R*, if *f*(*a*) is negative while *f* ...
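
In constructive analysis the intermediate value theorem is typically replaced by an approximate version: if $f$ is continuous on $[a, b]$ with $f(a) \le 0 \le f(b)$, then for every $\varepsilon > 0$ there is an $x$ with $|f(x)| < \varepsilon$. The Python sketch below illustrates that statement numerically with bisection over exact rationals; it assumes $f(a) < 0 < f(b)$ and is a classical numerical illustration, not a constructive proof. The function name and the example $f(x) = x^2 - 2$ are illustrative choices.

```python
from fractions import Fraction
from typing import Callable

RationalFn = Callable[[Fraction], Fraction]


def approx_zero(f: RationalFn, a: Fraction, b: Fraction, eps: Fraction) -> Fraction:
    """Bisection, assuming f is continuous with f(a) < 0 < f(b).

    Returns some x in [a, b] with |f(x)| < eps; it never claims to find an exact zero.
    """
    lo, hi = a, b
    while True:
        mid = (lo + hi) / 2
        value = f(mid)
        if abs(value) < eps:
            return mid
        # Keep the half-interval on which f still changes sign.
        if value < 0:
            lo = mid
        else:
            hi = mid


def f(x: Fraction) -> Fraction:
    return x * x - 2


x = approx_zero(f, Fraction(1), Fraction(2), Fraction(1, 1000))
```

Since $\sqrt{2}$ is irrational, no rational midpoint is an exact zero, which is exactly why the search stops at an $\varepsilon$-approximation instead.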

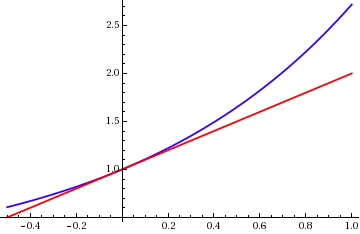

Approximation Error

The approximation error in a data value is the discrepancy between an exact value and some *approximation* to it. This error can be expressed as an absolute error (the numerical amount of the discrepancy) or as a relative error (the absolute error divided by the data value). An approximation error can occur because of computing machine precision or measurement error (e.g. the length of a piece of paper is 4.53 cm but the ruler only allows you to estimate it to the nearest 0.1 cm, so you measure it as 4.5 cm). In the mathematical field of numerical analysis, the numerical stability of an algorithm indicates how the error is propagated by the algorithm.

Formal definition

One commonly distinguishes between the relative error and the absolute error. Given some value $v$ and its approximation $v_{\text{approx}}$, the absolute error is

$$\epsilon = |v - v_{\text{approx}}|,$$

where the vertical bars denote the absolute value. If $v \neq 0$, the relative error is

$$\eta = \frac{\epsilon}{|v|} = \left| \frac{v - v_{\text{approx}}}{v} \right|$$

...
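
The two definitions translate directly into code. A minimal sketch applied to the ruler example above (a true length of 4.53 cm measured as 4.5 cm); the function names are illustrative.

```python
def absolute_error(v: float, v_approx: float) -> float:
    """epsilon = |v - v_approx|"""
    return abs(v - v_approx)


def relative_error(v: float, v_approx: float) -> float:
    """eta = |v - v_approx| / |v|, defined only for v != 0."""
    if v == 0:
        raise ValueError("relative error is undefined for v = 0")
    return abs(v - v_approx) / abs(v)


eps = absolute_error(4.53, 4.5)
eta = relative_error(4.53, 4.5)
```

The absolute error is about 0.03 cm and the relative error about 0.0066 (roughly 0.66 %). Because of binary floating-point representation, `eps` is close to, but not exactly, 0.03.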

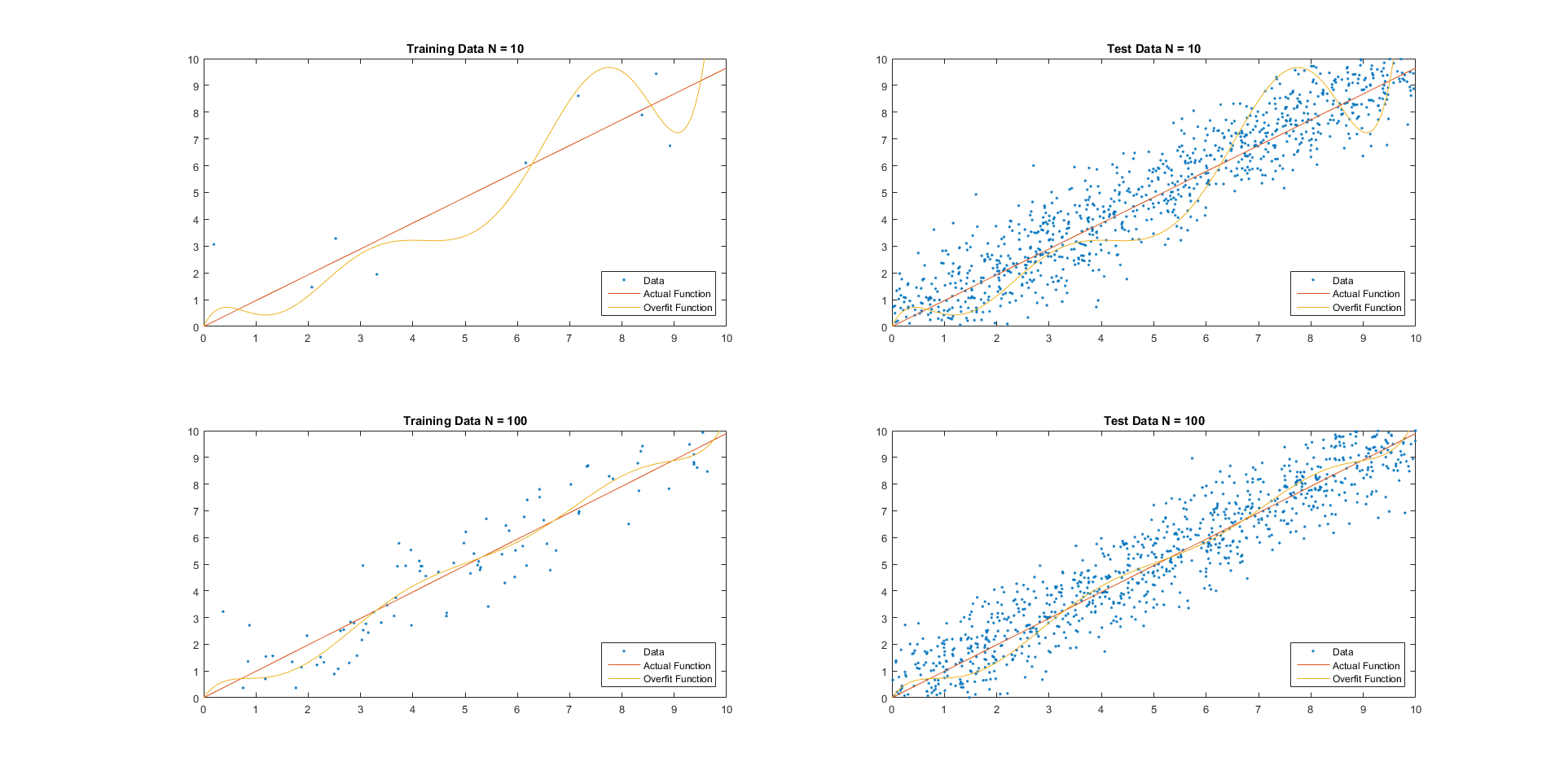

Generalization Error

For supervised learning applications in machine learning and statistical learning theory, generalization error (also known as the out-of-sample error or the risk) is a measure of how accurately an algorithm is able to predict outcome values for previously unseen data. Because learning algorithms are evaluated on finite samples, the evaluation of a learning algorithm may be sensitive to sampling error. As a result, measurements of prediction error on the current data may not provide much information about the algorithm's predictive ability on new data. Generalization error can be reduced by avoiding overfitting in the learning algorithm. The performance of a machine learning algorithm is commonly visualized by plots, called learning curves, that show *estimates* of the generalization error throughout the learning process.

Definition

In a learning problem, the goal is to develop a function $f_n(\vec{x})$ that predicts output values $y$ for each input datum $\vec{x}$. The subscript $n$ indicates that ...
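
Because the generalization error concerns unseen data, it is usually estimated on data held out from training. The sketch below uses an invented toy data set and a hypothetical one-nearest-neighbour regressor that simply memorizes its training points, to show why error measured on the training data can be a poor guide: the training error is zero, while the held-out estimate is not.

```python
from typing import Callable, List, Tuple

Data = List[Tuple[float, float]]  # (x, y) pairs


def fit_1nn(train: Data) -> Callable[[float], float]:
    """Toy learner: predict the y of the nearest training x (memorizes the training set)."""
    return lambda x: min(train, key=lambda p: abs(p[0] - x))[1]


def mean_squared_error(f: Callable[[float], float], data: Data) -> float:
    """Average squared loss of predictor f over a data set."""
    return sum((f(x) - y) ** 2 for x, y in data) / len(data)


train = [(0.0, 0.1), (1.0, 0.9), (2.0, 2.2), (3.0, 2.8)]  # invented data
held_out = [(0.4, 0.4), (1.6, 1.6), (2.4, 2.4)]           # never seen during fitting
f_n = fit_1nn(train)
train_err = mean_squared_error(f_n, train)      # every training point is reproduced exactly
test_err = mean_squared_error(f_n, held_out)    # estimate of error on unseen data
```

Here the training error is exactly 0.0, while the held-out mean squared error is 0.49/3 (about 0.163); the held-out figure is the more honest estimate of the generalization error.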

Hypothesis

A hypothesis (plural hypotheses) is a proposed explanation for a phenomenon. For a hypothesis to be a scientific hypothesis, the scientific method requires that one can test it. Scientists generally base scientific hypotheses on previous observations that cannot satisfactorily be explained with the available scientific theories. Even though the words "hypothesis" and "theory" are often used interchangeably, a scientific hypothesis is not the same as a scientific theory. A working hypothesis is a provisionally accepted hypothesis proposed for further research in a process beginning with an educated guess or thought. A different meaning of the term *hypothesis* is used in formal logic, to denote the antecedent of a proposition; thus in the proposition "If *P*, then *Q*", *P* denotes the hypothesis (or antecedent); *Q* can be called a consequent. *P* is the assumption in a (possibly counterfactual ...

Training, Validation, And Test Sets

In machine learning, a common task is the study and construction of algorithms that can learn from and make predictions on data. Such algorithms function by making data-driven predictions or decisions, through building a mathematical model from input data. These input data used to build the model are usually divided into multiple data sets. In particular, three data sets are commonly used in different stages of the creation of the model: training, validation and test sets. The model is initially fit on a training data set, which is a set of examples used to fit the parameters (e.g. weights of connections between neurons in artificial neural networks) of the model. The model (e.g. a naive Bayes classifier) is trained on the training data set using a supervised learning method, for example using optimization methods such as gradient descent or stochastic gradient descent. In practice, the training data set often consists of pairs of an input vector (or scalar) and the corresponding ...
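
One common way to obtain the three sets is to shuffle the available examples once and cut them into disjoint parts. A minimal sketch; the 70/15/15 proportions, the function name and the fixed seed are assumptions for illustration, not a rule from the text.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def train_val_test_split(
    items: Sequence[T], val_frac: float = 0.15, test_frac: float = 0.15, seed: int = 0
) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle with a fixed seed, then cut into disjoint training, validation and test sets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed -> reproducible split
    n_test = round(len(shuffled) * test_frac)
    n_val = round(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]      # the remainder is used to fit the parameters
    return train, val, test


train, val, test = train_val_test_split(list(range(100)))
```

Typically the model's parameters are fit on `train`, choices such as hyperparameters are compared on `val`, and `test` is used only for the final evaluation.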

Statistics

Statistics (from German: *Statistik*, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006) *The Oxford Dictionary of Statistical Terms*, Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample to the population as a whole. An ex ...