|

Least Frequently Used

Least Frequently Used (LFU) is a type of cache algorithm used to manage memory within a computer. The standard characteristics of this method involve the system keeping track of the number of times a block is referenced in memory. When the cache is full and requires more room the system will purge the item with the lowest reference frequency. LFU is sometimes combined with a Least Recently Used algorithm and called LRFU. Implementation The simplest method to employ an LFU algorithm is to assign a counter to every block that is loaded into the cache. Each time a reference is made to that block the counter is increased by one. When the cache reaches capacity and has a new block waiting to be inserted the system will search for the block with the lowest counter and remove it from the cache. * Ideal LFU: there is a counter for each item in the catalogue * Practical LFU: there is a counter for the items stored in cache. The counter is forgotten if the item is evicted. Problems While ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cache Algorithm

In computing, cache algorithms (also frequently called cache replacement algorithms or cache replacement policies) are optimizing instructions, or algorithms, that a computer program or a hardware-maintained structure can utilize in order to manage a cache of information stored on the computer. Caching improves performance by keeping recent or often-used data items in memory locations that are faster or computationally cheaper to access than normal memory stores. When the cache is full, the algorithm must choose which items to discard to make room for the new ones. Overview The average memory reference time is : T = m \times T_m + T_h + E where : m = miss ratio = 1 - (hit ratio) : T_m = time to make a main memory access when there is a miss (or, with multi-level cache, average memory reference time for the next-lower cache) : T_h= the latency: the time to reference the cache (should be the same for hits and misses) : E = various secondary effects, such as queuing effects in mult ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cache (computing)

In computing, a cache ( ) is a hardware or software component that stores data so that future requests for that data can be served faster; the data stored in a cache might be the result of an earlier computation or a copy of data stored elsewhere. A ''cache hit'' occurs when the requested data can be found in a cache, while a ''cache miss'' occurs when it cannot. Cache hits are served by reading data from the cache, which is faster than recomputing a result or reading from a slower data store; thus, the more requests that can be served from the cache, the faster the system performs. To be cost-effective and to enable efficient use of data, caches must be relatively small. Nevertheless, caches have proven themselves in many areas of computing, because typical computer applications access data with a high degree of locality of reference. Such access patterns exhibit temporal locality, where data is requested that has been recently requested already, and spatial locality, where d ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Page (computer Memory)

A page, memory page, or virtual page is a fixed-length contiguous block of virtual memory, described by a single entry in the page table. It is the smallest unit of data for memory management in a virtual memory operating system. Similarly, a page frame is the smallest fixed-length contiguous block of physical memory into which memory pages are mapped by the operating system. A transfer of pages between main memory and an auxiliary store, such as a hard disk drive, is referred to as paging or swapping. Page size trade-off Page size is usually determined by the processor architecture. Traditionally, pages in a system had uniform size, such as 4,096 bytes. However, processor designs often allow two or more, sometimes simultaneous, page sizes due to its benefits. There are several points that can factor into choosing the best page size. Page table size A system with a smaller page size uses more pages, requiring a page table that occupies more space. For example, if a 232 vi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Reference (computer Science)

In computer programming, a reference is a value that enables a program to indirectly access a particular data, such as a variable's value or a record, in the computer's memory or in some other storage device. The reference is said to refer to the datum, and accessing the datum is called dereferencing the reference. A reference is distinct from the datum itself. A reference is an abstract data type and may be implemented in many ways. Typically, a reference refers to data stored in memory on a given system, and its internal value is the memory address of the data, i.e. a reference is implemented as a pointer. For this reason a reference is often said to "point to" the data. Other implementations include an offset (difference) between the datum's address and some fixed "base" address, an index, unique key, or identifier used in a lookup operation into an array or table, an operating system handle, a physical address on a storage device, or a network address such as a URL. For ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

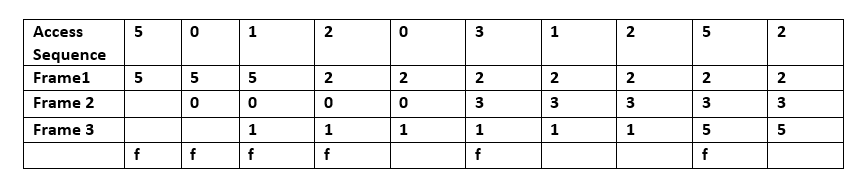

Least Recently Used

In computing, cache algorithms (also frequently called cache replacement algorithms or cache replacement policies) are optimizing instructions, or algorithms, that a computer program or a hardware-maintained structure can utilize in order to manage a cache of information stored on the computer. Caching improves performance by keeping recent or often-used data items in memory locations that are faster or computationally cheaper to access than normal memory stores. When the cache is full, the algorithm must choose which items to discard to make room for the new ones. Overview The average memory reference time is : T = m \times T_m + T_h + E where : m = miss ratio = 1 - (hit ratio) : T_m = time to make a main memory access when there is a miss (or, with multi-level cache, average memory reference time for the next-lower cache) : T_h= the latency: the time to reference the cache (should be the same for hits and misses) : E = various secondary effects, such as queuing effects in mult ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Paging

In computer operating systems, memory paging is a memory management scheme by which a computer stores and retrieves data from secondary storage for use in main memory. In this scheme, the operating system retrieves data from secondary storage in same-size blocks called ''pages''. Paging is an important part of virtual memory implementations in modern operating systems, using secondary storage to let programs exceed the size of available physical memory. For simplicity, main memory is called "RAM" (an acronym of random-access memory) and secondary storage is called "disk" (a shorthand for hard disk drive, drum memory or solid-state drive, etc.), but as with many aspects of computing, the concepts are independent of the technology used. Depending on the memory model, paged memory functionality is usually hardwired into a CPU/MCU by using a Memory Management Unit (MMU) or Memory Protection Unit (MPU) and separately enabled by privileged system code in the operating system's ke ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Page Replacement Algorithm

In a computer operating system that uses paging for virtual memory management, page replacement algorithms decide which memory pages to page out, sometimes called swap out, or write to disk, when a page of memory needs to be allocated. Page replacement happens when a requested page is not in memory (page fault) and a free page cannot be used to satisfy the allocation, either because there are none, or because the number of free pages is lower than some threshold. When the page that was selected for replacement and paged out is referenced again it has to be paged in (read in from disk), and this involves waiting for I/O completion. This determines the ''quality'' of the page replacement algorithm: the less time waiting for page-ins, the better the algorithm. A page replacement algorithm looks at the limited information about accesses to the pages provided by hardware, and tries to guess which pages should be replaced to minimize the total number of page misses, while balancing thi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Virtual Memory

In computing, virtual memory, or virtual storage is a memory management technique that provides an "idealized abstraction of the storage resources that are actually available on a given machine" which "creates the illusion to users of a very large (main) memory". The computer's operating system, using a combination of hardware and software, maps memory addresses used by a program, called '' virtual addresses'', into ''physical addresses'' in computer memory. Main storage, as seen by a process or task, appears as a contiguous address space or collection of contiguous segments. The operating system manages virtual address spaces and the assignment of real memory to virtual memory. Address translation hardware in the CPU, often referred to as a memory management unit (MMU), automatically translates virtual addresses to physical addresses. Software within the operating system may extend these capabilities, utilizing, e.g., disk storage, to provide a virtual address space that ca ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Memory Management Algorithms

Memory is the faculty of the mind by which data or information is encoded, stored, and retrieved when needed. It is the retention of information over time for the purpose of influencing future action. If past events could not be remembered, it would be impossible for language, relationships, or personal identity to develop. Memory loss is usually described as forgetfulness or amnesia. Memory is often understood as an informational processing system with explicit and implicit functioning that is made up of a sensory processor, short-term (or working) memory, and long-term memory. This can be related to the neuron. The sensory processor allows information from the outside world to be sensed in the form of chemical and physical stimuli and attended to various levels of focus and intent. Working memory serves as an encoding and retrieval processor. Information in the form of stimuli is encoded in accordance with explicit or implicit functions by the working memory processor ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |