|

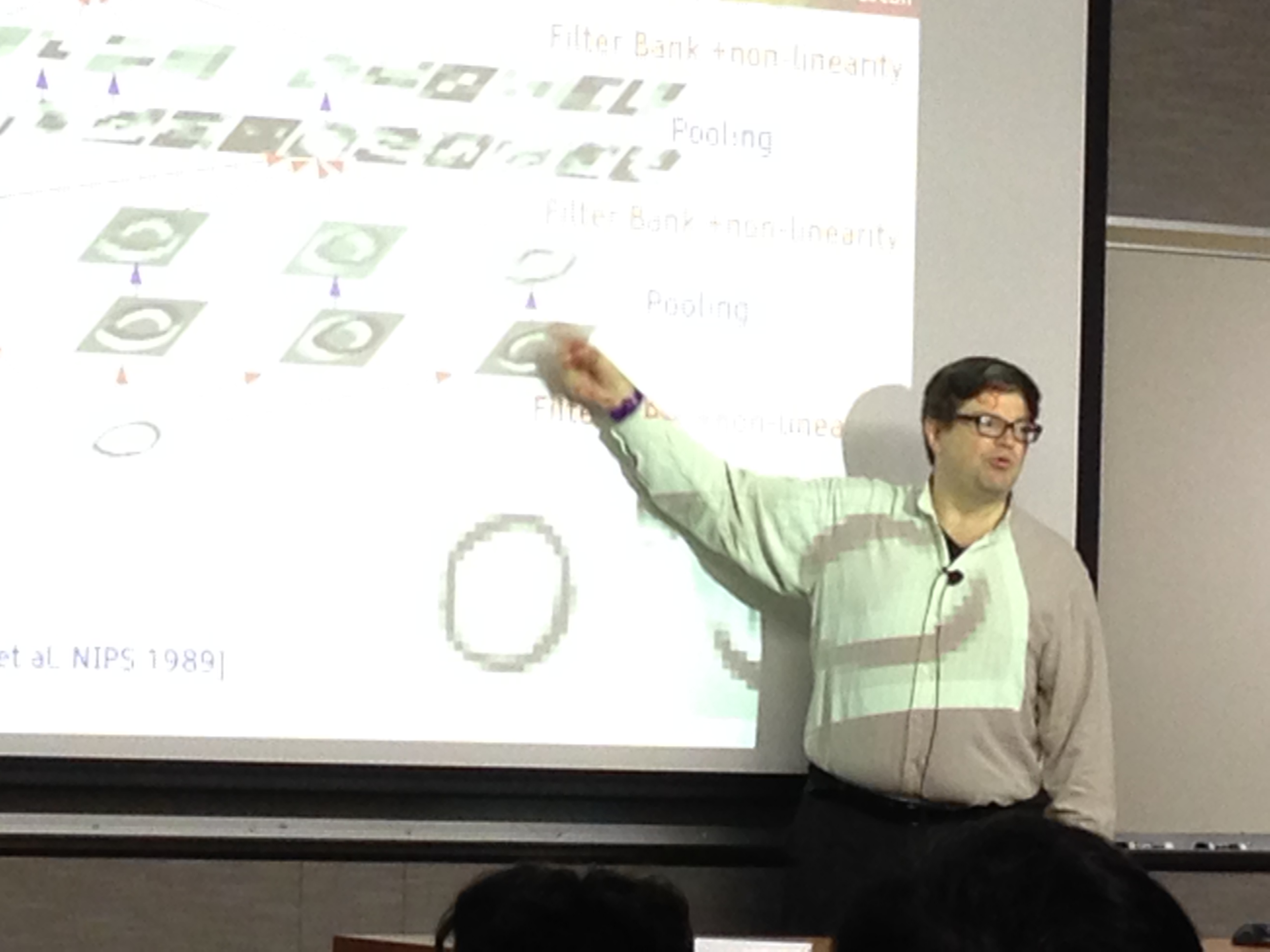

LeNet

LeNet is a series of convolutional neural network architectures created by a research group in AT&T Bell Laboratories during the 1988 to 1998 period, centered around Yann LeCun. They were designed for reading small grayscale images of handwritten digits and letters, and were used in ATM for reading cheques. Convolutional neural networks are a kind of feed-forward neural network whose artificial neurons can respond to a part of the surrounding cells in the coverage range and perform well in large-scale image processing. LeNet-5 was one of the earliest convolutional neural networks and was historically important during the development of deep learning. In general, when "LeNet" is referred to without a number, it refers to the 1998 version, the most well-known version. It is also sometimes called "LeNet-5" or "LeNet5". Development history In 1988, LeCun joined the Adaptive Systems Research Department at AT&T Bell Laboratories in Holmdel, New Jersey, United States, headed by L ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Convolutional Neural Network

A convolutional neural network (CNN) is a type of feedforward neural network that learns features via filter (or kernel) optimization. This type of deep learning network has been applied to process and make predictions from many different types of data including text, images and audio. Convolution-based networks are the de-facto standard in deep learning-based approaches to computer vision and image processing, and have only recently been replaced—in some cases—by newer deep learning architectures such as the transformer. Vanishing gradients and exploding gradients, seen during backpropagation in earlier neural networks, are prevented by the regularization that comes from using shared weights over fewer connections. For example, for ''each'' neuron in the fully-connected layer, 10,000 weights would be required for processing an image sized 100 × 100 pixels. However, applying cascaded ''convolution'' (or cross-correlation) kernels, only 25 weights for each convolutio ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Deep Learning

Deep learning is a subset of machine learning that focuses on utilizing multilayered neural networks to perform tasks such as classification, regression, and representation learning. The field takes inspiration from biological neuroscience and is centered around stacking artificial neurons into layers and "training" them to process data. The adjective "deep" refers to the use of multiple layers (ranging from three to several hundred or thousands) in the network. Methods used can be either supervised, semi-supervised or unsupervised. Some common deep learning network architectures include fully connected networks, deep belief networks, recurrent neural networks, convolutional neural networks, generative adversarial networks, transformers, and neural radiance fields. These architectures have been applied to fields including computer vision, speech recognition, natural language processing, machine translation, bioinformatics, drug design, medical image analysis, c ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Convolutional Layer

In artificial neural networks, a convolutional layer is a type of network layer that applies a convolution operation to the input. Convolutional layers are some of the primary building blocks of convolutional neural networks (CNNs), a class of neural network most commonly applied to images, video, audio, and other data that have the property of uniform translational symmetry. The convolution operation in a convolutional layer involves sliding a small window (called a kernel or filter) across the input data and computing the dot product between the values in the kernel and the input at each position. This process creates a feature map that represents detected features in the input. Concepts Kernel Kernels, also known as filters, are small matrices of weights that are learned during the training process. Each kernel is responsible for detecting a specific feature in the input data. The size of the kernel is a hyperparameter that affects the network's behavior. Convolution ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Backpropagation

In machine learning, backpropagation is a gradient computation method commonly used for training a neural network to compute its parameter updates. It is an efficient application of the chain rule to neural networks. Backpropagation computes the gradient of a loss function with respect to the weights of the network for a single input–output example, and does so efficiently, computing the gradient one layer at a time, iterating backward from the last layer to avoid redundant calculations of intermediate terms in the chain rule; this can be derived through dynamic programming. Strictly speaking, the term ''backpropagation'' refers only to an algorithm for efficiently computing the gradient, not how the gradient is used; but the term is often used loosely to refer to the entire learning algorithm – including how the gradient is used, such as by stochastic gradient descent, or as an intermediate step in a more complicated optimizer, such as Adaptive Moment Estimation. The ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Yann LeCun

Yann André Le Cun ( , ; usually spelled LeCun; born 8 July 1960) is a French-American computer scientist working primarily in the fields of machine learning, computer vision, mobile robotics and computational neuroscience. He is the Silver Professor of the Courant Institute of Mathematical Sciences at New York University and Vice President, Chief AI Scientist at Meta. He is well known for his work on optical character recognition and computer vision using convolutional neural networks (CNNs). He is also one of the main creators of the DjVu image compression technology (together with Léon Bottou and Patrick Haffner). He co-developed the Lush programming language with Léon Bottou. In 2018, LeCun, Yoshua Bengio, and Geoffrey Hinton, received the Turing Award for their work on deep learning. The three are sometimes referred to as the "Godfathers of AI" and "Godfathers of Deep Learning". Early life and education LeCun was born on 8 July 1960, at Soisy-sous-Montmorency ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

MNIST Dataset Example

The MNIST database (''Modified National Institute of Standards and Technology database'') is a large database of handwritten digits that is commonly used for training various image processing systems. The database is also widely used for training and testing in the field of machine learning. It was created by "re-mixing" the samples from NIST's original datasets. The creators felt that since NIST's training dataset was taken from American Census Bureau employees, while the testing dataset was taken from American high school students, it was not well-suited for machine learning experiments. Furthermore, the black and white images from NIST were normalized to fit into a 28x28 pixel bounding box and anti-aliased, which introduced grayscale levels. The MNIST database contains 60,000 training images and 10,000 testing images. Half of the training set and half of the test set were taken from NIST's training dataset, while the other half of the training set and the other half of the test ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Topological Skeleton

In shape analysis, skeleton (or topological skeleton) of a shape is a thin version of that shape that is equidistant to its boundaries. The skeleton usually emphasizes geometrical and topological properties of the shape, such as its connectivity, topology, length, direction, and width. Together with the distance of its points to the shape boundary, the skeleton can also serve as a representation of the shape (they contain all the information necessary to reconstruct the shape). Skeletons have several different mathematical definitions in the technical literature, and there are many different algorithms for computing them. Various different variants of skeleton can also be found, including straight skeletons, morphological skeletons, etc. In the technical literature, the concepts of skeleton and medial axis are used interchangeably by some authors,, Section 11.1.5, p. 650 while some other authors, Section 9.9, p. 382., Section 17.5.2, p. 234. regard them as re ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |