
Kullback–Leibler Divergence

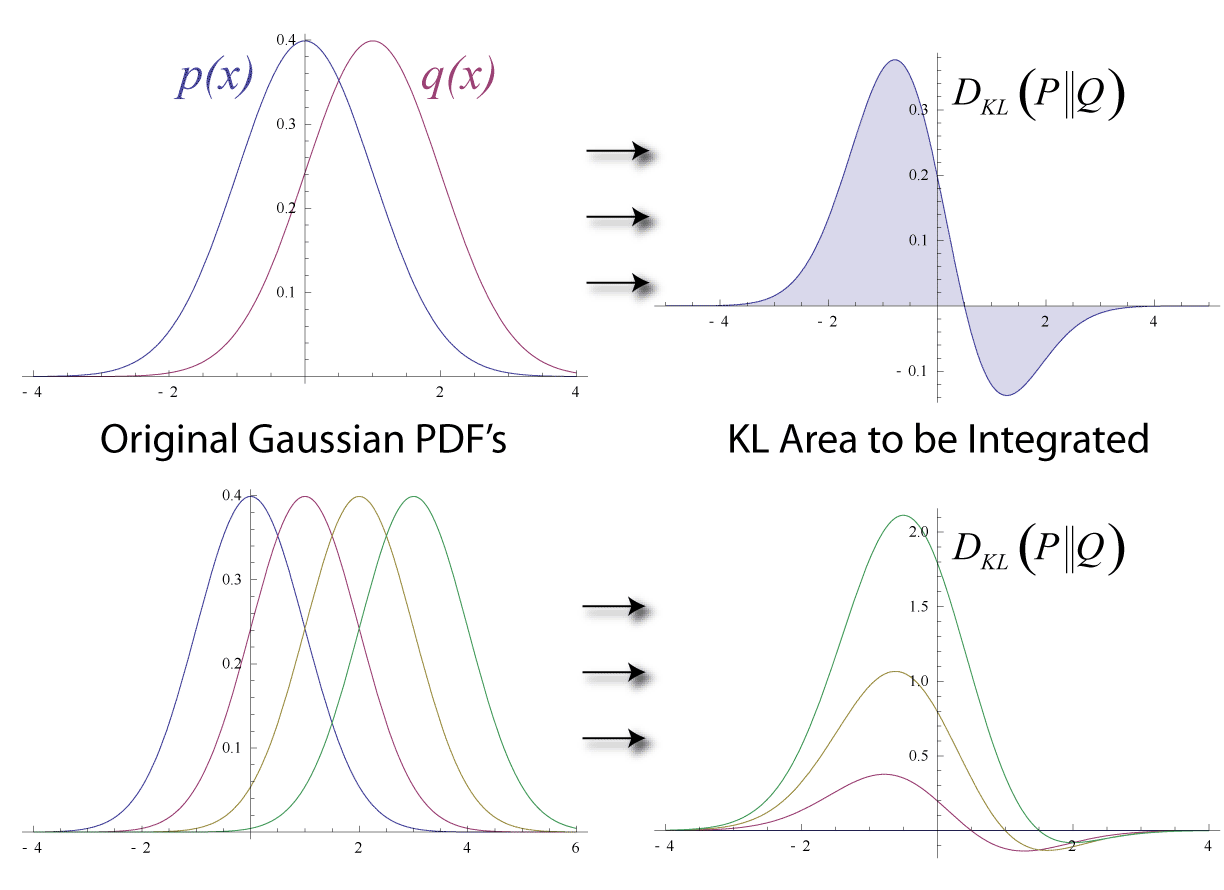

In mathematical statistics, the Kullback–Leibler (KL) divergence (also called relative entropy and I-divergence), denoted $D_{\text{KL}}(P \parallel Q)$, is a type of statistical distance: a measure of how much a model probability distribution $Q$ differs from a true probability distribution $P$. Mathematically, it is defined as

$$D_{\text{KL}}(P \parallel Q) = \sum_{x} P(x) \, \log \frac{P(x)}{Q(x)}.$$

A simple interpretation of the KL divergence of $P$ from $Q$ is the expected excess surprise from using $Q$ as a model instead of $P$ when the actual distribution is $P$. While it measures how different two distributions are and is thus a distance in some sense, it is not a metric, which is the most familiar and formal type of distance. In particular, it is not symmetric in the two distributions (in contrast to variation of information) and does not satisfy the triangle inequality. Instead, in terms of information geometry, it is a type of divergence, a generalization of squared distance, and for cer ...
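As a rough illustration of the definition, the sketch below computes the KL divergence between a "true" distribution P and a model distribution Q over the same finite set of outcomes, using the natural logarithm (so the result is in nats). The function name `kl_divergence` and the example probabilities are assumptions made for this illustration only.

```python
import math


def kl_divergence(p, q):
    """Return D_KL(P || Q) in nats for two discrete distributions.

    p and q are sequences of probabilities over the same outcomes.
    Terms with p[i] == 0 contribute 0 by the usual convention; if
    q[i] == 0 while p[i] > 0, the divergence is infinite.
    """
    if len(p) != len(q):
        raise ValueError("p and q must have the same length")
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0.0:
            continue
        if qi == 0.0:
            return math.inf
        total += pi * math.log(pi / qi)
    return total


# Hypothetical example values, chosen only for illustration.
p = [0.5, 0.5]  # "true" distribution P
q = [0.9, 0.1]  # model distribution Q

d_pq = kl_divergence(p, q)  # divergence of P from Q
d_qp = kl_divergence(q, p)  # arguments swapped
print(d_pq, d_qp)
```

Swapping the arguments gives a different value, which is the asymmetry that keeps the KL divergence from being a metric.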

Harold Jeffreys

Sir Harold Jeffreys, FRS (22 April 1891 – 18 March 1989) was a British geophysicist who made significant contributions to mathematics and statistics. His book, *Theory of Probability*, first published in 1939, played an important role in the revival of the objective Bayesian view of probability.

Education: Jeffreys was born in Fatfield, County Durham, England, the son of Robert Hal Jeffreys, headmaster of Fatfield Church School, and his wife, Elizabeth Mary Sharpe, a school teacher. He was educated at his father's school and at Rutherford Technical College, then studied at Armstrong College in Newcastle upon Tyne (at that time part of the University of Durham) and with the University of London External Programme (Cook, Alan, "Jeffreys, Sir Harold (1891–1989)", *Oxford Dictionary of National Biography*, Oxford University Press, 2004; online edition, September 2004; retrieved 1 January 2023). Jeffreys subsequently won a scholarship to study the Mathem ...

Mathematical Statistics

Mathematical statistics is the application of probability theory and other mathematical concepts to statistics, as opposed to techniques for collecting statistical data. Specific mathematical techniques that are commonly used in statistics include mathematical analysis, linear algebra, stochastic analysis, differential equations, and measure theory.

Introduction: Statistical data collection is concerned with the planning of studies, especially with the design of randomized experiments and with the planning of surveys using random sampling. The initial analysis of the data often follows the study protocol specified prior to the study being conducted. The data from a study can also be analyzed to consider secondary hypotheses inspired by the initial results, or to suggest new studies. A secondary analysis of the data from a planned study uses tools from data analysis, and the process of doing this is mathematical statistics. Data analysis is divided into: * descriptive stati ...

Time Series

In mathematics, a time series is a series of data points indexed (or listed or graphed) in time order. Most commonly, a time series is a sequence taken at successive equally spaced points in time. Thus it is a sequence of discrete-time data. Examples of time series are heights of ocean tides, counts of sunspots, and the daily closing value of the Dow Jones Industrial Average. A time series is very frequently plotted via a run chart (which is a temporal line chart). Time series are used in statistics, signal processing, pattern recognition, econometrics, mathematical finance, weather forecasting, earthquake prediction, electroencephalography, control engineering, astronomy, communications engineering, and largely in any domain of applied science and engineering which involves temporal measurements. Time series *analysis* comprises methods for analyzing time series data in order to extract meaningful statistics and other characteristics of the data. Time series *f ...

Sample Space

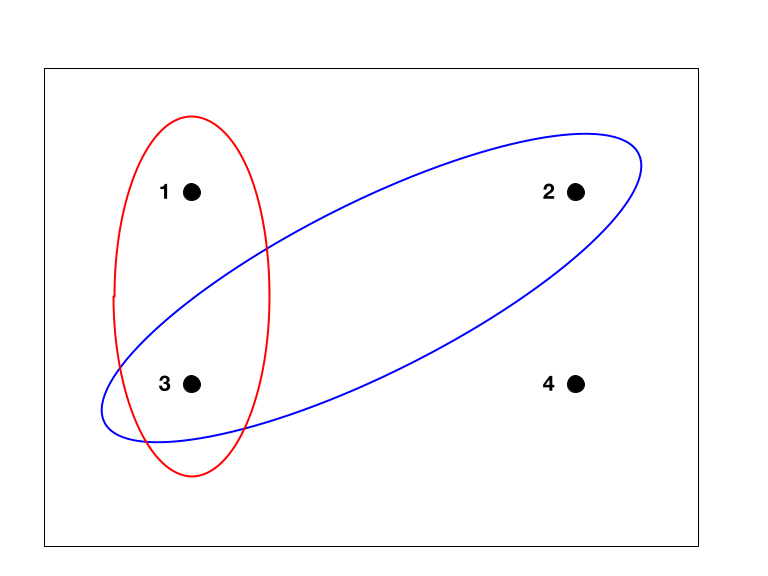

In probability theory, the sample space (also called sample description space, possibility space, or outcome space) of an experiment or random trial is the set of all possible outcomes or results of that experiment. A sample space is usually denoted using set notation, and the possible ordered outcomes, or sample points, are listed as elements in the set. It is common to refer to a sample space by the labels *S*, Ω, or *U* (for "universal set"). The elements of a sample space may be numbers, words, letters, or symbols. They can also be finite, countably infinite, or uncountably infinite. A subset of the sample space is an event, denoted by $E$. If the outcome of an experiment is included in $E$, then event $E$ has occurred. For example, if the experiment is tossing a single coin, the sample space is the set $\{H, T\}$, where the outcome $H$ means that the coin is heads and the outcome $T$ means that the coin is tails. The possible events are $E = \{\}$, $E = \{H\}$, $E = \{T\}$, and $E = \{H, T\}$. For tossing two ...
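The single-coin example can be checked by enumerating every subset of the sample space. The following is a minimal sketch for finite sample spaces only; the helper names `all_events` and `occurred` are hypothetical and introduced just for this illustration.

```python
from itertools import combinations


def all_events(sample_space):
    """Return every event (subset) of a finite sample space."""
    outcomes = sorted(sample_space)
    events = []
    for size in range(len(outcomes) + 1):
        for combo in combinations(outcomes, size):
            events.append(frozenset(combo))
    return events


def occurred(event, outcome):
    """An event has occurred if the observed outcome is one of its elements."""
    return outcome in event


coin = {"H", "T"}          # sample space for a single coin toss
events = all_events(coin)  # the empty set, {H}, {T}, and {H, T}

for event in events:
    print(sorted(event), "occurs on heads:", occurred(event, "H"))
```

A sample space with n outcomes has 2^n events, so this enumeration is only practical for small finite cases.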

Discrete Probability Distribution

In probability theory and statistics, a probability distribution is a function that gives the probabilities of occurrence of possible events for an experiment. It is a mathematical description of a random phenomenon in terms of its sample space and the probabilities of events (subsets of the sample space). For instance, if $X$ is used to denote the outcome of a coin toss ("the experiment"), then the probability distribution of $X$ would take the value 0.5 (1 in 2 or 1/2) for $X = \text{heads}$, and 0.5 for $X = \text{tails}$ (assuming that the coin is fair). More commonly, probability distributions are used to compare the relative occurrence of many different random values. Probability distributions can be defined in different ways and for discrete or for continuous variables. Distributions with special properties or for especially important applications are given specific names.

Introduction: A probability distribution is a mathematical description of the probabilities of events, subsets of the sample space. The ...
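For a discrete case such as the fair coin, a distribution can be represented as a mapping from outcomes to probabilities, and the probability of an event is the sum over the outcomes it contains. This is only a sketch under that finite, discrete assumption; the names `fair_coin`, `is_valid_distribution`, and `event_probability` are hypothetical.

```python
from fractions import Fraction

# Probability mass function of a fair coin: each outcome has probability 1/2.
fair_coin = {"heads": Fraction(1, 2), "tails": Fraction(1, 2)}


def is_valid_distribution(pmf):
    """Check that probabilities are non-negative and sum to exactly 1."""
    return all(p >= 0 for p in pmf.values()) and sum(pmf.values()) == 1


def event_probability(pmf, event):
    """Probability of an event, given as a set of outcomes."""
    return sum(pmf[outcome] for outcome in event)


print(is_valid_distribution(fair_coin))
print(event_probability(fair_coin, {"heads"}))
print(event_probability(fair_coin, {"heads", "tails"}))
```

Exact fractions are used instead of floats so that the "sums to 1" check does not depend on rounding.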

The American Statistician

*The American Statistician* is a quarterly peer-reviewed scientific journal covering statistics, published by Taylor & Francis on behalf of the American Statistical Association. It was established in 1947. The editor-in-chief is Daniel R. Jeske, a professor at the University of California, Riverside.

Richard Leibler

Richard A. Leibler (March 18, 1914, Chicago, Illinois – October 25, 2003, Reston, Virginia) was an American mathematician and cryptanalyst. He received his A.M. in mathematics from Northwestern University and his Ph.D. from the University of Illinois in 1939. While working at the National Security Agency, he and Solomon Kullback formulated the Kullback–Leibler divergence, a measure of the difference between probability distributions which has found important applications in information theory and cryptology. Leibler is also credited by the NSA as having opened up "new methods of attack" in the celebrated VENONA code-breaking project during 1949–1950; this may be a reference to his joint paper with Kullback, which was published in the open literature in 1951 and was immediately noted by Soviet cryptologists. He was director of the Communications Research Division at the Institute for Defense Analyses from 1962 to 1977, during which he was t ...

Solomon Kullback

Solomon Kullback (April 3, 1907 – August 5, 1994) was an American cryptanalyst and mathematician, who was one of the first three employees hired by William F. Friedman at the US Army's Signal Intelligence Service (SIS) in the 1930s, along with Frank Rowlett and Abraham Sinkov. He went on to a long and distinguished career at SIS and its eventual successor, the National Security Agency (NSA). Kullback was the Chief Scientist at the NSA until his retirement in 1962, whereupon he took a position at the George Washington University. The Kullback–Leibler divergence is named after Kullback and Richard Leibler.

Life and career: Kullback was born to Jewish parents in Brooklyn, New York. His father Nathan had been born in Vilna, Russian Empire (now Vilnius, Lithuania), had immigrated to the US as a young man circa 1905, and became a naturalized American in 1911. Kullback attended Boys High School in Brooklyn. He then went to City College of New York, graduating with a BA in 1927 a ...

Evidence Lower Bound

In variational Bayesian methods, the evidence lower bound (often abbreviated ELBO, also sometimes called the variational lower bound or negative variational free energy) is a useful lower bound on the log-likelihood of some observed data. The ELBO is useful because it provides a guarantee on the worst case for the log-likelihood of some distribution (e.g. $p(X)$) which models a set of data. The actual log-likelihood may be higher (indicating an even better fit to the distribution) because the ELBO includes a Kullback–Leibler divergence (KL divergence) term which decreases the ELBO when an internal part of the model is inaccurate, even if the model fits well overall. Thus improving the ELBO score indicates either improving the likelihood of the model $p(X)$ or the fit of a component internal to the model, or both, and the ELBO score makes a good loss function, e.g., for training a deep neural network to improve both the model overall and the internal component. (The internal c ...
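The relationship between the log-likelihood, the ELBO, and the KL term can be checked numerically on a toy model. The sketch below assumes a single fixed observation x, a latent variable z with two possible values, and a variational distribution q(z); under these assumptions, log p(x) equals the ELBO plus the KL divergence from q(z) to the posterior p(z | x). The function name and all numbers are hypothetical.

```python
import math


def elbo_decomposition(joint, q):
    """Return (log p(x), ELBO, KL(q || p(z|x))) for a discrete latent z.

    joint[i] is p(x, z=i) for the fixed observation x, and q[i] is the
    variational probability of z=i.
    """
    evidence = sum(joint)                      # p(x) = sum_z p(x, z)
    log_evidence = math.log(evidence)
    posterior = [j / evidence for j in joint]  # p(z | x)
    # ELBO = E_q[log p(x, z) - log q(z)]
    elbo = sum(qz * math.log(jz / qz) for qz, jz in zip(q, joint) if qz > 0)
    # Gap between the log-likelihood and the ELBO
    kl = sum(qz * math.log(qz / pz) for qz, pz in zip(q, posterior) if qz > 0)
    return log_evidence, elbo, kl


joint = [0.10, 0.30]  # hypothetical values of p(x, z=0) and p(x, z=1)
q = [0.5, 0.5]        # a deliberately imperfect variational distribution

log_evidence, elbo, kl = elbo_decomposition(joint, q)
print(log_evidence, elbo, kl)

# When q equals the exact posterior, the KL gap vanishes and the bound is tight.
exact_q = [j / sum(joint) for j in joint]
tight_log_evidence, tight_elbo, tight_kl = elbo_decomposition(joint, exact_q)
print(tight_elbo, tight_kl)
```

Because the KL term is never negative, the ELBO never exceeds the log-likelihood, which is what makes it a lower bound.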

Expectation–maximization Algorithm

In statistics, an expectation–maximization (EM) algorithm is an iterative method to find (local) maximum likelihood or maximum a posteriori (MAP) estimates of parameters in statistical models, where the model depends on unobserved latent variables. The EM iteration alternates between performing an expectation (E) step, which creates a function for the expectation of the log-likelihood evaluated using the current estimate for the parameters, and a maximization (M) step, which computes parameters maximizing the expected log-likelihood found on the E step. These parameter estimates are then used to determine the distribution of the latent variables in the next E step. It can be used, for example, to estimate a mixture of Gaussians, or to solve the multiple linear regression problem.

History: The EM algorithm was explained and given its name in a classic 1977 paper by Arthur Dempster, Nan Laird, and Donald Rubin. They pointed out that the method had been "proposed man ...
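The alternation between E and M steps can be sketched for the mixture-of-Gaussians case. The example below is a minimal, illustrative implementation for a one-dimensional mixture of two Gaussians, assuming a crude initialisation at the data extremes and a fixed number of iterations; the function names, the synthetic data, and these choices are all assumptions, not a reference implementation.

```python
import math
import random


def normal_pdf(x, mean, var):
    """Density of a normal distribution with the given mean and variance."""
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def em_two_gaussians(data, iterations=50):
    """Fit a two-component 1D Gaussian mixture with EM (illustrative sketch)."""
    means = [min(data), max(data)]  # crude initialisation (assumption)
    variances = [1.0, 1.0]
    weights = [0.5, 0.5]
    log_likelihoods = []
    for _ in range(iterations):
        # E step: responsibility of each component for each data point.
        resp = []
        log_likelihood = 0.0
        for x in data:
            joint = [weights[k] * normal_pdf(x, means[k], variances[k])
                     for k in range(2)]
            total = sum(joint)
            log_likelihood += math.log(total)
            resp.append([j / total for j in joint])
        log_likelihoods.append(log_likelihood)
        # M step: re-estimate parameters from responsibility-weighted data.
        for k in range(2):
            nk = sum(r[k] for r in resp)
            weights[k] = nk / len(data)
            means[k] = sum(r[k] * x for r, x in zip(resp, data)) / nk
            var = sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, data)) / nk
            variances[k] = max(var, 1e-6)  # guard against a collapsing component
    return weights, means, variances, log_likelihoods


# Synthetic data from two well-separated Gaussians (hypothetical test case).
random.seed(0)
data = ([random.gauss(0.0, 1.0) for _ in range(200)]
        + [random.gauss(5.0, 1.0) for _ in range(200)])

weights, means, variances, log_likelihoods = em_two_gaussians(data)
print(weights, means, variances)
```

The recorded log-likelihood should not decrease from one iteration to the next, which is a convenient sanity check; EM may still converge to a local rather than global maximum.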

Machine Learning

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of statistical algorithms that can learn from data and generalise to unseen data, and thus perform tasks without explicit instructions. Within a subdiscipline in machine learning, advances in the field of deep learning have allowed neural networks, a class of statistical algorithms, to surpass many previous machine learning approaches in performance. ML finds application in many fields, including natural language processing, computer vision, speech recognition, email filtering, agriculture, and medicine. The application of ML to business problems is known as predictive analytics. Statistics and mathematical optimisation (mathematical programming) methods comprise the foundations of machine learning. Data mining is a related field of study, focusing on exploratory data analysi ...

Bioinformatics

Bioinformatics is an interdisciplinary field of science that develops methods and software tools for understanding biological data, especially when the data sets are large and complex. Bioinformatics uses biology, chemistry, physics, computer science, data science, computer programming, information engineering, mathematics and statistics to analyze and interpret biological data. The process of analyzing and interpreting data is sometimes referred to as computational biology; however, the distinction between the two terms is often disputed. To some, the term *computational biology* refers to building and using models of biological systems. Computational, statistical, and computer programming techniques have been used for computer simulation analyses of biological queries. They include reused specific analysis "pipelines", particularly in the field of genomics, such as by the identification of genes and single nucleotide polymorphis ...