
Inverse Gamma Distribution

In probability theory and statistics, the inverse gamma distribution is a two-parameter family of continuous probability distributions on the positive real line, which is the distribution of the reciprocal of a variable distributed according to the gamma distribution. Perhaps the chief use of the inverse gamma distribution is in Bayesian statistics, where the distribution arises as the marginal posterior distribution for the unknown variance of a normal distribution, if an uninformative prior is used, and as an analytically tractable conjugate prior, if an informative prior is required. It is common among some Bayesians to consider an alternative parametrization of the normal distribution in terms of the precision, defined as the reciprocal of the variance, which allows the gamma distribution to be used directly as a conjugate prior. Other Bayesians prefer to parametrize the inverse gamma distribution differently, as a scaled inverse chi-squared distribution. Characteriza ...
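The reciprocal relationship described above can be checked by simulation. The following is a minimal sketch, assuming a shape parameter `alpha` and a scale parameter `beta` for the inverse gamma distribution (these names and the chosen values are illustrative and do not come from the text). It draws gamma variates with the standard library, inverts them, and compares the sample mean with the known mean `beta / (alpha - 1)`, which holds for `alpha > 1`.

```python
import random

def sample_inverse_gamma(alpha, beta, rng):
    """Draw one inverse-gamma variate as the reciprocal of a gamma variate.

    Assumed parametrization: shape alpha, scale beta. The underlying gamma
    variable then has shape alpha and rate beta. random.gammavariate expects
    shape and *scale*, so the gamma scale passed in is 1 / beta.
    """
    return 1.0 / rng.gammavariate(alpha, 1.0 / beta)

rng = random.Random(0)          # fixed seed so the run is reproducible
alpha, beta = 5.0, 2.0          # illustrative values, not from the text
draws = [sample_inverse_gamma(alpha, beta, rng) for _ in range(100_000)]

sample_mean = sum(draws) / len(draws)
theoretical_mean = beta / (alpha - 1)   # valid only for alpha > 1

print(sample_mean, theoretical_mean)
```

With these values the two printed numbers should agree to roughly two decimal places; the agreement is statistical, not exact.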

Statistical Parameter

In statistics, as opposed to its general use in mathematics, a parameter is any quantity of a statistical population that summarizes or describes an aspect of the population, such as a mean or a standard deviation. If a population exactly follows a known and defined distribution, for example the normal distribution, then a small set of parameters can be measured which provide a comprehensive description of the population and can be considered to define a probability distribution for the purposes of extracting samples from this population. A "parameter" is to a population as a "statistic" is to a sample; that is to say, a parameter describes the true value calculated from the full population (such as the population mean), whereas a statistic is an estimated measurement of the parameter based on a sample (such as the sample mean, which is the mean of the data gathered in one sample). Thus a "statistical parameter" can be more specifically referred to as a population ...

Inverse-chi-squared Distribution

In probability and statistics, the inverse-chi-squared distribution (or inverted-chi-square distribution; Bernardo, J.M.; Smith, A.F.M. (1993), *Bayesian Theory*, Wiley, pages 119, 431) is a continuous probability distribution of a positive-valued random variable. It is closely related to the chi-squared distribution. It is used in Bayesian inference as a conjugate prior for the variance of the normal distribution. Definition: The inverse chi-squared distribution (or inverted-chi-square distribution) is the probability distribution of a random variable whose multiplicative inverse (reciprocal) has a chi-squared distribution. If X follows a chi-squared distribution with \nu degrees of freedom then 1/X follows the inverse chi-squared distribution with \nu degrees of freedom. The probability density function of the inverse chi-squared distribution is given by f(x; \nu) = \frac{2^{-\nu/2}}{\Gamma(\nu/2)}\,x^{-\nu/2-1} e^{-1/(2x)}. In the above x>0 and \nu is the degrees of freedom parameter. Further, \Gamma is the gamm ...
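As an illustration of the definition, the density of 1/X can be obtained from the chi-squared density by a change of variables, f_inv(x) = f_chi2(1/x) / x^2. The sketch below uses only the standard library; the function names `chi2_pdf` and `inv_chi2_pdf` are hypothetical helpers introduced here, not part of any library.

```python
import math

def chi2_pdf(u, nu):
    """Density of the chi-squared distribution with nu degrees of freedom."""
    if u <= 0:
        return 0.0
    half = nu / 2
    log_pdf = (-half * math.log(2) - math.lgamma(half)
               + (half - 1) * math.log(u) - u / 2)
    return math.exp(log_pdf)

def inv_chi2_pdf(x, nu):
    """Density of the inverse chi-squared distribution, evaluated in log space."""
    if x <= 0:
        return 0.0
    half = nu / 2
    log_pdf = (-half * math.log(2) - math.lgamma(half)
               - (half + 1) * math.log(x) - 1 / (2 * x))
    return math.exp(log_pdf)

# Change of variables: if X is chi-squared, the density of 1/X at x is
# chi2_pdf(1/x) multiplied by the Jacobian factor 1/x**2.
for x in (0.2, 1.0, 3.5):
    direct = inv_chi2_pdf(x, nu=4)
    via_chi2 = chi2_pdf(1 / x, nu=4) / x**2
    print(x, direct, via_chi2)
```

Working in log space with `math.lgamma` is a design choice to avoid overflow in \Gamma(\nu/2) for large \nu.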

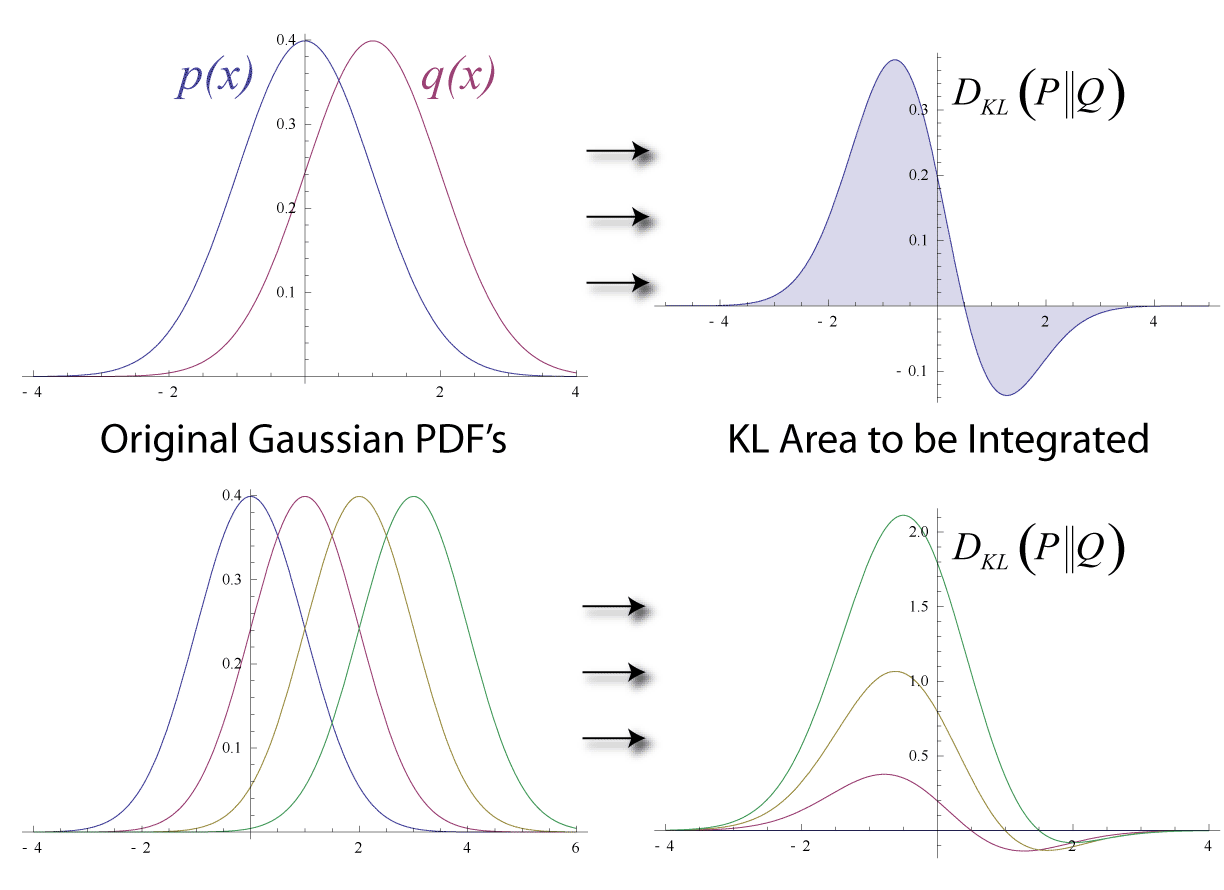

Kullback–Leibler Divergence

In mathematical statistics, the Kullback–Leibler (KL) divergence (also called relative entropy and I-divergence), denoted D_\text{KL}(P \parallel Q), is a type of statistical distance: a measure of how much a model probability distribution Q is different from a true probability distribution P. Mathematically, it is defined as D_\text{KL}(P \parallel Q) = \sum_{x \in \mathcal{X}} P(x) \, \log \frac{P(x)}{Q(x)}. A simple interpretation of the KL divergence of P from Q is the expected excess surprise from using Q as a model instead of P when the actual distribution is P. While it is a measure of how different two distributions are and is thus a distance in some sense, it is not actually a metric, which is the most familiar and formal type of distance. In particular, it is not symmetric in the two distributions (in contrast to variation of information), and does not satisfy the triangle inequality. Instead, in terms of information geometry, it is a type of divergence, a generalization of squared distance, and for cer ...
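The discrete definition and the lack of symmetry can be seen in a few lines. This is a minimal sketch for finite distributions given as lists of probabilities; the function name `kl_divergence` and the example distributions are illustrative assumptions. It uses the natural logarithm and the usual conventions that terms with P(x) = 0 contribute nothing and that the divergence is infinite when Q(x) = 0 while P(x) > 0.

```python
import math

def kl_divergence(p, q):
    """D_KL(P || Q) in nats for two discrete distributions on the same outcomes."""
    total = 0.0
    for px, qx in zip(p, q):
        if px == 0:
            continue            # convention: 0 * log(0 / q) = 0
        if qx == 0:
            return math.inf     # P puts mass where Q puts none
        total += px * math.log(px / qx)
    return total

p = [0.5, 0.5]   # "true" distribution (illustrative)
q = [0.9, 0.1]   # model distribution (illustrative)

print(kl_divergence(p, q))   # divergence of P from Q
print(kl_divergence(q, p))   # a different value: KL is not symmetric
print(kl_divergence(p, p))   # zero when the model matches the truth
```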

Information Entropy

In information theory, the entropy of a random variable quantifies the average level of uncertainty or information associated with the variable's potential states or possible outcomes. This measures the expected amount of information needed to describe the state of the variable, considering the distribution of probabilities across all potential states. Given a discrete random variable X, which may be any member x within the set \mathcal{X} and is distributed according to p\colon \mathcal{X} \to [0, 1], the entropy is \mathrm{H}(X) := -\sum_{x \in \mathcal{X}} p(x) \log p(x), where \Sigma denotes the sum over the variable's possible values. The choice of base for \log, the logarithm, varies for different applications. Base 2 gives the unit of bits (or "shannons"), while base ''e'' gives "natural units" (nats), and base 10 gives units of "dits", "bans", or "hartleys". An equivalent definition of entropy is the expected value of the self-information of a variable. The concept of information entropy was ...
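The effect of the logarithm base on the unit can be sketched as follows. The function name `entropy` and the example distributions are illustrative assumptions; outcomes with zero probability are skipped, following the usual convention that they contribute nothing to the sum.

```python
import math

def entropy(probs, base=2):
    """Shannon entropy of a discrete distribution; base 2 gives bits."""
    return -sum(p * math.log(p, base) for p in probs if p > 0)

fair_coin = [0.5, 0.5]
biased_coin = [0.9, 0.1]

print(entropy(fair_coin))            # in bits
print(entropy(fair_coin, math.e))    # the same uncertainty in nats
print(entropy(biased_coin))          # less uncertain than the fair coin
```

Changing the base only rescales the result: the value in nats equals the value in bits multiplied by ln 2.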

Modified Bessel Function

Bessel functions, named after Friedrich Bessel who was the first to systematically study them in 1824, are canonical solutions of Bessel's differential equation x^2 \frac{d^2 y}{dx^2} + x \frac{dy}{dx} + \left(x^2 - \alpha^2 \right)y = 0 for an arbitrary complex number \alpha, which represents the ''order'' of the Bessel function. Although \alpha and -\alpha produce the same differential equation, it is conventional to define different Bessel functions for these two values in such a way that the Bessel functions are mostly smooth functions of \alpha. The most important cases are when \alpha is an integer or half-integer. Bessel functions for integer \alpha are also known as cylinder functions or the cylindrical harmonics because they appear in the solution to Laplace's equation in cylindrical coordinates. Spherical Bessel functions with half-integer \alpha are obtained when solving the Helmholtz equation in spherical coordinates. Applications: Bessel's equation arise ...

Characteristic Function (probability Theory)

In probability theory and statistics, the characteristic function of any real-valued random variable completely defines its probability distribution. If a random variable admits a probability density function, then the characteristic function is the Fourier transform (with sign reversal) of the probability density function. Thus it provides an alternative route to analytical results compared with working directly with probability density functions or cumulative distribution functions. There are particularly simple results for the characteristic functions of distributions defined by the weighted sums of random variables. In addition to univariate distributions, characteristic functions can be defined for vector- or matrix-valued random variables, and can also be extended to more generic cases. The characteristic function always exists when treated as a function of a real-valued argument, unlike the moment-generating function. There are relations between the behavior of the charact ...
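For a concrete picture, the characteristic function is the expectation E[e^{itX}], so it can be estimated by averaging e^{itx} over samples. The sketch below is a Monte Carlo illustration under assumptions not stated in the text: it uses a standard normal variable, whose characteristic function is known to be e^{-t^2/2}, and a hypothetical helper name `empirical_characteristic_function`.

```python
import cmath
import math
import random

def empirical_characteristic_function(samples, t):
    """Monte Carlo estimate of E[exp(i t X)] from a list of samples."""
    return sum(cmath.exp(1j * t * x) for x in samples) / len(samples)

rng = random.Random(0)                                  # fixed seed for reproducibility
samples = [rng.gauss(0.0, 1.0) for _ in range(50_000)]  # standard normal draws

t = 1.0
estimate = empirical_characteristic_function(samples, t)
exact = math.exp(-t * t / 2)    # characteristic function of N(0, 1) at t

print(estimate, exact)
```

The estimate is complex-valued; for a distribution symmetric about zero its imaginary part should be close to zero, up to sampling noise.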

Incomplete Gamma Function

In mathematics, the upper and lower incomplete gamma functions are types of special functions which arise as solutions to various mathematical problems such as certain integrals. Their respective names stem from their integral definitions, which are defined similarly to the gamma function but with different or "incomplete" integral limits. The gamma function is defined as an integral from zero to infinity. This contrasts with the lower incomplete gamma function, which is defined as an integral from zero to a variable upper limit. Similarly, the upper incomplete gamma function is defined as an integral from a variable lower limit to infinity. Definition: The upper incomplete gamma function is defined as \Gamma(s,x) = \int_x^{\infty} t^{s-1}\,e^{-t}\, dt, whereas the lower incomplete gamma function is defined as \gamma(s,x) = \int_0^x t^{s-1}\,e^{-t}\, dt. In both cases s is a complex parameter, such that the real part of s is positive. Properties: By integration by parts we find the recurrence relati ...
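The two integral definitions can be explored numerically. This is a rough sketch, restricted by assumption to real s >= 1 and x >= 0 so that the integrand is bounded: the lower function is approximated with a midpoint rule, and the upper function is obtained from the fact that the two pieces add up to the complete gamma function. The function names and step count are illustrative, and a production implementation would use a dedicated special-function library instead.

```python
import math

def lower_incomplete_gamma(s, x, steps=20_000):
    """Midpoint-rule approximation of the integral of t^(s-1) e^(-t) over [0, x].

    Assumes real s >= 1 and x >= 0, so the integrand has no singularity at 0.
    """
    if s < 1 or x < 0:
        raise ValueError("this sketch only handles s >= 1 and x >= 0")
    h = x / steps
    total = 0.0
    for k in range(steps):
        t = (k + 0.5) * h               # midpoint of the k-th subinterval
        total += t ** (s - 1) * math.exp(-t)
    return h * total

def upper_incomplete_gamma(s, x, steps=20_000):
    """Upper function via Gamma(s) = gamma(s, x) + Gamma(s, x)."""
    return math.gamma(s) - lower_incomplete_gamma(s, x, steps)

# Closed forms for comparison: gamma(1, x) = 1 - e^(-x), Gamma(2, x) = (1 + x) e^(-x).
print(lower_incomplete_gamma(1, 2.0), 1 - math.exp(-2.0))
print(upper_incomplete_gamma(2, 1.5), (1 + 1.5) * math.exp(-1.5))
```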

Cumulative Distribution Function

In probability theory and statistics, the cumulative distribution function (CDF) of a real-valued random variable X, or just distribution function of X, evaluated at x, is the probability that X will take a value less than or equal to x. Every probability distribution supported on the real numbers, discrete or "mixed" as well as continuous, is uniquely identified by a right-continuous monotone increasing function (a càdlàg function) F \colon \mathbb R \rightarrow [0,1] satisfying \lim_{x \to -\infty} F(x)=0 and \lim_{x \to \infty} F(x)=1. In the case of a scalar continuous distribution, it gives the area under the probability density function from negative infinity to x. Cumulative distribution functions are also used to specify the distribution of multivariate random variables. Definition: The cumulative distribution function of a real-valued random variable X is the function given by F_X(x) = \operatorname{P}(X \leq x), where the right-hand side represents the probability ...
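The properties listed above (values in [0,1], monotone increasing, right-continuous, limits 0 and 1) are easy to observe on an empirical CDF, which is the CDF of the discrete distribution that puts equal mass on each observed data point. The empirical CDF is used here only as an illustration and is not discussed in the text; the helper name `empirical_cdf` and the data are assumptions.

```python
import bisect

def empirical_cdf(sample):
    """Return F with F(x) = fraction of sample values less than or equal to x."""
    data = sorted(sample)
    n = len(data)

    def F(x):
        # bisect_right counts the elements <= x, which makes F right-continuous.
        return bisect.bisect_right(data, x) / n

    return F

F = empirical_cdf([3, 1, 2, 2])   # illustrative data

for x in (0.5, 1, 1.5, 2, 3, 10):
    print(x, F(x))
```

Using `bisect_left` instead would count values strictly less than x and give a left-continuous function, which is why `bisect_right` is the choice that matches the "less than or equal to" definition.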

Gamma Function

In mathematics, the gamma function (represented by Γ, the capital Greek letter gamma) is the most common extension of the factorial function to complex numbers. Derived by Daniel Bernoulli, the gamma function \Gamma(z) is defined for all complex numbers z except non-positive integers, and for every positive integer z=n, \Gamma(n) = (n-1)!\,. The gamma function can be defined via a convergent improper integral for complex numbers with positive real part: \Gamma(z) = \int_0^\infty t^{z-1} e^{-t}\,\text{d}t, \ \qquad \Re(z) > 0\,. The gamma function then is defined in the complex plane as the analytic continuation of this integral function: it is a meromorphic function which is holomorphic except at zero and the negative integers, where it has simple poles. The gamma function has no zeros, so the reciprocal gamma function is an entire function. In fact, the gamma function corresponds to the Mellin transform of the negative exponential functi ...
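The factorial identity and the excluded points can be checked for real arguments with the standard library's `math.gamma`. This is a small sketch; it covers only real inputs, since `math.gamma` does not accept complex numbers, and the variable names are illustrative.

```python
import math

# Gamma(n) = (n - 1)! for positive integers n.
factorial_matches = all(
    math.isclose(math.gamma(n), math.factorial(n - 1)) for n in range(1, 11)
)
print(factorial_matches)

# A well-known non-integer value: Gamma(1/2) = sqrt(pi).
print(math.gamma(0.5), math.sqrt(math.pi))

# At zero and the negative integers the function has poles;
# math.gamma signals this by raising ValueError.
pole_detected = False
try:
    math.gamma(0)
except ValueError:
    pole_detected = True
print(pole_detected)
```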

Support (mathematics)

In mathematics, the support of a real-valued function f is the subset of the function domain of elements that are not mapped to zero. If the domain of f is a topological space, then the support of f is instead defined as the smallest closed set containing all points not mapped to zero. This concept is used widely in mathematical analysis. Formulation: Suppose that f : X \to \R is a real-valued function whose domain is an arbitrary set X. The set-theoretic support of f, written \operatorname{supp}(f), is the set of points in X where f is non-zero: \operatorname{supp}(f) = \{x \in X : f(x) \neq 0\}. The support of f is the smallest subset of X with the property that f is zero on the subset's complement. If f(x) = 0 for all but a finite number of points x \in X, then f is said to have finite support. If the set X has an additional structure (for example, a topology), then the support of f is defined in an analogous way as the smallest subset of X of an appropriate type such that f vanishes in an appropriate sense on its complement. The notion of ...
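On a finite domain the set-theoretic definition translates directly into a set comprehension. The sketch below is illustrative only: the helper name `support` and the example function are assumptions, and it does not cover the topological (closure-based) notion of support.

```python
def support(f, domain):
    """Set of points in a finite domain where f is non-zero."""
    return {x for x in domain if f(x) != 0}

def f(x):
    # Example function with zeros at x = 0 and x = 2.
    return x * (x - 2)

domain = range(-1, 4)        # the finite set {-1, 0, 1, 2, 3}
supp = support(f, domain)
print(sorted(supp))

# f is zero on the complement of its support within the domain.
print(all(f(x) == 0 for x in domain if x not in supp))
```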