Internet Research

In its widest sense, Internet research comprises any kind of research done on the Internet or the World Wide Web. Unlike simple fact-checking or web scraping, it often involves synthesizing information from diverse sources and verifying the credibility of each. In a stricter sense, "Internet research" refers to conducting scientific research using online tools and techniques; the discipline that studies Internet research thus understood is known as online research methods or Internet-mediated research. As with other kinds of scientific research, it has an ethical dimension. Internet research can also be interpreted as the part of Internet studies that investigates the social, ethical, economic, managerial and political implications of the Internet. Characterization: Internet research has had a profound impact on the way ideas are formed and knowledge is created. Through web search, pages with some relation to a given search entry ...

Research

Research is creative and systematic work undertaken to increase the stock of knowledge. It involves the collection, organization, and analysis of evidence to increase understanding of a topic, and is characterized by particular attentiveness to accounting for and controlling sources of bias and error. A research project may be an expansion of past work in the field. To test the validity of instruments, procedures, or experiments, research may replicate elements of prior projects or the project as a whole. The primary purposes of basic research (as opposed to applied research) are documentation, discovery, interpretation, and the research and development (R&D) of methods and systems for the advancement of human knowledge. Approaches to research depend on epistemologies, which vary considerably both within and between humanities and sciences. There are several forms of research: scientific, humanities, artistic, eco ...

Discussion Group

A discussion group is a group of individuals, typically sharing a similar interest, who gather either formally or informally to discuss ideas, solve problems, or make comments. Common methods of conversing include meeting in person, conducting conference calls, using text messaging, or using a website such as an Internet forum. People respond, add comments, and make posts on such forums, as well as on established mailing lists, in newsgroups, or in IRC channels. Other group members can choose to respond by posting text or images. Brief history: Discussion groups evolved from USENET, which can be traced back to the early 1980s. Two computer scientists, Jim Ellis and Tom Truscott, conceived the idea of setting up a system of rules to produce "articles" and then send them back to their parallel newsgroup. Fundamentally, the discussion group form grew out of the concept of USENET, which emphasised communication via email and web forums. Gradually, USENET had developed t ...

Library And Information Science

Library and information science (LIS) are two interconnected disciplines that deal with information management. This includes organization, access, collection, and regulation of information, both in physical and digital forms (Coleman, A. (2002), "Interdisciplinarity: The Road Ahead for Education in Digital Libraries", D-Lib Magazine, 8:8/9 (July/August)). "Library and Information Sciences" is the name used in the Dewey Decimal Classification for class 20 from the 18th edition (1971) to the 22nd edition (2003). Library science and information science are two original disciplines; however, they are within the same field of study. Library science is both an application and a subfield of information science. Because of this strong connection, the two terms are sometimes used synonymously. Definition: Library science (previously termed library studies and library economy) is an interdisciplinary or multidisciplinary field that applies the practices, p ...

Market Research

Market research is an organized effort to gather information about target markets and customers. It involves understanding who they are and what they need. It is an important component of business strategy and a major factor in maintaining competitiveness. Market research helps to identify and analyze the needs of the market, the market size and the competition. Its techniques encompass both qualitative methods, such as focus groups, in-depth interviews, and ethnography, and quantitative methods, such as customer surveys and analysis of secondary data. It includes social and opinion research, and is the systematic gathering and interpretation of information about individuals or organizations using statistical and analytical methods and techniques of the applied social sciences to gain insight or support decision making. Market research, marketing research, and marketing are a sequence of business activities; sometimes these are handled informally. The field of ...

Internet Search

A search engine is a software system that provides hyperlinks to web pages and other relevant information on the Web in response to a user's query. The user enters a query in a web browser or a mobile app, and the search results are typically presented as a list of hyperlinks accompanied by textual summaries and images. Users also have the option of limiting a search to specific types of results, such as images, videos, or news. For a search provider, its engine is part of a distributed computing system that can encompass many data centers throughout the world. The speed and accuracy of an engine's response to a query are based on a complex system of indexing that is continuously updated by automated web crawlers. This can include data mining the files and databases stored on web servers, although some content is not accessible to crawlers. There have been many search engines since the dawn of the Web in the 1990s; however, Google Search became the dominant one in the 2000s ...
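
The indexing idea mentioned above can be illustrated with a toy inverted index, which maps each term to the set of pages containing it. This is only a minimal sketch under stated assumptions: the page URLs and texts are made up, the function names `tokenize`, `build_index`, and `search` are hypothetical, and real engines add crawling, ranking, and distribution across data centers that are not shown here.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Map each term to the set of page URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for term in tokenize(text):
            index[term].add(url)
    return index


def search(index, query):
    """Return the sorted URLs of pages that contain every query term."""
    terms = tokenize(query)
    if not terms:
        return []
    matches = set.intersection(*(index.get(term, set()) for term in terms))
    return sorted(matches)


# Hypothetical pages standing in for what a crawler might have fetched.
pages = {
    "example.org/a": "Usenet newsgroups and discussion forums",
    "example.org/b": "Search engines index web pages",
    "example.org/c": "Web forums replaced many newsgroups",
}
index = build_index(pages)
print(search(index, "newsgroups forums"))
```

Answering a query then only requires intersecting a few precomputed sets rather than rescanning every page, which is one reason an index kept up to date by crawlers helps response speed.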

Scientific Research

The scientific method is an empirical method for acquiring knowledge that has been referred to while doing science since at least the 17th century. Historically, it was developed through the centuries from the ancient and medieval world. The scientific method involves careful observation coupled with rigorous skepticism, because cognitive assumptions can distort the interpretation of the observation. Scientific inquiry includes creating a testable hypothesis through inductive reasoning, testing it through experiments and statistical analysis, and adjusting or discarding the hypothesis based on the results. Although procedures vary across fields, the underlying process is often similar. In more detail: the scientific method involves making conjectures (hypothetical explanations), predicting the logical consequences of the hypothesis, then carrying out experiments or empirical observations based on those predictions. ...

Kennesaw State University

Kennesaw State University (KSU) is a public research university in the U.S. state of Georgia with two campuses in the Atlanta metropolitan area, one in the Kennesaw area and the other in Marietta. The school was founded in 1963 by the Georgia Board of Regents using local bonds and a federal space-grant during a time of major Georgia economic expansion after World War II. KSU also holds classes at the Cobb Galleria Centre, Dalton State College, and in Paulding County (Dallas). Total enrollment exceeds 47,000 students, making KSU the third-largest university by enrollment in Georgia. KSU is part of the University System of Georgia and is classified among "R2: Doctoral Universities – High research activity". Kennesaw State's athletic teams are NCAA Division I members of Conference USA. History: Establishment in 1963 until 1975: KSU was chartered by the Board of Regents on October 9, 1963, during one of the most dramatic periods of college ...

First Monday (journal)

First Monday is a monthly peer-reviewed open access academic journal covering research on the Internet, published in the United States. Publication: The journal is sponsored and hosted by the University of Illinois at Chicago. It is published on the first Monday of every month. In 2011, the journal had an acceptance rate of about 15%. The journal has no article processing charges and no advertisements. History: According to the chief editor, Edward Valauskas, the journal predates the open access model: First Monday is among the first peer-reviewed journals on the Internet. It originated in the summer of 1995 with a proposal to start a new Internet-only, peer-reviewed journal about the Internet by eventual editor-in-chief Edward J. Valauskas to Munksgaard, a Danish publisher. Munksgaard agreed to publish the journal in September 1995. The first issue appeared on 6 May 1996, the first Monday of May, also the opening of the Fifth International World Wide ...

Credentials

A credential is a document, or part of one, that details a qualification, competence, or authority issued to an individual by a third party with a relevant or de facto authority or assumed competence to do so. Examples of credentials include academic diplomas, academic degrees, certifications, security clearances, identification documents, badges, passwords, user names, keys, powers of attorney, and so on. Publications such as scientific papers or books are sometimes viewed as similar to credentials, especially if the publication was peer reviewed or appeared in a well-known journal or with a reputable publisher. Types and documentation of credentials: A person holding a credential is usually given documentation or secret knowledge (e.g., a password or key) as proof of the credential. Sometimes this proof (or a copy of it) is held by a third, trusted party. While in some cases a credential may be as simple as a paper membership card, in other cases, such as di ...

Access To Information

Access may refer to:

Companies and organizations
* ACCESS (Australia), an Australian youth network
* Access (credit card), a former credit card in the United Kingdom
* Access Co., a Japanese software company
* Access International Advisors, a hedge fund
* AirCraft Casualty Emotional Support Services
* Arab Community Center for Economic and Social Services
* Access, the Alphabet division containing Google Fiber
* Access, the Southwest Ohio Regional Transit Authority's paratransit service

Sailing
* Access 2.3, a sailing keelboat
* Access 303, a sailing keelboat
* Access Liberty, a sailing keelboat

Television
* Access Hollywood, formerly Access, an American entertainment newsmagazine
* Access (British TV programme), a British entertainment television programme
* Access (Canadian TV series), a Canadian television series (1974–1982)
* Access TV, a former Canadian educational television channel (1973–2011)
* Access Television Network, an American infom ...

Newsgroup

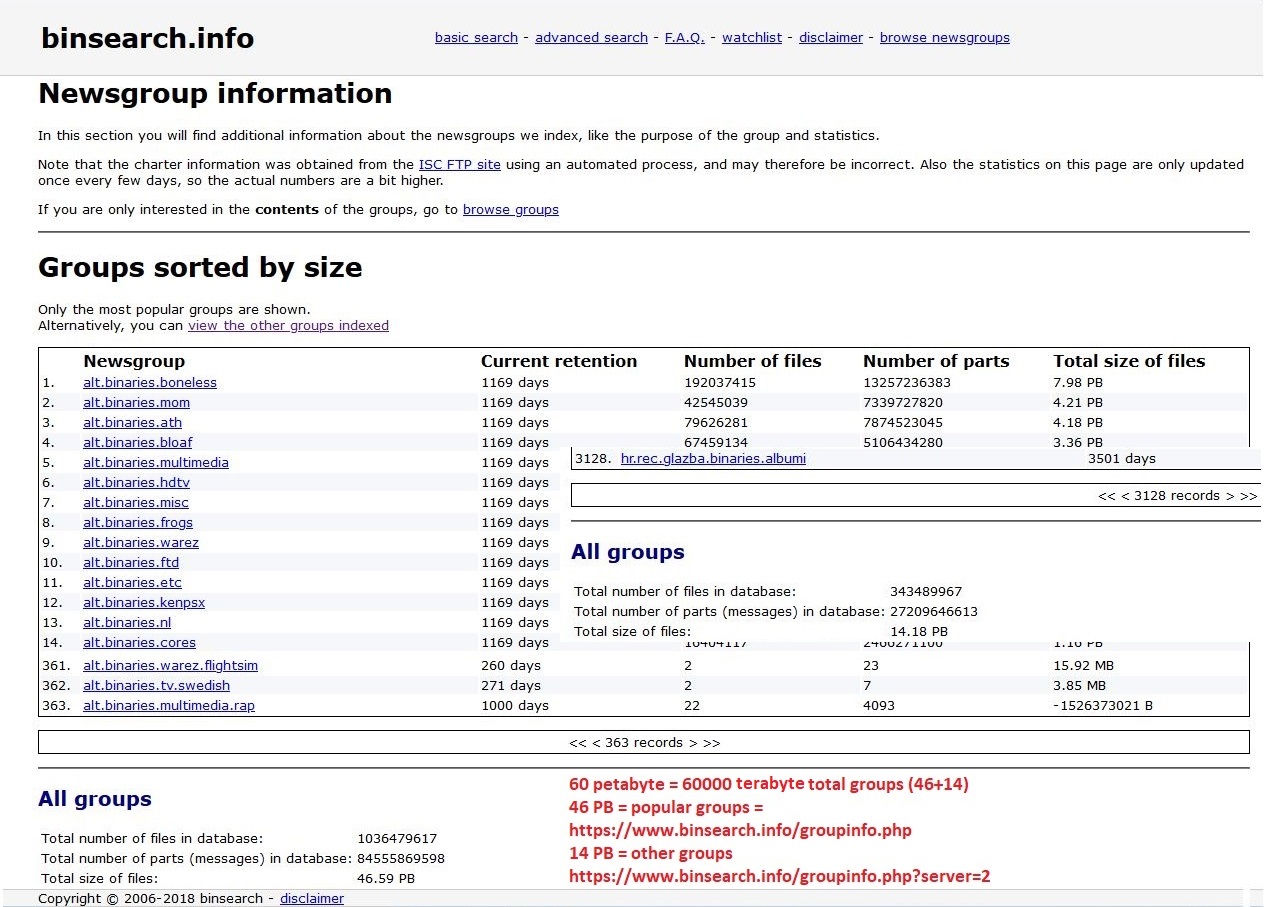

A Usenet newsgroup is a repository, usually within the Usenet system, for messages posted by users in different locations using the Internet. Newsgroups are not only discussion groups or conversations, but also a place to publish articles, start development efforts such as the creation of Linux, sustain mailing lists, and upload files. This is possible because the protocol imposes no article size limit, leaving any limit to the providers. In the late 1980s, providers often limited Usenet articles to 60,000 characters; over time, Usenet groups split into two types: text groups, mainly for discussions, conversations, and articles, limited by most providers to about 32,000 characters, and binary groups for file transfer, with provider limits ranging from less than 1 MB to about 4 MB. Newsgroups are technically distinct from, but functionally similar to, discussion forums on the World Wide Web. Newsreader software is used to read the content of newsgroups. Before the adoption ...

Internet Relay Chat

IRC (Internet Relay Chat) is a text-based chat system for instant messaging. IRC is designed for group communication in discussion forums, called channels, but also allows one-on-one communication via private messages as well as chat and data transfer, including file sharing. Internet Relay Chat is implemented as an application layer protocol to facilitate communication in the form of text. The chat process works on a client–server networking model. Users connect, using a client (which may be a web app, a standalone desktop program, or embedded into part of a larger program), to an IRC server, which may be part of a larger IRC network. Examples of ways used to connect include the programs Mibbit, KiwiIRC, mIRC and the paid service IRCCloud. IRC usage has been declining steadily since 2003, losing 60 percent of its users by 2012. In April 2011, the t ...
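
To make the text-based, client–server nature of the protocol concrete, the sketch below parses and formats single IRC protocol lines. It assumes the basic message layout from RFC 1459 (an optional `:prefix`, a command, space-separated parameters, and an optional trailing parameter introduced by ` :`), which the passage above does not itself spell out. The helper names `parse_irc_line` and `format_privmsg` are hypothetical, the nickname and channel are made up, and newer extensions such as message tags are ignored.

```python
def parse_irc_line(line):
    """Split one non-empty IRC protocol line into (prefix, command, params).

    Assumes the RFC 1459 layout: [":" prefix " "] command params [" :" trailing]
    """
    line = line.rstrip("\r\n")
    prefix = None
    if line.startswith(":"):
        # The prefix identifies the sender (a server or nick!user@host).
        prefix, _, line = line[1:].partition(" ")
    if " :" in line:
        # Everything after the first " :" is one parameter that may contain spaces.
        head, _, trailing = line.partition(" :")
        params = head.split()
        params.append(trailing)
    else:
        params = line.split()
    command = params.pop(0)
    return prefix, command, params


def format_privmsg(target, text):
    """Build the line a client would send to message a channel or a user."""
    return f"PRIVMSG {target} :{text}\r\n"


# A line as a server might relay it to clients in the (made-up) channel #research.
incoming = ":alice!a@host.example PRIVMSG #research :hello there\r\n"
print(parse_irc_line(incoming))
print(repr(format_privmsg("#research", "hi alice")))
```

The same `PRIVMSG` command covers both channel messages and one-on-one private messages; only the target differs (a channel name such as `#research` or a nickname).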