Informatica Timișoara

Informatica is an American software development company founded in 1993. It is headquartered in Redwood City, California. Its core products include Enterprise Cloud Data Management and Data Integration. It was co-founded by Gaurav Dhillon and Diaz Nesamoney. Amit Walia is the company's CEO.

History

On 29 April 1999, Informatica's initial public offering on the NASDAQ listed its shares under the ticker symbol INFA. On 7 April 2015, Permira and the Canada Pension Plan Investment Board (CPPIB) announced that a company controlled by the Permira funds and CPPIB would acquire Informatica for approximately US$5.3 billion. The acquisition was completed on 6 August 2015, and Microsoft and Salesforce Ventures invested in the company as part of the deal. The company's stock stopped trading on the NASDAQ under INFA on the same date. On 27 October 2021, Informatica became publicly traded again under the INFA symbol, this time on the NYSE, opening at $27. ...

Public Company

A public company is a company whose ownership is organized through shares of stock that are intended to be freely traded on a stock exchange or in over-the-counter markets. A public (publicly traded) company can be listed on a stock exchange (a listed company), which facilitates the trading of shares, or it can be unlisted (an unlisted public company). In some jurisdictions, public companies above a certain size must be listed on an exchange. In most cases, public companies are private enterprises in the private sector, and "public" emphasizes their reporting and trading on the public markets. Public companies are formed within the legal systems of particular states, and therefore have associations and formal designations that are distinct and separate in the polity in which they reside. In the United States, for example, a public company is usually a type of corporation (though a corporation need not be a public company), while in the United Kingdom it is usually a public limited company (plc), ...

Software Companies Established In 1993

Software is a set of computer programs and associated documentation and data. This is in contrast to hardware, from which the system is built and which actually performs the work. At the lowest programming level, executable code consists of machine language instructions supported by an individual processor, typically a central processing unit (CPU) or a graphics processing unit (GPU). Machine language consists of groups of binary values signifying processor instructions that change the state of the computer from its preceding state. For example, an instruction may change the value stored in a particular storage location in the computer, an effect that is not directly observable to the user. An instruction may also invoke one of many input or output operations, for example displaying some text on a computer screen, causing state changes that should be visible to the user. The processor executes the instructions in the order they are provided, unless it is instructed to ...
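To make the idea of instructions changing processor state concrete, here is a minimal sketch of a hypothetical toy machine. The opcodes (`LOAD`, `ADD`, `DEC`, `JNZ`, `PRINT`) and the two-register design are invented for illustration and do not correspond to any real instruction set. The sketch only shows in-order execution, a jump that changes that order, and the difference between invisible state changes and visible output.

```python
from typing import Dict, List, Tuple

# Hypothetical toy instruction format: (opcode, operand, operand, ...).
# This is NOT a real CPU's instruction set; it only illustrates the idea.
Instruction = Tuple[str, ...]


def run(program: List[Instruction]) -> Tuple[Dict[str, int], List[str]]:
    """Execute instructions in order unless a jump redirects control."""
    registers = {"A": 0, "B": 0}   # internal state, not visible to the user
    output: List[str] = []         # visible output (like text on a screen)
    pc = 0                         # program counter: index of next instruction
    while pc < len(program):
        op, *args = program[pc]
        pc += 1                    # default: move on to the next instruction
        if op == "LOAD":           # LOAD reg value: store a constant
            registers[args[0]] = int(args[1])
        elif op == "ADD":          # ADD dst src: dst += src (invisible change)
            registers[args[0]] += registers[args[1]]
        elif op == "DEC":          # DEC reg: reg -= 1
            registers[args[0]] -= 1
        elif op == "JNZ":          # JNZ reg target: jump if reg != 0
            if registers[args[0]] != 0:
                pc = int(args[1])
        elif op == "PRINT":        # output operation visible to the user
            output.append(str(registers[args[0]]))
        else:
            raise ValueError(f"unknown opcode {op!r}")
    return registers, output


# Sum 3 + 2 + 1 with a loop, then print the result.
program = [
    ("LOAD", "A", "0"),
    ("LOAD", "B", "3"),
    ("ADD", "A", "B"),   # index 2: loop body
    ("DEC", "B"),
    ("JNZ", "B", "2"),   # jump back to index 2 while B != 0
    ("PRINT", "A"),
]
regs, out = run(program)
```

Running the program leaves `A` at 6 and `B` at 0. Only the `PRINT` step produces anything a user would see.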

American Companies Established In 1993

American(s) may refer to:

* American, something of, from, or related to the United States of America, commonly known as the "United States" or "America"
** Americans, citizens and nationals of the United States of America
** American ancestry, people who self-identify their ancestry as "American"
** American English, the set of varieties of the English language native to the United States
** Native Americans in the United States, indigenous peoples of the United States
* American, something of, from, or related to the Americas, also known as "America"
** Indigenous peoples of the Americas
* American (word), for analysis and history of the meanings in various contexts

Organizations

* American Airlines, U.S.-based airline headquartered in Fort Worth, Texas
* American Athletic Conference, an American college athletic conference
* American Recordings (record label), a record label previously known as Def American
* American University, in Washington, D.C.

Sports teams

Soccer ...

Data Warehouse

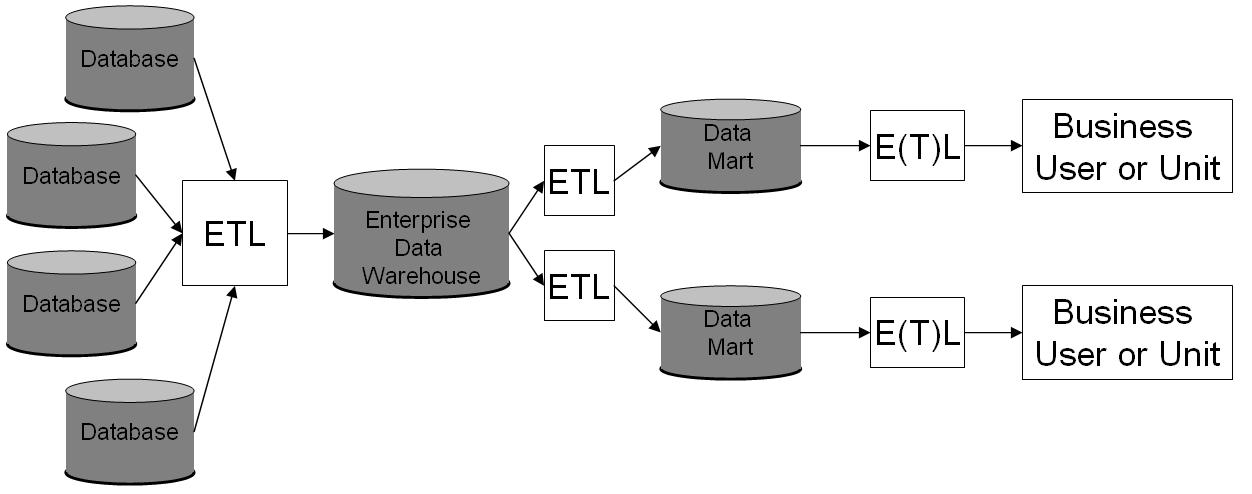

In computing, a data warehouse (DW or DWH), also known as an enterprise data warehouse (EDW), is a system used for reporting and data analysis, and is considered a core component of business intelligence. DWs are central repositories of integrated data from one or more disparate sources. They store current and historical data in a single place, where it is used to create analytical reports for workers throughout the enterprise. The data stored in the warehouse is uploaded from operational systems (such as marketing or sales). It may pass through an operational data store and may require data cleansing to ensure data quality before it is used in the DW for reporting. Extract, transform, load (ETL) and extract, load, transform (ELT) are the two main approaches used to build a data warehouse system.

ETL-based data warehousing

The typical extract, transform, load (ETL)-based data warehouse uses ...
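The ETL flow described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the "operational system" is a hypothetical in-memory list of sales rows, the "warehouse" is an in-memory SQLite database, and the table name `fact_sales` and the cleansing rules are invented for the example. It is not how any particular product builds a warehouse.

```python
import sqlite3

# Hypothetical operational source (a stand-in for a sales system).
sales_rows = [
    {"order_id": 1, "customer": " Alice ", "amount": "120.50", "date": "2023-01-05"},
    {"order_id": 2, "customer": "bob", "amount": "80", "date": "2023-01-06"},
    {"order_id": 3, "customer": "ALICE", "amount": None, "date": "2023-02-01"},  # dirty row
]


def extract():
    """Extract: copy rows out of the operational system."""
    return list(sales_rows)


def transform(rows):
    """Transform: cleanse and standardize BEFORE loading (the ETL order)."""
    clean = []
    for r in rows:
        if r["amount"] is None:          # drop rows failing a basic quality check
            continue
        clean.append((
            r["order_id"],
            r["customer"].strip().title(),  # standardize customer names
            float(r["amount"]),             # text -> number
            r["date"][:7],                  # keep the month for historical analysis
        ))
    return clean


def load(conn, rows):
    """Load: write the cleansed rows into a warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS fact_sales "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, month TEXT)"
    )
    conn.executemany("INSERT INTO fact_sales VALUES (?, ?, ?, ?)", rows)
    conn.commit()


warehouse = sqlite3.connect(":memory:")
load(warehouse, transform(extract()))

# An analytical report built from the warehouse, not the operational system.
report = warehouse.execute(
    "SELECT customer, SUM(amount) FROM fact_sales GROUP BY customer ORDER BY customer"
).fetchall()
```

In an ELT variant, the raw rows would be loaded first and the cleansing expressed as SQL that runs inside the warehouse.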

Data Governance

Data governance is a term used on both a macro and a micro level. The former is a political concept and forms part of international relations and Internet governance. The latter is a data management concept and forms part of corporate data governance.

Macro level

On the macro level, data governance refers to the governing of cross-border data flows by countries, and is therefore more precisely called international data governance. This new field consists of "norms, principles and rules governing various types of data."

Micro level

On the micro level, the focus is on an individual company. Here data governance is a data management concept concerning the capability that enables an organization to ensure that high data quality exists throughout the complete lifecycle of the data, and that data controls are implemented to support business objectives. The key focus areas of data governance include availability, usability, consistency, data integrity and data security, standard compliance and ...

Master Data

Master data represents "data about the business entities that provide context for business transactions". The most commonly found categories of master data are parties (individuals and organisations, and their roles, such as customers, suppliers and employees), products, financial structures (such as ledgers and cost centers) and locational concepts. Master data should be distinguished from reference data. While both provide context for business transactions, reference data is concerned with classification and categorisation, while master data is concerned with business entities. Master data is almost always non-transactional by nature. There are edge cases where an organization may need to treat certain transactional processes and operations as "master data". This arises, for example, where information about master data entities, such as customers or products, is only contained within transactional data such as orders and receipts and is not housed separately. ...
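The distinction between reference data, master data and transactional data, and the edge case where master data lives only inside transactions, can be illustrated with a small sketch. All names here (`COUNTRIES`, `Customer`, `derive_customer_master`) and the sample orders are hypothetical. The code simply derives one customer record per customer ID from order rows and checks country codes against a reference list.

```python
from dataclasses import dataclass

# Reference data: a classification list (hypothetical subset of country codes).
COUNTRIES = {"US": "United States", "RO": "Romania"}


@dataclass(frozen=True)
class Customer:
    """Master data: a business entity that gives transactions context."""
    customer_id: str
    name: str
    country_code: str


# Transactional data: orders that happen to embed customer details
# (the edge case where no separate customer store exists).
orders = [
    {"order_id": 101, "customer_id": "C1", "name": "Acme Corp", "country": "US", "total": 250.0},
    {"order_id": 102, "customer_id": "C2", "name": "Beta SRL", "country": "RO", "total": 75.0},
    {"order_id": 103, "customer_id": "C1", "name": "Acme Corp", "country": "US", "total": 40.0},
]


def derive_customer_master(order_rows):
    """Build one master record per customer ID from transactional rows."""
    master = {}
    for o in order_rows:
        if o["country"] not in COUNTRIES:  # validate against reference data
            raise ValueError(f"unknown country code {o['country']!r}")
        # The first occurrence wins; later orders for the same customer are repeats.
        master.setdefault(
            o["customer_id"], Customer(o["customer_id"], o["name"], o["country"])
        )
    return master


customers = derive_customer_master(orders)
```

Three orders collapse into two customer records. The orders remain transactional data; the derived `Customer` records are what an organization would then manage as master data.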

Data Virtualization

Data virtualization is an approach to data management that allows an application to retrieve and manipulate data without requiring technical details about the data, such as how it is formatted at the source or where it is physically located. It can provide a single customer view (or a single view of any other entity) of the overall data. Unlike the traditional extract, transform, load ("ETL") process, the data remains in place, and real-time access is given to the source system for the data. This reduces the risk of data errors and avoids the workload of moving data that may never be used, and it does not attempt to impose a single data model on the data (an example of heterogeneous data is a federated database system). The technology also supports writing transaction data updates back to the source systems.
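A minimal sketch of the idea follows. Assume two hypothetical sources that stay where they are: a dictionary standing in for a CRM service and an in-memory SQLite database standing in for a billing system. The `VirtualCustomerView` class is invented for illustration. It queries both sources at request time to build a single customer view, and it writes updates back to the source instead of to a copy.

```python
import sqlite3

# Hypothetical source 1: a CRM system (stand-in: an in-memory dict).
crm = {"C1": {"name": "Acme Corp", "email": "ops@acme.example"}}

# Hypothetical source 2: a billing database, left in place.
billing = sqlite3.connect(":memory:")
billing.execute("CREATE TABLE invoices (customer_id TEXT, amount REAL)")
billing.executemany("INSERT INTO invoices VALUES (?, ?)", [("C1", 100.0), ("C1", 50.0)])


class VirtualCustomerView:
    """Answers queries by reaching into each source at request time.

    Nothing is copied into a separate store, so results reflect the
    sources as they are when the query runs.
    """

    def __init__(self, crm_source, billing_conn):
        self.crm = crm_source
        self.billing = billing_conn

    def get(self, customer_id):
        profile = self.crm.get(customer_id, {})
        (billed,) = self.billing.execute(
            "SELECT COALESCE(SUM(amount), 0) FROM invoices WHERE customer_id = ?",
            (customer_id,),
        ).fetchone()
        # Combine both sources into a single customer view.
        return {"customer_id": customer_id, **profile, "billed_total": billed}

    def update_email(self, customer_id, email):
        # Write-back: the update goes to the source system itself.
        self.crm[customer_id]["email"] = email


view = VirtualCustomerView(crm, billing)
```

Because the view holds no copy, a new invoice inserted into `billing` appears in the next `view.get("C1")` call without any reload step.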

Data Replication

Replication in computing involves sharing information so as to ensure consistency between redundant resources, such as software or hardware components, to improve reliability, fault-tolerance, or accessibility.

Terminology

Replication in computing can refer to:

* Data replication, where the same data is stored on multiple storage devices
* Computation replication, where the same computing task is executed many times. Computational tasks may be:
** Replicated in space, where tasks are executed on separate devices
** Replicated in time, where tasks are executed repeatedly on a single device

Replication in space or in time is often linked to scheduling algorithms. Access to a replicated entity is typically uniform with access to a single non-replicated entity. The replication itself should be transparent to an external user. In a failure scenario, a failover of replicas should be hidden as much as possible with respect to quality of service. ...
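Data replication with transparent failover, and computation replicated in time, can both be sketched briefly. This is a simplified illustration, not a production protocol. The classes are hypothetical. Writes go synchronously to every live replica, and reads fall through to the next live replica, so a client calling `get` does not notice a failure. Majority voting for the repeated computation is one common choice among several and is used here as an assumption.

```python
from collections import Counter


class Replica:
    """One storage device holding a full copy of the data."""

    def __init__(self, name):
        self.name = name
        self.data = {}
        self.alive = True


class ReplicatedStore:
    """Data replication: the same data is stored on several replicas.

    Clients use put/get exactly as they would with a single store;
    failover between replicas is hidden from them.
    """

    def __init__(self, replicas):
        self.replicas = replicas

    def put(self, key, value):
        live = [r for r in self.replicas if r.alive]
        if not live:
            raise RuntimeError("no live replicas")
        for r in live:              # write the same value to every live copy
            r.data[key] = value

    def get(self, key):
        for r in self.replicas:     # fail over to the next live replica
            if r.alive:
                return r.data[key]
        raise RuntimeError("no live replicas")


def run_replicated_in_time(task, times=3):
    """Computation replicated in time: rerun on one device, majority vote."""
    results = [task() for _ in range(times)]
    value, count = Counter(results).most_common(1)[0]
    if count <= times // 2:
        raise RuntimeError("no majority result")
    return value


store = ReplicatedStore([Replica("a"), Replica("b"), Replica("c")])
store.put("x", 42)
store.replicas[0].alive = False     # simulate a failed replica
```

A replica that is down during a write misses that write. A real system would need a resynchronization step before the replica rejoins, and this sketch omits it.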

Data Quality

Data quality refers to the state of qualitative or quantitative pieces of information. There are many definitions of data quality, but data is generally considered high quality if it is "fit for its intended uses in operations, decision making and planning". Data is also deemed of high quality if it correctly represents the real-world construct to which it refers. Apart from these definitions, as the number of data sources increases, the question of internal data consistency becomes significant, regardless of fitness for use for any particular external purpose. People often disagree about data quality, even when discussing the same set of data used for the same purpose. In such cases, data governance is used to form agreed-upon definitions and standards for data quality, and data cleansing, including standardization, may be required to ensure data quality.

Definitions

Defining data quality is difficult due to the ...
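The ideas of cleansing through standardization, rule-based quality checks and internal consistency across sources can be shown in a short sketch. The rules here (a loose e-mail pattern and two-letter country codes) are hypothetical examples. In practice they would come from the definitions agreed through data governance.

```python
import re

# Hypothetical quality rules (real rules come from agreed governance standards).
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def standardize(record):
    """Cleansing: normalize formatting so equivalent values compare equal."""
    return {
        "id": record["id"],
        "email": record.get("email", "").strip().lower(),
        "country": record.get("country", "").strip().upper(),
    }


def quality_issues(record):
    """Check one standardized record against the rules; return problems found."""
    issues = []
    if not EMAIL_RE.match(record["email"]):
        issues.append("invalid email")
    if len(record["country"]) != 2:
        issues.append("country not a 2-letter code")
    return issues


def inconsistencies(source_a, source_b):
    """Internal consistency: the same id should carry the same values everywhere."""
    b_by_id = {r["id"]: r for r in source_b}
    return sorted(
        r["id"] for r in source_a if r["id"] in b_by_id and r != b_by_id[r["id"]]
    )


# Two hypothetical sources describing the same customers.
crm = [standardize(r) for r in [
    {"id": 1, "email": " Ana@Example.COM ", "country": "ro"},
    {"id": 2, "email": "not-an-email", "country": "USA"},
]]
erp = [standardize(r) for r in [
    {"id": 1, "email": "ana@example.com", "country": "RO"},
    {"id": 2, "email": "bob@example.com", "country": "US"},
]]
```

Standardization alone makes record 1 consistent across both sources. Record 2 fails both rules in the CRM copy and disagrees with the ERP copy.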

Data Masking

Data masking or data obfuscation is the process of modifying sensitive data in such a way that it is of no or little value to unauthorized intruders while still being usable by software or authorized personnel. Depending on the context, data masking may also be referred to as anonymization or tokenization. The main reason to mask data is to protect information that is classified as personally identifiable information, or mission-critical data. However, the data must remain usable for the purposes of undertaking valid test cycles. It must also look real and appear consistent. Masking is most commonly applied to data that is represented outside of a corporate production system, in other words, where data is needed for application development, building program extensions and conducting various test cycles. It is common practice in enterprise computing to take data from the production systems to fill the data component required for these non-production ...
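To illustrate masking that keeps data realistic and consistent, here is a sketch using only Python's standard `hmac` and `hashlib` modules. The key, function names and output formats are assumptions for illustration. Keyed hashing makes tokens deterministic: the same input always maps to the same token, so masked tables can still be joined. Note that deterministic tokens are pseudonymization rather than full anonymization.

```python
import hashlib
import hmac

# Assumption: a secret key known only to the masking job, never copied
# into the non-production environment that receives the masked data.
MASKING_KEY = b"replace-with-a-secret-key"


def tokenize(value: str, length: int = 12) -> str:
    """Deterministic token: identical inputs give identical tokens,
    so joins across masked tables still line up (consistency)."""
    digest = hmac.new(MASKING_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:length]


def mask_email(email: str) -> str:
    """Keep a realistic local@domain shape while hiding the real address."""
    local, _, _ = email.partition("@")
    # example.com is a reserved domain, so masked addresses cannot reach anyone.
    return f"user_{tokenize(local, 8)}@example.com"


def mask_card(card_number: str) -> str:
    """Show only the last four digits, a common display-masking convention."""
    digits = [c for c in card_number if c.isdigit()]
    return "*" * (len(digits) - 4) + "".join(digits[-4:])


masked_email = mask_email("alice@corp.com")
masked_card = mask_card("4111 1111 1111 1234")
```

Masked e-mail addresses keep a valid shape that test code can process, and card numbers keep their length. Neither reveals the original value to anyone without the key.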

Complex Event Processing

Event processing is a method of tracking and analyzing (processing) streams of information (data) about things that happen (events), and deriving a conclusion from them. Complex event processing, or CEP, consists of a set of concepts and techniques developed in the early 1990s for processing real-time events and extracting information from event streams as they arrive. The goal of complex event processing is to identify meaningful events (such as opportunities or threats) in real-time situations and respond to them as quickly as possible. These events may occur across the various layers of an organization, as sales leads, orders or customer service calls. They may also be news items, text messages, social media posts, stock market feeds, traffic reports, weather reports, or other kinds of data. An event may also be defined as a "change of state," when a measurement exceeds a predefined threshold of time, temperature, or other value. Analysts have suggested that CEP will give ...
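A core CEP technique is matching a pattern over a time window of incoming events and emitting a higher-level "complex" event when it matches. The sketch below is illustrative and not any CEP engine's API. The event types, the 80-degree threshold, the three-readings rule and the 60-second window are all hypothetical parameters. Readings are processed one at a time as they arrive, and an alert is derived when enough threshold-exceeding readings from the same sensor fall within the window.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Dict, Iterable, Iterator, List


@dataclass(frozen=True)
class Reading:
    """A simple event: one sensor measurement."""
    timestamp: float  # seconds
    sensor: str
    temperature: float


@dataclass(frozen=True)
class Alert:
    """A complex event derived from several simple events."""
    sensor: str
    first_ts: float
    last_ts: float


def detect_overheating(
    events: Iterable[Reading],
    threshold: float = 80.0,   # hypothetical "change of state" threshold
    count: int = 3,            # readings needed to form the pattern
    window_s: float = 60.0,    # time window for the pattern
) -> Iterator[Alert]:
    """Emit an Alert when `count` readings above `threshold` from the same
    sensor arrive within `window_s` seconds of each other."""
    recent: Dict[str, Deque[float]] = {}
    for e in events:                       # process events as they arrive
        if e.temperature <= threshold:
            continue                       # not part of the pattern
        q = recent.setdefault(e.sensor, deque())
        q.append(e.timestamp)
        while q and e.timestamp - q[0] > window_s:
            q.popleft()                    # drop readings outside the window
        if len(q) >= count:
            yield Alert(e.sensor, q[0], q[-1])
            q.clear()                      # one burst produces one alert


stream: List[Reading] = [
    Reading(0.0, "s1", 85.0),
    Reading(10.0, "s1", 70.0),
    Reading(20.0, "s1", 90.0),
    Reading(30.0, "s1", 95.0),
    Reading(200.0, "s1", 99.0),  # isolated spike, too far from the others
]
alerts = list(detect_overheating(stream))
```

Because `detect_overheating` is a generator, it can consume an unbounded live stream and yield alerts as soon as each pattern completes.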