
Inductive Bias

The inductive bias (also known as learning bias) of a learning algorithm is the set of assumptions that the learner uses to predict outputs for inputs that it has not encountered. In machine learning, one aims to construct algorithms that are able to *learn* to predict a certain target output. To achieve this, the learning algorithm is presented with training examples that demonstrate the intended relation between input and output values. The learner is then supposed to approximate the correct output, even for examples that were not shown during training. Without any additional assumptions, this problem cannot be solved, since unseen situations might have an arbitrary output value. The necessary assumptions about the nature of the target function are subsumed in the phrase *inductive bias*. A classical example of an inductive bias is Occam's razor, which assumes that the simplest consistent hypothesis about the target function is actually the best. Here consistent ...

Machine Learning

Machine learning (ML) is a field of inquiry devoted to understanding and building methods that 'learn', that is, methods that leverage data to improve performance on some set of tasks. It is seen as a part of artificial intelligence. Machine learning algorithms build a model based on sample data, known as training data, in order to make predictions or decisions without being explicitly programmed to do so. Machine learning algorithms are used in a wide variety of applications, such as in medicine, email filtering, speech recognition, agriculture, and computer vision, where it is difficult or unfeasible to develop conventional algorithms to perform the needed tasks (Hu, J.; Niu, H.; Carrasco, J.; Lennox, B.; Arvin, F., "Voronoi-Based Multi-Robot Autonomous Exploration in Unknown Environments via Deep Reinforcement Learning", IEEE Transactions on Vehicular Technology, 2020). A subset of machine learning is closely related to computational statistics, which focuses on making pred ...

Support Vector Machines

In machine learning, support vector machines (SVMs, also support vector networks) are supervised learning models with associated learning algorithms that analyze data for classification and regression analysis. Developed at AT&T Bell Laboratories by Vladimir Vapnik with colleagues (Boser et al., 1992; Guyon et al., 1993; Cortes and Vapnik, 1995; Vapnik et al., 1997), SVMs are one of the most robust prediction methods, being based on statistical learning frameworks or VC theory proposed by Vapnik (1982, 1995) and Chervonenkis (1974). Given a set of training examples, each marked as belonging to one of two categories, an SVM training algorithm builds a model that assigns new examples to one category or the other, making it a non-probabilistic binary linear classifier (although methods such as Platt scaling exist to use SVM in a probabilistic classification setting). SVM maps training examples to points in space so as to maximise the width of the gap between the two categories. New ...

Cognitive Bias

A cognitive bias is a systematic pattern of deviation from norm or rationality in judgment. Individuals create their own "subjective reality" from their perception of the input. An individual's construction of reality, not the objective input, may dictate their behavior in the world. Thus, cognitive biases may sometimes lead to perceptual distortion, inaccurate judgment, illogical interpretation, or what is broadly called irrationality. Although it may seem like such misperceptions would be aberrations, biases can help humans find commonalities and shortcuts to assist in the navigation of common situations in life. Some cognitive biases are presumably adaptive. Cognitive biases may lead to more effective actions in a given context. Furthermore, allowing cognitive biases enables faster decisions which can be desirable when timeliness is more valuable than accuracy, as illustrated in heuristics. Other cognitive biases are a "by-product" of human processing limitations, result ...

Algorithmic Bias

Algorithmic bias describes systematic and repeatable errors in a computer system that create "unfair" outcomes, such as "privileging" one category over another in ways different from the intended function of the algorithm. Bias can emerge from many factors, including but not limited to the design of the algorithm or the unintended or unanticipated use or decisions relating to the way data is coded, collected, selected or used to train the algorithm. For example, algorithmic bias has been observed in search engine results and social media platforms. This bias can have impacts ranging from inadvertent privacy violations to reinforcing social biases of race, gender, sexuality, and ethnicity. The study of algorithmic bias is most concerned with algorithms that reflect "systematic and unfair" discrimination. This bias has only recently been addressed in legal frameworks, such as the European Union's General Data Protection Regulation (2018) and the proposed Artificial Intelligence ...

K-nearest Neighbors Algorithm

In statistics, the *k*-nearest neighbors algorithm (*k*-NN) is a non-parametric supervised learning method first developed by Evelyn Fix and Joseph Hodges in 1951, and later expanded by Thomas Cover. It is used for classification and regression. In both cases, the input consists of the *k* closest training examples in a data set. The output depends on whether *k*-NN is used for classification or regression:

* In *k-NN classification*, the output is a class membership. An object is classified by a plurality vote of its neighbors, with the object being assigned to the class most common among its *k* nearest neighbors (*k* is a positive integer, typically small). If *k* = 1, then the object is simply assigned to the class of that single nearest neighbor.
* In *k-NN regression*, the output is the property value for the object. This value is the average of the values of *k* nearest neighbors. If *k* = 1, then the output is simply assigned to t ...
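The two output modes described above can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the function name `knn_predict`, the `(features, target)` data layout, and the choice of Euclidean distance are assumptions made for the example.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3, mode="classification"):
    """Predict a target for `query` from its k nearest training examples.

    `train` is a list of (feature_tuple, target) pairs (hypothetical layout).
    Euclidean distance is an assumption; other metrics could be swapped in.
    """
    # Keep the k training examples closest to the query point.
    neighbors = sorted(train, key=lambda ex: math.dist(ex[0], query))[:k]
    targets = [target for _, target in neighbors]
    if mode == "classification":
        # Plurality vote: the class most common among the k neighbors.
        return Counter(targets).most_common(1)[0][0]
    # Regression: the average of the neighbors' values.
    return sum(targets) / len(targets)


labeled = [((0, 0), "a"), ((0, 1), "a"), ((5, 5), "b"), ((6, 5), "b"), ((5, 6), "b")]
valued = [((1.0,), 10.0), ((2.0,), 20.0), ((10.0,), 100.0)]

print(knn_predict(labeled, (0.2, 0.1), k=3))                 # class vote
print(knn_predict(valued, (1.5,), k=2, mode="regression"))   # neighbor average
```

With `k=1` both modes reduce to copying the target of the single nearest neighbor.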

Feature Selection

In machine learning and statistics, feature selection, also known as variable selection, attribute selection or variable subset selection, is the process of selecting a subset of relevant features (variables, predictors) for use in model construction. Feature selection techniques are used for several reasons:

* simplifying models to make them easier for researchers and users to interpret,
* shortening training times,
* avoiding the curse of dimensionality,
* improving the data's compatibility with a learning model class,
* encoding inherent symmetries present in the input space.

The central premise when using a feature selection technique is that the data contains some features that are either *redundant* or *irrelevant*, and can thus be removed without incurring much loss of information. *Redundant* and *irrelevant* are two distinct notions, since one relevant feature may be redundant in the presence of another relevant feature with which it is strongly correlated. Feature sele ...

Feature Space

In machine learning and pattern recognition, a feature is an individual measurable property or characteristic of a phenomenon. Choosing informative, discriminating and independent features is a crucial element of effective algorithms in pattern recognition, classification and regression. Features are usually numeric, but structural features such as strings and graphs are used in syntactic pattern recognition. The concept of "feature" is related to that of explanatory variable used in statistical techniques such as linear regression.

Classification

A numeric feature can be conveniently described by a feature vector. One way to achieve binary classification is using a linear predictor function (related to the perceptron) with a feature vector as input. The method consists of calculating the scalar product between the feature vector and a vector of weights, qualifying those observations whose result exceeds a threshold. Algorithms for classification from a feature vector includ ...
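The linear predictor described above amounts to a dot product followed by a threshold test. The sketch below illustrates that rule only; the function name `linear_classify` and the example weights are hypothetical, and in practice the weights would be learned from training data.

```python
def linear_classify(features, weights, threshold=0.0):
    """Return True when the observation qualifies, i.e. when the scalar
    product of the feature vector and the weight vector exceeds the threshold."""
    if len(features) != len(weights):
        raise ValueError("feature and weight vectors must have the same length")
    score = sum(x * w for x, w in zip(features, weights))
    return score > threshold


# Hand-picked weights, for illustration only.
weights = [0.5, -0.25]
print(linear_classify([2.0, 1.0], weights))  # score 0.75 exceeds 0.0
print(linear_classify([1.0, 2.0], weights))  # score 0.0 does not exceed 0.0
```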

Minimum Description Length

Minimum Description Length (MDL) is a model selection principle where the shortest description of the data is the best model. MDL methods learn through a data compression perspective and are sometimes described as mathematical applications of Occam's razor. The MDL principle can be extended to other forms of inductive inference and learning, for example to estimation and sequential prediction, without explicitly identifying a single model of the data. MDL has its origins mostly in information theory and has been further developed within the general fields of statistics, theoretical computer science and machine learning, and more narrowly computational learning theory. Historically, there are different, yet interrelated, usages of the definite noun phrase "*the* minimum description length *principle*" that vary in what is meant by *description*:

* Within Jorma Rissanen's theory of learning, a central concept of information theory, models are statistical hypotheses and desc ...

No Free Lunch In Search And Optimization

In computational complexity and optimization the no free lunch theorem is a result that states that for certain types of mathematical problems, the computational cost of finding a solution, averaged over all problems in the class, is the same for any solution method. The name alludes to the saying "there ain't no such thing as a free lunch", that is, no method offers a "short cut". This is under the assumption that the search space is a probability density function. It does not apply to the case where the search space has underlying structure (e.g., is a differentiable function) that can be exploited more efficiently (e.g., Newton's method in optimization) than random search or even has closed-form solutions (e.g., the extrema of a quadratic polynomial) that can be determined without search at all. For such probabilistic assumptions, the outputs of all procedures solving a particular type of problem are statistically identical. A colourful way of describing such a circumstance, ...

Occam's Razor

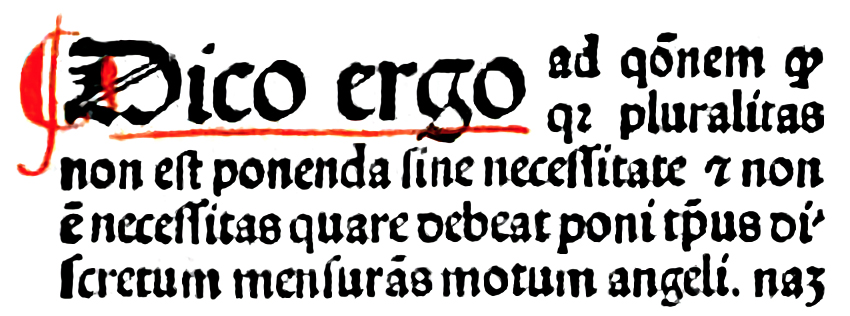

Occam's razor, Ockham's razor, or Ocham's razor (Latin: novacula Occami), also known as the principle of parsimony or the law of parsimony (Latin: lex parsimoniae), is the problem-solving principle that "entities should not be multiplied beyond necessity". It is generally understood in the sense that with competing theories or explanations, the simpler one, for example a model with fewer parameters, is to be preferred. The idea is frequently attributed to English Franciscan friar William of Ockham, a scholastic philosopher and theologian, although he never used these exact words. This philosophical razor advocates that when presented with competing hypotheses about the same prediction, one should select the solution with the fewest assumptions, and that this is not meant to be a way of choosing between hypotheses that make different predictions. Similarly, in science, Occam's razor is used as an abductive heuristic in the development of theoretical models rather than as a rigoro ...

Cross-validation (statistics)

Cross-validation, sometimes called rotation estimation or out-of-sample testing, is any of various similar model validation techniques for assessing how the results of a statistical analysis will generalize to an independent data set. Cross-validation is a resampling method that uses different portions of the data to test and train a model on different iterations. It is mainly used in settings where the goal is prediction, and one wants to estimate how accurately a predictive model will perform in practice. In a prediction problem, a model is usually given a dataset of *known data* on which training is run (*training dataset*), and a dataset of *unknown data* (or *first seen* data) against which the model is tested (called the validation dataset or *testing set*). The goal of cross-validation is to test the model's ability to predict new data that was not used in estimating it, in order to flag problems like overfitting or selection bias and to give an insight on ...
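One common variant, k-fold cross-validation, can be sketched as follows. The example is a rough illustration under stated assumptions: the helper names `k_fold_indices`, `cross_val_mse`, and `fit_mean` are hypothetical, the folds are contiguous and unshuffled, and mean squared error is just one possible score.

```python
def k_fold_indices(n_samples, n_folds):
    """Yield (train_indices, test_indices) pairs for contiguous folds.

    Assumes 1 <= n_folds <= n_samples so that no fold is empty.
    """
    base, extra = divmod(n_samples, n_folds)
    indices = list(range(n_samples))
    start = 0
    for fold in range(n_folds):
        # The first `extra` folds receive one additional sample each.
        size = base + (1 if fold < extra else 0)
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size


def cross_val_mse(xs, ys, fit, n_folds=5):
    """Average held-out mean squared error over all folds.

    `fit(train_xs, train_ys)` must return a callable model: x -> prediction.
    """
    fold_errors = []
    for train, test in k_fold_indices(len(xs), n_folds):
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        # Score only on data that was not used to fit the model.
        mse = sum((model(xs[i]) - ys[i]) ** 2 for i in test) / len(test)
        fold_errors.append(mse)
    return sum(fold_errors) / len(fold_errors)


def fit_mean(train_xs, train_ys):
    """Baseline model (illustrative): always predict the training mean."""
    mean = sum(train_ys) / len(train_ys)
    return lambda x: mean


xs = list(range(10))
ys = [2.0 * x for x in xs]
print(cross_val_mse(xs, ys, fit_mean, n_folds=5))
```

Each sample appears in exactly one test fold, so every prediction that contributes to the score is made on data the model did not see during fitting.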

Naive Bayes Classifier

In statistics, naive Bayes classifiers are a family of simple "probabilistic classifiers" based on applying Bayes' theorem with strong (naive) independence assumptions between the features (see Bayes classifier). They are among the simplest Bayesian network models, but coupled with kernel density estimation, they can achieve high accuracy levels. Naive Bayes classifiers are highly scalable, requiring a number of parameters linear in the number of variables (features/predictors) in a learning problem. Maximum-likelihood training can be done by evaluating a closed-form expression, which takes linear time, rather than by expensive iterative approximation as used for many other types of classifiers. In the statistics literature, naive Bayes models are known under a variety of names, including simple Bayes and independence Bayes. All these names reference the use of Bayes' theorem in the classifier's decision rule, but naive Bayes is not (necessarily) a Bayesian method. Introduct ...
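The closed-form, linear-time training mentioned above comes down to counting. The sketch below shows one way this might look for categorical features. The names `train_nb` and `predict_nb` and the toy data are hypothetical, and the additive (Laplace) smoothing term `alpha` is an assumption added here to avoid zero probabilities; it is not part of the description above.

```python
import math
from collections import Counter, defaultdict


def train_nb(rows, labels):
    """Single pass over the data: count classes and per-class feature values."""
    class_counts = Counter(labels)
    feature_counts = defaultdict(Counter)  # (label, feature index) -> value counts
    values_seen = defaultdict(set)         # feature index -> distinct values
    for row, label in zip(rows, labels):
        for j, value in enumerate(row):
            feature_counts[(label, j)][value] += 1
            values_seen[j].add(value)
    return class_counts, feature_counts, values_seen


def predict_nb(model, row, alpha=1.0):
    """Pick the class maximising log P(class) + sum_j log P(value_j | class).

    The sum over features is where the naive independence assumption enters.
    `alpha` is an additive-smoothing constant (an assumption of this sketch).
    """
    class_counts, feature_counts, values_seen = model
    total = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, n_label in class_counts.items():
        score = math.log(n_label / total)
        for j, value in enumerate(row):
            count = feature_counts[(label, j)][value]
            score += math.log((count + alpha) / (n_label + alpha * len(values_seen[j])))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


rows = [("sunny", "hot"), ("sunny", "mild"), ("rainy", "mild"), ("rainy", "cool")]
labels = ["no", "no", "yes", "yes"]
model = train_nb(rows, labels)
print(predict_nb(model, ("sunny", "hot")))
print(predict_nb(model, ("rainy", "cool")))
```

Working in log space avoids numerical underflow when many per-feature probabilities are multiplied together.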