
Hutter Prize

The Hutter Prize is a cash prize funded by Marcus Hutter which rewards data compression improvements on a specific 1 GB English text file, with the goal of encouraging research in artificial intelligence (AI). Launched in 2006, the prize awards 5000 euros for each one percent improvement (with 500,000 euros total funding) in the compressed size of the file *enwik9*, which is the larger of two files used in the Large Text Compression Benchmark; enwik9 consists of the first 1,000,000,000 characters of a specific version of English Wikipedia. The ongoing competition is organized by Hutter, Matt Mahoney, and Jim Bowery.

Goals

The goal of the Hutter Prize is to encourage research in artificial intelligence (AI). The organizers believe that text compression and AI are equivalent problems. Hutter proved that the optimal behavior of a goal-seeking agent in an unknown but computable environment is to guess at each step that the environment is probably controlled by one of the shortest ...
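
The payout rule stated above (5000 euros per one percent improvement, out of a 500,000 euro fund) amounts to a linear function of the relative size reduction. The following is a minimal Python sketch, assuming the payout is simply proportional to the improvement over the previous record. The function name `payout_eur` and the example sizes are hypothetical, and any further eligibility rules the competition may impose are not modeled.

```python
TOTAL_FUND_EUR = 500_000  # total funding stated in the excerpt


def payout_eur(previous_size: int, new_size: int) -> float:
    """Payout for shrinking the record from previous_size to new_size bytes.

    Assumption: the payout is linear in the relative improvement, which
    matches "5000 euros for each one percent" (1% of 500,000 = 5000).
    """
    if new_size >= previous_size:
        return 0.0  # no improvement, no payout
    return TOTAL_FUND_EUR * (previous_size - new_size) / previous_size


# Hypothetical sizes: a 3% reduction of a 100,000,000-byte record.
print(payout_eur(100_000_000, 97_000_000))
```

Under this assumption a one percent reduction yields 5000 euros and a three percent reduction yields 15,000 euros.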

Marcus Hutter

Marcus Hutter (born April 14, 1967 in Munich) is a Senior Scientist at DeepMind researching the mathematical foundations of artificial general intelligence. He is on leave from his professorship at the ANU College of Engineering and Computer Science of the Australian National University in Canberra, Australia. Hutter studied physics and computer science at the Technical University of Munich. In 2000 he joined Jürgen Schmidhuber's group at the Istituto Dalle Molle di Studi sull'Intelligenza Artificiale (Dalle Molle Institute for Artificial Intelligence Research) in Manno, Switzerland. With others, he developed a mathematical theory of artificial general intelligence. His book *Universal Artificial Intelligence: Sequential Decisions Based on Algorithmic Probability* was published by Springer in 2005.

Research

In 2002, Hutter, with Jürgen Schmidhuber and Shane Legg, developed and published a mathematical theory of artificial general intelligence, AIXI, based on idealised int ...

Data Compression

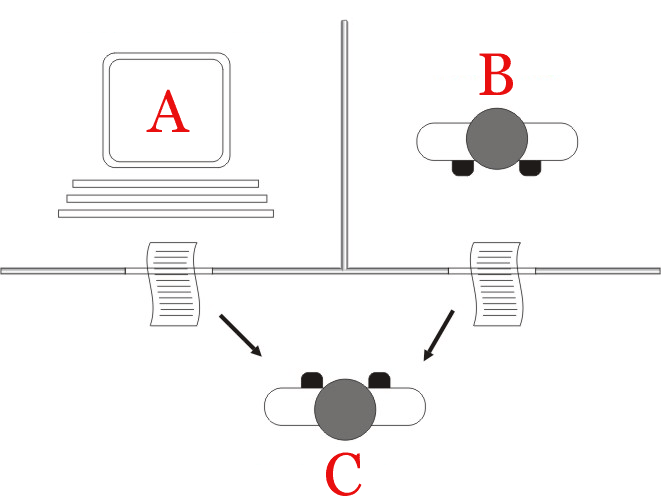

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder. The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding: encoding done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, for error detection and correction, or line coding, the means for mapping data onto a signa ...
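
The encoder/decoder pairing and the "no information is lost" property of lossless compression can be illustrated with Python's standard `zlib` module. This is a small sketch; the wrapper names `encode` and `decode` and the sample input are chosen here for illustration only.

```python
import zlib


def encode(data: bytes) -> bytes:
    """Encoder: remove statistical redundancy with DEFLATE (via zlib)."""
    return zlib.compress(data, level=9)


def decode(blob: bytes) -> bytes:
    """Decoder: reverse the encoding and recover the original bytes."""
    return zlib.decompress(blob)


# Highly redundant sample input (hypothetical).
text = b"abracadabra " * 200
compressed = encode(text)
restored = decode(compressed)

# Lossless: the round trip reproduces the input exactly.
assert restored == text
print(len(text), "bytes ->", len(compressed), "bytes")
```

The round-trip assertion is what distinguishes lossless from lossy coding: a lossy codec would accept some difference between `restored` and `text` in exchange for a smaller output.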

Gigabyte

The gigabyte is a multiple of the unit byte for digital information. The prefix *giga* means 10^9 in the International System of Units (SI). Therefore, one gigabyte is one billion bytes. The unit symbol for the gigabyte is GB. This definition is used in all contexts of science (especially data science), engineering, business, and many areas of computing, including storage capacities of hard drives, solid state drives, and tapes, as well as data transmission speeds. However, the term is also used in some fields of computer science and information technology to denote 1,073,741,824 (1024^3 or 2^30) bytes, particularly for sizes of RAM. Thus, prior to 1998, some usage of *gigabyte* has been ambiguous. To resolve this difficulty, IEC 80000-13 clarifies that a *gigabyte* (GB) is 10^9 bytes and specifies the term *gibibyte* (GiB) to denote 2^30 bytes. These differences are still readily seen, for example, when a 400 GB drive's capacity is displayed by Microsoft Windows as 372 ...
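
The gap between the two definitions is simple arithmetic. A short Python sketch (the helper name `gb_to_gib` is chosen here for illustration) converts a decimal capacity into binary units:

```python
GB = 10**9    # SI gigabyte: one billion bytes
GiB = 2**30   # gibibyte: 1024**3 = 1,073,741,824 bytes


def gb_to_gib(gigabytes: float) -> float:
    """Convert a capacity in decimal gigabytes to binary gibibytes."""
    return gigabytes * GB / GiB


# A 400 GB drive expressed in binary units.
print(f"{gb_to_gib(400):.1f} GiB")  # 372.5 GiB
```

The same number of bytes therefore reads about 7% smaller when counted in units of 2^30 bytes, which is consistent with the 372 figure mentioned for a 400 GB drive.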

Artificial Intelligence

Artificial intelligence (AI) is intelligence (perceiving, synthesizing, and inferring information) demonstrated by machines, as opposed to intelligence displayed by animals and humans. Example tasks in which this is done include speech recognition, computer vision, translation between (natural) languages, as well as other mappings of inputs. The *Oxford English Dictionary* of Oxford University Press defines artificial intelligence as: the theory and development of computer systems able to perform tasks that normally require human intelligence, such as visual perception, speech recognition, decision-making, and translation between languages. AI applications include advanced web search engines (e.g., Google), recommendation systems (used by YouTube, Amazon and Netflix), understanding human speech (such as Siri and Alexa), self-driving cars (e.g., Tesla), automated decision-making and competing at the highest level in strategic game systems (such as chess and Go). ...

English Wikipedia

The English Wikipedia is, along with the Simple English Wikipedia, one of two English-language editions of Wikipedia, an online encyclopedia. It was founded on January 15, 2001, as Wikipedia's first edition, and has the most articles of any edition. The share of articles in all Wikipedias that belong to the English-language edition was more than 50% in 2003. The edition's one-billionth edit was made on January 13, 2021.

Articles

The English Wikipedia has pioneered some ideas as conventions, policies or features which were later adopted by Wikipedia editions in some of the other languages. These ideas include "featured articles", the neutral-point-of-view policy, navigation templates, the sorting of short "stub" articles into sub-categories, dispute resolution mechanisms such as mediation and arbitration, and weekly collaborations. It surpassed six million articles on 23 January 2020. In November 2022, the total volume of the compressed texts of it ...

Springer Science+Business Media

Springer Science+Business Media, commonly known as Springer, is a German multinational publishing company of books, e-books and peer-reviewed journals in science, humanities, technical and medical (STM) publishing. Originally founded in 1842 in Berlin, it expanded internationally in the 1960s, and through mergers in the 1990s and a sale to venture capitalists it fused with Wolters Kluwer and eventually became part of Springer Nature in 2015. Springer has major offices in Berlin, Heidelberg, Dordrecht, and New York City.

History

Julius Springer founded Springer-Verlag in Berlin in 1842 and his son Ferdinand Springer grew it from a small firm of 4 employees into Germany's then second-largest academic publisher with 65 staff in 1872. In 1964, Springer expanded its business internationally, o ...

Kolmogorov Complexity

In algorithmic information theory (a subfield of computer science and mathematics), the Kolmogorov complexity of an object, such as a piece of text, is the length of a shortest computer program (in a predetermined programming language) that produces the object as output. It is a measure of the computational resources needed to specify the object, and is also known as algorithmic complexity, Solomonoff–Kolmogorov–Chaitin complexity, program-size complexity, descriptive complexity, or algorithmic entropy. It is named after Andrey Kolmogorov, who first published on the subject in 1963, and is a generalization of classical information theory. The notion of Kolmogorov complexity can be used to state and prove impossibility results akin to Cantor's diagonal argument, Gödel's incompleteness theorem, and Turing's halting problem. In particular, no program *P* computing a lower bound for each text's Kolmogorov complexity can return a value essentially larger than *P*'s own len ...
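
Kolmogorov complexity itself is not computable, but the length of any compressed encoding gives a computable upper bound on it (up to the fixed size of the decompressor). The sketch below uses Python's `zlib` as a stand-in compressor; the function name `complexity_upper_bound` and the two sample inputs are assumptions made for illustration, not part of the definition.

```python
import random
import zlib


def complexity_upper_bound(data: bytes) -> int:
    """Length in bytes of a zlib encoding of `data`.

    This only bounds the Kolmogorov complexity from above (ignoring the
    constant-size decompressor); it says nothing about how tight the bound is.
    """
    return len(zlib.compress(data, level=9))


# A highly regular string: a short program ("print 'ab' 5000 times") produces it.
regular = b"ab" * 5000

# Pseudo-random bytes of the same length, seeded for reproducibility.
rng = random.Random(0)
noise = bytes(rng.getrandbits(8) for _ in range(len(regular)))

print(complexity_upper_bound(regular), complexity_upper_bound(noise))
```

The regular string compresses to a small fraction of its length, while the noise barely compresses at all. Note that the seeded noise is in fact produced by a short program, so its true Kolmogorov complexity is low; a general-purpose compressor simply fails to find that description, which shows why such bounds can be loose.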

AIXI

AIXI is a theoretical mathematical formalism for artificial general intelligence. It combines Solomonoff induction with sequential decision theory. AIXI was first proposed by Marcus Hutter in 2000 and several results regarding AIXI are proved in Hutter's 2005 book *Universal Artificial Intelligence*. AIXI is a reinforcement learning (RL) agent. It maximizes the expected total rewards received from the environment. Intuitively, it simultaneously considers every computable hypothesis (or environment). In each time step, it looks at every possible program and evaluates how many rewards that program generates depending on the next action taken. The promised rewards are then weighted by the subjective belief that this program constitutes the true environment. This belief is computed from the length of the program: longer programs are considered less likely, in line with Occam's razor. AIXI then selects the action that has the highest expected total reward in the weighted sum of al ...
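
The weighting step described above can be shown on a toy scale. The sketch below is not AIXI, which ranges over all programs and is not computable. It assumes a small, hand-written list of hypothetical environments, each with a made-up description length in bits and a made-up promised reward per action, and it uses the weight 2^(-length) so that longer descriptions count for less.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    length_bits: int           # hypothetical program length in bits
    reward: dict[str, float]   # promised total reward for each next action


def best_action(hypotheses: list[Hypothesis], actions: list[str]) -> str:
    """Pick the action with the highest length-weighted promised reward."""
    def value(action: str) -> float:
        # Each hypothesis contributes its reward scaled by 2**(-length).
        return sum(2.0 ** -h.length_bits * h.reward[action] for h in hypotheses)
    return max(actions, key=value)


# Three made-up environments: the short one favours "left",
# the longer ones promise more for "right" but carry less weight.
hypotheses = [
    Hypothesis(3, {"left": 1.0, "right": 0.0}),
    Hypothesis(5, {"left": 0.0, "right": 2.0}),
    Hypothesis(8, {"left": 0.0, "right": 10.0}),
]
print(best_action(hypotheses, ["left", "right"]))  # left
```

Here "left" scores 1/8 = 0.125, while "right" scores 2/32 + 10/256 ≈ 0.102, so the short hypothesis dominates even though a longer one promises a much larger reward.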

Hard AI

Artificial general intelligence (AGI) is the ability of an intelligent agent to understand or learn any intellectual task that a human being can. It is a primary goal of some artificial intelligence research and a common topic in science fiction and futures studies. AGI is also called strong AI (Kurzweil describes strong AI as "machine intelligence with the full range of human intelligence"), full AI, or general intelligent action, although some academic sources reserve the term "strong AI" for computer programs that experience sentience or consciousness. Strong AI contrasts with *weak AI* (or *narrow AI*), which is not intended to have general cognitive abilities; rather, weak AI is any program that is designed to solve exactly one problem. (Academic sources reserve "weak AI" for programs that do not experience consciousness or do not have a mind in the same sense people do.) A 2020 survey identified 72 active AGI R&D projects spread across 37 countries.

Characteristics ...

Turing Test

The Turing test, originally called the imitation game by Alan Turing in 1950, is a test of a machine's ability to exhibit intelligent behaviour equivalent to, or indistinguishable from, that of a human. Turing proposed that a human evaluator would judge natural language conversations between a human and a machine designed to generate human-like responses. The evaluator would be aware that one of the two partners in conversation was a machine, and all participants would be separated from one another. The conversation would be limited to a text-only channel, such as a computer keyboard and screen, so the result would not depend on the machine's ability to render words as speech. If the evaluator could not reliably tell the machine from the human, the machine would be said to have passed the test. The test results would not depend on the machine's ability to give correct answers to questions, only on how closely its answers resembled those a human would give. The test was intr ...

Language Model

A language model is a probability distribution over sequences of words. Given any sequence of words of length $m$, a language model assigns a probability $P(w_1,\ldots,w_m)$ to the whole sequence. Language models generate probabilities by training on text corpora in one or many languages. Given that languages can be used to express an infinite variety of valid sentences (the property of digital infinity), language modeling faces the problem of assigning non-zero probabilities to linguistically valid sequences that may never be encountered in the training data. Several modelling approaches have been designed to surmount this problem, such as applying the Markov assumption or using neural architectures such as recurrent neural networks or transformers. Language models are useful for a variety of problems in computational linguistics, from initial applications in speech recognition to ensure nonsensical (i.e. low-probability) word sequences are not predicted, to wider use in machine tran ...
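
The Markov assumption mentioned above can be made concrete with a bigram model, in which each word depends only on the previous one. The sketch below is a toy illustration: the three-sentence corpus, the function names `train` and `log_prob`, and the use of add-one smoothing to give unseen word pairs a non-zero probability are all choices made for this example.

```python
import math
from collections import Counter

# Tiny hypothetical training corpus.
corpus = ["the cat sat", "the dog sat", "the cat ran"]


def train(sentences):
    """Count bigrams and their left contexts, with sentence boundary markers."""
    contexts, bigrams = Counter(), Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        contexts.update(tokens[:-1])             # every token that has a successor
        bigrams.update(zip(tokens, tokens[1:]))  # adjacent word pairs
    vocab = {w for s in sentences for w in s.split()} | {"</s>"}
    return contexts, bigrams, vocab


def log_prob(sentence, model):
    """Log of P(w_1..w_m) as a product of add-one-smoothed bigram probabilities."""
    contexts, bigrams, vocab = model
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    total = 0.0
    for prev, word in zip(tokens, tokens[1:]):
        p = (bigrams[(prev, word)] + 1) / (contexts[prev] + len(vocab))
        total += math.log(p)
    return total


model = train(corpus)
# "the dog ran" never occurs in the corpus but still gets a non-zero probability,
# and it scores higher than a scrambled ordering of the same words.
print(math.exp(log_prob("the dog ran", model)))
print(math.exp(log_prob("ran dog the", model)))
```

Smoothing is what keeps the unseen but plausible sentence from receiving probability zero; neural models address the same problem by generalizing across similar contexts instead of counting exact pairs.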

Free-software License

A free-software license is a notice that grants the recipient of a piece of software extensive rights to modify and redistribute that software. These actions are usually prohibited by copyright law, but the rights-holder (usually the author) of a piece of software can remove these restrictions by accompanying the software with a software license which grants the recipient these rights. Software using such a license is free software (or free and open-source software) as conferred by the copyright holder. Free-software licenses are applied to software in source code and also binary object-code form, as the copyright law recognizes both forms.

Comparison

Free-software licenses provide risk mitigation against different legal threats or behaviors that are seen as potentially harmful by developers.

History

Pre-1980s

In the early times of software, sharing of software and source code was common in certain communities, for instance academic institutions. Before the US Co ...