
History Of Natural Language Processing

The history of natural language processing describes the advances of natural language processing. There is some overlap with the history of machine translation, the history of speech recognition, and the history of artificial intelligence.

Research and development

The history of machine translation dates back to the seventeenth century, when philosophers such as Leibniz and Descartes put forward proposals for codes which would relate words between languages. All of these proposals remained theoretical, and none resulted in the development of an actual machine. The first patents for "translating machines" were applied for in the mid-1930s. One proposal, by Georges Artsrouni, was simply an automatic bilingual dictionary using paper tape. The other proposal, by Peter Troyanskii, a Russian, was more detailed. It included both the bilingual dictionary and a method for dealing with grammatical roles between languages, based on Esperanto. ...

Natural Language Processing

Natural language processing (NLP) is an interdisciplinary subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human language, in particular how to program computers to process and analyze large amounts of natural language data. The goal is a computer capable of "understanding" the contents of documents, including the contextual nuances of the language within them. The technology can then accurately extract information and insights contained in the documents as well as categorize and organize the documents themselves. Challenges in natural language processing frequently involve speech recognition, natural-language understanding, and natural-language generation.

History

Natural language processing has its roots in the 1950s. Already in 1950, Alan Turing published an article titled "Computing Machinery and Intelligence" which proposed what is now called the Turing test as a criterion of intelligence, ...

Chatterbots

A chatbot or chatterbot is a software application used to conduct an on-line chat conversation via text or text-to-speech, in lieu of providing direct contact with a live human agent. Designed to convincingly simulate the way a human would behave as a conversational partner, chatbot systems typically require continuous tuning and testing, and many in production remain unable to adequately converse, while none of them can pass the standard Turing test. The term "ChatterBot" was originally coined by Michael Mauldin (creator of the first Verbot) in 1994 to describe these conversational programs. Chatbots are used in dialog systems for various purposes including customer service, request routing, or information gathering. While some chatbot applications use extensive word-classification processes, natural-language processors, and sophisticated AI, others simply scan for general keywords and generate responses using common phrases obtained from an associated library or database. ...
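The simplest approach mentioned above, scanning for keywords and answering from a library of stock phrases, can be sketched roughly as follows. This is a minimal illustration only: the `RESPONSES` table, the `FALLBACK` text, and the `reply` function are hypothetical names invented for the example, not part of any real chatbot product.

```python
import re

# Hypothetical keyword-to-response library; the entries are illustrative only.
RESPONSES = {
    "refund": "I can help with refunds. What is your order number?",
    "hours": "We are open from 9 am to 5 pm, Monday to Friday.",
    "hello": "Hello! How can I help you today?",
}
FALLBACK = "Sorry, I did not understand that. Could you rephrase?"


def reply(message: str) -> str:
    """Return the stock phrase for the first library keyword found in the message."""
    # Lowercase the message and split it into bare words, dropping punctuation.
    words = set(re.findall(r"[a-z']+", message.lower()))
    # Keywords are checked in the order they appear in RESPONSES.
    for keyword, response in RESPONSES.items():
        if keyword in words:
            return response
    return FALLBACK
```

For instance, `reply("What are your HOURS?")` returns the opening-hours phrase, while a message containing no known keyword falls through to `FALLBACK`. Nothing here attempts to model meaning, which is why such systems are easy to build but converse poorly.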

Finite State Automata

A finite-state machine (FSM) or finite-state automaton (FSA, plural: automata), finite automaton, or simply a state machine, is a mathematical model of computation. It is an abstract machine that can be in exactly one of a finite number of states at any given time. The FSM can change from one state to another in response to some inputs; the change from one state to another is called a transition. An FSM is defined by a list of its states, its initial state, and the inputs that trigger each transition. Finite-state machines are of two types: deterministic finite-state machines and non-deterministic finite-state machines. A deterministic finite-state machine can be constructed equivalent to any non-deterministic one. The behavior of state machines can be observed in many devices in modern society that perform a predetermined sequence of actions depending on a sequence of events with which they are presented. Simple examples are vending machines, which dispense p ...
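The three ingredients named above (a list of states, an initial state, and the inputs that trigger each transition) map directly onto a lookup table. The sketch below assumes a hypothetical coin-operated turnstile as the device; the state names, input names, and the `run` helper are chosen purely for illustration.

```python
from typing import Dict, Iterable, Tuple

# Transition table for a hypothetical coin-operated turnstile:
# (current state, input) -> next state.
TRANSITIONS: Dict[Tuple[str, str], str] = {
    ("locked", "coin"): "unlocked",
    ("locked", "push"): "locked",
    ("unlocked", "coin"): "unlocked",
    ("unlocked", "push"): "locked",
}
INITIAL_STATE = "locked"


def run(inputs: Iterable[str], state: str = INITIAL_STATE) -> str:
    """Feed the inputs to the machine one at a time and return the final state."""
    for symbol in inputs:
        # Deterministic: each (state, input) pair has exactly one successor.
        state = TRANSITIONS[(state, symbol)]
    return state
```

Because every (state, input) pair has exactly one entry, this is a deterministic machine; a non-deterministic one would map each pair to a set of possible next states.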

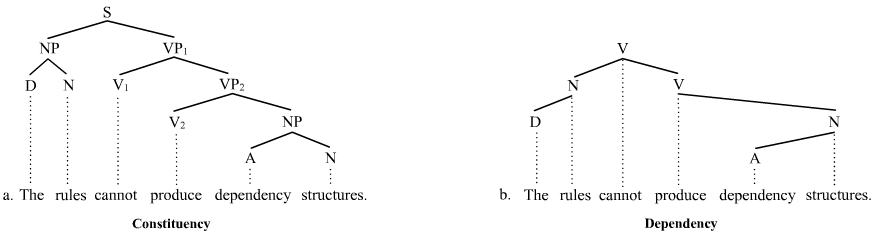

Phrase Structure Rules

Phrase structure rules are a type of rewrite rule used to describe a given language's syntax and are closely associated with the early stages of transformational grammar, proposed by Noam Chomsky in 1957. They are used to break down a natural language sentence into its constituent parts, also known as syntactic categories, including both lexical categories (parts of speech) and phrasal categories. A grammar that uses phrase structure rules is a type of phrase structure grammar. Phrase structure rules as they are commonly employed operate according to the constituency relation, and a grammar that employs phrase structure rules is therefore a constituency grammar; as such, it stands in contrast to dependency grammars, which are based on the dependency relation.

Definition and examples

Phrase structure rules are usually of the following form:

$A \to B \quad C$

meaning that the constituent A is separated into the two subconstituents B and C. Some examples for English are ...
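To make the rewrite idea concrete, the sketch below stores a handful of rules of the form A → B C in a dictionary and expands the start symbol into every sentence the rules license. The toy grammar and vocabulary are assumptions made for this illustration (they are not the English examples elided above), and the `expand` helper only terminates because this particular grammar is non-recursive.

```python
from itertools import product
from typing import Dict, List

# Toy grammar, assumed for illustration: each category on the left is
# rewritten as the sequence of sub-constituents on the right.
RULES: Dict[str, List[List[str]]] = {
    "S": [["NP", "VP"]],
    "NP": [["Det", "N"]],
    "VP": [["V", "NP"]],
    "Det": [["the"]],
    "N": [["dog"], ["cat"]],
    "V": [["sees"]],
}


def expand(symbol: str) -> List[List[str]]:
    """Return every word sequence derivable from `symbol` (non-recursive grammars only)."""
    if symbol not in RULES:  # a terminal word, not a category
        return [[symbol]]
    results = []
    for rhs in RULES[symbol]:
        # Expand each sub-constituent, then combine the alternatives in order.
        for parts in product(*(expand(child) for child in rhs)):
            results.append([word for part in parts for word in part])
    return results


sentences = [" ".join(words) for words in expand("S")]
```

With this grammar, `sentences` holds four strings, among them "the dog sees the cat". Each one corresponds to a constituency tree whose internal nodes are the categories that were rewritten.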

Augmented Transition Network

An augmented transition network or ATN is a type of graph theoretic structure used in the operational definition of formal languages, used especially in parsing relatively complex natural languages, and having wide application in artificial intelligence. An ATN can, theoretically, analyze the structure of any sentence, however complicated. ATNs are modified transition networks and an extension of RTNs. ATNs build on the idea of using finite state machines (Markov model) to parse sentences. W. A. Woods in "Transition Network Grammars for Natural Language Analysis" claims that by adding a recursive mechanism to a finite state model, parsing can be achieved much more efficiently. Instead of building an automaton for a particular sentence, a collection of transition graphs is built. A grammatically correct sentence is parsed by reaching a final state in any state graph. Transitions between these graphs are simply subroutine calls from one state to any initial state on any graph in the ...
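The recursive mechanism described above can be sketched as a small recursive transition network: a collection of graphs in which an arc either consumes a word of a given category or calls another graph as a subroutine. This is a simplified illustration, not Woods's formulation; it omits the registers and tests that make a network "augmented", and the lexicon, networks, and function names are all assumptions.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical lexicon mapping words to word categories.
LEXICON = {"the": "Det", "dog": "N", "cat": "N", "sees": "V", "sleeps": "V"}

# Each network maps a state to its outgoing arcs (label, next state).
# A label naming another network is a subroutine call; any other label
# is a word category that consumes one input word.
NETWORKS: Dict[str, Dict[int, List[Tuple[str, int]]]] = {
    "S": {0: [("NP", 1)], 1: [("VP", 2)], 2: []},
    "NP": {0: [("Det", 1)], 1: [("N", 2)], 2: []},
    "VP": {0: [("V", 1)], 1: [("NP", 2)], 2: []},
}
FINAL: Dict[str, Set[int]] = {"S": {2}, "NP": {2}, "VP": {1, 2}}


def traverse(net: str, state: int, words: List[str], pos: int) -> Set[int]:
    """Return every input position at which `net` can reach a final state."""
    ends = {pos} if state in FINAL[net] else set()
    for label, nxt in NETWORKS[net][state]:
        if label in NETWORKS:
            # Subroutine call: run the sub-network from its initial state,
            # then resume this network wherever the call finished.
            for mid in traverse(label, 0, words, pos):
                ends |= traverse(net, nxt, words, mid)
        elif pos < len(words) and LEXICON.get(words[pos]) == label:
            ends |= traverse(net, nxt, words, pos + 1)
    return ends


def accepts(sentence: str) -> bool:
    """A sentence is accepted if the S network can end exactly at the last word."""
    words = sentence.lower().split()
    return len(words) in traverse("S", 0, words, 0)
```

Here `accepts("the dog sees the cat")` and `accepts("the dog sleeps")` both hold, while `accepts("dog the sleeps")` does not. The same NP graph is reused for subject and object, which is the economy the subroutine mechanism buys over a single flat automaton.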

Janet Kolodner

Janet Lynne Kolodner is an American cognitive scientist and learning scientist and Regents' Professor Emerita in the School of Interactive Computing, College of Computing at the Georgia Institute of Technology. She was Founding Editor in Chief of The Journal of the Learning Sciences and served in that role for 19 years. She was Founding Executive Officer of the International Society of the Learning Sciences (ISLS). From August 2010 through July 2014, she was a program officer at the National Science Foundation and headed up the Cyberlearning and Future Learning Technologies program (originally called Cyberlearning: Transforming Education). Since finishing at NSF, she has been working toward a set of projects that will integrate learning technologies coherently to support disciplinary and everyday learning, support project-based pedagogy that works, and connect to the best in curriculum for active learning. As of July 2020, she is a Professor of the Practice at the Lynch Sch ...

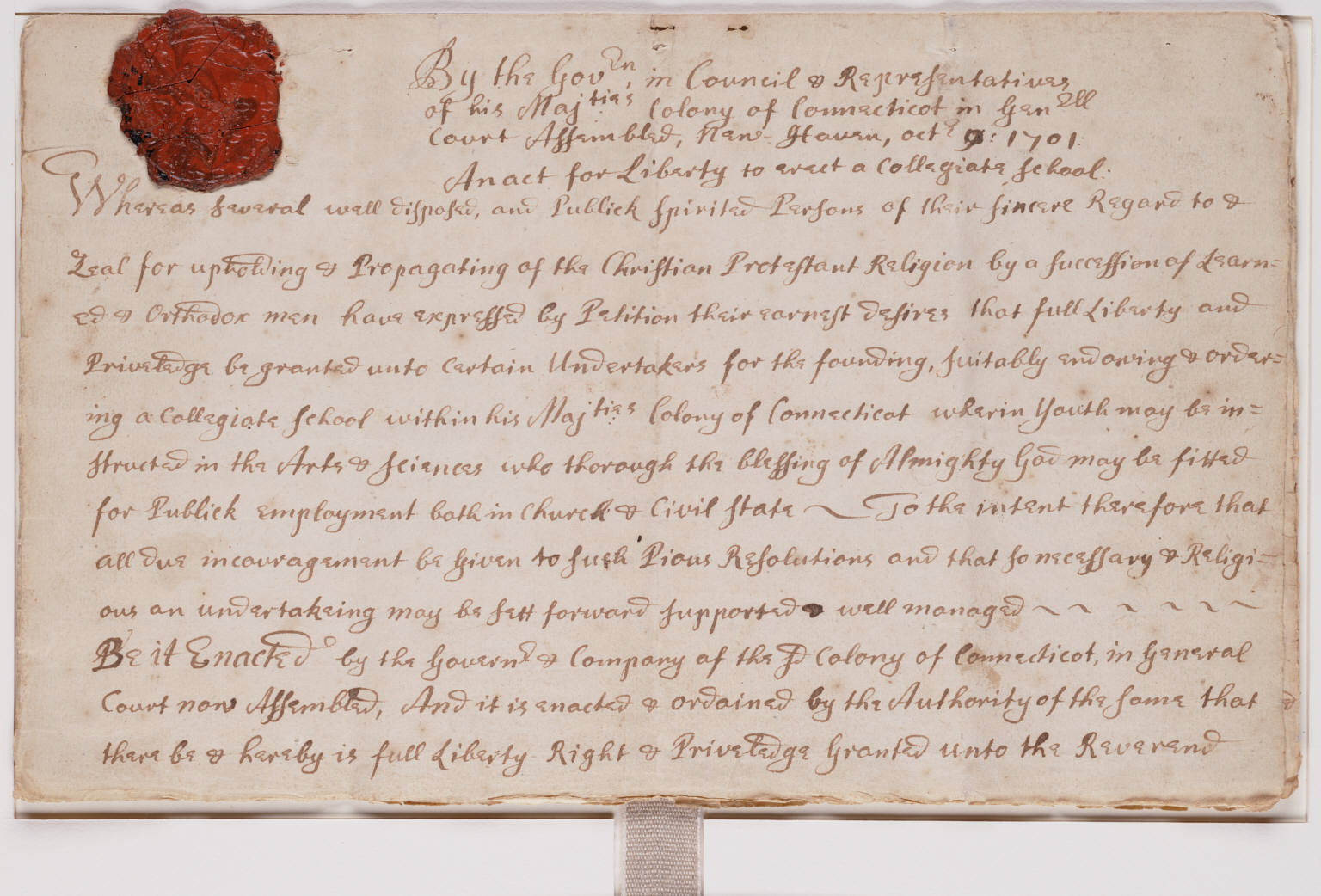

Yale University

Yale University is a private research university in New Haven, Connecticut. Established in 1701 as the Collegiate School, it is the third-oldest institution of higher education in the United States and among the most prestigious in the world. It is a member of the Ivy League. Chartered by the Connecticut Colony, the Collegiate School was established in 1701 by clergy to educate Congregational ministers before moving to New Haven in 1716. Originally restricted to theology and sacred languages, the curriculum began to incorporate humanities and sciences by the time of the American Revolution. In the 19th century, the college expanded into graduate and professional instruction, awarding the first PhD in the United States in 1861 and organizing as a university in 1887. Yale's faculty and student populations grew after 1890 with rapid expansion of the physical campus and sc ...

Sydney Lamb

Sydney MacDonald Lamb (born May 4, 1929 in Denver, Colorado) is an American linguist and professor at Rice University, whose stratificational grammar is a significant alternative theory to Chomsky's transformational grammar. He has specialized in neurocognitive linguistics and a stratificational approach to language understanding. Lamb earned his Ph.D. from the University of California, Berkeley in 1958 and taught there from 1956 to 1964. His dissertation was a grammar of the Uto-Aztecan language Mono, under the direction of Mary Haas and Murray B. Emeneau. In 1964, he began teaching at Yale University before joining the Semionics Associates in Berkeley, California in 1977. Lamb did research in North American Indian languages, specifically those geographically centered on California. His contributions have been wide-ranging, including those to historical linguistics, computational linguistics, and the theory of linguistic structure. His work led to innovative designs of ...

Conceptual Dependency Theory

Conceptual dependency theory is a model of natural language understanding used in artificial intelligence systems. Roger Schank at Stanford University introduced the model in 1969, in the early days of artificial intelligence. This model was extensively used by Schank's students at Yale University such as Robert Wilensky, Wendy Lehnert, and Janet Kolodner. Schank developed the model to represent knowledge for natural language input into computers. Partly influenced by the work of Sydney Lamb, his goal was to make the meaning independent of the words used in the input, i.e. two sentences identical in meaning would have a single representation. The system was also intended to draw logical inferences. The model uses the following basic representational tokens (Thomas W. Simon and Robert J. Scholes, Language, Mind, and Brain, 1982, page 105):

* real world objects, each with some attributes
* real world actions, each with attributes
* times
* locations

A ...
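The goal that two sentences identical in meaning share a single representation can be illustrated with a deliberately tiny sketch. Everything in it is an assumption made for the example: the `Transfer` record is a hypothetical action token loosely modelled on a transfer of possession, and the two regular-expression patterns stand in for a real language analyzer, which Schank's systems handled very differently.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Transfer:
    """Hypothetical action token: possession of `obj` passes from donor to recipient."""
    donor: str
    recipient: str
    obj: str


# Two hand-written surface patterns, assumed for illustration only.
PATTERNS = [
    re.compile(r"(?P<donor>\w+) gave (?P<recipient>\w+) (?:a|the) (?P<obj>\w+)"),
    re.compile(r"(?P<recipient>\w+) received (?:a|the) (?P<obj>\w+) from (?P<donor>\w+)"),
]


def represent(sentence: str) -> Optional[Transfer]:
    """Map a sentence to its word-independent representation, if a pattern fits."""
    text = sentence.strip().rstrip(".")
    for pattern in PATTERNS:
        match = pattern.fullmatch(text)
        if match:
            # The named groups line up with the fields of Transfer.
            return Transfer(**match.groupdict())
    return None
```

Under these assumptions, `represent("John gave Mary a book.")` and `represent("Mary received a book from John.")` produce equal `Transfer` values even though the verbs and word order differ, so any inference rule written against `Transfer` applies to both sentences.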

Roger Schank

Roger Carl Schank (born 1946) is an American artificial intelligence theorist, cognitive psychologist, learning scientist, educational reformer, and entrepreneur. Beginning in the late 1960s, he pioneered conceptual dependency theory (within the context of natural language understanding) and case-based reasoning, both of which challenged cognitivist views of memory and reasoning. In 1989, Schank was granted $30 million in a 10-year commitment to his research and development by Andersen Consulting, through which he founded the Institute for the Learning Sciences (ILS) at Northwestern University in Chicago.

Academic career

For his undergraduate degree, Schank studied mathematics at Carnegie Mellon University in Pittsburgh, PA. He was later awarded a PhD in linguistics at the University of Texas in Austin and went on to work in faculty positions at Stanford University and then at Yale University. In 1974, he became professor of computer science and psychology at Yale University. ...

Blocks World

The blocks world is a planning domain in artificial intelligence. The domain resembles a set of wooden blocks of various shapes and colors sitting on a table. The goal is to build one or more vertical stacks of blocks. Only one block may be moved at a time: it may either be placed on the table or placed atop another block. Because of this, any blocks that are, at a given time, under another block cannot be moved. Moreover, some kinds of blocks cannot have other blocks stacked on top of them. The simplicity of this toy world lends itself readily to classical symbolic artificial intelligence approaches, in which the world is modeled as a set of abstract symbols which may be reasoned about.

Motivation

Artificial intelligence can be researched in theory and with practical applications. The problem with most practical applications is that the engineers don't know how to program an AI system. Instead of rejecting the challenge at all the idea is to invent an easy to solve domai ...
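The movement rules stated above (one block at a time, onto the table or onto another block, and never a block that has something on top of it) can be captured in a few lines. The sketch below is one possible symbolic encoding, with the `BlocksWorld` class and its method names invented for illustration; it leaves out the rule that some kinds of blocks cannot support others.

```python
from typing import List, Optional


class BlocksWorld:
    """Symbolic state: a list of stacks, each listed bottom block first."""

    def __init__(self, stacks: List[List[str]]) -> None:
        self.stacks = [list(stack) for stack in stacks if stack]

    def _stack_with_top(self, block: str) -> Optional[List[str]]:
        """Return the stack whose top block is `block`, or None if it is not clear."""
        for stack in self.stacks:
            if stack[-1] == block:
                return stack
        return None

    def move(self, block: str, onto: Optional[str] = None) -> None:
        """Move one clear block onto the table (onto=None) or onto another clear block."""
        source = self._stack_with_top(block)
        if source is None:
            raise ValueError(f"{block} is covered or does not exist")
        if onto is None:
            target: List[str] = []  # a new stack directly on the table
        else:
            found = self._stack_with_top(onto)
            if found is None or found is source:
                raise ValueError(f"cannot place {block} on {onto}")
            target = found
        source.pop()
        target.append(block)
        if onto is None:
            self.stacks.append(target)
        # Drop any stack that became empty.
        self.stacks = [stack for stack in self.stacks if stack]
```

Starting from `BlocksWorld([["A", "B"], ["C"]])`, calling `move("A")` raises `ValueError` because B sits on A, whereas `move("B")` followed by `move("C", "B")` leaves A alone on the table next to a stack with B under C. A planner would search over sequences of such `move` calls to reach a goal arrangement.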