|

High Performance Fortran

High Performance Fortran (HPF) is an extension of Fortran 90 with constructs that support parallel computing, published by the ''High Performance Fortran Forum'' (HPFF). The HPFF was convened and chaired by Ken Kennedy of Rice University. The first version of the HPF Report was published in 1993. Building on the array syntax introduced in Fortran 90, HPF uses a data parallel model of computation to support spreading the work of a single array computation over multiple processors. This allows efficient implementation on both SIMD and MIMD style architectures. HPF features included: * New Fortran statements, such as FORALL, and the ability to create PURE (side effect free) procedures * Compiler directives for recommended distributions of array data * ''Extrinsic procedure'' interface for interfacing to non-HPF parallel procedures such as those using message passing * Additional library routines - including environmental inquiry, parallel prefix/suffix (e.g., 'scan'), data sca ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Parallel Computing

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling.S.V. Adve ''et al.'' (November 2008)"Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF). Parallel@Illinois, University of Illinois at Urbana-Champaign. "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance increases must largely come from increasing the number of processors (or cores) on a die, rather tha ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Ken Kennedy (computer Scientist)

Ken Kennedy (August 12, 1945 – February 7, 2007) was an American computer scientist and professor at Rice University. He was the founding chairman of Rice's Computer Science Department. Kennedy directed the construction of several substantial software systems for programming parallel computers, including an automatic vectorizer for Fortran 77, an integrated scientific programming environment, compilers for Fortran 90 and High Performance Fortran, and a compilation system for domain languages based on the numerical computing environment MATLAB. He wrote over 200 articles and book chapters, plus numerous conference addresses. Kennedy was elected to the National Academy of Engineering in 1990. He was named a Fellow of the AAAS in 1994 and of the ACM and IEEE in 1995. In recognition of his achievements in compilation for high performance computer systems, he was honored as the recipient of the 1995 W. W. McDowell Award, the highest research award of the IEEE Computer Society. ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Rice University

William Marsh Rice University (Rice University) is a private research university in Houston, Texas. It is on a 300-acre campus near the Houston Museum District and adjacent to the Texas Medical Center. Rice is ranked among the top universities in the United States. Opened in 1912 as the Rice Institute after the murder of its namesake William Marsh Rice, Rice is a research university with an undergraduate focus. Its emphasis on undergraduate education is demonstrated by its 6:1 student-faculty ratio. The university has a very high level of research activity, with $156 million in sponsored research funding in 2019. Rice is noted for its applied science programs in the fields of artificial heart research, structural chemical analysis, signal processing, space science, and nanotechnology. Rice has been a member of the Association of American Universities since 1985 and is classified among "R1: Doctoral Universities – Very high research activity". The university is orga ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Data Parallelism

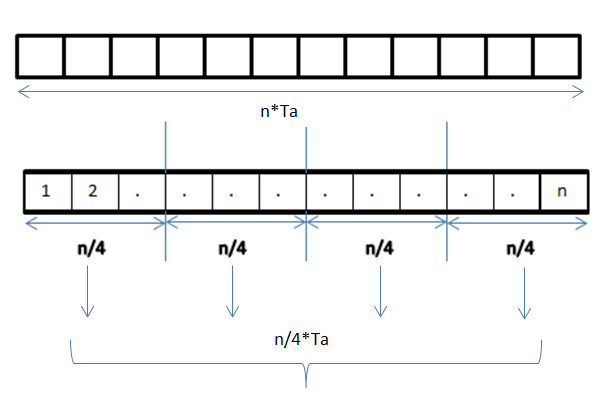

Data parallelism is parallelization across multiple processors in parallel computing environments. It focuses on distributing the data across different nodes, which operate on the data in parallel. It can be applied on regular data structures like arrays and matrices by working on each element in parallel. It contrasts to task parallelism as another form of parallelism. A data parallel job on an array of ''n'' elements can be divided equally among all the processors. Let us assume we want to sum all the elements of the given array and the time for a single addition operation is Ta time units. In the case of sequential execution, the time taken by the process will be ''n''×Ta time units as it sums up all the elements of an array. On the other hand, if we execute this job as a data parallel job on 4 processors the time taken would reduce to (''n''/4)×Ta + merging overhead time units. Parallel execution results in a speedup of 4 over sequential execution. One important thing to no ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Array Data Structure

In computer science, an array is a data structure consisting of a collection of ''elements'' ( values or variables), each identified by at least one ''array index'' or ''key''. An array is stored such that the position of each element can be computed from its index tuple by a mathematical formula. The simplest type of data structure is a linear array, also called one-dimensional array. For example, an array of ten 32-bit (4-byte) integer variables, with indices 0 through 9, may be stored as ten words at memory addresses 2000, 2004, 2008, ..., 2036, (in hexadecimal: 0x7D0, 0x7D4, 0x7D8, ..., 0x7F4) so that the element with index ''i'' has the address 2000 + (''i'' × 4). The memory address of the first element of an array is called first address, foundation address, or base address. Because the mathematical concept of a matrix can be represented as a two-dimensional grid, two-dimensional arrays are also sometimes called "matrices". In some cases the term "vector" is used in com ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Single Instruction, Multiple Data

Single instruction, multiple data (SIMD) is a type of parallel processing in Flynn's taxonomy. SIMD can be internal (part of the hardware design) and it can be directly accessible through an instruction set architecture (ISA), but it should not be confused with an ISA. SIMD describes computers with multiple processing elements that perform the same operation on multiple data points simultaneously. Such machines exploit data level parallelism, but not concurrency: there are simultaneous (parallel) computations, but each unit performs the exact same instruction at any given moment (just with different data). SIMD is particularly applicable to common tasks such as adjusting the contrast in a digital image or adjusting the volume of digital audio. Most modern CPU designs include SIMD instructions to improve the performance of multimedia use. SIMD has three different subcategories in Flynn's 1972 Taxonomy, one of which is SIMT. SIMT should not be confused with software thre ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Multiple Instruction, Multiple Data

In computing, multiple instruction, multiple data (MIMD) is a technique employed to achieve parallelism. Machines using MIMD have a number of processors that function asynchronously and independently. At any time, different processors may be executing different instructions on different pieces of data. MIMD architectures may be used in a number of application areas such as computer-aided design/computer-aided manufacturing, simulation, modeling, and as communication switches. MIMD machines can be of either shared memory or distributed memory categories. These classifications are based on how MIMD processors access memory. Shared memory machines may be of the bus-based, extended, or hierarchical type. Distributed memory machines may have hypercube or mesh interconnection schemes. Examples An example of MIMD system is Intel Xeon Phi, descended from Larrabee microarchitecture. These processors have multiple processing cores (up to 61 as of 2015) that can execute different ins ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Pure Function

In computer programming, a pure function is a function that has the following properties: # the function return values are identical for identical arguments (no variation with local static variables, non-local variables, mutable reference arguments or input streams), and # the function has no side effects (no mutation of local static variables, non-local variables, mutable reference arguments or input/output streams). Thus a pure function is a computational analogue of a mathematical function. Some authors, particularly from the imperative language community, use the term "pure" for all functions that just have the above property 2 (discussed below). Examples Pure functions The following examples of C++ functions are pure: Impure functions The following C++ functions are impure as they lack the above property 1: The following C++ functions are impure as they lack the above property 2: The following C++ functions are impure as they lack both the above properti ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Side Effect (computer Science)

In computer science, an operation, function or expression is said to have a side effect if it modifies some state variable value(s) outside its local environment, which is to say if it has any observable effect other than its primary effect of returning a value to the invoker of the operation. Example side effects include modifying a non-local variable, modifying a static local variable, modifying a mutable argument passed by reference, performing I/O or calling other functions with side-effects. In the presence of side effects, a program's behaviour may depend on history; that is, the order of evaluation matters. Understanding and debugging a function with side effects requires knowledge about the context and its possible histories. Side effects play an important role in the design and analysis of programming languages. The degree to which side effects are used depends on the programming paradigm. For example, imperative programming is commonly used to produce side effects ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Message Passing

In computer science, message passing is a technique for invoking behavior (i.e., running a program) on a computer. The invoking program sends a message to a process (which may be an actor or object) and relies on that process and its supporting infrastructure to then select and run some appropriate code. Message passing differs from conventional programming where a process, subroutine, or function is directly invoked by name. Message passing is key to some models of concurrency and object-oriented programming. Message passing is ubiquitous in modern computer software. It is used as a way for the objects that make up a program to work with each other and as a means for objects and systems running on different computers (e.g., the Internet) to interact. Message passing may be implemented by various mechanisms, including channels. Overview Message passing is a technique for invoking behavior (i.e., running a program) on a computer. In contrast to the traditional technique of c ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Prefix Sum

In computer science, the prefix sum, cumulative sum, inclusive scan, or simply scan of a sequence of numbers is a second sequence of numbers , the sums of prefixes (running totals) of the input sequence: : : : :... For instance, the prefix sums of the natural numbers are the triangular numbers: : Prefix sums are trivial to compute in sequential models of computation, by using the formula to compute each output value in sequence order. However, despite their ease of computation, prefix sums are a useful primitive in certain algorithms such as counting sort,. and they form the basis of the scan higher-order function in functional programming languages. Prefix sums have also been much studied in parallel algorithms, both as a test problem to be solved and as a useful primitive to be used as a subroutine in other parallel algorithms.. Abstractly, a prefix sum requires only a binary associative operator ⊕, making it useful for many applications from calculating well-separated pair ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |