
HPCC

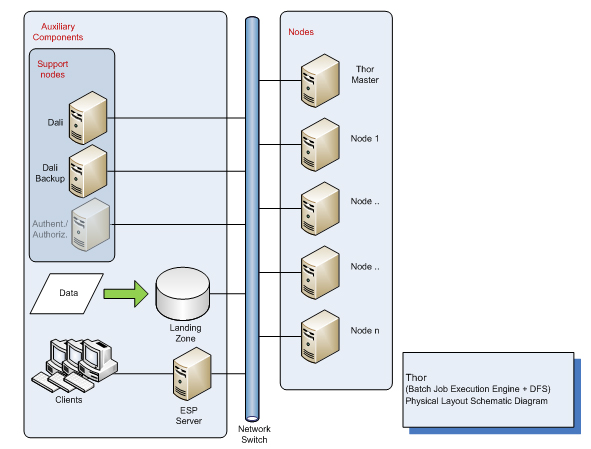

HPCC (High-Performance Computing Cluster), also known as DAS (Data Analytics Supercomputer), is an open source, data-intensive computing system platform developed by LexisNexis Risk Solutions. The HPCC platform incorporates a software architecture implemented on commodity computing clusters to provide high-performance, data-parallel processing for applications utilizing big data. The platform includes system configurations to support both parallel batch data processing (Thor) and high-performance online query applications using indexed data files (Roxie). It also includes a data-centric declarative programming language for parallel data processing called ECL. The public release of HPCC was announced in 2011, after ten years of in-house development (according to LexisNexis). It is an alternative to Hadoop and other big data platforms.

System architecture: The HPCC system architecture includes two distinct cluster processing environments, Thor and Roxie, e ...

LexisNexis Risk Solutions

LexisNexis Risk Solutions is a global data and analytics company that provides data and technology services, analytics, predictive insights and fraud prevention for a wide range of industries. It is headquartered in Alpharetta, Georgia (part of the Atlanta metropolitan area) and has offices throughout the U.S. and in Australia, Brazil, China, France, Hong Kong SAR, India, Ireland, Israel, Philippines and the U.K. The company's customers include businesses within the insurance, financial services, healthcare and corporate sectors as well as the local, state and federal government, law enforcement and public safety. LexisNexis Risk Solutions operates within the Risk & Business Analytics market segment of RELX, a multinational information and analytics company based in London.

Market segments: LexisNexis Risk Solutions operates in four market segments:

* Insurance Services
* Business Services
* Health Care Services
* Government Services

Technology: LexisNexis Risk Solut ...

Data-intensive Computing

Data-intensive computing is a class of parallel computing applications which use a data parallel approach to process large volumes of data, typically terabytes or petabytes in size and commonly referred to as big data. Computing applications which devote most of their execution time to computational requirements are deemed compute-intensive, whereas computing applications which require large volumes of data and devote most of their processing time to I/O and manipulation of data are deemed data-intensive.

Introduction: The rapid growth of the Internet and World Wide Web led to vast amounts of information available online. In addition, business and government organizations create large amounts of both structured and unstructured information which needs to be processed, analyzed, and linked. Vinton Cerf described this as an “information avalanche” and stated “we must harness the Internet’s energy before the information it has unleashed buries us”. An IDC white paper spo ...

ECL (data-centric Programming Language)

ECL (Enterprise Control Language) is a declarative, data-centric programming language designed in 2000 to allow a team of programmers to process big data across a high performance computing cluster without the programmer being involved in many of the lower level, imperative decisions.

History: ECL was initially designed and developed in 2000 by David Bayliss as an in-house productivity tool within Seisint Inc and was considered to be a ‘secret weapon’ that allowed Seisint to gain market share in its data business. Equifax had an SQL-based process for predicting who would go bankrupt in the next 30 days, but it took 26 days to run the data. The first ECL implementation solved the same problem in 6 minutes. The technology was cited as a driving force behind the acquisition of Seisint by LexisNexis and then again as a major source of synergies when LexisNexis acquired ChoicePoint Inc.

Language constructs: ECL, at least in its purest form, is a declarative, data-centric langua ...

Big Data

Though sometimes used loosely, partly because of a lack of formal definition, the interpretation that seems to best describe big data is the one associated with a large body of information that we could not comprehend when used only in smaller amounts. In its primary definition, though, big data refers to data sets that are too large or complex to be dealt with by traditional data-processing application software. Data with many entries (rows) offer greater statistical power, while data with higher complexity (more attributes or columns) may lead to a higher false discovery rate. Big data analysis challenges include capturing data, data storage, data analysis, search, sharing, transfer, visualization, querying, updating, information privacy, and data source. Big data was originally associated with three key concepts: volume, variety, and velocity. The analysis of big data presents challenges in sampling, and thus previously allowing for only observations and sampling. ...

Sector/Sphere

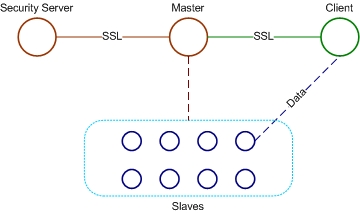

Sector/Sphere is an open source software suite for high-performance distributed data storage and processing. It can be broadly compared to Google's GFS and MapReduce technology. Sector is a distributed file system targeting data storage over a large number of commodity computers. Sphere is the programming architecture framework that supports in-storage parallel data processing for data stored in Sector. Sector/Sphere operates in a wide area network (WAN) setting. The system was created by Yunhong Gu (the author of UDP-based Data Transfer Protocol) in 2006 and was then maintained by a group of other developers.

Architecture: Sector/Sphere consists of four components. The security server maintains the system security policies such as user accounts and the IP access control list. One or more master servers control operations of the overall system in addition to responding to various user requests. The slave nodes store the data files and process them upon request. The clients are ...

Hadoop

Apache Hadoop is a collection of open-source software utilities that facilitates using a network of many computers to solve problems involving massive amounts of data and computation. It provides a software framework for distributed storage and processing of big data using the MapReduce programming model. Hadoop was originally designed for computer clusters built from commodity hardware, which is still the common use. It has since also found use on clusters of higher-end hardware. All the modules in Hadoop are designed with a fundamental assumption that hardware failures are common occurrences and should be automatically handled by the framework. The core of Apache Hadoop consists of a storage part, known as Hadoop Distributed File System (HDFS), and a processing part which is a MapReduce programming model. Hadoop splits files into large blocks and distributes them across nodes in a cluster. It then transfers packaged code into nodes to process the data in parallel. This appro ...
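The split, map and reduce flow described in the Hadoop entry can be illustrated with a small single-process sketch. This is not Hadoop's API: the function names (`split_into_blocks`, `map_block`, `shuffle`, `reduce_counts`, `word_count`) are hypothetical, the "blocks" are just slices of a Python list, and everything runs in one process rather than on cluster nodes.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def split_into_blocks(lines: List[str], block_size: int) -> List[List[str]]:
    """Cut the input into fixed-size blocks (a stand-in for file blocks)."""
    return [lines[i:i + block_size] for i in range(0, len(lines), block_size)]


def map_block(block: List[str]) -> List[Tuple[str, int]]:
    """Map step: emit a (word, 1) pair for every word in one block."""
    return [(word.lower(), 1) for line in block for word in line.split()]


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle step: group all emitted values by key."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(groups)


def reduce_counts(groups: Dict[str, List[int]]) -> Dict[str, int]:
    """Reduce step: collapse each key's values into a single count."""
    return {word: sum(values) for word, values in groups.items()}


def word_count(lines: List[str], block_size: int = 2) -> Dict[str, int]:
    """Run split -> map -> shuffle -> reduce sequentially on one machine."""
    blocks = split_into_blocks(lines, block_size)
    # On a real cluster each block would be mapped on the node that stores it.
    mapped = [pair for block in blocks for pair in map_block(block)]
    return reduce_counts(shuffle(mapped))
```

Because each call to `map_block` depends only on its own block, the map step is the part a cluster can run on many nodes at once; only the shuffle needs data to move between them.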

Distributed Computing

A distributed system is a system whose components are located on different networked computers, which communicate and coordinate their actions by passing messages to one another from any system. Distributed computing is a field of computer science that studies distributed systems. The components of a distributed system interact with one another in order to achieve a common goal. Three significant challenges of distributed systems are: maintaining concurrency of components, overcoming the lack of a global clock, and managing the independent failure of components. When a component of one system fails, the entire system does not fail. Examples of distributed systems vary from SOA-based systems to massively multiplayer online games to peer-to-peer applications. A computer program that runs within a distributed system is called a distributed program, and distributed programming ...
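As a rough illustration of components that coordinate only by passing messages, the sketch below simulates a coordinator and several workers inside one Python process. The threads and `queue.Queue` inboxes stand in for networked computers and network links, and the names `worker` and `run_cluster` are hypothetical; a real distributed system would also have to handle lost messages and independent node failures, which this sketch ignores.

```python
import queue
import threading
from typing import Dict, List


def worker(inbox: queue.Queue, outbox: queue.Queue) -> None:
    """Handle request messages until a None shutdown message arrives."""
    while True:
        message = inbox.get()
        if message is None:
            break
        request_id, numbers = message
        # Reply with a message; no state is shared with the coordinator.
        outbox.put((request_id, sum(numbers)))


def run_cluster(chunks: List[List[int]]) -> int:
    """Send one chunk to each worker and combine the partial sums."""
    replies: queue.Queue = queue.Queue()
    inboxes = [queue.Queue() for _ in chunks]
    threads = [
        threading.Thread(target=worker, args=(inbox, replies))
        for inbox in inboxes
    ]
    for thread in threads:
        thread.start()
    for request_id, (inbox, chunk) in enumerate(zip(inboxes, chunks)):
        inbox.put((request_id, chunk))
        inbox.put(None)  # tell the worker to stop after this request
    partials: Dict[int, int] = {}
    for _ in chunks:
        # Replies can arrive in any order, so they are keyed by request id.
        request_id, partial = replies.get()
        partials[request_id] = partial
    for thread in threads:
        thread.join()
    return sum(partials.values())
```

Tagging each reply with its request id reflects the lack of a global clock: the coordinator cannot rely on arrival order to tell which worker answered.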

Parallel Computing

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling.

Reference: S. V. Adve et al. (November 2008), "Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF), Parallel@Illinois, University of Illinois at Urbana-Champaign. "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance increases must largely come from increasing the number of processors (or cores) on a die, rather than m ...
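The idea of dividing a large problem into smaller ones that are solved at the same time can be sketched as data parallelism over chunks of a list. The helper names (`chunked`, `sum_of_squares`, `parallel_sum_of_squares`) are hypothetical. A thread pool is used here only to keep the sketch short and portable; in CPython, CPU-bound work would normally need a process pool to see a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def chunked(values: List[int], n_chunks: int) -> List[List[int]]:
    """Split values into at most n_chunks contiguous pieces."""
    size = max(1, -(-len(values) // n_chunks))  # ceiling division
    return [values[i:i + size] for i in range(0, len(values), size)]


def sum_of_squares(chunk: List[int]) -> int:
    """The smaller problem: the same operation applied to one chunk."""
    return sum(value * value for value in chunk)


def parallel_sum_of_squares(values: List[int], n_workers: int = 4) -> int:
    """Solve the chunks concurrently, then combine the partial results."""
    chunks = chunked(values, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        return sum(pool.map(sum_of_squares, chunks))
```

The result is the same as the sequential `sum(v * v for v in values)` because addition is associative, which is what makes this problem easy to divide.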

Declarative Programming Languages

Declarative may refer to:

* Declarative learning, acquiring information that one can speak about
* Declarative memory, one of two types of long term human memory
* Declarative programming, a computer programming paradigm
* Declarative sentence, a type of sentence that makes a statement
* Declarative mood, a grammatical verb form used in declarative sentences

See also:

* Declaration (disambiguation)