
Grand Alliance (HDTV)

The Grand Alliance (GA) was a consortium created in 1993 at the behest of the Federal Communications Commission (FCC) to develop the American digital television (SDTV, EDTV) and HDTV specification, with the aim of pooling the best work from different companies. It consisted of AT&T Corporation, General Instrument Corporation, Massachusetts Institute of Technology, Philips Consumer Electronics, David Sarnoff Research Center, Thomson Consumer Electronics, and Zenith Electronics Corporation. The Grand Alliance DTV system is the basis for the ATSC standard. Recognizing that earlier proposed systems demonstrated particular strengths in the FCC's Advisory Committee on Advanced Television Service (ACATS) testing and evaluation process, the Grand Alliance system was proposed to combine the advantages of all of the previously proposed terrestrial digital HDTV systems. At the time of its inception, the Grand Alliance HDTV system was specified to include: * Flexible picture formats with ...

Consortium

A consortium (plural: consortia) is an association of two or more individuals, companies, organizations or governments (or any combination of these entities) with the objective of participating in a common activity or pooling their resources for achieving a common goal. Consortium is a Latin word meaning "partnership", "association" or "society", and derives from consors ("shared in property"), itself from con- ("together") and sors ("fate"). Examples (educational): The Big Ten Academic Alliance in the Midwest and Mid-Atlantic U.S., Claremont Colleges consortium in Southern California, Five College Consortium in Massachusetts, and Consórcio Nacional Honda are among the oldest and most successful higher education consortia in the world. The Big Ten Academic Alliance, formerly known as the Committee on Institutional Cooperation, includes the members of the Big Ten athletic conference. The participants in Five Colleges, Inc. are: Amherst College, Hampshire College, Mount Holyoke College, Smith College, a ...

Visual Descriptors

The visual system comprises the sensory organ (the eye) and parts of the central nervous system (the retina containing photoreceptor cells, the optic nerve, the optic tract and the visual cortex) which gives organisms the sense of sight (the ability to detect and process visible light) as well as enabling the formation of several non-image photo response functions. It detects and interprets information from the optical spectrum perceptible to that species to "build a representation" of the surrounding environment. The visual system carries out a number of complex tasks, including the reception of light and the formation of monocular neural representations, colour vision, the neural mechanisms underlying stereopsis and assessment of distances to and between objects, the identification of a particular object of interest, motion perception, the analysis and integration of visual information, pattern recognition, accurate motor coordination under visual guidance, and more. The n ...

TV And FM DX

TV DX and FM DX is the active search for distant radio or television stations received during unusual atmospheric conditions. The term DX is an old telegraphic term meaning "long distance." VHF/UHF television and radio signals are normally limited to a maximum "deep fringe" reception service area of approximately in areas where the broadcast spectrum is congested, and about 50 percent farther in the absence of interference. However, provided favourable atmospheric conditions are present, television and radio signals can sometimes be received hundreds or even thousands of miles outside their intended coverage area. These signals are often received using a large outdoor antenna system connected to a sensitive TV or FM receiver, although this may not always be the case. Often, smaller antennas and receivers such as those in vehicles will receive stations farther away than normal, depending on how favourable conditions are. While only a limited number of local stations can normally ...

COFDM

In telecommunications, orthogonal frequency-division multiplexing (OFDM) is a type of digital transmission and a method of encoding digital data on multiple carrier frequencies. OFDM has developed into a popular scheme for wideband digital communication, used in applications such as digital television and audio broadcasting, DSL internet access, wireless networks, power line networks, and 4G/5G mobile communications. OFDM is a frequency-division multiplexing (FDM) scheme that was introduced by Robert W. Chang of Bell Labs in 1966. In OFDM, multiple closely spaced orthogonal subcarrier signals with overlapping spectra are transmitted to carry data in parallel. (webe.org, 2GHz BAS Relocation Tech-Fair, COFDM Technology Basics, 2007-03-02) Demodula ...
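The idea of carrying data in parallel on orthogonal subcarriers can be sketched with a small discrete Fourier transform pair. This is only an illustration under stated assumptions: the excerpt does not describe an implementation, and using an inverse DFT as the modulator and a forward DFT as the demodulator is the common textbook realization, not something taken from the text. The function names `idft` and `dft`, the eight-subcarrier size, and the QPSK-style symbol values are all hypothetical.

```python
import cmath

def idft(freq):
    """Inverse DFT: place one data symbol on each orthogonal subcarrier (assumed modulator)."""
    n = len(freq)
    return [sum(freq[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]

def dft(time):
    """Forward DFT: correlate against each subcarrier to recover its symbol (assumed demodulator)."""
    n = len(time)
    return [sum(time[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

# Hypothetical data: one QPSK-style symbol per subcarrier, sent in parallel.
symbols = [1 + 1j, -1 + 1j, -1 - 1j, 1 - 1j, 1 + 1j, 1 - 1j, -1 + 1j, -1 - 1j]

tx_samples = idft(symbols)   # time-domain OFDM symbol (sum of all subcarriers)
rx_symbols = dft(tx_samples) # per-subcarrier values recovered at the receiver

# Orthogonality lets each subcarrier be separated despite overlapping spectra.
recovered_ok = all(abs(a - b) < 1e-9 for a, b in zip(symbols, rx_symbols))
print(recovered_ok)
```

A practical system would use a fast Fourier transform and add a guard interval; both are omitted here to keep the sketch short.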

Trellis Modulation

In telecommunication, trellis modulation (also known as trellis coded modulation, or simply TCM) is a modulation scheme that transmits information with high efficiency over band-limited channels such as telephone lines. Gottfried Ungerboeck invented trellis modulation while working for IBM in the 1970s, and first described it in a conference paper in 1976. It went largely unnoticed, however, until he published a new, detailed exposition in 1982 that achieved sudden and widespread recognition. In the late 1980s, modems operating over plain old telephone service (POTS) typically achieved 9.6 kbit/s by employing four bits per symbol QAM modulation at 2,400 baud (symbols/second). This bit rate ceiling existed despite the best efforts of many researchers, and some engineers predicted that without a major upgrade of the public phone infrastructure, the maximum achievable rate for a POTS modem might be 14 kbit/s for two-way communication (3,429 baud × 4 bits/symbol, using ...
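The two modem figures quoted above follow from multiplying the symbol rate by the number of bits carried per symbol. A minimal sketch of that arithmetic, where the helper name `gross_bit_rate` is hypothetical:

```python
def gross_bit_rate(baud: int, bits_per_symbol: int) -> int:
    """Gross bit rate in bit/s: symbol rate (baud) times bits per symbol."""
    return baud * bits_per_symbol

typical_rate = gross_bit_rate(2400, 4)    # the 9.6 kbit/s modems described above
predicted_cap = gross_bit_rate(3429, 4)   # the predicted ceiling, roughly 14 kbit/s

print(typical_rate, predicted_cap)
```

The second value is 13,716 bit/s, which the text rounds to 14 kbit/s.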

Vestigial Sideband

In radio communications, single-sideband modulation (SSB) or single-sideband suppressed-carrier modulation (SSB-SC) is a type of modulation used to transmit information, such as an audio signal, by radio waves. A refinement of amplitude modulation, it uses transmitter power and bandwidth more efficiently. Amplitude modulation produces an output signal the bandwidth of which is twice the maximum frequency of the original baseband signal. Single-sideband modulation avoids this bandwidth increase, and the power wasted on a carrier, at the cost of increased device complexity and more difficult tuning at the receiver. Basic concept: Radio transmitters work by mixing a radio frequency (RF) signal of a specific frequency, the carrier wave, with the audio signal to be broadcast. In AM transmitters this mixing usually takes place in the final RF amplifier (high level modulation). It is less common and much less efficient to do the mixing at low power and then amplify it in a linear ampl ...

Quadrature Amplitude Modulation

Quadrature amplitude modulation (QAM) is the name of a family of digital modulation methods and a related family of analog modulation methods widely used in modern telecommunications to transmit information. It conveys two analog message signals, or two digital bit streams, by changing (modulating) the amplitudes of two carrier waves, using the amplitude-shift keying (ASK) digital modulation scheme or amplitude modulation (AM) analog modulation scheme. The two carrier waves are of the same frequency and are out of phase with each other by 90°, a condition known as orthogonality or quadrature. The transmitted signal is created by adding the two carrier waves together. At the receiver, the two waves can be coherently separated (demodulated) because of their orthogonality property. Another key property is that the modulations are low-frequency/low-bandwidth waveforms compared to the carrier frequency, which is known as the narrowband assumption. Phase modulation (analog PM) ...
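The way two amplitudes ride on carriers 90° apart, and are then separated by their orthogonality, can be sketched as follows. This is a simplified illustration, not a description of any particular modem: the function names, the choice of 4 carrier cycles per symbol, and the 64 samples per symbol are assumptions made for the example, and the channel is taken to be noiseless and perfectly synchronized.

```python
import math

def qam_modulate(i_amp, q_amp, cycles=4, samples=64):
    """One symbol: i_amp on the cosine carrier, q_amp on the sine carrier, summed."""
    signal = []
    for n in range(samples):
        phase = 2 * math.pi * cycles * n / samples
        signal.append(i_amp * math.cos(phase) - q_amp * math.sin(phase))
    return signal

def qam_demodulate(signal, cycles=4):
    """Coherent detection: correlate with each carrier; the other one averages to zero."""
    samples = len(signal)
    i_sum = 0.0
    q_sum = 0.0
    for n, value in enumerate(signal):
        phase = 2 * math.pi * cycles * n / samples
        i_sum += value * math.cos(phase)
        q_sum -= value * math.sin(phase)
    # Each squared carrier averages to 1/2 over whole cycles, hence the factor 2.
    return 2 * i_sum / samples, 2 * q_sum / samples

i_rx, q_rx = qam_demodulate(qam_modulate(3.0, -1.0))
print(round(i_rx, 6), round(q_rx, 6))
```

The correlation works because the product of the sine and cosine carriers sums to zero over a whole number of cycles, which is the orthogonality property the text refers to.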

MUSICAM

MPEG-1 Audio Layer II or MPEG-2 Audio Layer II (MP2, sometimes incorrectly called Musicam or MUSICAM) is a lossy audio compression format defined by ISO/IEC 11172-3 alongside MPEG-1 Audio Layer I and MPEG-1 Audio Layer III (MP3). While MP3 is much more popular for PC and Internet applications, MP2 remains a dominant standard for audio broadcasting. History of development from MP2 to MP3 (MUSICAM): MPEG-1 Audio Layer 2 encoding was derived from the MUSICAM (Masking pattern adapted Universal Subband Integrated Coding And Multiplexing) audio codec, developed by Centre commun d'études de télévision et télécommunications (CCETT), Philips, and the Institut für Rundfunktechnik (IRT) in 1989 as part of the EUREKA 147 pan-European inter-governmental research and development initiative for the development of a system for the broadcasting of audio and data to fixed, portable or mobile receivers (established in 1987). It began as the Digital Audio Broadcast (DAB) project manage ...

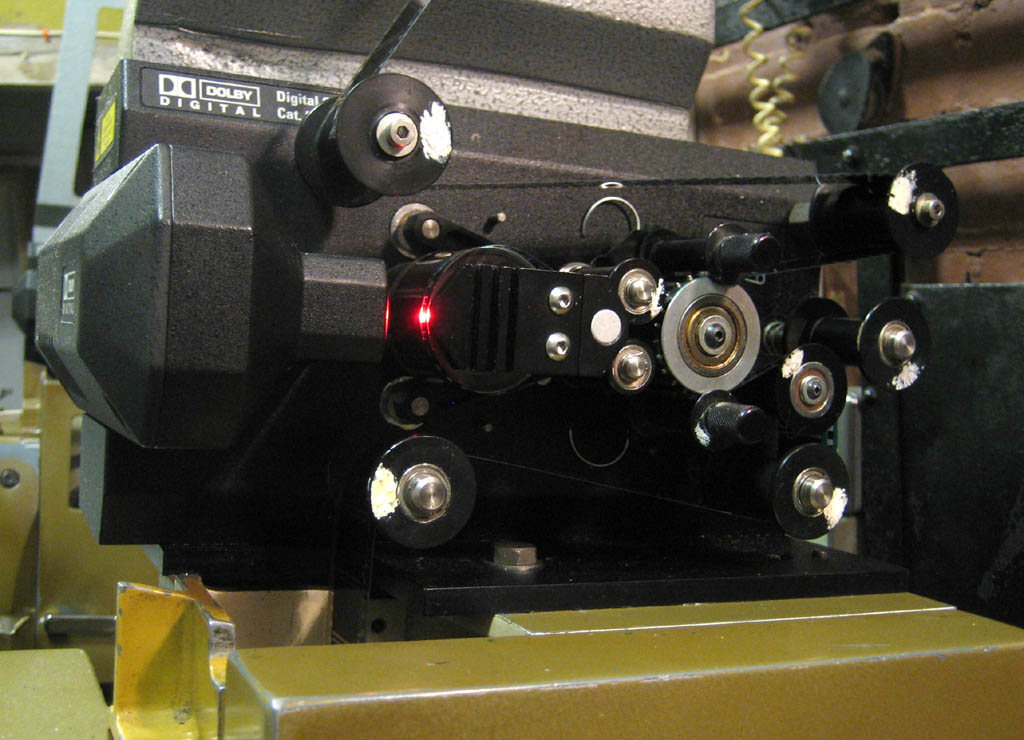

Dolby Digital

Dolby Digital, originally synonymous with Dolby AC-3, is the name for what has now become a family of audio compression technologies developed by Dolby Laboratories. Named Dolby Stereo Digital until 1995, the audio compression is lossy (except for Dolby TrueHD) and based on the modified discrete cosine transform (MDCT) algorithm. The first use of Dolby Digital was to provide digital sound in cinemas from 35 mm film prints; today, it is also used for applications such as TV broadcast, radio broadcast via satellite, digital video streaming, DVDs, Blu-ray discs and game consoles. The main basis of the Dolby AC-3 multi-channel audio coding standard is the MDCT, a lossy audio compression algorithm. It is a modification of the discrete cosine transform (DCT) algorithm, which was first proposed by Nasir Ahmed in 1972 and was originally intended for image compression. The DCT was adapted into the modified discrete cosine transform (MD ...

MPEG-2

MPEG-2 (a.k.a. H.222/H.262 as defined by the ITU) is a standard for "the generic coding of moving pictures and associated audio information". It describes a combination of lossy video compression and lossy audio data compression methods, which permit storage and transmission of movies using currently available storage media and transmission bandwidth. While MPEG-2 is not as efficient as newer standards such as H.264/AVC and H.265/HEVC, backwards compatibility with existing hardware and software means it is still widely used, for example in over-the-air digital television broadcasting and in the DVD-Video standard. Main characteristics: MPEG-2 is widely used as the format of digital television signals that are broadcast by terrestrial (over-the-air), cable, and direct broadcast satellite TV systems. It also specifies the format of movies and other programs th ...

Video Compression

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder. The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding: encoding done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, for error detection and correction, or line coding, the means for mapping data onto a signal. C ...
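The lossless case described above, where statistical redundancy is removed and nothing is lost, can be illustrated with Python's standard `zlib` module. This is a minimal sketch; the sample data is made up for the example, and DEFLATE (the algorithm behind `zlib`) is just one lossless scheme, not one named in the text.

```python
import zlib

# Hypothetical, highly redundant input: the same two bytes repeated many times.
original = b"AB" * 500

compressed = zlib.compress(original)   # encoder: fewer bits than the original
restored = zlib.decompress(compressed) # decoder: reverses the process exactly

print(len(original), len(compressed), restored == original)
```

Because the scheme is lossless, `restored` is byte-for-byte identical to `original`; a lossy codec would not give that guarantee.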

Interlaced Scanning

Interlaced video (also known as interlaced scan) is a technique for doubling the perceived frame rate of a video display without consuming extra bandwidth. The interlaced signal contains two fields of a video frame captured consecutively. This enhances motion perception to the viewer, and reduces flicker by taking advantage of the phi phenomenon. This effectively doubles the time resolution (also called temporal resolution) as compared to non-interlaced footage (for frame rates equal to field rates). Interlaced signals require a display that is natively capable of showing the individual fields in a sequential order. CRT displays and ALiS plasma displays are made for displaying interlaced signals. Interlaced scan refers to one of two common methods for "painting" a video image on an electronic display screen (the other being progressive scan) by scanning or displaying each line or row of pixels. This technique uses two fields to create a frame. One field contains all odd-n ...
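How two fields make up one frame can be sketched with plain lists, where each list element stands for one scan line. The excerpt is cut off before it finishes describing the fields, so the split used here (odd-numbered lines in one field, the remaining lines in the other) is an assumption, and the function names `split_fields` and `weave` are hypothetical.

```python
def split_fields(frame):
    """Split a frame (list of scan lines) into two fields.

    Assumption: lines are numbered from 1, so the first field holds the
    odd-numbered lines and the second field holds the rest.
    """
    odd_field = frame[0::2]
    even_field = frame[1::2]
    return odd_field, even_field

def weave(odd_field, even_field):
    """Rebuild the full frame by alternating lines from the two fields."""
    frame = []
    for index in range(len(odd_field) + len(even_field)):
        source = odd_field if index % 2 == 0 else even_field
        frame.append(source[index // 2])
    return frame

frame = ["line1", "line2", "line3", "line4", "line5"]
odd_field, even_field = split_fields(frame)
print(odd_field, even_field, weave(odd_field, even_field) == frame)
```

Weaving only reproduces the original frame exactly when both fields come from the same instant; in real interlaced video the fields are captured consecutively, which is why moving objects need deinterlacing on progressive displays.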