
Gibbs Paradox

In statistical mechanics, a semi-classical derivation of entropy that does not take into account the indistinguishability of particles yields an expression for entropy which is not extensive (it is not proportional to the amount of substance in question). This leads to a paradox known as the Gibbs paradox, after Josiah Willard Gibbs, who proposed this thought experiment in 1874‒1875. The paradox allows the entropy of closed systems to decrease, violating the second law of thermodynamics. A related paradox is the "mixing paradox". If one takes the perspective that the definition of entropy must be changed so as to ignore particle permutation, the paradox is averted in the thermodynamic limit.

Illustration of the problem

Gibbs himself considered the following problem that arises if the ideal gas entropy is not extensive. Two identical containers of an ideal gas sit side-by-side. The gas in container #1 is identical in every respect to the gas in co ...
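The non-extensivity can be checked numerically. The sketch below assumes the standard semi-classical ideal-gas result S/k = N ln(V/λ^3) + 3N/2 for distinguishable particles, where λ is the thermal de Broglie wavelength; the function names and the values of N, V and λ are illustrative choices, not taken from the text. Removing the partition between the two identical containers amounts to comparing S(2N, 2V) with 2S(N, V).

```python
import math

def entropy_no_correction(n, volume, lam):
    """Dimensionless entropy S/k of an ideal gas treating the n particles as
    distinguishable: S/k = n ln(V / lam^3) + 3n/2 (assumed textbook form)."""
    return n * math.log(volume / lam**3) + 1.5 * n

def entropy_with_correction(n, volume, lam):
    """Same expression minus ln n!, i.e. the Gibbs 1/N! factor.
    ln n! is evaluated as lgamma(n + 1) to avoid huge factorials."""
    return entropy_no_correction(n, volume, lam) - math.lgamma(n + 1)

def mixing_excess(entropy, n, volume, lam):
    """S(2N, 2V) - 2 S(N, V): the entropy 'gained' by removing the partition
    between two identical containers. Zero for an exactly extensive entropy."""
    return entropy(2 * n, 2 * volume, lam) - 2 * entropy(n, volume, lam)

# Illustrative values in arbitrary but consistent units.
N, V, LAM = 10**6, 1.0, 1e-3
print(mixing_excess(entropy_no_correction, N, V, LAM))    # about 2 N ln 2
print(mixing_excess(entropy_with_correction, N, V, LAM))  # only about 0.5 ln(pi N)
```

Without the factor, the excess is exactly 2N ln 2, which grows with the amount of gas; with ln N! subtracted, the leftover is only of order ln N, so the entropy per particle becomes extensive in the thermodynamic limit.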

Statistical Mechanics

In physics, statistical mechanics is a mathematical framework that applies statistical methods and probability theory to large assemblies of microscopic entities. It does not assume or postulate any natural laws, but explains the macroscopic behavior of nature from the behavior of such ensembles. Statistical mechanics arose out of the development of classical thermodynamics, a field for which it was successful in explaining macroscopic physical properties (such as temperature, pressure, and heat capacity) in terms of microscopic parameters that fluctuate about average values and are characterized by probability distributions. This established the fields of statistical thermodynamics and statistical physics. The founding of the field of statistical mechanics is generally credited to three physicists:
* Ludwig Boltzmann, who developed the fundamental interpretation of entropy in terms of a collection of microstates
* James Clerk Maxwell, who developed models of probability distr ...
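Boltzmann's interpretation, S = k ln W with W the number of microstates compatible with a macrostate, can be made concrete with a toy model. A minimal sketch, assuming a system of N two-state spins whose macrostate is just the number of spins pointing up (the model and function name are illustrative, not from the text):

```python
import math

def boltzmann_entropy(n_total, n_up):
    """Dimensionless Boltzmann entropy S/k = ln W for n_total two-state spins,
    where W = C(n_total, n_up) counts the microstates with n_up spins up."""
    return math.log(math.comb(n_total, n_up))

# The macrostate with half the spins up has the most microstates and hence the
# largest entropy; the fully aligned macrostate has one microstate, so S = 0.
N = 100
entropies = [boltzmann_entropy(N, n) for n in range(N + 1)]
best = max(range(N + 1), key=lambda n: entropies[n])
print(best, entropies[best], entropies[0])
```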

N-sphere

In mathematics, an n-sphere or a hypersphere is a topological space that is homeomorphic to a standard n-sphere, which is the set of points in (n+1)-dimensional Euclidean space that are situated at a constant distance from a fixed point, called the center. It is the generalization of an ordinary sphere in the ordinary three-dimensional space. The "radius" of a sphere is the constant distance of its points to the center. When the sphere has unit radius, it is usual to call it the unit n-sphere or simply the n-sphere for brevity. In terms of the standard norm, the n-sphere is defined as S^n = \left\{ x \in \mathbb{R}^{n+1} : \left\| x \right\| = 1 \right\}, and an n-sphere of radius r can be defined as S^n(r) = \left\{ x \in \mathbb{R}^{n+1} : \left\| x \right\| = r \right\}. The dimension of an n-sphere is n, and must not be confused with the dimension n+1 of the Euclidean space in which it is naturally embedded. An n-sphere is the surface or boundary of an (n+1)-dimensional ball. In particular: the pair of points at the ends of a (one-dimensional) line segment is a 0-sphere; a circle, which i ...
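The size of an n-sphere and of the ball it bounds are given by gamma-function formulas; volumes of this kind appear, for example, when momentum-space volume is counted in the semi-classical ideal-gas entropy. A sketch using the standard closed forms V_n(r) = π^{n/2} r^n / Γ(n/2 + 1) for the n-ball and 2π^{(n+1)/2} r^n / Γ((n+1)/2) for S^n(r); the function names are illustrative:

```python
import math

def ball_volume(n, r=1.0):
    """Volume of the n-dimensional ball of radius r: pi^(n/2) r^n / Gamma(n/2 + 1)."""
    return math.pi ** (n / 2) * r ** n / math.gamma(n / 2 + 1)

def sphere_area(n, r=1.0):
    """n-dimensional surface measure of S^n(r), the boundary of the (n+1)-ball:
    2 pi^((n+1)/2) r^n / Gamma((n+1)/2)."""
    return 2 * math.pi ** ((n + 1) / 2) * r ** n / math.gamma((n + 1) / 2)

# S^0 is two points (measure 2), S^1 a circle (2 pi), S^2 an ordinary sphere (4 pi).
for n in range(4):
    print(n, sphere_area(n), ball_volume(n + 1))
```

The two functions are linked by differentiation in r: the area of S^n(r) equals (n+1) V_{n+1}(r) / r.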

Classical Mechanics

Classical mechanics is a physical theory describing the motion of macroscopic objects, from projectiles to parts of machinery, and astronomical objects, such as spacecraft, planets, stars, and galaxies. For objects governed by classical mechanics, if the present state is known, it is possible to predict how it will move in the future (determinism), and how it has moved in the past (reversibility). The earliest development of classical mechanics is often referred to as Newtonian mechanics. It consists of the physical concepts based on the foundational 17th-century works of Sir Isaac Newton, and the mathematical methods invented by Gottfried Wilhelm Leibniz, Joseph-Louis Lagrange, Leonhard Euler, and others to describe the motion of bodies under the influence of a system of forces. Later, more abstract methods were developed, leading to the reformulations of classical mechanics known as Lagrangian mechanics and Hamiltonian mechanics. These advances, ma ...
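Reversibility can be illustrated with a time-reversible integrator. A minimal sketch, assuming a one-dimensional harmonic oscillator with unit mass and unit spring constant, integrated with the velocity Verlet scheme (a common choice in molecular simulation, not something the text prescribes): run forward, flip the velocity, run the same number of steps, and the initial state comes back up to floating-point round-off.

```python
def velocity_verlet(x, v, accel, dt, steps):
    """Advance position x and velocity v with the velocity Verlet scheme,
    which is exactly time-reversible in exact arithmetic."""
    a = accel(x)
    for _ in range(steps):
        x = x + v * dt + 0.5 * a * dt * dt
        a_new = accel(x)
        v = v + 0.5 * (a + a_new) * dt
        a = a_new
    return x, v

def spring(x):
    """Acceleration of a unit-mass harmonic oscillator with unit spring constant."""
    return -x

x0, v0 = 1.0, 0.0
x1, v1 = velocity_verlet(x0, v0, spring, dt=0.01, steps=1000)   # run forward to t = 10
xb, vb = velocity_verlet(x1, -v1, spring, dt=0.01, steps=1000)  # reverse velocity, run again
print(x1, v1)
print(xb, -vb)  # recovers (x0, v0) up to round-off
```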

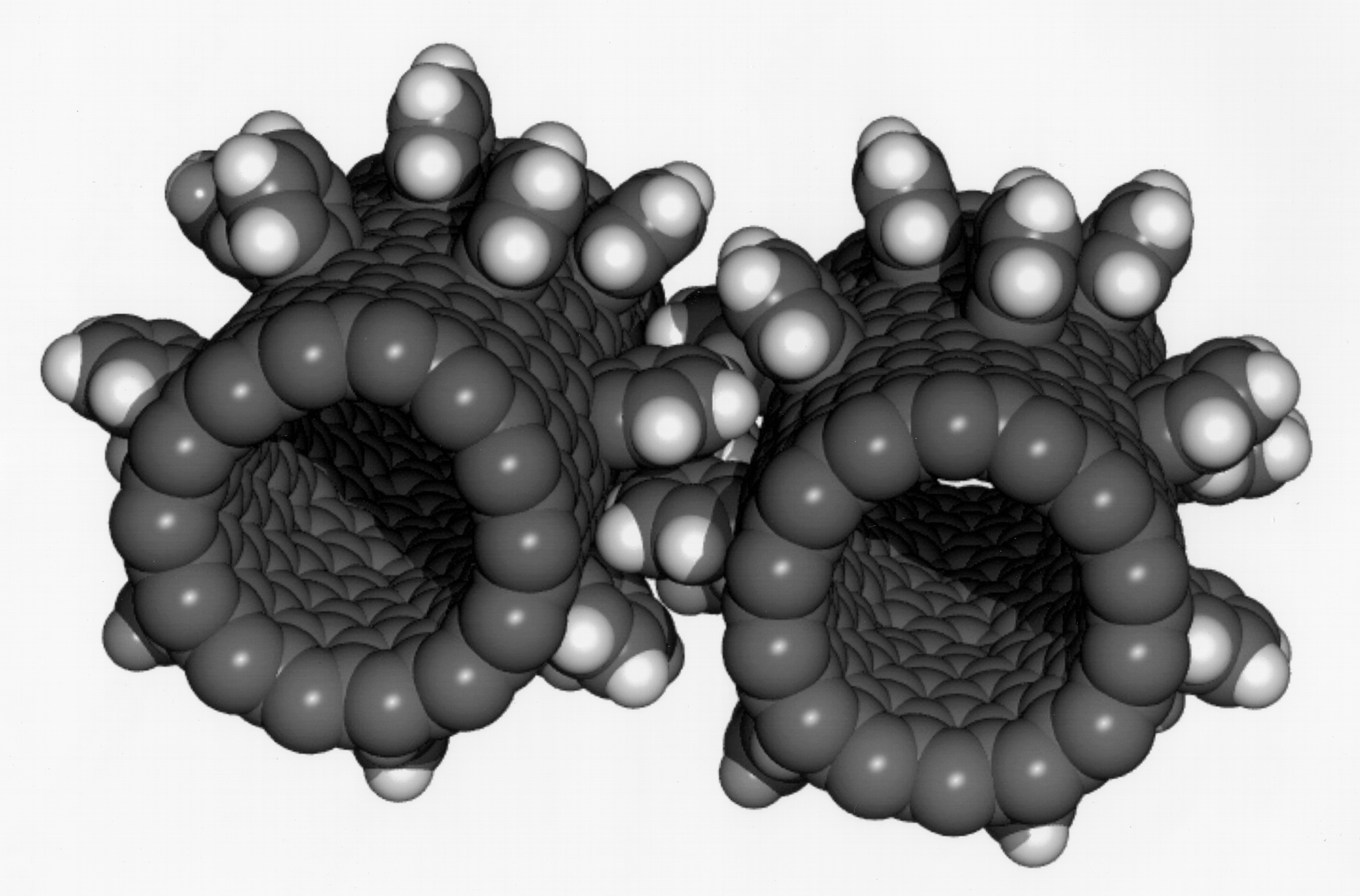

Nanotechnology

Nanotechnology, also shortened to nanotech, is the use of matter on an atomic, molecular, and supramolecular scale for industrial purposes. The earliest, widespread description of nanotechnology referred to the particular technological goal of precisely manipulating atoms and molecules for fabrication of macroscale products, also now referred to as molecular nanotechnology. A more generalized description of nanotechnology was subsequently established by the National Nanotechnology Initiative, which defined nanotechnology as the manipulation of matter with at least one dimension sized from 1 to 100 nanometers (nm). This definition reflects the fact that quantum mechanical effects are important at this quantum-realm scale, and so the definition shifted from a particular technological goal to a research category inclusive of all types of research and technologies that deal with the special properties of matter which occur below the given size threshold. It is therefore common to ...

Computer Simulation

Computer simulation is the process of mathematical modelling, performed on a computer, which is designed to predict the behaviour of, or the outcome of, a real-world or physical system. The reliability of some mathematical models can be determined by comparing their results to the real-world outcomes they aim to predict. Computer simulations have become a useful tool for the mathematical modeling of many natural systems in physics (computational physics), astrophysics, climatology, chemistry, biology and manufacturing, as well as human systems in economics, psychology, social science, health care and engineering. Simulation of a system is represented as the running of the system's model. It can be used to explore and gain new insights into new technology and to estimate the performance of systems too complex for analytical solutions. Computer simulations are realized by running computer programs that can be either small, running almost instantly on small devices, or large ...

Correct Boltzmann Counting


Correct or Correctness may refer to:
* What is true
* Accurate or error-free
* Correctness (computer science), in theoretical computer science
* Political correctness, a sociolinguistic concept
* Correct, Indiana, an unincorporated community in the United States

See also:
* Correct Craft, a U.S.-based builder of powerboats
* Correct sampling, a sampling scenario in Gy's sampling theory
* Right (other)

A right is a legal or moral entitlement or permission. Right may also refer to:
* Right, synonym of true or accurate, opposite of wrong
* Morally right, opposite of morally wrong
* Right (direction), the relative direction opposite of left
* Rig ...

Indistinguishable Particles

In quantum mechanics, identical particles (also called indistinguishable or indiscernible particles) are particles that cannot be distinguished from one another, even in principle. Species of identical particles include, but are not limited to, elementary particles (such as electrons), composite subatomic particles (such as atomic nuclei), as well as atoms and molecules. Quasiparticles also behave in this way. Although all known indistinguishable particles only exist at the quantum scale, there is no exhaustive list of all possible sorts of particles nor a clear-cut limit of applicability, as explored in quantum statistics. There are two main categories of identical particles: bosons, which can share quantum states, and fermions, which cannot (as described by the Pauli exclusion principle). Examples of bosons are photons, gluons, phonons, helium-4 nuclei and all mesons. Examples of fermions are electrons, neutrinos, quarks, protons, neutrons, and helium-3 nuclei. The f ...
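The distinction between the categories shows up directly in how microstates are counted. A minimal sketch, assuming N particles distributed over g single-particle states with no other constraints (the function name is hypothetical): distinguishable particles give g^N arrangements, bosons C(N+g-1, N), and fermions C(g, N) because of the Pauli exclusion principle.

```python
from math import comb

def count_placements(n_particles, n_states):
    """Ways to place n_particles into n_states single-particle states.

    Returns (distinguishable, bosons, fermions):
    - distinguishable: each particle picks any state independently, g^N
    - bosons: identical, any occupancy allowed, C(N + g - 1, N)
    - fermions: identical, at most one per state, C(g, N)
    """
    distinguishable = n_states ** n_particles
    bosons = comb(n_particles + n_states - 1, n_particles)
    fermions = comb(n_states, n_particles)  # comb returns 0 when N > g
    return distinguishable, bosons, fermions

print(count_placements(2, 3))  # (9, 6, 3)
```

When g is much larger than N, both quantum counts approach g^N / N!, the classical count divided by N!, which is the same correction factor that removes the Gibbs paradox.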

Edwin Thompson Jaynes

Edwin Thompson Jaynes (July 5, 1922 – April 30, 1998) was the Wayman Crow Distinguished Professor of Physics at Washington University in St. Louis. He wrote extensively on statistical mechanics and on foundations of probability and statistical inference, initiating in 1957 the maximum entropy interpretation of thermodynamics as being a particular application of more general Bayesian/information theory techniques (although he argued this was already implicit in the works of Josiah Willard Gibbs). Jaynes strongly promoted the interpretation of probability theory as an extension of logic. In 1963, together with Fred Cummings, he modeled the evolution of a two-level atom in an electromagnetic field, in a fully quantized way. This model is known as the Jaynes–Cummings model. A particular focus of his work was the construction of logical principles for assigning prior probability distributions; see the principle of maximum entropy, the principle of maximum caliber, the prin ...
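The principle of maximum entropy selects, among all distributions consistent with the known constraints, the one with the largest Shannon entropy. For a die whose only known property is its average face value, the maximizer has the exponential form p_i ∝ exp(β i). The sketch below finds β by bisection; the target mean of 4.5, the bisection bracket and the function name are illustrative assumptions.

```python
import math

def maxent_die(target_mean, faces=(1, 2, 3, 4, 5, 6), tol=1e-12):
    """Maximum-entropy distribution over `faces` with a prescribed mean.

    The maximizer has the form p_i = exp(beta * x_i) / Z; the mean grows
    monotonically with beta, so beta can be found by bisection.
    target_mean must lie strictly between min(faces) and max(faces).
    """
    def distribution(beta):
        weights = [math.exp(beta * x) for x in faces]
        z = sum(weights)
        return [w / z for w in weights]

    def mean(beta):
        return sum(p * x for p, x in zip(distribution(beta), faces))

    lo, hi = -50.0, 50.0  # bracket wide enough for faces 1..6
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mean(mid) < target_mean:
            lo = mid
        else:
            hi = mid
    return distribution(0.5 * (lo + hi))

probs = maxent_die(4.5)
print([round(p, 4) for p in probs])  # weights increase toward the high faces
```

With a target mean of 3.5 the same routine returns the uniform distribution, as expected when the constraint carries no information beyond symmetry.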

Stirling Approximation

In mathematics, Stirling's approximation (or Stirling's formula) is an approximation for factorials. It is a good approximation, leading to accurate results even for small values of n. It is named after James Stirling, though a related but less precise result was first stated by Abraham de Moivre. One way of stating the approximation involves the logarithm of the factorial: \ln(n!) = n\ln n - n + O(\ln n), where the big O notation means that, for all sufficiently large values of n, the difference between \ln(n!) and n\ln n - n will be at most proportional to the logarithm. In computer science applications such as the worst-case lower bound for comparison sorting, it is convenient to use instead the binary logarithm, giving the equivalent form \log_2 (n!) = n\log_2 n - n\log_2 e + O(\log_2 n). The error term in either base can be expressed more precisely as \tfrac12\log(2\pi n) + O(\tfrac1n), corresponding to an approximate formula for the factorial itself, n! \sim \sqrt{2\pi n}\left(\frac{n}{e}\right)^n ...
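The accuracy claim for small n can be checked against an exact value of ln(n!), obtained here through the gamma function as lgamma(n + 1). A sketch comparing it with n ln n - n + ½ ln(2πn); the remaining error is known to lie between 1/(12n+1) and 1/(12n), so it is already below 0.1 at n = 1. The function name is illustrative.

```python
import math

def stirling_ln_factorial(n):
    """Stirling's formula with the leading correction: n ln n - n + (1/2) ln(2 pi n)."""
    return n * math.log(n) - n + 0.5 * math.log(2 * math.pi * n)

for n in (1, 5, 10, 100):
    exact = math.lgamma(n + 1)  # ln(n!) computed via the gamma function
    print(n, exact, exact - stirling_ln_factorial(n))
```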

Particle Number

The particle number (or number of particles) of a thermodynamic system, conventionally indicated with the letter N, is the number of constituent particles in that system. The particle number is a fundamental parameter in thermodynamics which is conjugate to the chemical potential. Unlike most physical quantities, particle number is a dimensionless quantity. It is an extensive parameter, as it is directly proportional to the size of the system under consideration, and thus meaningful only for closed systems. A constituent particle is one that cannot be broken into smaller pieces at the scale of energy k·T involved in the process (where k is the Boltzmann constant and T is the temperature). For example, for a thermodynamic system consisting of a piston containing water vapour, the particle number is the number of water molecules in the system. The meaning of constituent particle, and thereby of particle number, is thus temperature-dependent. Determining the particle ...
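For the water-vapour example, the particle number follows from the mass of vapour, its molar mass and the Avogadro constant, N = (m/M) N_A, and the result is a pure number. A minimal sketch, assuming a molar mass for water of about 18.015 g/mol and an illustrative 1 g sample:

```python
AVOGADRO = 6.02214076e23   # Avogadro constant, exact SI value, per mole
WATER_MOLAR_MASS = 18.015  # g/mol, approximate value assumed here

def particle_number(mass_g, molar_mass_g_per_mol):
    """Dimensionless particle number N = (m / M) * N_A."""
    return mass_g / molar_mass_g_per_mol * AVOGADRO

# Number of water molecules in 1 g of vapour (a few times 10^22).
print(f"{particle_number(1.0, WATER_MOLAR_MASS):.3e}")
```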

Gamma Function

In mathematics, the gamma function (represented by Γ, the capital letter gamma from the Greek alphabet) is one commonly used extension of the factorial function to complex numbers. The gamma function is defined for all complex numbers except the non-positive integers. For every positive integer n, \Gamma(n) = (n-1)!\,. Derived by Daniel Bernoulli, for complex numbers with a positive real part, the gamma function is defined via a convergent improper integral: \Gamma(z) = \int_0^\infty t^{z-1} e^{-t}\,dt, \qquad \Re(z) > 0\,. The gamma function then is defined as the analytic continuation of this integral function to a meromorphic function that is holomorphic in the whole complex plane except zero and the negative integers, where the function has simple poles. The gamma function has no zeroes, so the reciprocal gamma function is an entire function. In fact, the gamma function corresponds to the Mellin transform of the negative exponential function: \Gamma(z) = \mathcal{M}\{e^{-x}\}(z ...
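The integral definition can be evaluated directly for real z > 0. The sketch below is restricted to real arguments and, as its own assumptions, truncates the integral at t = 60 and applies Simpson's rule; it first uses the functional equation Γ(z) = Γ(z+1)/z to shift small z upward, because the integrand t^{z-1} is too rough near t = 0 for Simpson's rule when z is small. The result can be compared with Γ(n) = (n-1)! and with the standard library's math.gamma.

```python
import math

def gamma_via_integral(z, upper=60.0, steps=20000):
    """Approximate Gamma(z) for real z > 0 from the integral of t^(z-1) e^(-t).

    Assumptions of this sketch: the integral is truncated at `upper` (fine for
    moderate z) and evaluated with Simpson's rule; `steps` must be even.
    """
    if z <= 0:
        raise ValueError("this sketch only handles real z > 0")
    # Shift z upward with Gamma(z) = Gamma(z + 1) / z so the integrand is
    # smooth enough near t = 0 for Simpson's rule to be accurate.
    divisor = 1.0
    while z < 3.0:
        divisor *= z
        z += 1.0

    def integrand(t):
        return t ** (z - 1.0) * math.exp(-t)

    h = upper / steps
    total = integrand(0.0) + integrand(upper)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * integrand(i * h)
    return total * h / 3.0 / divisor

print(gamma_via_integral(5))    # close to 4! = 24
print(gamma_via_integral(0.5))  # close to sqrt(pi)
```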