
Generalized Normal Distribution

The generalized normal distribution or generalized Gaussian distribution (GGD) is either of two families of parametric continuous probability distributions on the real line. Both families add a shape parameter to the normal distribution. To distinguish the two families, they are referred to below as "symmetric" and "asymmetric"; however, this is not a standard nomenclature.

The symmetric generalized normal distribution, also known as the exponential power distribution or the generalized error distribution, is a parametric family of symmetric distributions. It includes all normal and Laplace distributions, and as limiting cases it includes all continuous uniform distributions on bounded intervals of the real line. This family includes the normal distribution when $\beta = 2$ (with mean $\mu$ and variance $\alpha^2/2$, where $\alpha$ is the scale parameter) and it in …
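The special case $\beta = 2$ can be checked numerically. The sketch below assumes the common parameterization with location $\mu$, scale $\alpha$ and shape $\beta$, with density $\frac{\beta}{2\alpha\Gamma(1/\beta)} e^{-(|x-\mu|/\alpha)^\beta}$ and variance $\alpha^2\Gamma(3/\beta)/\Gamma(1/\beta)$. The function names are illustrative only.

```python
import math

def gen_normal_pdf(x: float, mu: float, alpha: float, beta: float) -> float:
    """Symmetric generalized normal density (assumed parameterization).

    f(x) = beta / (2 * alpha * Gamma(1/beta)) * exp(-(|x - mu| / alpha) ** beta)
    with location mu, scale alpha > 0 and shape beta > 0.
    """
    if alpha <= 0 or beta <= 0:
        raise ValueError("alpha and beta must be positive")
    norm = beta / (2.0 * alpha * math.gamma(1.0 / beta))
    return norm * math.exp(-((abs(x - mu) / alpha) ** beta))

def gen_normal_variance(alpha: float, beta: float) -> float:
    """Variance alpha^2 * Gamma(3/beta) / Gamma(1/beta) under the same parameterization."""
    return alpha ** 2 * math.gamma(3.0 / beta) / math.gamma(1.0 / beta)

def normal_pdf(x: float, mu: float, sigma: float) -> float:
    """Ordinary normal density, used only for comparison."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))

# beta = 2 should reproduce a normal density whose variance is alpha^2 / 2.
alpha = 1.5
print(gen_normal_pdf(0.7, 0.0, alpha, 2.0), normal_pdf(0.7, 0.0, alpha / math.sqrt(2.0)))
print(gen_normal_variance(alpha, 2.0), alpha ** 2 / 2)
```

Setting `beta=1.0` gives the Laplace density $\frac{1}{2\alpha}e^{-|x-\mu|/\alpha}$ in the same way.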

Parametric Statistics

Parametric statistics is a branch of statistics which assumes that sample data comes from a population that can be adequately modeled by a probability distribution that has a fixed set of parameters. Conversely, a non-parametric model does not assume an explicit (finite-parametric) mathematical form for the distribution when modeling the data. However, it may make some assumptions about that distribution, such as continuity or symmetry. Most well-known statistical methods are parametric. Regarding nonparametric (and semiparametric) models, Sir David Cox has said, "These typically involve fewer assumptions of structure and distributional form but usually contain strong assumptions about independencies".

For example, the distributions in the normal family all have the same general shape and are *parameterized* by mean and standard deviation. That means that if the mean and standard deviation are known and if the distribu …
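The normal-family example can be illustrated in a few lines. Once a normal model is assumed, estimating its two parameters pins down every probability. The sketch uses Python's standard `statistics.NormalDist`, and the sample values are hypothetical.

```python
from statistics import NormalDist

# Hypothetical sample, assumed to come from a normal population.
sample = [4.8, 5.1, 5.0, 4.9, 5.2]

# Under the parametric assumption, two numbers summarize the whole model:
# from_samples estimates the mean and the (sample) standard deviation.
model = NormalDist.from_samples(sample)
print(model.mean, model.stdev)

# With the parameters fixed, any probability follows from the model, e.g. P(X <= 5.3).
print(model.cdf(5.3))
```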

Peakedness

In probability theory and statistics, a shape parameter (also known as form parameter) is a kind of numerical parameter of a parametric family of probability distributions (Everitt B.S. (2002) *Cambridge Dictionary of Statistics*, 2nd edition, CUP) that is neither a location parameter nor a scale parameter (nor a function of these, such as a rate parameter). Such a parameter must affect the *shape* of a distribution rather than simply shifting it (as a location parameter does) or stretching or shrinking it (as a scale parameter does). For example, "peakedness" refers to how round the main peak is.

Many estimators measure location or scale; however, estimators for shape parameters also exist. Most simply, they can be estimated in terms of the higher moments, using the method of moments, as in the *skewness* (3rd moment) or *kurtosis* (4th moment), if the higher moments are defined and finite. Estimators of shape often involve higher-order statistics (non-linear functio …
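The method-of-moments idea can be sketched as follows. The code computes sample skewness and kurtosis from central moments. It assumes the plain (biased) forms $m_3/m_2^{3/2}$ and $m_4/m_2^2$. Bias-corrected variants also exist, and the function name is hypothetical.

```python
def sample_shape_moments(data):
    """Return (skewness, kurtosis) using population-style central moments.

    skewness = m3 / m2**1.5, kurtosis = m4 / m2**2, where mk is the k-th central
    moment of the sample. These are the plain (biased) method-of-moments forms.
    """
    n = len(data)
    if n == 0:
        raise ValueError("need at least one observation")
    mean = sum(data) / n
    m2 = sum((x - mean) ** 2 for x in data) / n
    if m2 == 0:
        raise ValueError("moments undefined for constant data")
    m3 = sum((x - mean) ** 3 for x in data) / n
    m4 = sum((x - mean) ** 4 for x in data) / n
    return m3 / m2 ** 1.5, m4 / m2 ** 2

# Symmetric data, so the estimated skewness is zero.
print(sample_shape_moments([1, 2, 3, 4, 5]))
```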

Stable Count Distribution

In probability theory, the stable count distribution is the conjugate prior of a one-sided stable distribution. This distribution was discovered by Stephen Lihn (Chinese: 藺鴻圖) in his 2017 study of daily distributions of the S&P 500 and the VIX. The stable distribution family is also sometimes referred to as the Lévy alpha-stable distribution, after Paul Lévy, the first mathematician to have studied it. Of the three parameters defining the distribution, the stability parameter $\alpha$ is the most important. Stable count distributions have $0 < \alpha < 1$. The known analytical case of is related to the distribution (See Section 7 of ). All the moments are finite for the distribution.

Its standard distribution is defined as: …

Cusp (singularity)

In mathematics, a cusp, sometimes called spinode in old texts, is a point on a curve where a moving point must reverse direction. A typical example is given in the figure. A cusp is thus a type of singular point of a curve.

For a plane curve defined by an analytic, parametric equation
$$\begin{aligned} x &= f(t)\\ y &= g(t), \end{aligned}$$
a cusp is a point where both derivatives of $f$ and $g$ are zero, and the directional derivative, in the direction of the tangent, changes sign (the direction of the tangent is the direction of the slope $\lim (g'(t)/f'(t))$). Cusps are *local singularities* in the sense that they involve only one value of the parameter $t$, in contrast to self-intersection points that involve more than one value. In some contexts, the condition on the directional derivative may be omitted, although, in this case, the singularity may look like a regular point.

For a curve defined by an implicit equation $F(x,y) = 0$, which is smooth, cusps are points where the terms of lowest degree …
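The two-part parametric condition can be sketched as a rough numerical heuristic. The code does not implement any standard library routine. It assumes the derivatives $f'$ and $g'$ are supplied exactly, and it approximates the tangent direction from the velocity just after $t_0$. The second example shows why the sign-change condition matters.

```python
import math

def tangent_direction(dx, dy, t0, eps):
    """Unit vector approximating the limiting tangent direction just after t0."""
    vx, vy = dx(t0 + eps), dy(t0 + eps)
    norm = math.hypot(vx, vy)
    return vx / norm, vy / norm

def is_cusp(dx, dy, t0, eps=1e-4):
    """Heuristic test of the cusp condition at parameter value t0.

    dx, dy are the derivatives f'(t), g'(t). Both must vanish at t0, and the
    velocity component along the tangent must change sign across t0.
    """
    if dx(t0) != 0 or dy(t0) != 0:  # exact test: fine for these polynomial examples
        return False
    tx, ty = tangent_direction(dx, dy, t0, eps)
    before = dx(t0 - eps) * tx + dy(t0 - eps) * ty
    after = dx(t0 + eps) * tx + dy(t0 + eps) * ty
    return before * after < 0

# Semicubical parabola x = t^2, y = t^3: the moving point reverses at t = 0.
print(is_cusp(lambda t: 2 * t, lambda t: 3 * t ** 2, 0.0))
# x = t^3, y = t^3 traces the line y = x; both derivatives vanish at t = 0 but the
# point does not reverse, so the singularity looks like a regular point.
print(is_cusp(lambda t: 3 * t ** 2, lambda t: 3 * t ** 2, 0.0))
```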

Student T Distribution

In probability and statistics, Student's *t*-distribution (or simply the *t*-distribution) is any member of a family of continuous probability distributions that arise when estimating the mean of a normally distributed population in situations where the sample size is small and the population's standard deviation is unknown. It was developed by English statistician William Sealy Gosset under the pseudonym "Student". The *t*-distribution plays a role in a number of widely used statistical analyses, including Student's *t*-test for assessing the statistical significance of the difference between two sample means, the construction of confidence intervals for the difference between two population means, and linear regression analysis. Student's *t*-distribution also arises in the Bayesian analysis of data from a normal family. If we take a sample of $n$ observations from a normal distribution, then the *t*-distribution with $\nu = n-1$ degrees of freedom can be defined …
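To show where $\nu = n-1$ comes from, the sketch below computes the standard one-sample statistic $t = (\bar{x} - \mu_0)/(s/\sqrt{n})$. Here the sample standard deviation $s$ replaces the unknown population value. The function name and data are illustrative. The code does not compute p-values, which would require the *t* cumulative distribution function.

```python
import math
from statistics import mean, stdev

def one_sample_t(sample, mu0):
    """Return (t, nu) for testing whether the population mean equals mu0.

    Uses the sample standard deviation s in place of the unknown population
    standard deviation: t = (xbar - mu0) / (s / sqrt(n)), nu = n - 1.
    """
    n = len(sample)
    if n < 2:
        raise ValueError("need at least two observations")
    t = (mean(sample) - mu0) / (stdev(sample) / math.sqrt(n))
    return t, n - 1

# Here t is approximately sqrt(2) = 1.414 with nu = 4 degrees of freedom.
print(one_sample_t([1.0, 2.0, 3.0, 4.0, 5.0], 2.0))
```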

Skew Normal Distribution

In probability theory and statistics, the skew normal distribution is a continuous probability distribution that generalises the normal distribution to allow for non-zero skewness.

Let $\phi(x)$ denote the standard normal probability density function
$$\phi(x) = \frac{1}{\sqrt{2\pi}} e^{-x^2/2}$$
with the cumulative distribution function given by
$$\Phi(x) = \int_{-\infty}^{x} \phi(t)\,dt = \frac{1}{2}\left[1 + \operatorname{erf}\left(\frac{x}{\sqrt{2}}\right)\right],$$
where "erf" is the error function. Then the probability density function (pdf) of the skew-normal distribution with parameter $\alpha$ is given by
$$f(x) = 2\phi(x)\Phi(\alpha x).$$
This distribution was first introduced by O'Hagan and Leonard (1976). Alternative forms to this distribution, with the …
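The definition translates directly into code using `math.erf`. The sketch below evaluates $f(x) = 2\phi(x)\Phi(\alpha x)$ and checks two properties. It confirms that the density integrates to about 1 and that $\alpha = 0$ recovers the standard normal. The trapezoidal integrator and its limits are arbitrary choices for this sanity check.

```python
import math

def std_normal_pdf(x):
    """phi(x) = exp(-x^2 / 2) / sqrt(2 pi)."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def std_normal_cdf(x):
    """Phi(x) = (1/2) * [1 + erf(x / sqrt(2))]."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def skew_normal_pdf(x, alpha):
    """f(x) = 2 * phi(x) * Phi(alpha * x)."""
    return 2.0 * std_normal_pdf(x) * std_normal_cdf(alpha * x)

def integrate(fn, lo=-12.0, hi=12.0, steps=24000):
    """Trapezoidal rule, used only as a rough numerical sanity check."""
    h = (hi - lo) / steps
    total = 0.5 * (fn(lo) + fn(hi))
    for i in range(1, steps):
        total += fn(lo + i * h)
    return total * h

print(integrate(lambda x: skew_normal_pdf(x, 4.0)))   # close to 1
print(skew_normal_pdf(0.8, 0.0), std_normal_pdf(0.8))  # alpha = 0 gives the normal
```

Flipping the sign of $\alpha$ mirrors the density, since $f(-x; \alpha) = f(x; -\alpha)$.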

Symmetric Distribution

In statistics, a symmetric probability distribution is a probability distribution (an assignment of probabilities to possible occurrences) which is unchanged when its probability density function (for continuous probability distributions) or probability mass function (for discrete random variables) is reflected around a vertical line at some value of the random variable represented by the distribution. This vertical line is the line of symmetry of the distribution. Thus the probability of being any given distance on one side of the value about which symmetry occurs is the same as the probability of being the same distance on the other side of that value.

Formally, a probability distribution is said to be symmetric if and only if there exists a value $x_0$ such that
$$f(x_0 - \delta) = f(x_0 + \delta)$$
for all real numbers $\delta$, where $f$ is the probability density function if the distribution is continuous or the probability mass function if the distribution is d …
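For a discrete distribution, the formal condition can be checked at a candidate $x_0$ by comparing $f(x)$ with $f(2x_0 - x)$ at every support point. This covers every $\delta$, because values outside the support have probability 0 on both sides. The sketch assumes the pmf is given as a dictionary and uses binomial distributions as illustrative inputs.

```python
import math

def is_symmetric_pmf(pmf, x0, tol=1e-12):
    """Check f(x0 - d) == f(x0 + d) for every support point, i.e. f(x) == f(2*x0 - x).

    pmf maps values to probabilities; values missing from the dict have probability 0.
    """
    return all(
        math.isclose(p, pmf.get(2 * x0 - x, 0.0), abs_tol=tol)
        for x, p in pmf.items()
    )

def binomial_pmf(n, p):
    """Binomial(n, p) probability mass function as a dict {k: P(X = k)}."""
    return {k: math.comb(n, k) * p ** k * (1 - p) ** (n - k) for k in range(n + 1)}

print(is_symmetric_pmf(binomial_pmf(5, 0.5), 2.5))  # symmetric about n/2
print(is_symmetric_pmf(binomial_pmf(5, 0.3), 2.5))  # skewed, so not symmetric
```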

Trigamma Function

In mathematics, the trigamma function, denoted $\psi_1(z)$ or $\psi^{(1)}(z)$, is the second of the polygamma functions, and is defined by
$$\psi_1(z) = \frac{d^2}{dz^2} \ln\Gamma(z).$$
It follows from this definition that
$$\psi_1(z) = \frac{d}{dz} \psi(z)$$
where $\psi(z)$ is the digamma function. It may also be defined as the sum of the series
$$\psi_1(z) = \sum_{n=0}^{\infty} \frac{1}{(z+n)^2},$$
making it a special case of the Hurwitz zeta function
$$\psi_1(z) = \zeta(2,z).$$
Note that the last two formulas are valid when $1-z$ is not a natural number.

A double integral representation, as an alternative to the ones given above, may be derived from the series representation:
$$\psi_1(z) = \int_0^1\!\!\int_x^1 \frac{x^{z-1}}{y(1-x)}\,dy\,dx$$
using the formula for the sum of a geometric series. Integration over $y$ yields:
$$\psi_1(z) = -\int_0^1 \frac{x^{z-1}\ln x}{1-x}\,dx$$
An asymptotic expansion as a Laurent series is
$$\psi_1(z) = \frac{1}{z} + \frac{1}{2z^2} + \sum_{k=1}^{\infty} \frac{B_{2k}}{z^{2k+1}} = \sum_{k=0}^{\infty} \frac{B_k}{z^{k+1}}$$
if we have chosen $B_1 = \tfrac12$, i.e. the Bernoulli numbers of the second kind. …
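The series and asymptotic expansion suggest one way to evaluate $\psi_1$ numerically. Shifting the index of the series gives $\psi_1(z) = 1/z^2 + \psi_1(z+1)$. The sketch applies this shift until $z \ge 10$ and then uses the expansion truncated after $B_{10}$. The cutoff and the number of terms are assumptions chosen for double precision, not a reference algorithm.

```python
import math

def trigamma(z: float) -> float:
    """Approximate psi_1(z) for real z that is not a pole (0, -1, -2, ...)."""
    if z <= 0 and z == int(z):
        raise ValueError("trigamma has poles at non-positive integers")
    acc = 0.0
    # Shift upward with psi_1(z) = 1/z^2 + psi_1(z + 1), which follows from the series.
    while z < 10.0:
        acc += 1.0 / (z * z)
        z += 1.0
    # Asymptotic expansion 1/z + 1/(2 z^2) + sum_k B_2k / z^(2k+1), truncated at B_10.
    bernoulli_even = [1 / 6, -1 / 30, 1 / 42, -1 / 30, 5 / 66]  # B_2 .. B_10
    tail = 1.0 / z + 1.0 / (2.0 * z * z)
    zpow = z
    for b in bernoulli_even:
        zpow *= z * z  # z^3, z^5, ..., z^11
        tail += b / zpow
    return acc + tail

# psi_1(1) equals pi^2 / 6 (the Basel sum), and psi_1(1/2) equals pi^2 / 2.
print(trigamma(1.0), math.pi ** 2 / 6)
print(trigamma(0.5), math.pi ** 2 / 2)
```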

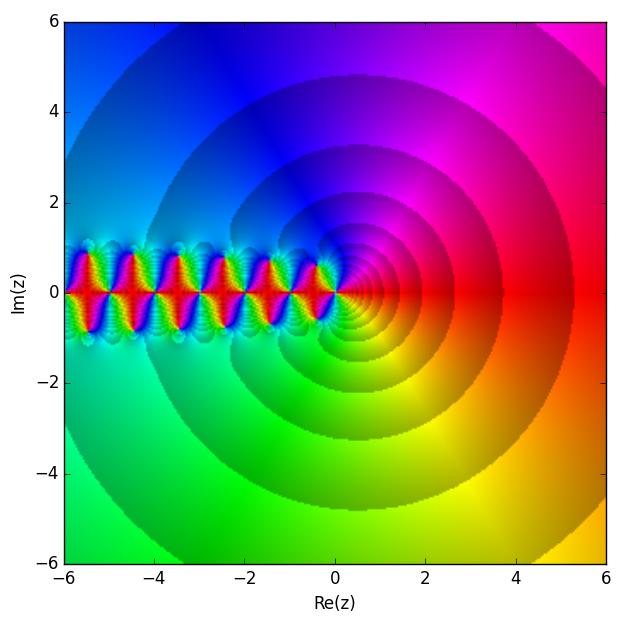

Digamma Function

In mathematics, the digamma function is defined as the logarithmic derivative of the gamma function:
$$\psi(x) = \frac{d}{dx}\ln\big(\Gamma(x)\big) = \frac{\Gamma'(x)}{\Gamma(x)} \sim \ln x - \frac{1}{2x}.$$
It is the first of the polygamma functions. It is strictly increasing and strictly concave on $(0,\infty)$. The digamma function is often denoted as $\psi_0(x)$, $\psi^{(0)}(x)$ or Ϝ (the uppercase form of the archaic Greek consonant digamma meaning double-gamma).

To relate it to the harmonic numbers, note that the gamma function obeys the equation
$$\Gamma(z+1) = z\Gamma(z).$$
Taking the derivative with respect to $z$ gives:
$$\Gamma'(z+1) = z\Gamma'(z) + \Gamma(z)$$
Dividing by $\Gamma(z+1)$ or the equivalent $z\Gamma(z)$ gives:
$$\frac{\Gamma'(z+1)}{\Gamma(z+1)} = \frac{\Gamma'(z)}{\Gamma(z)} + \frac{1}{z}$$
or:
$$\psi(z+1) = \psi(z) + \frac{1}{z}$$
Since the harmonic numbers are defined for positive integers $n$ as
$$H_n = \sum_{k=1}^{n} \frac{1}{k},$$
the digamma function is related to them by
$$\psi(n) = H_{n-1} - \gamma,$$
where $H_0 = 0$ and $\gamma$ is the Euler–Mascheroni constant. For half-integer arguments the digamma function takes the values $\psi\left(n+\tfrac12\right)$ …
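The recurrence $\psi(z+1) = \psi(z) + 1/z$ also gives a simple way to evaluate $\psi$. The sketch below steps upward until the argument is at least 10 and then applies the asymptotic form. It extends $\ln x - \frac{1}{2x}$ with the standard correction terms $-\sum_{k} B_{2k}/(2k\,x^{2k})$ through $B_{10}$. The cutoff and term count are assumptions, and `EULER_GAMMA` is hard-coded because Python's `math` module does not provide it.

```python
import math

EULER_GAMMA = 0.5772156649015329  # Euler–Mascheroni constant

def digamma(x: float) -> float:
    """Approximate psi(x) for real x that is not a pole (0, -1, -2, ...)."""
    if x <= 0 and x == int(x):
        raise ValueError("digamma has poles at non-positive integers")
    acc = 0.0
    # Rearranged recurrence psi(x) = psi(x + 1) - 1/x, applied until x is large.
    while x < 10.0:
        acc -= 1.0 / x
        x += 1.0
    # ln x - 1/(2x) - sum_k B_2k / (2k x^(2k)), truncated at B_10.
    bernoulli_even = [1 / 6, -1 / 30, 1 / 42, -1 / 30, 5 / 66]  # B_2 .. B_10
    result = math.log(x) - 1.0 / (2.0 * x)
    for k, b in enumerate(bernoulli_even, start=1):
        result -= b / (2 * k * x ** (2 * k))
    return acc + result

# psi(n) = H_{n-1} - gamma, e.g. psi(5) = (1 + 1/2 + 1/3 + 1/4) - gamma.
print(digamma(5.0), sum(1 / k for k in range(1, 5)) - EULER_GAMMA)
```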

Moment (mathematics)

In mathematics, the moments of a function are certain quantitative measures related to the shape of the function's graph. If the function represents mass density, then the zeroth moment is the total mass, the first moment (normalized by total mass) is the center of mass, and the second moment is the moment of inertia. If the function is a probability distribution, then the first moment is the expected value, the second central moment is the variance, the third standardized moment is the skewness, and the fourth standardized moment is the kurtosis. The mathematical concept is closely related to the concept of moment in physics. For a distribution of mass or probability on a bounded interval, the collection of all the moments (of all orders, from $0$ to $\infty$) uniquely determines the distribution (Hausdorff moment problem). The same is not true on unbounded intervals (Hamburger moment problem). In the mid-nineteenth century, Pafnuty Chebyshev became the first person to think systematic …
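The mass-density interpretation can be made concrete with a few point masses. The sketch computes $\sum_i m_i (x_i - c)^n$ for a discrete distribution. It shows that the same formula gives total mass, center of mass and second central moment, and then gives the expected value and variance once the masses are normalized into probabilities. The masses and the helper name are hypothetical.

```python
def moment(positions, masses, n, center=0.0):
    """n-th moment of a discrete mass distribution about `center`: sum m * (x - c)^n."""
    return sum(m * (x - center) ** n for x, m in zip(positions, masses))

# Hypothetical point masses: 1 unit at x = 0, 2 units at x = 1, 1 unit at x = 2.
xs, ms = [0.0, 1.0, 2.0], [1.0, 2.0, 1.0]

total_mass = moment(xs, ms, 0)                       # zeroth moment
center_of_mass = moment(xs, ms, 1) / total_mass      # first moment / total mass
second_central = moment(xs, ms, 2, center_of_mass)  # about the center of mass

# Normalizing the masses to sum to 1 turns them into a probability distribution,
# and the same quantities become the expected value and the variance.
probs = [m / total_mass for m in ms]
mean = moment(xs, probs, 1)
variance = moment(xs, probs, 2, mean)
print(total_mass, center_of_mass, second_central, mean, variance)
```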

Newton's Method

In numerical analysis, Newton's method, also known as the Newton–Raphson method, named after Isaac Newton and Joseph Raphson, is a root-finding algorithm which produces successively better approximations to the roots (or zeroes) of a real-valued function. The most basic version starts with a single-variable function $f$ defined for a real variable $x$, the function's derivative $f'$, and an initial guess $x_0$ for a root of $f$. If the function satisfies sufficient assumptions and the initial guess is close, then
$$x_1 = x_0 - \frac{f(x_0)}{f'(x_0)}$$
is a better approximation of the root than $x_0$. Geometrically, $(x_1, 0)$ is the intersection of the $x$-axis and the tangent of the graph of $f$ at $(x_0, f(x_0))$: that is, the improved guess is the unique root of the linear approximation at the initial point. The process is repeated as
$$x_{n+1} = x_n - \frac{f(x_n)}{f'(x_n)}$$
until a sufficiently precise value is reached. This algorithm is first in the class of Householder's methods, succeeded by Halley's method. The method can also be extended to complex functions an …
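The iteration $x_{n+1} = x_n - f(x_n)/f'(x_n)$ fits in a short loop. This is a minimal sketch. The stopping rule (step size below `tol`) and the iteration cap are assumptions, and it does not safeguard against divergence from a poor initial guess.

```python
import math

def newton(f, df, x0, tol=1e-12, max_iter=50):
    """Iterate x_{n+1} = x_n - f(x_n) / f'(x_n) until the step is below tol."""
    x = x0
    for _ in range(max_iter):
        slope = df(x)
        if slope == 0:
            raise ZeroDivisionError("zero derivative: the tangent never meets the x-axis")
        step = f(x) / slope
        x -= step
        if abs(step) < tol:
            return x
    raise RuntimeError("no convergence within max_iter iterations")

# Root of f(x) = x^2 - 2 starting from x0 = 1, i.e. sqrt(2).
print(newton(lambda x: x * x - 2.0, lambda x: 2.0 * x, 1.0))
```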

Maximum Likelihood

In statistics, maximum likelihood estimation (MLE) is a method of estimating the parameters of an assumed probability distribution, given some observed data. This is achieved by maximizing a likelihood function so that, under the assumed statistical model, the observed data is most probable. The point in the parameter space that maximizes the likelihood function is called the maximum likelihood estimate. The logic of maximum likelihood is both intuitive and flexible, and as such the method has become a dominant means of statistical inference. If the likelihood function is differentiable, the derivative test for finding maxima can be applied. In some cases, the first-order conditions of the likelihood function can be solved analytically; for instance, the ordinary least squares estimator for a linear regression model maximizes the likelihood when …
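As an example of solving the first-order condition analytically, assume (for illustration only) that the data are i.i.d. exponential with rate $\lambda$. The log-likelihood is $n\ln\lambda - \lambda\sum_i x_i$, and setting its derivative $n/\lambda - \sum_i x_i$ to zero gives $\hat\lambda = n/\sum_i x_i$. The sketch checks that nearby rates score lower, and the data are hypothetical.

```python
import math

def exp_log_likelihood(rate, data):
    """Log-likelihood of i.i.d. exponential data: n*log(rate) - rate*sum(x)."""
    if rate <= 0:
        return -math.inf
    return len(data) * math.log(rate) - rate * sum(data)

def exp_mle(data):
    """Setting the derivative n/rate - sum(x) to zero gives rate = n / sum(x)."""
    return len(data) / sum(data)

data = [0.5, 1.5, 1.0, 2.0]  # hypothetical observations
rate_hat = exp_mle(data)
print(rate_hat)
# Consistent with the derivative test: nearby rates give a lower log-likelihood.
print(exp_log_likelihood(rate_hat, data) > exp_log_likelihood(rate_hat * 0.9, data))
print(exp_log_likelihood(rate_hat, data) > exp_log_likelihood(rate_hat * 1.1, data))
```

The second derivative $-n/\lambda^2$ is negative, so this stationary point is indeed a maximum.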