
Gustafson's Law

In computer architecture, Gustafson's law (or Gustafson–Barsis's law) gives the speedup in the execution time of a task that theoretically gains from parallel computing, using a hypothetical run of *the task* on a single-core machine as the baseline. To put it another way, it is the theoretical "slowdown" of an *already parallelized* task if running on a serial machine. It is named after computer scientist John L. Gustafson and his colleague Edwin H. Barsis, and was presented in the article *Reevaluating Amdahl's Law* in 1988.

Definition

Gustafson estimated the speedup S of a program gained by using parallel computing as follows:

$$
\begin{aligned}
S &= s + p \times N \\
  &= s + (1 - s) \times N \\
  &= N + (1 - N) \times s
\end{aligned}
$$

where
* S is the theoretical speedup of the program with parallelism (scaled speedup);
* N is the number of processors;
* s and p are the fractions of time spent executing the serial parts and the parallel parts of the program on the *parallel* ...
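The scaled-speedup formula can be checked numerically with a minimal sketch such as the one below. The function name `gustafson_speedup` and the sample values are assumptions made for illustration; only the formula S = N + (1 - N) × s comes from the definition above.

```python
def gustafson_speedup(serial_fraction: float, n_processors: int) -> float:
    """Scaled speedup S = N + (1 - N) * s (hypothetical helper name).

    serial_fraction is s, the share of time spent in serial code as
    measured on the parallel system; n_processors is N.
    """
    if not 0.0 <= serial_fraction <= 1.0:
        raise ValueError("serial_fraction must lie in [0, 1]")
    if n_processors < 1:
        raise ValueError("n_processors must be at least 1")
    return n_processors + (1 - n_processors) * serial_fraction


if __name__ == "__main__":
    # Illustrative values only: a 10% serial share on 1 to 64 processors.
    for n in (1, 2, 8, 64):
        print(n, gustafson_speedup(0.1, n))
```

With s = 0 the result equals N, and with s = 1 it equals 1, which matches the two extreme cases of the formula.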

Gustafson

Gustafson, Gustafsson, Gustavson, and Gustavsson are derivatives of the name Gustav. They form a group of fairly common surnames of Scandinavian origin, and may refer to any of the following people:

Gustafson

* Andy Gustafson, American collegiate football coach
* Axel Carl Johan Gustafson, Swedish author
* Ben E. Gustafson, American politician
* Barry Gustafson, New Zealand political scientist and historian
* Bob Gustafson, American cartoonist
* Cliff Gustafson, collegiate baseball coach
* Derek Gustafson, American pro hockey goalie
* Dwight Gustafson (1930–2014), American composer and conductor
* Earl B. Gustafson, American politician, judge, and lawyer
* Fredrik Gustafson, Swedish football player
* Gabriel Gustafson, Swedish archaeologist
* Gerald Gustafson, U.S. Air Force pilot
* James Gustafson, American theological ethicist
* James Gustafson (politician), American politician
* John Gustafson, English rock bassist
* John L. Gustafson (born 1955), American computer scientist
* Kathryn Gustafson, ...

Computer Architecture

In computer engineering, computer architecture is a description of the structure of a computer system made from component parts. It can sometimes be a high-level description that ignores details of the implementation. At a more detailed level, the description may include the instruction set architecture design, microarchitecture design, logic design, and implementation.

History

The first documented computer architecture was in the correspondence between Charles Babbage and Ada Lovelace, describing the analytical engine. When building the computer Z1 in 1936, Konrad Zuse described in two patent applications for his future projects that machine instructions could be stored in the same storage used for data, i.e., the stored-program concept. Two other early and important examples are:
* John von Neumann's 1945 paper, First Draft of a Report on the EDVAC, which described an organization of logical elements; and
* Alan Turing's mo ...

Speedup

In computer architecture, speedup is a number that measures the relative performance of two systems processing the same problem. More technically, it is the improvement in speed of execution of a task executed on two similar architectures with different resources. The notion of speedup was established by Amdahl's law, which was particularly focused on parallel processing. However, speedup can be used more generally to show the effect on performance after any resource enhancement.

Definitions

Speedup can be defined for two different types of quantities: *latency* and *throughput*.

*Latency* of an architecture is the reciprocal of the execution speed of a task:

$$
L = \frac{1}{v} = \frac{T}{W},
$$

where
* v is the execution speed of the task;
* T is the execution time of the task;
* W is the execution workload of the task.

*Throughput* of an architecture is the execution rate of a task:

$$
Q = \rho v A = \frac{\rho A W}{T} = \frac{\rho A}{L},
$$

where
* ρ is the execution density (e.g., the number ...
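As a rough illustration of the latency definition, the sketch below computes L = T / W for two systems and takes the ratio of the old latency to the new one as the speedup in latency. The function names and the sample numbers are assumptions made for this example and do not come from the source.

```python
def latency(exec_time: float, workload: float) -> float:
    """Latency L = T / W: execution time per unit of workload."""
    if workload <= 0:
        raise ValueError("workload must be positive")
    return exec_time / workload


def latency_speedup(old_latency: float, new_latency: float) -> float:
    """Speedup in latency: how many times smaller the new latency is."""
    if new_latency <= 0:
        raise ValueError("new_latency must be positive")
    return old_latency / new_latency


if __name__ == "__main__":
    # Hypothetical measurements: the same 4 units of work take 8 s, then 2 s.
    before = latency(exec_time=8.0, workload=4.0)
    after = latency(exec_time=2.0, workload=4.0)
    print(before, after, latency_speedup(before, after))
```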

John L

John Lasarus Williams (29 October 1924 – 15 June 2004), known as John L, was a Welsh nationalist activist. Williams was born in Llangoed on Anglesey, but lived most of his life in nearby Llanfairpwllgwyngyll. In his youth, he was a keen footballer, and he also worked as a teacher. His activism started when he campaigned against the refusal of Brewer Spinks, an employer in Blaenau Ffestiniog, to permit his staff to speak Welsh. This inspired him to become a founder of Undeb y Gymraeg Fyw, and through this organisation he was the main organiser of *Sioe Gymraeg y Borth* (the Welsh show for Menai Bridge, using the colloquial form of its Welsh name). Colli John L Williams, ...

Edwin H

The name Edwin means "rich friend". It comes from the Old English elements "ead" (rich, blessed) and "ƿine" (friend). The original Anglo-Saxon form is Eadƿine, which is also found for Anglo-Saxon figures.

People

* Edwin of Northumbria (died 632 or 633), King of Northumbria and Christian saint
* Edwin (son of Edward the Elder) (died 933)
* Eadwine of Sussex (died 982), King of Sussex
* Eadwine of Abingdon (died 990), Abbot of Abingdon
* Edwin, Earl of Mercia (died 1071), brother-in-law of Harold Godwinson (Harold II)
* Edwin (director) (born 1978), Indonesian filmmaker
* Edwin (musician) (born 1968), Canadian musician
* Edwin Abeygunasekera, Sri Lankan Sinhala politician, member of the 1st and 2nd State Council of Ceylon
* Edwin Ariyadasa (1922–2021), Sri Lankan Sinhala journalist
* Edwin Austin Abbey (1852–1911), British artist
* Edwin Eugene Aldrin (born 1930), American astronaut, who changed his name to Buzz Aldrin
* Edwin Howard Armstrong (1890–1954), Americ ...

Communications Of The ACM

*Communications of the ACM* is the monthly journal of the Association for Computing Machinery (ACM). It was established in 1958, with Saul Rosen as its first managing editor. It is sent to all ACM members. Articles are intended for readers with backgrounds in all areas of computer science and information systems. The focus is on the practical implications of advances in information technology and associated management issues; ACM also publishes a variety of more theoretical journals. The magazine straddles the boundary of a science magazine, trade magazine, and a scientific journal. While the content is subject to peer review, the articles published are often summaries of research that may also be published elsewhere. Material published must be accessible and relevant to a broad readership. From 1960 onward, *CACM* also published algorithms, expressed in ALGOL. The collection of algorithms later became known as the Collected Algorithms of the ACM.

See also

* *Journal of the ...

Amdahl's Law

In computer architecture, Amdahl's law (or Amdahl's argument) is a formula which gives the theoretical speedup in latency of the execution of a task at fixed workload that can be expected of a system whose resources are improved. It states that "the overall performance improvement gained by optimizing a single part of a system is limited by the fraction of time that the improved part is actually used". It is named after computer scientist Gene Amdahl, and was presented at the American Federation of Information Processing Societies (AFIPS) Spring Joint Computer Conference in 1967. Amdahl's law is often used in parallel computing to predict the theoretical speedup when using multiple processors. For example, if a program needs 20 hours to complete using a single thread, but a one-hour portion of the program cannot be parallelized, so that only the remaining 19 hours of execution time can be parallelized, then regardless of how many threads are devoted to a parallelized execu ...
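The 20-hour example can be worked through with the usual statement of Amdahl's law, S(N) = 1 / ((1 - p) + p / N), where p is the parallelizable fraction of the single-thread run time. The sketch below is only an illustration: the function names are assumptions, and p = 19/20 is derived from the hours quoted in the example.

```python
def amdahl_speedup(parallel_fraction: float, n_threads: int) -> float:
    """Speedup at fixed workload: 1 / ((1 - p) + p / N)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must lie in [0, 1]")
    if n_threads < 1:
        raise ValueError("n_threads must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / n_threads)


def amdahl_limit(parallel_fraction: float) -> float:
    """Upper bound on the speedup as the thread count grows without limit."""
    serial_fraction = 1.0 - parallel_fraction
    if serial_fraction == 0.0:
        return float("inf")  # a fully parallel task has no finite bound
    return 1.0 / serial_fraction


if __name__ == "__main__":
    p = 19 / 20  # 19 of the 20 hours can be parallelized
    for n in (1, 2, 16, 1024):
        print(n, amdahl_speedup(p, n))
    print("limit:", amdahl_limit(p))
```

Under these assumptions the speedup approaches but never exceeds roughly 20, because the one-hour serial portion always remains.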

Problem Size

In computer science, the analysis of algorithms is the process of finding the computational complexity of algorithms, that is, the amount of time, storage, or other resources needed to execute them. Usually, this involves determining a function that relates the size of an algorithm's input to the number of steps it takes (its time complexity) or the number of storage locations it uses (its space complexity). An algorithm is said to be efficient when this function's values are small, or grow slowly compared to a growth in the size of the input. Different inputs of the same size may cause the algorithm to have different behavior, so best, worst and average case descriptions might all be of practical interest. When not otherwise specified, the function describing the performance of an algorithm is usually an upper bound, determined from the worst case inputs to the algorithm. The term "analysis of algorithms" was coined by Donald Knuth. Algorithm analysis is an important part of a broade ...

Thread (computing)

In computer science, a thread of execution is the smallest sequence of programmed instructions that can be managed independently by a scheduler, which is typically a part of the operating system. The implementation of threads and processes differs between operating systems. In Modern Operating Systems, Tanenbaum shows that many distinct models of process organization are possible (Tanenbaum, Andrew S. Modern Operating Systems. 1992. Prentice-Hall International Editions, ISBN 0-13-595752-4). In many cases, a thread is a component of a process. The multiple threads of a given process may be executed concurrently (via multithreading capabilities), sharing resources such as memory, while different processes do not share these resources. In particular, the threads of a process share its executable code and the values of its dynamically allocated variables and non-thread-local global variables at any given time.

History

Threads made an early appearance under the name of "t ...

Scalable Parallelism

Software is said to exhibit scalable parallelism if it can make use of additional processors to solve larger problems, i.e. this term refers to software for which Gustafson's law holds. Consider a program whose execution time is dominated by one or more loops, each of which updates every element of an array, as in the following finite difference heat equation stencil calculation:

    for t := 0 to T do
        for i := 1 to N-1 do
            new(i) := (A(i-1) + A(i) + A(i) + A(i+1)) * .25   // explicit forward-difference with R = 0.25
        end
        for i := 1 to N-1 do
            A(i) := new(i)
        end
    end

In the above code, we can execute all iterations of each "i" loop concurrently, i.e., turn each into a parallel loop. In such cases, it is often possible to make effective use of twice as many processors for a problem of array size 2N as for a problem of array size N. As in this example, scalable parallelism is typically a form of data parallelism. This form of parallel ...
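A runnable Python rendering of the stencil is sketched below. It runs sequentially, and the names `heat_step` and `heat_simulate` are assumptions introduced for this example. The point it illustrates is that each interior update reads only the old array and writes a single element of the new one, so the iterations of the "i" loop do not depend on each other and could be distributed across processors.

```python
from typing import List


def heat_step(a: List[float]) -> List[float]:
    """One time step of the explicit forward-difference stencil (R = 0.25)."""
    new = list(a)  # the two boundary elements are carried over unchanged
    for i in range(1, len(a) - 1):
        # Reads only the old array `a`, writes only new[i]: iterations are independent.
        new[i] = (a[i - 1] + a[i] + a[i] + a[i + 1]) * 0.25
    return new


def heat_simulate(a: List[float], steps: int) -> List[float]:
    """Apply heat_step `steps` times and return the final array."""
    for _ in range(steps):
        a = heat_step(a)
    return a


if __name__ == "__main__":
    # Illustrative initial condition: a single hot point in the middle.
    print(heat_simulate([0.0, 0.0, 4.0, 0.0, 0.0], steps=2))
```

Returning a fresh list from each step plays the role of the separate `new` array and the copy-back loop in the pseudocode.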

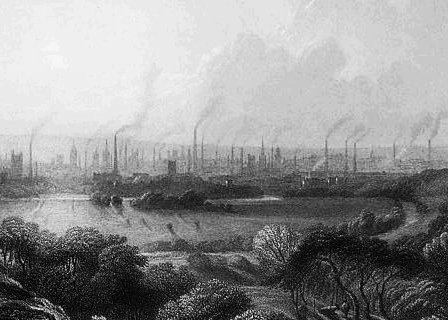

Jevons Paradox

In economics, the Jevons paradox (sometimes Jevons effect) occurs when technological progress or government policy increases the efficiency with which a resource is used (reducing the amount necessary for any one use), but the falling cost of use increases its demand, increasing, rather than reducing, resource use. The Jevons effect is perhaps the most widely known paradox in environmental economics. However, governments and environmentalists generally assume that efficiency gains will lower resource consumption, ignoring the possibility of the effect arising. In 1865, the English economist William Stanley Jevons observed that technological improvements that increased the efficiency of coal use led to the increased consumption of coal in a wide range of industries. He argued that, contrary to common intuition, technological progress could not be relied upon to reduce fuel consumption. The issue has been re-examined by modern economists studying consumption rebound effects f ...