
Geoffrey Hinton

Geoffrey Everest Hinton (born 6 December 1947) is a British-Canadian cognitive psychologist and computer scientist, most noted for his work on artificial neural networks. Since 2013, he has divided his time between Google (Google Brain) and the University of Toronto. In 2017, he co-founded and became the Chief Scientific Advisor of the Vector Institute in Toronto. With David Rumelhart and Ronald J. Williams, Hinton was co-author of a highly cited paper published in 1986 that popularized the backpropagation algorithm for training multi-layer neural networks, although they were not the first to propose the approach. Hinton is viewed as a leading figure in the deep learning community. The dramatic image-recognition milestone of AlexNet, designed in collaboration with his students Alex Krizhevsky and Ilya Sutskever for the ImageNet challenge 2012, was a breakthrough in the f ...

Oxford University Press

Oxford University Press (OUP) is the university press of the University of Oxford. It is the largest university press in the world, and its printing history dates back to the 1480s. Having been officially granted the legal right to print books by decree in 1586, it is the second oldest university press after Cambridge University Press. It is a department of the University of Oxford and is governed by a group of 15 academics known as the Delegates of the Press, who are appointed by the vice-chancellor of the University of Oxford. The Delegates of the Press are led by the Secretary to the Delegates, who serves as OUP's chief executive and as its major representative on other university bodies. Oxford University Press has had a similar governance structure since the 17th century. The press is located on Walton Street, Oxford, opposite Somerville College, in the inner suburb of Jericho. For the last 500 years, OUP has primarily focused on the publication of pedagogical texts an ...

Radford M

Radford may refer to:

Places

England
* Radford, Coventry, West Midlands
* Radford, Nottingham, Nottinghamshire
* Radford, Plymstock, Devon
* Radford, Oxfordshire
* Radford, Somerset
* Radford, Worcestershire
* Radford Cave in Devon
* Radford Semele, Warwickshire

United States
* Radford, Alabama
* Radford, Illinois
* Radford, Virginia

Elsewhere
* Radford Island, an island in the Antarctic Ocean

People
* Radford (surname)
* Radford family, a British reality TV family with many children
* Radford Davis, an author of ninjutsu works
* Radford Gamack (1897–1979), Australian politician
* Radford M. Neal (born 1956), Canadian computer scientist

Facilities and structures
* Radford railway station, a former train station in Nottingham, England, UK
* Radford railway station, Queensland, Australia
* Radford Army Ammunition Plant, Radford, Virginia, USA
* Radford College, Canberra, Australia; a coeducational day school
* Radford University, Radford, Virginia, USA
  * Radford Baseball Stadium
* Radfo ...

Capsule Neural Network

A capsule neural network (CapsNet) is a machine learning system that is a type of artificial neural network (ANN) that can be used to better model hierarchical relationships. The approach is an attempt to more closely mimic biological neural organization. The idea is to add structures called "capsules" to a convolutional neural network (CNN), and to reuse output from several of those capsules to form more stable (with respect to various perturbations) representations for higher capsules. The output is a vector consisting of the probability of an observation and a pose for that observation. This vector is similar to the output produced, for example, when doing classification with localization in CNNs. Among other benefits, capsnets address the "Picasso problem" in image recognition: images that have all the right parts but that are not in the correct spatial relationship (e.g., in a "face", the positions of the mouth and one eye are switched). For image recognition, capsnets explo ...

Deep Learning

Deep learning (also known as deep structured learning) is part of a broader family of machine learning methods based on artificial neural networks with representation learning. Learning can be supervised, semi-supervised or unsupervised. Deep-learning architectures such as deep neural networks, deep belief networks, deep reinforcement learning, recurrent neural networks, convolutional neural networks and Transformers have been applied to fields including computer vision, speech recognition, natural language processing, machine translation, bioinformatics, drug design, medical image analysis, climate science, material inspection and board game programs, where they have produced results comparable to and in some cases surpassing human expert performance. Artificial neural networks (ANNs) were inspired by information processing and distributed communication nodes in biological systems. ANNs have various differences from biological brains. Specifically, artificial neu ...

Boltzmann Machine

A Boltzmann machine (also called Sherrington–Kirkpatrick model with external field or stochastic Ising–Lenz–Little model) is a stochastic spin-glass model with an external field, i.e., a Sherrington–Kirkpatrick model, that is a stochastic Ising model. It is a statistical physics technique applied in the context of cognitive science. It is also classified as a Markov random field. Boltzmann machines are theoretically intriguing because of the locality and Hebbian nature of their training algorithm (being trained by Hebb's rule), and because of their parallelism and the resemblance of their dynamics to simple physical processes. Boltzmann machines with unconstrained connectivity have not been proven useful for practical problems in machine learning or inference, but if the connectivity is properly constrained, the learning can be made efficient enough to be useful for practical problems. They are named after the Boltzmann distribution in statistical mechanics, which i ...
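For orientation, the Boltzmann distribution that gives the model its name can be written out in the standard textbook form. The symbols here are assumptions and do not appear in the excerpt: $s_i \in \{0, 1\}$ is the state of unit $i$, $w_{ij}$ a symmetric connection weight, $\theta_i$ a bias (the external field), $T$ a temperature, and $Z$ the normalizing constant.

```latex
% Energy of a global state s (standard form; notation assumed, not from the excerpt)
E(s) = -\Big( \sum_{i<j} w_{ij}\, s_i s_j + \sum_i \theta_i\, s_i \Big)

% Boltzmann distribution over global states at temperature T
P(s) = \frac{e^{-E(s)/T}}{Z}, \qquad Z = \sum_{s'} e^{-E(s')/T}
```

Low-energy states are the most probable, which is why the dynamics resemble a simple physical system settling toward equilibrium.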

Backpropagation

In machine learning, backpropagation (backprop, BP) is a widely used algorithm for training feedforward artificial neural networks. Generalizations of backpropagation exist for other artificial neural networks (ANNs), and for functions generally. These classes of algorithms are all referred to generically as "backpropagation". In fitting a neural network, backpropagation computes the gradient of the loss function with respect to the weights of the network for a single input–output example, and does so efficiently, unlike a naive direct computation of the gradient with respect to each weight individually. This efficiency makes it feasible to use gradient methods for training multilayer networks, updating weights to minimize loss; gradient descent, or variants such as stochastic gradient descent, are commonly used. The backpropagation algorithm works by computing the gradient of the loss function with respect to each weight by the chain rule, computing the gradient one lay ...
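The chain-rule computation described above can be illustrated with a minimal sketch. It assumes a hypothetical network with one scalar input, a tanh hidden layer, a linear output and a squared-error loss; the function names (`forward`, `loss`, `backprop`, `numeric_grad`) and the example numbers are illustrative choices, not taken from the excerpt.

```python
import math

def forward(w1, w2, x):
    """Hypothetical one-hidden-layer net: h_j = tanh(w1_j * x), y = sum_j w2_j * h_j."""
    h = [math.tanh(w * x) for w in w1]
    y = sum(v * hj for v, hj in zip(w2, h))
    return h, y

def loss(w1, w2, x, t):
    """Squared-error loss for a single input-output example (x, t)."""
    _, y = forward(w1, w2, x)
    return 0.5 * (y - t) ** 2

def backprop(w1, w2, x, t):
    """Gradient of the loss w.r.t. every weight, computed layer by layer, output first."""
    h, y = forward(w1, w2, x)
    dy = y - t                                   # dL/dy
    grad_w2 = [dy * hj for hj in h]              # dL/dw2_j = dL/dy * h_j
    # Chain rule through the hidden layer: dL/dh_j = dy * w2_j, tanh'(a) = 1 - tanh(a)^2
    grad_w1 = [dy * v * (1.0 - hj ** 2) * x for v, hj in zip(w2, h)]
    return grad_w1, grad_w2

def numeric_grad(w1, w2, x, t, eps=1e-6):
    """Naive check: perturb each weight individually (central differences)."""
    g1, g2 = [], []
    for j in range(len(w1)):
        up = w1[:j] + [w1[j] + eps] + w1[j + 1:]
        dn = w1[:j] + [w1[j] - eps] + w1[j + 1:]
        g1.append((loss(up, w2, x, t) - loss(dn, w2, x, t)) / (2 * eps))
    for j in range(len(w2)):
        up = w2[:j] + [w2[j] + eps] + w2[j + 1:]
        dn = w2[:j] + [w2[j] - eps] + w2[j + 1:]
        g2.append((loss(w1, up, x, t) - loss(w1, dn, x, t)) / (2 * eps))
    return g1, g2

if __name__ == "__main__":
    w1, w2, x, t = [0.5, -0.3], [0.8, 0.2], 1.5, 1.0
    g1, g2 = backprop(w1, w2, x, t)
    lr = 0.1  # one plain gradient-descent step
    new_w1 = [w - lr * g for w, g in zip(w1, g1)]
    new_w2 = [w - lr * g for w, g in zip(w2, g2)]
    print(loss(w1, w2, x, t), loss(new_w1, new_w2, x, t))
```

`backprop` reuses one forward pass and the shared factor `dy` for all weights, whereas `numeric_grad` needs two extra loss evaluations per weight; that difference is the efficiency the paragraph refers to.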

Alex Graves (computer scientist)

Alex Graves was a research scientist at DeepMind. He did a BSc in Theoretical Physics at Edinburgh and obtained a PhD in AI under Jürgen Schmidhuber at IDSIA. He was also a postdoc under Jürgen Schmidhuber at TU Munich and under Geoffrey Hinton at the University of Toronto. At IDSIA, he trained long short-term memory neural networks by a novel method called connectionist temporal classification (CTC). [Alex Graves, Santiago Fernandez, Faustino Gomez, and Jürgen Schmidhuber (2006). Connectionist temporal classification: Labelling unsegmented sequence data with recurrent neural nets. Proceedings of ICML'06, pp. 369–376.] This method outperformed traditional speech recognition models in certain applications. [Santiago Fernandez, Alex Graves, and Jürgen Schmidhuber (2007). An application of recurrent neural networks to discriminative keyword spotting. Proceedings of ICANN (2), pp. 220–229.] In 2009, his CTC-trained LSTM was the first recurrent neural network to win pattern recogn ...

Zoubin Ghahramani

Zoubin Ghahramani FRS (Persian: زوبین قهرمانی; born 8 February 1970) is a British-Iranian researcher and Professor of Information Engineering at the University of Cambridge. He holds joint appointments at University College London and the Alan Turing Institute, and has been a Fellow of St John's College, Cambridge since 2009. He was Associate Research Professor at Carnegie Mellon University School of Computer Science from 2003 to 2012. He was also the Chief Scientist of Uber from 2016 until 2020. He joined Google Brain in 2020 as senior research director. He is also Deputy Director of the Leverhulme Centre for the Future of Intelligence. Education: Ghahramani was educated at the American School of Madrid in Spain and the University of Pennsylvania, where he was awarded a double major degree in Cognitive Science and Computer Science in 1990. He obtained his Ph.D. from the Department of Brain and Cognitive Sciences at the Massachusetts Institute of Technology, supervised ...

Max Welling

Max Welling (born 1968) is a Dutch computer scientist in machine learning at the University of Amsterdam. In August 2017, the university spin-off Scyfer BV, co-founded by Welling, was acquired by Qualcomm. He has since served as a Vice President of Technology at Qualcomm Netherlands. He is also currently the Lead Scientist of the new Microsoft Research Lab in Amsterdam. Welling received his PhD in physics with a thesis on quantum gravity under the supervision of Nobel laureate Gerard 't Hooft (1998) at Utrecht University. He has published over 250 peer-reviewed articles in machine learning, computer vision, statistics and physics, and has most notably invented variational autoencoders (VAEs), together with Diede ...

Peter Dayan

Peter Dayan is director at the Max Planck Institute for Biological Cybernetics in Tübingen, Germany. He is co-author of Theoretical Neuroscience, an influential textbook on computational neuroscience. He is known for applying Bayesian methods from machine learning and artificial intelligence to understand neural function and is particularly recognized for relating neurotransmitter levels to prediction errors and Bayesian uncertainties. He has pioneered the field of reinforcement learning (RL), where he helped develop the Q-learning algorithm, and made contributions to unsupervised learning, including the wake-sleep algorithm for neural networks and the Helmholtz machine. Education: Dayan studied mathematics at the University of Cambridge and then continued for a PhD in artificial intelligence at the University of Edinburgh School of Informatics on statistical learning supervised by David Willshaw and David Wallace, focusing on associative memory and reinforcement learn ...

Postdoctoral Researcher

A postdoctoral fellow, postdoctoral researcher, or simply postdoc, is a person professionally conducting research after the completion of their doctoral studies (typically a PhD). The ultimate goal of a postdoctoral research position is to pursue additional research, training, or teaching in order to have better skills to pursue a career in academia, research, or any other field. Postdocs often, but not always, have a temporary academic appointment, sometimes in preparation for an academic faculty position. They continue their studies or carry out research and further increase expertise in a specialist subject, including integrating a team and acquiring novel skills and research methods. Postdoctoral research is often considered essential while advancing the scholarly mission of the host institution; it is expected to produce relevant publications in peer-reviewed academic journals or conferences. In some countries, postdoctoral research may lead to further formal qualification ...

Yann LeCun

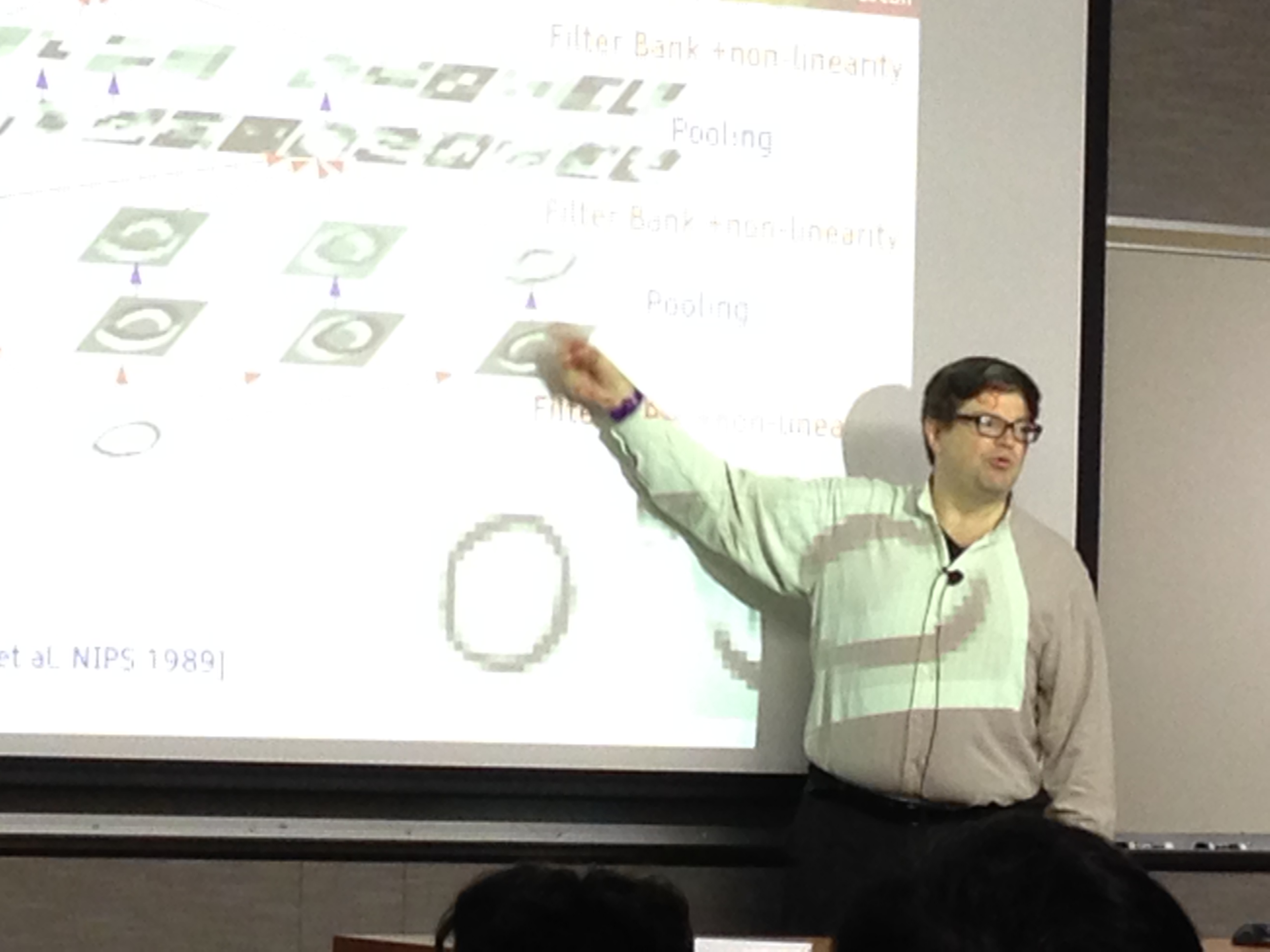

Yann André LeCun (originally spelled Le Cun; born 8 July 1960) is a French computer scientist working primarily in the fields of machine learning, computer vision, mobile robotics and computational neuroscience. He is the Silver Professor of the Courant Institute of Mathematical Sciences at New York University and Vice-President, Chief AI Scientist at Meta. He is well known for his work on optical character recognition and computer vision using convolutional neural networks (CNN), and is a founding father of convolutional nets. He is also one of the main creators of the DjVu image compression technology (together with Léon Bottou and Patrick Haffner). He co-developed the Lush programming language with Léon Bottou. LeCun received the 2018 Turing Award (often referred to as the "Nobel Prize of Computing"), together with Yoshua Bengio and Geoffrey Hinton, for their work on deep learning. The three are sometimes referred to as the "Godfathers of AI" and "Godfathers of Deep ...
