
Fractional Programming

In mathematical optimization, fractional programming is a generalization of linear-fractional programming. The objective function in a fractional program is a ratio of two functions that are in general nonlinear. The ratio to be optimized often describes some kind of efficiency of a system.

Definition. Let $f, g, h_j$, $j = 1, \ldots, m$, be real-valued functions defined on a set $\mathbf{S}_0 \subset \mathbb{R}^n$. Let $\mathbf{S} = \{\boldsymbol{x} \in \mathbf{S}_0 : h_j(\boldsymbol{x}) \leq 0,\ j = 1, \ldots, m\}$. The nonlinear program

$$\underset{\boldsymbol{x} \in \mathbf{S}}{\text{maximize}} \quad \frac{f(\boldsymbol{x})}{g(\boldsymbol{x})},$$

where $g(\boldsymbol{x}) > 0$ on $\mathbf{S}$, is called a fractional program.

Concave fractional programs. A fractional program in which $f$ is nonnegative and concave, $g$ is positive and convex, and $\mathbf{S}$ is a convex set is called a concave fractional program. If $g$ is affine, $f$ does not have to be restricted in sign. The linear fractional program is a special case of a concave fractional program where all functions $f, g, h_j$, $j = 1, \ldots, m$, are affine.

Properties. The function $q(\boldsymbol{x}) = f(\boldsymbol{x}) / g(\boldsymbol{x})$ ...
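To make the definition concrete, here is a minimal Python sketch of one common way to solve a concave fractional program: Dinkelbach's parametric method. The excerpt above does not describe this method, so treat it as an illustration rather than the article's own algorithm. The functions `f`, `g`, the interval $[0, 3]$, and the helper `argmax_param` are all hypothetical choices made for this example; `f` is concave and nonnegative on the interval and `g` is affine and positive.

```python
def dinkelbach(f, g, argmax_param, lam0=0.0, tol=1e-12, max_iter=50):
    """Maximize f(x) / g(x) by Dinkelbach's parametric method.

    argmax_param(lam) must return a maximizer of f(x) - lam * g(x)
    over the feasible set, and g must be positive on that set.
    """
    lam = lam0
    for _ in range(max_iter):
        x = argmax_param(lam)
        # The parametric optimum is zero exactly when lam is the best ratio.
        gap = f(x) - lam * g(x)
        if gap <= tol:
            break
        lam = f(x) / g(x)
    return x, lam


# Hypothetical example: maximize (1 + 4x - x^2) / (x + 1) on [0, 3].
def f(x):
    return 1.0 + 4.0 * x - x * x


def g(x):
    return x + 1.0


def argmax_param(lam):
    # f(x) - lam * g(x) is a concave quadratic; its unconstrained
    # maximizer is (4 - lam) / 2, which is then clipped to [0, 3].
    return min(3.0, max(0.0, (4.0 - lam) / 2.0))


x_star, ratio_star = dinkelbach(f, g, argmax_param)
print(x_star, ratio_star)
```

For this example the ratio can also be maximized by hand: its derivative vanishes at $x = 1$, where the ratio equals 2, and the iteration converges to that point. Each step only requires solving a concave maximization problem, which is why the concavity and convexity assumptions in the definition matter.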

Mathematical Optimization

Mathematical optimization (alternatively spelled *optimisation*) or mathematical programming is the selection of a best element, with regard to some criterion, from some set of available alternatives. It is generally divided into two subfields: discrete optimization and continuous optimization. Optimization problems arise in all quantitative disciplines from computer science and engineering to operations research and economics, and the development of solution methods has been of interest in mathematics for centuries. In the more general approach, an optimization problem consists of maximizing or minimizing a real function by systematically choosing input values from within an allowed set and computing the value of the function. The generalization of optimization theory and techniques to other formulations constitutes a large area of applied mathematics. More generally, optimization includes finding "best available" values of some objective function given a defi ...

Linear-fractional Programming

In mathematical optimization, linear-fractional programming (LFP) is a generalization of linear programming (LP). Whereas the objective function in a linear program is a linear function, the objective function in a linear-fractional program is a ratio of two linear functions. A linear program can be regarded as a special case of a linear-fractional program in which the denominator is the constant function one.

Relation to linear programming. Both linear programming and linear-fractional programming represent optimization problems using linear equations and linear inequalities, which for each problem instance define a feasible set. Fractional linear programs have a richer set of objective functions. Informally, linear programming computes a policy delivering the best outcome, such as maximum profit or lowest cost. In contrast, linear-fractional programming is used to achieve the highest *ratio* of outcome to cost, the ratio representing the highest efficiency. For example, in th ...
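The difference between "best outcome" and "best ratio" can be illustrated with a small Python sketch. It assumes a bounded feasible polygon and a denominator that is strictly positive on it; under those assumptions both a linear and a linear-fractional objective attain their maximum at a vertex, so it is enough to compare vertices. The polygon and the `profit` and `cost` functions are invented for this example.

```python
# Hypothetical vertices of a bounded, convex feasible polygon in the plane.
VERTICES = [(0, 0), (4, 0), (4, 2), (2, 4), (0, 4)]


def profit(p):
    """Linear numerator: the outcome to be maximized in the LP."""
    x, y = p
    return 3 * x + 2 * y


def cost(p):
    """Linear denominator; strictly positive on the polygon."""
    x, y = p
    return x + 2 * y + 1


def efficiency(p):
    """Linear-fractional objective: outcome per unit of cost."""
    return profit(p) / cost(p)


best_lp = max(VERTICES, key=profit)        # vertex with the highest profit
best_lfp = max(VERTICES, key=efficiency)   # vertex with the highest ratio

print("LP optimum: ", best_lp, profit(best_lp))
print("LFP optimum:", best_lfp, efficiency(best_lfp))
```

With these numbers the linear program selects the vertex (4, 2), where profit is 16, while the linear-fractional program selects (4, 0), where profit is only 12 but the profit-to-cost ratio is 2.4. Vertex enumeration is used here only because the example is tiny; it is not a practical solution method for larger problems.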

Objective Function

In mathematical optimization and decision theory, a loss function or cost function (sometimes also called an error function) is a function that maps an event or values of one or more variables onto a real number intuitively representing some "cost" associated with the event. An optimization problem seeks to minimize a loss function. An objective function is either a loss function or its opposite (in specific domains, variously called a reward function, a profit function, a utility function, a fitness function, etc.), in which case it is to be maximized. The loss function could include terms from several levels of the hierarchy. In statistics, typically a loss function is used for parameter estimation, and the event in question is some function of the difference between estimated and true values for an instance of data. The concept, as old as Laplace, was reintroduced in statistics by Abraham Wald in the middle of the 20th century. In the context of economics, for example, this ...

Real-valued Function

In mathematics, a real-valued function is a function whose values are real numbers. In other words, it is a function that assigns a real number to each member of its domain. Real-valued functions of a real variable (commonly called *real functions*) and real-valued functions of several real variables are the main object of study of calculus and, more generally, real analysis. In particular, many function spaces consist of real-valued functions.

Algebraic structure. Let $\mathcal{F}(X, \mathbb{R})$ be the set of all functions from a set $X$ to real numbers $\mathbb{R}$. Because $\mathbb{R}$ is a field, $\mathcal{F}(X, \mathbb{R})$ may be turned into a vector space and a commutative algebra over the reals with the following operations:

* $f + g: x \mapsto f(x) + g(x)$ – vector addition
* $\mathbf{0}: x \mapsto 0$ – additive identity
* $c f: x \mapsto c f(x),\quad c \in \mathbb{R}$ – scalar multiplication
* $f g: x \mapsto f(x) g(x)$ – pointwise multiplication

These operations extend to partial functions from $X$ to $\mathbb{R}$, with the ...
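The pointwise operations listed above translate directly into higher-order functions. The following Python sketch is only an illustration; the names `add`, `scale`, `mul`, and `zero` are chosen for this example and do not come from any library.

```python
import math


def add(f, g):
    """Vector addition: (f + g)(x) = f(x) + g(x)."""
    return lambda x: f(x) + g(x)


def scale(c, f):
    """Scalar multiplication: (c f)(x) = c * f(x)."""
    return lambda x: c * f(x)


def mul(f, g):
    """Pointwise multiplication: (f g)(x) = f(x) * g(x)."""
    return lambda x: f(x) * g(x)


def zero(x):
    """Additive identity: the function that is 0 everywhere."""
    return 0.0


# Example: h(x) = 2 * sin(x) + x * x, built only from the operations above.
square = mul(lambda x: x, lambda x: x)
h = add(scale(2.0, math.sin), square)
print(h(0.0), h(1.0))
```

Adding `zero` to any function leaves its values unchanged, which is what makes it the additive identity of the vector space.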

Nonlinear Programming

In mathematics, nonlinear programming (NLP) is the process of solving an optimization problem where some of the constraints or the objective function are nonlinear. An optimization problem is one of calculation of the extrema (maxima, minima or stationary points) of an objective function over a set of unknown real variables and conditional to the satisfaction of a system of equalities and inequalities, collectively termed constraints. It is the sub-field of mathematical optimization that deals with problems that are not linear.

Applicability. A typical non-convex problem is that of optimizing transportation costs by selection from a set of transportation methods, one or more of which exhibit economies of scale, with various connectivities and capacity constraints. An example would be petroleum product transport given a selection or combination of pipeline, rail tanker, road tanker, river barge, or coastal tankship. Owing to economic batch size the cost functions may have disco ...

Convex Set

In geometry, a subset of a Euclidean space, or more generally an affine space over the reals, is convex if, given any two points in the subset, the subset contains the whole line segment that joins them. Equivalently, a convex set or a convex region is a subset that intersects every line into a single line segment (possibly empty). For example, a solid cube is a convex set, but anything that is hollow or has an indent, for example, a crescent shape, is not convex. The boundary of a convex set in the plane is always a convex curve. The intersection of all the convex sets that contain a given subset $A$ of Euclidean space is called the convex hull of $A$. It is the smallest convex set containing $A$. A convex function is a real-valued function defined on an interval with the property that its epigraph (the set of points on or above the graph of the function) is a convex set. Convex minimization is a subfield of optimization that studies the problem of minimizing convex functions over convex ...

Quasiconcave Function

In mathematics, a quasiconvex function is a real-valued function defined on an interval or on a convex subset of a real vector space such that the inverse image of any set of the form $(-\infty, a)$ is a convex set. For a function of a single variable, along any stretch of the curve the highest point is one of the endpoints. The negative of a quasiconvex function is said to be quasiconcave. All convex functions are also quasiconvex, but not all quasiconvex functions are convex, so quasiconvexity is a generalization of convexity. *Univariate* unimodal functions are quasiconvex or quasiconcave; however, this is not necessarily the case for functions with multiple arguments. For example, the 2-dimensional Rosenbrock function is unimodal but not quasiconvex, and functions with star-convex sublevel sets can be unimodal without being quasiconvex.

Definition and properties. A function $f: S \to \mathbb{R}$ defined on a convex subset $S$ of a real vector space is quasiconvex if for all x, ...
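The single-variable description (the highest point on any stretch is at an endpoint) corresponds to the standard inequality $f(\lambda x + (1 - \lambda) y) \leq \max\{f(x), f(y)\}$ for $\lambda \in [0, 1]$. The Python sketch below searches sample points for a violation of that inequality. It is only a sampled check, so a result of `None` means no counterexample was found on the grid, not that the function is proven quasiconvex. The function name, grid, and weights are assumptions made for this example.

```python
import itertools
import math


def find_quasiconvexity_violation(f, points, weights=(0.25, 0.5, 0.75), tol=1e-12):
    """Return (x, y, z) with f(z) > max(f(x), f(y)) for some z between
    x and y, or None if no sampled triple violates the inequality."""
    for x, y in itertools.combinations(points, 2):
        for w in weights:
            z = w * x + (1 - w) * y
            if f(z) > max(f(x), f(y)) + tol:
                return (x, y, z)
    return None


grid = [i / 4 for i in range(-8, 9)]   # sample points in [-2, 2]

# sqrt(|x|) is quasiconvex but not convex: no violation is found.
print(find_quasiconvexity_violation(lambda x: math.sqrt(abs(x)), grid))

# -x^2 is quasiconcave, not quasiconvex: its peak lies between endpoints.
print(find_quasiconvexity_violation(lambda x: -x * x, grid))
```

Negating the function and running the same check gives a sampled test for quasiconcavity, in line with the statement that the negative of a quasiconvex function is quasiconcave.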

Pseudoconcave Function

In convex analysis and the calculus of variations, both branches of mathematics, a pseudoconvex function is a function that behaves like a convex function with respect to finding its local minima, but need not actually be convex. Informally, a differentiable function is pseudoconvex if it is increasing in any direction where it has a positive directional derivative. The property must hold in all of the function domain, and not only for nearby points.

Formal definition. Consider a differentiable function $f: X \subseteq \mathbb{R}^n \rightarrow \mathbb{R}$, defined on a (nonempty) convex open set $X$ of the finite-dimensional Euclidean space $\mathbb{R}^n$. This function is said to be pseudoconvex if the following property holds: for all $x, y \in X$ such that $\nabla f(x) \cdot (y - x) \geq 0$, we have $f(y) \geq f(x)$. Equivalently: for all $x, y \in X$ such that $f(y) < f(x)$, we have $\nabla f(x) \cdot (y - x) < 0$. Here $\nabla f$ is the gradient of $f$, defined by $\nabla f = \left(\frac{\partial f}{\partial x_1}, \dots, \frac{\partial f}{\partial x_n}\right)$. Note that the definition may also be stated in terms of the directional derivative of $f$, in the direction given by the vector $v = y - x$. This is because, as $f$ is d ...
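For a function of one variable, the standard pseudoconvexity condition reads: whenever $f'(x)(y - x) \geq 0$, it must hold that $f(y) \geq f(x)$. The Python sketch below searches a grid for a pair that breaks this implication. It is a sampled check under that stated definition, not a proof, and the function name and test functions are chosen only for illustration.

```python
def find_pseudoconvexity_violation(f, df, points, tol=1e-12):
    """Return (x, y) with df(x) * (y - x) >= 0 but f(y) < f(x),
    or None if no sampled pair violates the condition."""
    for x in points:
        for y in points:
            if df(x) * (y - x) >= 0 and f(y) < f(x) - tol:
                return (x, y)
    return None


grid = [i / 4 for i in range(-8, 9)]   # sample points in [-2, 2], including 0

# x + x^3 is not convex, but its derivative 1 + 3x^2 is always positive,
# so the condition holds and no violation is found.
print(find_pseudoconvexity_violation(lambda x: x + x**3,
                                     lambda x: 1 + 3 * x**2, grid))

# x^3 has a stationary point at 0 that is not a minimum, so it fails.
print(find_pseudoconvexity_violation(lambda x: x**3,
                                     lambda x: 3 * x**2, grid))
```

The second example shows why the condition is useful for optimization: for a pseudoconvex function, a point with zero derivative must be a global minimum, and $x^3$ breaks that at $x = 0$.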

Pseudolinear Function

In convex analysis and the calculus of variations, both branches of mathematics, a pseudoconvex function is a function that behaves like a convex function with respect to finding its local minima, but need not actually be convex. Informally, a differentiable function is pseudoconvex if it is increasing in any direction where it has a positive directional derivative. The property must hold in all of the function domain, and not only for nearby points.

Formal definition. Consider a differentiable function $f: X \subseteq \mathbb{R}^n \rightarrow \mathbb{R}$, defined on a (nonempty) convex open set $X$ of the finite-dimensional Euclidean space $\mathbb{R}^n$. This function is said to be pseudoconvex if the following property holds: for all $x, y \in X$ such that $\nabla f(x) \cdot (y - x) \geq 0$, we have $f(y) \geq f(x)$. Equivalently: for all $x, y \in X$ such that $f(y) < f(x)$, we have $\nabla f(x) \cdot (y - x) < 0$. Here $\nabla f$ is the gradient of $f$, defined by $\nabla f = \left(\frac{\partial f}{\partial x_1}, \dots, \frac{\partial f}{\partial x_n}\right)$. Note that the definition may also be stated in terms of the directional derivative of $f$, in the direction given by the vector $v = y - x$. This is because, as $f$ is ...

Concave Program

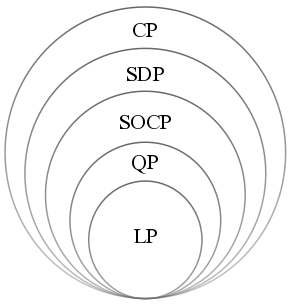

Convex optimization is a subfield of mathematical optimization that studies the problem of minimizing convex functions over convex sets (or, equivalently, maximizing concave functions over convex sets). Many classes of convex optimization problems admit polynomial-time algorithms, whereas mathematical optimization is in general NP-hard. Convex optimization has applications in a wide range of disciplines, such as automatic control systems, estimation and signal processing, communications and networks, electronic circuit design, data analysis and modeling, finance, statistics (optimal experimental design), and structural optimization, where the approximation concept has proven to be efficient. With recent advancements in computing and optimization algorithms, convex programming is nearly as straightforward as linear programming.

Definition. A convex optimization problem is an optimization problem in which the objective function is a convex function and the feasible set ...