
Flex Lexical Analyser

Flex (fast lexical analyzer generator) is a free and open-source software alternative to lex. It is a computer program that generates lexical analyzers (also known as "scanners" or "lexers"). It is frequently used as the lex implementation together with the Berkeley Yacc parser generator on BSD-derived operating systems (as both lex and yacc are part of POSIX), or together with GNU Bison (a version of yacc) in *BSD ports and in Linux distributions. Unlike Bison, flex is not part of the GNU Project and is not released under the GNU General Public License, although a manual for Flex was produced and published by the Free Software Foundation.

Flex was written in C around 1987 by Vern Paxson, with the help of many ideas and much inspiration from Van Jacobson. The original version was written by Jef Poskanzer. The fast table representation is a partial implementation of a design by Van Jacobson; the implementation was done by Kevin Gong and Vern Paxson.

Example lexical analyzer: Thi ...
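A flex input file lists rules, each a regular expression paired with a C action. Flex compiles these rules into a table-driven C scanner. The flex manual states two rules for choosing among matching rules:

* the rule that matches the most text wins;
* among equally long matches, the rule listed first wins.

The sketch below is not flex and not its generated code. It is a small Python imitation of those two selection rules, built on a hypothetical rule table. It also differs from flex on unmatched input: flex's default rule copies such input to the output, while this sketch raises a hypothetical `LexError`.

```python
import re


class LexError(Exception):
    """Raised for input no rule matches (flex's default rule would echo it instead)."""


# Hypothetical rule table: (token name, regular expression), in priority order.
RULES = [
    ("IF", r"if"),
    ("IDENT", r"[A-Za-z_][A-Za-z0-9_]*"),
    ("NUMBER", r"[0-9]+"),
    ("OP", r"==|=|\+"),
    ("WS", r"[ \t\n]+"),
]
COMPILED = [(name, re.compile(pattern)) for name, pattern in RULES]


def scan(text):
    """Split text into (token, lexeme) pairs using flex-style rule selection."""
    tokens = []
    pos = 0
    while pos < len(text):
        best_name, best_len = None, 0
        for name, regex in COMPILED:
            m = regex.match(text, pos)
            # Only a strictly longer match replaces the current best, so on a
            # tie the rule listed earlier in RULES keeps priority.
            if m and m.end() - pos > best_len:
                best_name, best_len = name, m.end() - pos
        if best_name is None:
            raise LexError(f"no rule matches {text[pos]!r} at offset {pos}")
        if best_name != "WS":  # whitespace is matched but discarded
            tokens.append((best_name, text[pos:pos + best_len]))
        pos += best_len
    return tokens
```

Consider `scan("if iffy == 42")`:

* `if` ties between `IF` and `IDENT`, and `IF` wins because it is listed first.
* `iffy` becomes an `IDENT` because that rule matches more text.

One caveat: Python's `re` returns the first match its backtracking finds, not necessarily the longest match for a pattern. That coincides with the longest match for these simple patterns, whereas flex's DFA-based scanner always takes the longest.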

Vern Paxson

Vern Edward Paxson is a Professor of Computer Science at the University of California, Berkeley. He also leads the Networking and Security Group at the International Computer Science Institute in Berkeley, California. His interests range from transport protocols to intrusion detection and worms. He is an active member of the Internet Engineering Task Force (IETF) community and served as the chair of the IRTF from 2001 until 2005. From 1998 to 1999 he served on the IESG as Transport Area Director for the IETF. In 2006 Paxson was inducted as a Fellow of the Association for Computing Machinery (ACM). The ACM's Special Interest Group on Data Communications (SIGCOMM) gave Paxson its 2011 award, "for his seminal contributions to the fields of Internet measurement and Internet security, and for distinguished leadership and service to the Internet community." The annual SIGCOMM Award recognizes lifetime contribution to the field of communication networks. Paxson is also the origi ...

Ports Collection

Ports collections (or "ports trees", or just "ports") are the sets of makefiles and patches provided by the BSD-based operating systems FreeBSD, NetBSD, and OpenBSD as a simple method of installing software or creating binary packages. They are usually the base of a package management system, with ports handling package creation and additional tools managing package removal, upgrade, and other tasks. In addition to the BSDs, a few Linux distributions have implemented similar infrastructure, including Gentoo's Portage, Arch's Arch Build System (ABS), CRUX's Ports and Void Linux's Templates. The main advantage of the ports system compared with a binary distribution model is that the installation can be tuned and optimized according to available resources. For example, the system administrator can easily install a 32-bit version of a package if the 64-bit version is not available or is not optimized for that machine. Conversely, the main disadvantage is compil ...

Nondeterministic Finite Automaton

In automata theory, a finite-state machine is called a deterministic finite automaton (DFA) if two conditions hold:

1. each of its transitions is uniquely determined by its source state and input symbol;
2. reading an input symbol is required for each state transition.

A nondeterministic finite automaton (NFA), or nondeterministic finite-state machine, does not need to obey these restrictions. In particular, every DFA is also an NFA. Sometimes the term NFA is used in a narrower sense, referring to an NFA that is not a DFA, but that narrower sense is not used here. Using the subset construction algorithm, each NFA can be translated to an equivalent DFA, i.e., a DFA recognizing the same formal language. Like DFAs, NFAs recognize only regular languages. NFAs were introduced in 1959 by Michael O. Rabin and Dana Scott, who also showed their equivalence to DFAs. NFAs are used in the implementation of regular expressions: Thompson's construction is an algorithm for compiling a regular expression to an NFA ...
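The sketch below is a minimal version of the subset construction. It rests on these assumptions:

* an NFA is given as a Python dict mapping (state, symbol) to a set of successor states;
* the empty string stands in for ε, a transition that reads no input;
* the example NFA is hypothetical and accepts strings over {a, b} that end in "ab".

Each DFA state is the set of NFA states the automaton could be in after reading the input so far.

```python
from collections import deque

EPS = ""  # label used here for ε-transitions (an assumption of this sketch)


def eps_closure(states, delta):
    """All states reachable from `states` using only ε-transitions."""
    closure = set(states)
    stack = list(states)
    while stack:
        q = stack.pop()
        for r in delta.get((q, EPS), ()):
            if r not in closure:
                closure.add(r)
                stack.append(r)
    return frozenset(closure)


def subset_construction(delta, start, accepting, alphabet):
    """Build a DFA whose states are (frozen) sets of NFA states."""
    dfa_start = eps_closure({start}, delta)
    dfa_delta = {}
    seen = {dfa_start}
    work = deque([dfa_start])
    while work:
        current = work.popleft()
        for a in alphabet:
            # Follow every a-transition from every NFA state in the set, then close under ε.
            moved = {r for q in current for r in delta.get((q, a), ())}
            target = eps_closure(moved, delta)
            dfa_delta[(current, a)] = target
            if target not in seen:
                seen.add(target)
                work.append(target)
    # A DFA state accepts if it contains at least one accepting NFA state.
    dfa_accepting = {s for s in seen if s & accepting}
    return dfa_delta, dfa_start, dfa_accepting


def dfa_accepts(dfa_delta, dfa_start, dfa_accepting, word):
    state = dfa_start
    for a in word:
        state = dfa_delta[(state, a)]
    return state in dfa_accepting


# Hypothetical NFA over {a, b} accepting strings that end in "ab".
nfa_delta = {
    ("s", EPS): {0},
    (0, "a"): {0, 1},
    (0, "b"): {0},
    (1, "b"): {2},
}
dfa = subset_construction(nfa_delta, "s", {2}, "ab")
```

Here the construction yields four reachable DFA states. The subset construction can produce up to 2^n DFA states for an n-state NFA, but only reachable subsets are ever built.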

Regular Expressions

A regular expression (shortened as regex or regexp; sometimes referred to as rational expression) is a sequence of characters that specifies a search pattern in text. Usually such patterns are used by string-searching algorithms for "find" or "find and replace" operations on strings, or for input validation. Regular expression techniques are developed in theoretical computer science and formal language theory. The concept of regular expressions began in the 1950s, when the American mathematician Stephen Cole Kleene formalized the concept of a regular language. They came into common use with Unix text-processing utilities. Different syntaxes for writing regular expressions have existed since the 1980s, one being the POSIX standard and another, widely used, being the Perl syntax. Regular expressions are used in search engines, in search and replace dialogs of word processors and text editors, in text processing utilities such as sed and AWK, and in lexical analysis. Most ...
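The three everyday uses named above can be shown in a few lines of Python's `re` module, whose syntax belongs to the Perl-derived family rather than POSIX:

* search;
* find and replace;
* input validation.

The sample text and the identifier pattern are illustrative assumptions.

```python
import re

source = "call foo; call food; x := foo"

# Search: capture every identifier that follows the keyword "call".
callees = re.findall(r"\bcall\s+([A-Za-z_]\w*)", source)

# Find and replace: rename the whole word "foo"; \b keeps "food" untouched.
renamed = re.sub(r"\bfoo\b", "baz", source)


# Input validation: fullmatch() succeeds only if the entire string fits the pattern.
def is_identifier(text: str) -> bool:
    return re.fullmatch(r"[A-Za-z_]\w*", text) is not None
```

Here `callees` is `["foo", "food"]` and `renamed` is `"call baz; call food; x := baz"`.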

Read-only Right Moving Turing Machines

A Turing machine that can only read its tape, and can only move its head to the right, never rewrites or revisits a cell. Everything it remembers about the input must therefore be held in its finite set of states. Such machines are essentially equivalent to deterministic finite automata and recognize exactly the regular languages.

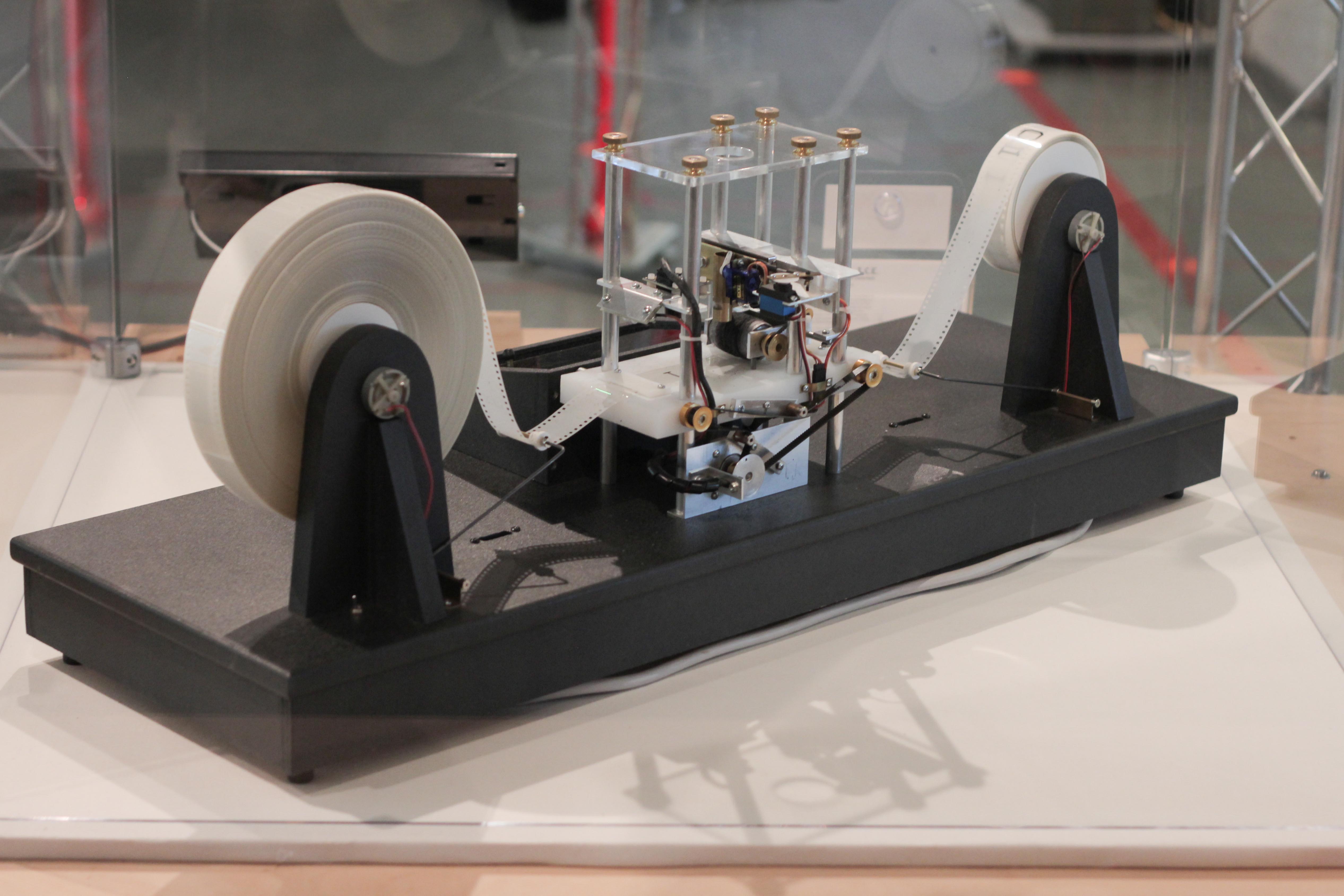

Turing Machine

A Turing machine is a mathematical model of computation describing an abstract machine that manipulates symbols on a strip of tape according to a table of rules. Despite the model's simplicity, it is capable of implementing any computer algorithm. The machine operates on an infinite memory tape divided into discrete cells, each of which can hold a single symbol drawn from a finite set of symbols called the alphabet of the machine. It has a "head" that, at any point in the machine's operation, is positioned over one of these cells, and a "state" selected from a finite set of states. At each step of its operation, the head reads the symbol in its cell. Then, based on the symbol and the machine's own present state, the machine writes a symbol into the same cell, and moves the head one step to the left or the right, or halts the computation. The choice of which replacement symbol to write and which direction to move is based on a finite table that specifies what to do for each com ...
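The step described above can be simulated directly: read, write, then move left, move right, or halt. The sketch below is minimal and rests on these assumptions:

* a hypothetical rule table that increments a binary number;
* `_` as the blank symbol;
* a dict as the tape, so it can grow in either direction as a stand-in for the infinite tape;
* a step limit, because a real Turing machine need not halt.

```python
BLANK = "_"

# Hypothetical rule table for binary increment:
# (state, symbol read) -> (symbol to write, "L"/"R" to move or "H" to halt, next state)
RULES = {
    ("right", "0"): ("0", "R", "right"),   # scan right to the end of the number
    ("right", "1"): ("1", "R", "right"),
    ("right", BLANK): (BLANK, "L", "carry"),
    ("carry", "1"): ("0", "L", "carry"),   # 1 + carry = 0, carry continues left
    ("carry", "0"): ("1", "H", "done"),    # 0 + carry = 1, finished
    ("carry", BLANK): ("1", "H", "done"),  # ran off the left end: new leading 1
}


def run(tape_input, rules=RULES, state="right", max_steps=10_000):
    """Run the machine with the head on the first input cell; return the tape contents."""
    tape = dict(enumerate(tape_input))  # sparse tape: unwritten cells are blank
    head = 0
    for _ in range(max_steps):
        symbol = tape.get(head, BLANK)
        write, move, state = rules[(state, symbol)]
        tape[head] = write
        if move == "H":
            break
        head += 1 if move == "R" else -1
    else:
        raise RuntimeError("no halt within max_steps")
    cells = range(min(tape), max(tape) + 1)
    return "".join(tape.get(i, BLANK) for i in cells).strip(BLANK)
```

For example, `run("1011")` returns `"1100"` (11 + 1 = 12).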

Regular Language

In theoretical computer science and formal language theory, a regular language (also called a rational language) is a formal language that can be defined by a regular expression in the strict sense of theoretical computer science. This contrasts with many modern regular expression engines, which are augmented with features that allow recognition of non-regular languages. Alternatively, a regular language can be defined as a language recognized by a finite automaton. The equivalence of regular expressions and finite automata is known as Kleene's theorem (after American mathematician Stephen Cole Kleene). In the Chomsky hierarchy, regular languages are the languages generated by Type-3 grammars.

Formally, the collection of regular languages over an alphabet Σ is defined recursively as follows:

* the empty language Ø is a regular language;
* for each a ∈ Σ (a belongs to Σ), the singleton language {a} is a regular language;
* if A is a regular language, A ...
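One way to make the recursive definition executable is to build regular expressions from matching constructors and test membership with Brzozowski derivatives. That technique is not part of the entry above. The sketch rests on these assumptions:

* it uses the standard closure operations (union, concatenation, Kleene star), which the truncated definition above does not reach;
* it adds ε, the language containing only the empty string (equal to Ø*), for convenience.

The derivative of a language L by a symbol c is the set of words w such that cw is in L. A word belongs to L exactly when taking derivatives by each of its symbols in turn leaves a language containing the empty string.

```python
from dataclasses import dataclass


class Regex:
    """Base class for expressions built by the recursive definition."""


@dataclass(frozen=True)
class Empty(Regex):
    """Ø, the empty language."""


@dataclass(frozen=True)
class Epsilon(Regex):
    """{ε}, the language containing only the empty string (equal to Ø*)."""


@dataclass(frozen=True)
class Sym(Regex):
    """The singleton language {a}."""
    a: str


@dataclass(frozen=True)
class Union(Regex):
    left: Regex
    right: Regex


@dataclass(frozen=True)
class Concat(Regex):
    left: Regex
    right: Regex


@dataclass(frozen=True)
class Star(Regex):
    inner: Regex


def nullable(r: Regex) -> bool:
    """True if the language of r contains the empty string."""
    if isinstance(r, (Epsilon, Star)):
        return True
    if isinstance(r, Union):
        return nullable(r.left) or nullable(r.right)
    if isinstance(r, Concat):
        return nullable(r.left) and nullable(r.right)
    return False  # Empty and Sym


def derivative(r: Regex, c: str) -> Regex:
    """Brzozowski derivative: the language {w | c followed by w is in L(r)}."""
    if isinstance(r, Sym):
        return Epsilon() if r.a == c else Empty()
    if isinstance(r, Union):
        return Union(derivative(r.left, c), derivative(r.right, c))
    if isinstance(r, Concat):
        first = Concat(derivative(r.left, c), r.right)
        # If the left part can be empty, c may also start the right part.
        return Union(first, derivative(r.right, c)) if nullable(r.left) else first
    if isinstance(r, Star):
        return Concat(derivative(r.inner, c), r)
    return Empty()  # Empty and Epsilon contain no word starting with c


def matches(r: Regex, word: str) -> bool:
    for c in word:
        r = derivative(r, c)
    return nullable(r)


a, b = Sym("a"), Sym("b")
# (a ∪ b)* a b b: strings over {a, b} ending in "abb"
ends_abb = Concat(Star(Union(a, b)), Concat(a, Concat(b, b)))
```

This sketch does no simplification, so expressions grow with input length. Practical derivative-based matchers simplify terms such as Ø ∪ r to r after each step.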

Deterministic Finite Automaton

In the theory of computation, a branch of theoretical computer science, a deterministic finite automaton (DFA) is a finite-state machine that accepts or rejects a given string of symbols by running through a state sequence uniquely determined by the string (Hopcroft 2001). It is also known as a deterministic finite acceptor (DFA), deterministic finite-state machine (DFSM), or deterministic finite-state automaton (DFSA). "Deterministic" refers to the uniqueness of the computation run. In search of the simplest models to capture finite-state machines, Warren McCulloch and Walter Pitts were among the first researchers to introduce a concept similar to finite automata in 1943. The figure illustrates a deterministic finite automaton using a state diagram. In this example automaton, there are three states: S0, S1, and S2 (denoted graphically by circles). The automaton takes a finite sequence of 0s and 1s as input. For each state, there is a transition arrow leading out to a next st ...
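The snippet breaks off before describing the transitions, so the table below is an assumption, not a transcription of the figure. It is one natural three-state DFA over {0, 1} with states named S0, S1 and S2:

* state Sk records the remainder, modulo 3, of the binary number read so far;
* S0 is both the start state and the only accepting state.

It therefore accepts exactly the binary numerals divisible by 3, counting the empty string as 0.

```python
# Hypothetical transition table: reading bit b in state Sk moves to S((2k + b) mod 3).
TRANSITIONS = {
    ("S0", "0"): "S0", ("S0", "1"): "S1",
    ("S1", "0"): "S2", ("S1", "1"): "S0",
    ("S2", "0"): "S1", ("S2", "1"): "S2",
}
START = "S0"
ACCEPTING = {"S0"}


def accepts(word: str) -> bool:
    state = START
    for symbol in word:
        # Exactly one next state per (state, symbol) pair: the "deterministic" part.
        state = TRANSITIONS[(state, symbol)]
    return state in ACCEPTING
```

For example, `accepts("110")` is true (6 is divisible by 3) and `accepts("111")` is false (7 is not).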

PL/0

PL/0 is a programming language, intended as an educational programming language, that is similar to but much simpler than Pascal, a general-purpose programming language. It serves as an example of how to construct a compiler. It was originally introduced in the book Algorithms + Data Structures = Programs by Niklaus Wirth in 1976. It features quite limited language constructs: there are no real numbers, very few basic arithmetic operations, and no control-flow constructs other than "if" and "while" blocks. While these limitations make writing real applications in this language impractical, they help the compiler remain compact and simple.

The following are the syntax rules of the model language, defined in EBNF:

    program = block "." ;
    block = [ "const" ident "=" number { "," ident "=" number } ";" ]
            [ "var" ident { "," ident } ";" ]
            { "procedure" ident ";" block ";" } statement ;
    statement = [ ident ":=" expression | "call" ident
                | "?" ident | "!" expression
                | "begin" statement { ";" statement } "end"
                | "if" condition "th ...
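A grammar like this lends itself to recursive descent, with one function per rule. The sketch below evaluates PL/0-style arithmetic expressions and rests on these assumptions:

* the usual PL/0 rules for expression, term and factor, which the truncated grammar above does not show;
* integer-only arithmetic, with division truncated toward zero;
* a hypothetical `env` dictionary supplying the values of identifiers.

It is an illustration of the technique, not Wirth's compiler.

```python
import re

# Assumed token set: numbers, identifiers, and the operators/parentheses used below.
TOKEN = re.compile(r"\s*(\d+|[A-Za-z][A-Za-z0-9]*|[-+*/()])")


def tokenize(src):
    src = src.rstrip()
    tokens, pos = [], 0
    while pos < len(src):
        m = TOKEN.match(src, pos)
        if not m:
            raise SyntaxError(f"unexpected character at offset {pos}")
        tokens.append(m.group(1))
        pos = m.end()
    return tokens


class Parser:
    def __init__(self, src, env):
        self.toks = tokenize(src)
        self.i = 0
        self.env = env  # hypothetical: identifier -> integer value

    def peek(self):
        return self.toks[self.i] if self.i < len(self.toks) else None

    def take(self):
        tok = self.peek()
        if tok is None:
            raise SyntaxError("unexpected end of input")
        self.i += 1
        return tok

    # expression = [ "+" | "-" ] term { ( "+" | "-" ) term } ;
    def expression(self):
        sign = 1
        if self.peek() in ("+", "-"):
            sign = -1 if self.take() == "-" else 1
        value = sign * self.term()
        while self.peek() in ("+", "-"):
            op, rhs = self.take(), self.term()
            value = value + rhs if op == "+" else value - rhs
        return value

    # term = factor { ( "*" | "/" ) factor } ;
    def term(self):
        value = self.factor()
        while self.peek() in ("*", "/"):
            op, rhs = self.take(), self.factor()
            if op == "*":
                value *= rhs
            else:  # integer division truncated toward zero (an assumption)
                q = abs(value) // abs(rhs)
                value = q if (value >= 0) == (rhs > 0) else -q
        return value

    # factor = ident | number | "(" expression ")" ;
    def factor(self):
        tok = self.take()
        if tok.isdigit():
            return int(tok)
        if tok[0].isalpha():
            return self.env[tok]
        if tok == "(":
            value = self.expression()
            if self.take() != ")":
                raise SyntaxError("expected ')'")
            return value
        raise SyntaxError(f"unexpected token {tok!r}")


def evaluate(src, env):
    parser = Parser(src, env)
    value = parser.expression()
    if parser.peek() is not None:
        raise SyntaxError(f"trailing input at {parser.peek()!r}")
    return value
```

For example, `evaluate("-(x + 1) * 2", {"x": 4})` returns `-10`. A real PL/0 compiler would emit code for a stack machine at each of these points instead of computing values directly.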

Jef Poskanzer

Jeffrey A. Poskanzer is a computer programmer. He was the first person to post a weekly FAQ to Usenet. He developed the portable pixmap file format and pbmplus (the precursor to the Netpbm package) to manipulate it. He also worked on the team that ported A/UX. He has shared in two USENIX Lifetime Achievement Awards: in 1993 for Berkeley Unix, and in 1996 for the Software Tools Project. He owns the Internet address acme.com (notable for receiving over one million spam e-mails a day), which is the home page for ACME Laboratories. It hosts a number of open source software projects. The major projects maintained there include pbmplus and thttpd (tiny/turbo/throttling HTTP server), an open source web server designed for simplicity, a small execution footprint and speed.

Van Jacobson

Van Jacobson (born 1950) is an American computer scientist, renowned for his work on TCP/IP network performance and scaling. He received the 2001 SIGCOMM Award for Lifetime Achievement "for contributions to protocol architecture and congestion control." He is one of the primary contributors to the TCP/IP protocol stack, the technological foundation of today’s Internet. Since 2013, Jacobson has been an adjunct professor at the University of California, Los Angeles (UCLA), working on ...

C (programming Language)

C (pronounced like the letter c) is a general-purpose computer programming language. It was created in the 1970s by Dennis Ritchie and remains very widely used and influential. By design, C's features cleanly reflect the capabilities of the targeted CPUs. It has found lasting use in operating systems, device drivers, and protocol stacks, though decreasingly for application software. C is commonly used on computer architectures that range from the largest supercomputers to the smallest microcontrollers and embedded systems. A successor to the programming language B, C was originally developed at Bell Labs by Ritchie between 1972 and 1973 to construct utilities running on Unix. It was applied to re-implementing the kernel of the Unix operating system. During the 1980s, C gradually gained popularity. It has become one of the most widely used programming languages, with C compilers avail ...