|

Fusion Tree

In computer science, a fusion tree is a type of tree data structure that implements an associative array on -bit integers on a finite universe, where each of the input integer has size less than 2w and is non-negative. When operating on a collection of key–value pairs, it uses space and performs searches in time, which is asymptotically faster than a traditional self-balancing binary search tree, and also better than the van Emde Boas tree for large values of . It achieves this speed by using certain constant-time operations that can be done on a machine word. Fusion trees were invented in 1990 by Michael Fredman and Dan Willard. Several advances have been made since Fredman and Willard's original 1990 paper. In 1999 it was shown how to implement fusion trees under a model of computation in which all of the underlying operations of the algorithm belong to AC0, a model of circuit complexity that allows addition and bitwise Boolean operations but does not allow the multi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Computer Science

Computer science is the study of computation, automation, and information. Computer science spans theoretical disciplines (such as algorithms, theory of computation, information theory, and automation) to practical disciplines (including the design and implementation of hardware and software). Computer science is generally considered an area of academic research and distinct from computer programming. Algorithms and data structures are central to computer science. The theory of computation concerns abstract models of computation and general classes of problems that can be solved using them. The fields of cryptography and computer security involve studying the means for secure communication and for preventing security vulnerabilities. Computer graphics and computational geometry address the generation of images. Programming language theory considers different ways to describe computational processes, and database theory concerns the management of repositories ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Journal Of The ACM

The ''Journal of the ACM'' is a peer-reviewed scientific journal covering computer science in general, especially theoretical aspects. It is an official journal of the Association for Computing Machinery. Its current editor-in-chief An editor-in-chief (EIC), also known as lead editor or chief editor, is a publication's editorial leader who has final responsibility for its operations and policies. The highest-ranking editor of a publication may also be titled editor, managing ... is Venkatesan Guruswami. The journal was established in 1954 and "computer scientists universally hold the ''Journal of the ACM'' in high esteem". See also * '' Communications of the ACM'' References External links * Publications established in 1954 Computer science journals Association for Computing Machinery academic journals Bimonthly journals English-language journals {{compu-journal-stub ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

SIAM Journal On Computing

The ''SIAM Journal on Computing'' is a scientific journal focusing on the mathematical and formal aspects of computer science. It is published by the Society for Industrial and Applied Mathematics (SIAM). Although its official ISO abbreviation is ''SIAM J. Comput.'', its publisher and contributors frequently use the shorter abbreviation ''SICOMP''. SICOMP typically hosts the special issues of the IEEE Annual Symposium on Foundations of Computer Science (FOCS) and the Annual ACM Symposium on Theory of Computing (STOC), where about 15% of papers published in FOCS and STOC each year are invited to these special issues. For example, Volume 48 contains 11 out of 85 papers published in FOCS 2016. References * External linksSIAM Journal on Computing on |

Quasi-polynomial Time

In computer science, the time complexity is the computational complexity that describes the amount of computer time it takes to run an algorithm. Time complexity is commonly estimated by counting the number of elementary operations performed by the algorithm, supposing that each elementary operation takes a fixed amount of time to perform. Thus, the amount of time taken and the number of elementary operations performed by the algorithm are taken to be related by a constant factor. Since an algorithm's running time may vary among different inputs of the same size, one commonly considers the worst-case time complexity, which is the maximum amount of time required for inputs of a given size. Less common, and usually specified explicitly, is the average-case complexity, which is the average of the time taken on inputs of a given size (this makes sense because there are only a finite number of possible inputs of a given size). In both cases, the time complexity is generally expresse ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Hash Table

In computing, a hash table, also known as hash map, is a data structure that implements an associative array or dictionary. It is an abstract data type that maps keys to values. A hash table uses a hash function to compute an ''index'', also called a ''hash code'', into an array of ''buckets'' or ''slots'', from which the desired value can be found. During lookup, the key is hashed and the resulting hash indicates where the corresponding value is stored. Ideally, the hash function will assign each key to a unique bucket, but most hash table designs employ an imperfect hash function, which might cause hash '' collisions'' where the hash function generates the same index for more than one key. Such collisions are typically accommodated in some way. In a well-dimensioned hash table, the average time complexity for each lookup is independent of the number of elements stored in the table. Many hash table designs also allow arbitrary insertions and deletions of key–value pa ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Bitwise XOR

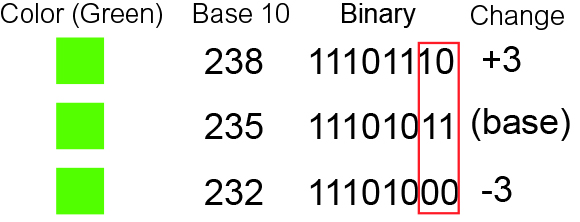

In computer programming, a bitwise operation operates on a bit string, a bit array or a binary numeral (considered as a bit string) at the level of its individual bits. It is a fast and simple action, basic to the higher-level arithmetic operations and directly supported by the processor. Most bitwise operations are presented as two-operand instructions where the result replaces one of the input operands. On simple low-cost processors, typically, bitwise operations are substantially faster than division, several times faster than multiplication, and sometimes significantly faster than addition. While modern processors usually perform addition and multiplication just as fast as bitwise operations due to their longer instruction pipelines and other architectural design choices, bitwise operations do commonly use less power because of the reduced use of resources. Bitwise operators In the explanations below, any indication of a bit's position is counted from the right (least sign ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Most Significant Bit

In computing, bit numbering is the convention used to identify the bit positions in a binary number. Bit significance and indexing In computing, the least significant bit (LSB) is the bit position in a binary integer representing the binary 1s place of the integer. Similarly, the most significant bit (MSB) represents the highest-order place of the binary integer. The LSB is sometimes referred to as the ''low-order bit'' or ''right-most bit'', due to the convention in positional notation of writing less significant digits further to the right. The MSB is similarly referred to as the ''high-order bit'' or ''left-most bit''. In both cases, the LSB and MSB correlate directly to the least significant digit and most significant digit of a decimal integer. Bit indexing correlates to the positional notation of the value in base 2. For this reason, bit index is not affected by how the value is stored on the device, such as the value's byte order. Rather, it is a property of the num ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Bitwise AND

In computer programming, a bitwise operation operates on a bit string, a bit array or a binary numeral (considered as a bit string) at the level of its individual bits. It is a fast and simple action, basic to the higher-level arithmetic operations and directly supported by the processor. Most bitwise operations are presented as two-operand instructions where the result replaces one of the input operands. On simple low-cost processors, typically, bitwise operations are substantially faster than division, several times faster than multiplication, and sometimes significantly faster than addition. While modern processors usually perform addition and multiplication just as fast as bitwise operations due to their longer instruction pipelines and other architectural design choices, bitwise operations do commonly use less power because of the reduced use of resources. Bitwise operators In the explanations below, any indication of a bit's position is counted from the right (least sign ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

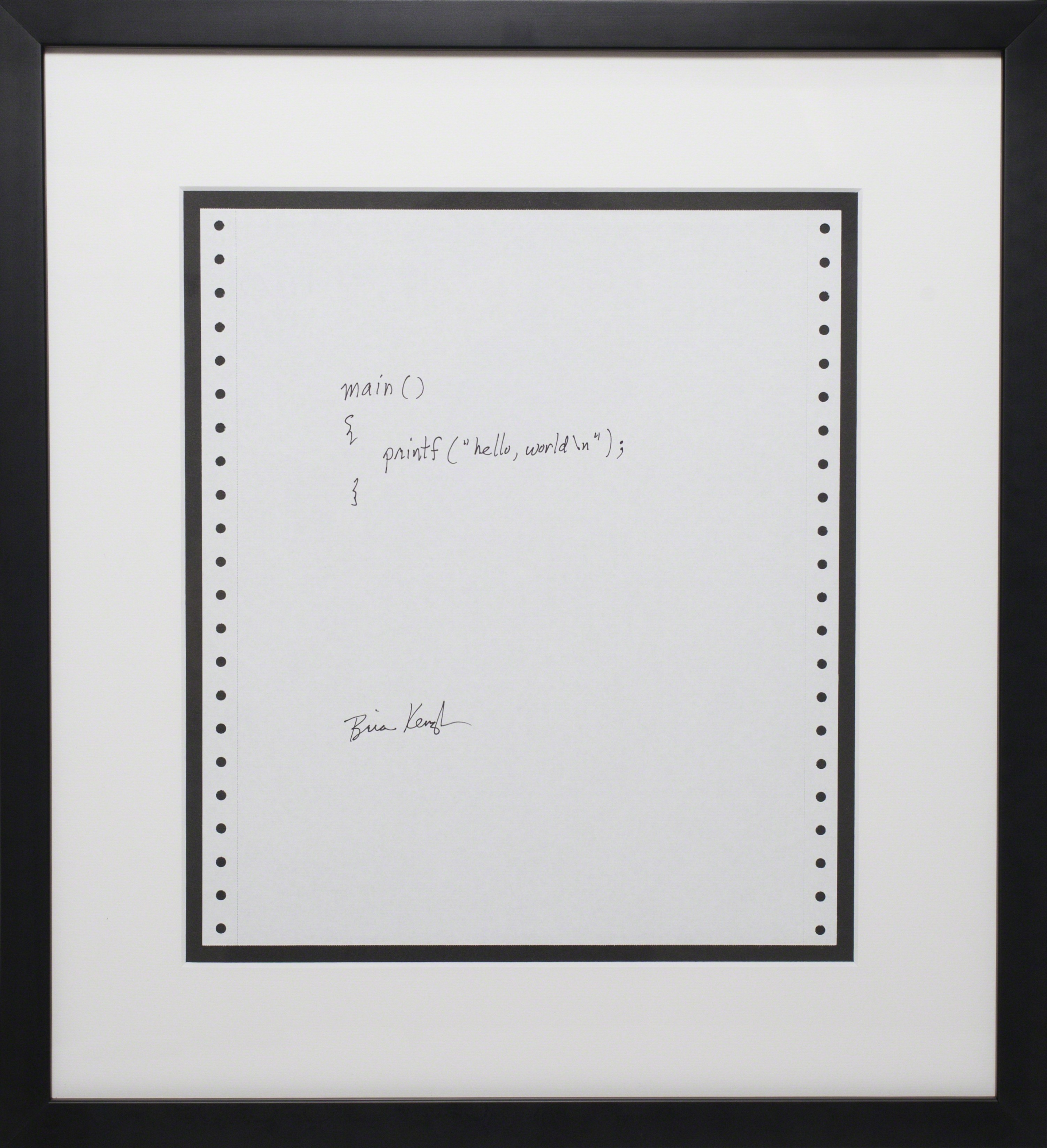

C Programming Language

''The C Programming Language'' (sometimes termed ''K&R'', after its authors' initials) is a computer programming book written by Brian Kernighan and Dennis Ritchie, the latter of whom originally designed and implemented the language, as well as co-designed the Unix operating system with which development of the language was closely intertwined. The book was central to the development and popularization of the C programming language and is still widely read and used today. Because the book was co-authored by the original language designer, and because the first edition of the book served for many years as the ''de facto'' standard for the language, the book was regarded by many to be the authoritative reference on C. History C was created by Dennis Ritchie at Bell Labs in the early 1970s as an augmented version of Ken Thompson's B. Another Bell Labs employee, Brian Kernighan, had written the first C tutorial, and he persuaded Ritchie to coauthor a book on the language. K ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

B-tree

In computer science, a B-tree is a self-balancing tree data structure that maintains sorted data and allows searches, sequential access, insertions, and deletions in logarithmic time. The B-tree generalizes the binary search tree, allowing for nodes with more than two children. Unlike other self-balancing binary search trees, the B-tree is well suited for storage systems that read and write relatively large blocks of data, such as databases and file systems. Origin B-trees were invented by Rudolf Bayer and Edward M. McCreight while working at Boeing Research Labs, for the purpose of efficiently managing index pages for large random-access files. The basic assumption was that indices would be so voluminous that only small chunks of the tree could fit in main memory. Bayer and McCreight's paper, ''Organization and maintenance of large ordered indices'', was first circulated in July 1970 and later published in '' Acta Informatica''. Bayer and McCreight never explained what, ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Parallelism (computing)

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling.S.V. Adve ''et al.'' (November 2008)"Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF). Parallel@Illinois, University of Illinois at Urbana-Champaign. "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance increases must largely come from increasing the number of processors (or cores) on a die, rather than mak ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |