
Frontal Solver

A frontal solver, conceived by Bruce Irons, is an approach to solving sparse linear systems that is used extensively in finite element analysis. It is a variant of Gauss elimination that automatically avoids a large number of operations involving zero terms. A frontal solver builds an LU or Cholesky decomposition of a sparse matrix given as the assembly of element matrices by assembling the matrix and eliminating equations on only a subset of elements at a time. This subset is called the front, and it is essentially the transition region between the part of the system already finished and the part not yet touched. The whole sparse matrix is never created explicitly; only parts of the matrix are assembled as they enter the front. Processing the front involves dense matrix operations, which use the CPU efficiently. In a typical implementation, only the front is in memory, while the factors in the decomposition are ...
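
The assemble-and-eliminate cycle described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Irons's original formulation: the names `frontal_solve`, `elements` and `loads` are hypothetical, the assembled system is assumed symmetric positive definite so that no pivoting is needed, the front is held in dictionaries rather than a dense array, and the eliminated rows stay in a list instead of being written out of memory.

```python
def frontal_solve(elements, loads):
    """Solve K x = f, where K is the sum of the given element matrices.

    elements: list of (dofs, ke); ke is a small dense matrix over dofs.
    loads:    dict mapping a degree of freedom to its load (default 0).
    Assumption: the assembled K is symmetric positive definite.
    """
    # A degree of freedom is "fully summed" once the last element
    # that touches it has been assembled.
    last = {}
    for e, (dofs, _) in enumerate(elements):
        for d in dofs:
            last[d] = e

    front = {}       # front[i][j]: coefficients of the active dofs only
    rhs = {}         # right-hand side of the active dofs
    eliminated = []  # stored pivot rows: (dof, pivot, row, rhs value)

    for e, (dofs, ke) in enumerate(elements):
        # Assemble this element into the front.
        for d in dofs:
            if d not in front:
                front[d] = {}
                rhs[d] = loads.get(d, 0.0)
        for a, i in enumerate(dofs):
            for b, j in enumerate(dofs):
                front[i][j] = front[i].get(j, 0.0) + ke[a][b]

        # Eliminate every dof that is now fully summed.
        for d in dofs:
            if last[d] != e:
                continue
            row = front.pop(d)
            pivot = row.pop(d)
            b = rhs.pop(d)
            for i, ri in front.items():
                factor = ri.pop(d, 0.0) / pivot
                if factor != 0.0:
                    for j, v in row.items():
                        ri[j] = ri.get(j, 0.0) - factor * v
                    rhs[i] -= factor * b
            eliminated.append((d, pivot, row, b))

    # Back substitution over the stored rows, last eliminated first.
    x = {}
    for d, pivot, row, b in reversed(eliminated):
        x[d] = (b - sum(v * x[j] for j, v in row.items())) / pivot
    return x


# Three unit springs in series: a ground spring on dof 0, then 0-1 and 1-2,
# with a unit load applied at dof 2.
spring = [[1.0, -1.0], [-1.0, 1.0]]
elements = [([0], [[1.0]]), ([0, 1], spring), ([1, 2], spring)]
loads = {2: 1.0}
x = frontal_solve(elements, loads)
```

For this chain the front never holds more than two degrees of freedom at once, and the computed displacements are approximately 1, 2 and 3.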

Bruce Irons (engineer)

Bruce Moncur Irons (6 October 1924 – 5 December 1983) was an engineer and mathematician, known for his fundamental contribution to the finite element method, including the patch test, the frontal solver and, along with Ian C. Taig, the isoparametric element concept. He developed multiple sclerosis; finding it difficult to accept anticipated relapses, he committed suicide on 5 December 1983, and his wife followed suit.

Sparse Matrix

In numerical analysis and scientific computing, a sparse matrix or sparse array is a matrix in which most of the elements are zero. There is no strict definition regarding the proportion of zero-value elements for a matrix to qualify as sparse, but a common criterion is that the number of non-zero elements is roughly equal to the number of rows or columns. By contrast, if most of the elements are non-zero, the matrix is considered dense. The number of zero-valued elements divided by the total number of elements (e.g., *m* × *n* for an *m* × *n* matrix) is sometimes referred to as the sparsity of the matrix. Conceptually, sparsity corresponds to systems with few pairwise interactions. For example, consider a line of balls connected by springs from one to the next: this is a sparse system, as only adjacent balls are coupled. By contrast, if the same line of balls were to have springs connecting each ball to all other balls, the system would correspond to a dense matrix. The ...
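
The ball-and-spring example and the sparsity ratio can be made concrete with a small Python sketch. The function names `spring_chain` and `sparsity` and the dictionary-of-keys storage are illustrative choices, not taken from the text.

```python
def spring_chain(n, k=1.0):
    """Coupling matrix for n balls in a line, joined by n - 1 springs.

    Only non-zero entries are stored, keyed by (row, column).
    """
    a = {}
    for i in range(n - 1):
        # Each spring couples ball i with ball i + 1 only.
        for r, c, v in ((i, i, k), (i + 1, i + 1, k),
                        (i, i + 1, -k), (i + 1, i, -k)):
            a[(r, c)] = a.get((r, c), 0.0) + v
    return a


def sparsity(entries, m, n):
    """Number of zero-valued elements divided by the total m * n."""
    nonzero = sum(1 for v in entries.values() if v != 0.0)
    return (m * n - nonzero) / (m * n)


chain = spring_chain(100)
ratio = sparsity(chain, 100, 100)
```

With 100 balls the matrix has 298 non-zero entries out of 10,000, so almost all of it is zero; connecting every ball to every other ball would fill all 10,000 entries.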

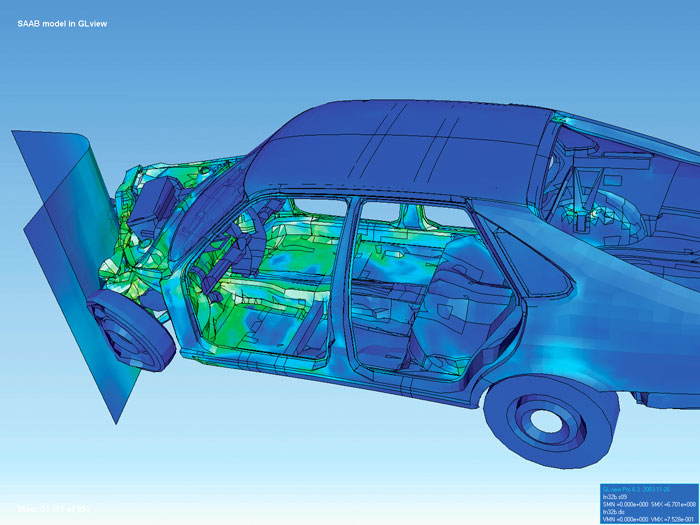

Finite Element Analysis

The finite element method (FEM) is a popular method for numerically solving differential equations arising in engineering and mathematical modeling. Typical problem areas of interest include the traditional fields of structural analysis, heat transfer, fluid flow, mass transport, and electromagnetic potential. The FEM is a general numerical method for solving partial differential equations in two or three space variables (i.e., some boundary value problems). To solve a problem, the FEM subdivides a large system into smaller, simpler parts that are called finite elements. This is achieved by a particular space discretization in the space dimensions, which is implemented by the construction of a mesh of the object: the numerical domain for the solution, which has a finite number of points. The finite element method formulation of a boundary value problem finally results in a system of algebraic equations. The method approximates the unknown function over the domain. The sim ...

Gauss Elimination

In mathematics, Gaussian elimination, also known as row reduction, is an algorithm for solving systems of linear equations. It consists of a sequence of operations performed on the corresponding matrix of coefficients. This method can also be used to compute the rank of a matrix, the determinant of a square matrix, and the inverse of an invertible matrix. The method is named after Carl Friedrich Gauss (1777–1855), although some special cases of the method, albeit presented without proof, were known to Chinese mathematicians as early as circa 179 AD. To perform row reduction on a matrix, one uses a sequence of elementary row operations to modify the matrix until the lower left-hand corner of the matrix is filled with zeros, as much as possible. There are three types of elementary row operations: swapping two rows, multiplying a row by a nonzero number, and adding a multiple of one row to another row (subtraction can be achieved by multiplying one row with -1 and adding ...
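
As a minimal sketch of row reduction used to solve a linear system, the following Python function applies row swaps and row additions to an augmented matrix and then back-substitutes. The name `gauss_solve` is hypothetical, and choosing the largest available pivot in each column (partial pivoting) is a common safeguard added here, not something the summary above prescribes.

```python
def gauss_solve(a, b):
    """Solve a x = b for a square matrix a by row reduction."""
    n = len(a)
    # Augmented matrix [a | b]; copies keep the inputs untouched.
    m = [row[:] + [bi] for row, bi in zip(a, b)]

    for col in range(n):
        # Row swap: bring the largest pivot candidate into place.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if m[pivot][col] == 0:
            raise ValueError("matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]

        # Row addition: subtract a multiple of the pivot row from
        # each row below it to zero the entries under the pivot.
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]

    # Back substitution on the resulting upper triangular system.
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


solution = gauss_solve([[2.0, 1.0, -1.0],
                        [-3.0, -1.0, 2.0],
                        [-2.0, 1.0, 2.0]],
                       [8.0, -11.0, -3.0])
```

Up to rounding, `solution` is `[2, 3, -1]`, which can be checked by substituting it back into the three equations.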

LU Decomposition

In numerical analysis and linear algebra, lower–upper (LU) decomposition or factorization factors a matrix as the product of a lower triangular matrix and an upper triangular matrix (see matrix decomposition). The product sometimes includes a permutation matrix as well. LU decomposition can be viewed as the matrix form of Gaussian elimination. Computers usually solve square systems of linear equations using LU decomposition, and it is also a key step when inverting a matrix or computing the determinant of a matrix. The LU decomposition was introduced by the Polish mathematician Tadeusz Banachiewicz in 1938. Definitions. Let *A* be a square matrix. An LU factorization refers to the factorization of *A*, with proper row and/or column orderings or permutations, into two factors, a lower triangular matrix *L* and an upper triangular matrix *U*: A = LU. In the lower triangular matrix all elements above the diagonal are zero; in the upper triangular matrix, all the e ...
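
The factorization A = LU can be sketched as follows. This assumes the Doolittle convention (unit diagonal in L) and performs no row or column permutations, so it fails on matrices that need pivoting; the function name `lu_decompose` is hypothetical.

```python
def lu_decompose(a):
    """Return (L, U) with a = L U, L unit lower and U upper triangular."""
    n = len(a)
    lower = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    upper = [[0.0] * n for _ in range(n)]

    for i in range(n):
        # Row i of U.
        for j in range(i, n):
            upper[i][j] = a[i][j] - sum(lower[i][k] * upper[k][j]
                                        for k in range(i))
        if upper[i][i] == 0:
            raise ZeroDivisionError("zero pivot: a permutation is needed")
        # Column i of L, below the diagonal.
        for r in range(i + 1, n):
            lower[r][i] = (a[r][i] - sum(lower[r][k] * upper[k][i]
                                         for k in range(i))) / upper[i][i]
    return lower, upper


L, U = lu_decompose([[4.0, 3.0], [6.0, 3.0]])
```

For this 2 × 2 input, `L` is `[[1.0, 0.0], [1.5, 1.0]]` and `U` is `[[4.0, 3.0], [0.0, -1.5]]`; multiplying them reproduces the original matrix.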

Cholesky Decomposition

In linear algebra, the Cholesky decomposition or Cholesky factorization is a decomposition of a Hermitian, positive-definite matrix into the product of a lower triangular matrix and its conjugate transpose, which is useful for efficient numerical solutions, e.g., Monte Carlo simulations. It was discovered by André-Louis Cholesky for real matrices, and posthumously published in 1924. When it is applicable, the Cholesky decomposition is roughly twice as efficient as the LU decomposition for solving systems of linear equations. Statement. The Cholesky decomposition of a Hermitian positive-definite matrix A is a decomposition of the form $\mathbf{A} = \mathbf{L}\mathbf{L}^*$, where L is a lower triangular matrix with real and positive diagonal entries, and L* denotes the conjugate transpose of L. Every Hermitian positive-definite matrix (and thus also every real-valued symmetric positive-definite matrix) has a unique Cholesky decomposition. The converse holds trivially: if A can be ...
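
For the real symmetric case, where the conjugate transpose reduces to the ordinary transpose, the factor L can be computed entry by entry. The sketch below is restricted to real matrices and uses a hypothetical function name, `cholesky`; it raises an error when a non-positive value appears under the square root, which signals that the input is not positive definite.

```python
import math


def cholesky(a):
    """Return lower triangular L with a = L L^T (real symmetric input)."""
    n = len(a)
    lower = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(lower[i][k] * lower[j][k] for k in range(j))
            if i == j:
                d = a[i][i] - s
                if d <= 0:
                    raise ValueError("matrix is not positive definite")
                lower[i][j] = math.sqrt(d)  # real, positive diagonal
            else:
                lower[i][j] = (a[i][j] - s) / lower[j][j]
    return lower


factor = cholesky([[4.0, 12.0, -16.0],
                   [12.0, 37.0, -43.0],
                   [-16.0, -43.0, 98.0]])
```

Here `factor` is `[[2.0, 0.0, 0.0], [6.0, 1.0, 0.0], [-8.0, 5.0, 3.0]]`. Only the lower triangle of the input is read, which reflects the saving over LU decomposition mentioned above.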

Computer Memory

In computing, memory is a device or system that is used to store information for immediate use in a computer or related computer hardware and digital electronic devices. The term *memory* is often synonymous with the term *primary storage* or *main memory*. An archaic synonym for memory is store. Computer memory operates at a high speed compared to storage that is slower but less expensive and higher in capacity. Besides storing opened programs, computer memory serves as disk cache and write buffer to improve both reading and writing performance. Operating systems borrow RAM capacity for caching so long as it is not needed by running software. If needed, contents of the computer memory can be transferred to storage; a common way of doing this is through a memory management technique called *virtual memory*. Modern memory is implemented as semiconductor memory, where data is stored within memory cells built from MOS transistors and other components on an integrated c ...

Computer File

A computer file is a computer resource for recording data in a computer storage device, primarily identified by its file name. Just as words can be written to paper, so can data be written to a computer file. Files can be shared with and transferred between computers and mobile devices via removable media, networks, or the Internet. Different types of computer files are designed for different purposes. A file may be designed to store an image, a written message, a video, a computer program, or any of a wide variety of other kinds of data. Certain files can store multiple data types at once. By using computer programs, a person can open, read, change, save, and close a computer file. Computer files may be reopened, modified, and copied an arbitrary number of times. Files are typically organized in a file system, which tracks file locations on the disk and enables user access. Etymology. The word "file" derives from the Latin *filum* ("a thread"). "File" was used in the conte ...

John K

John K may refer to:

* John Kricfalusi, Canadian animator and voice actor
* John K (musician), American singer

See also:

* John Kay (disambiguation)
* John Kaye (disambiguation)

Parallel Computing

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling. Source: S. V. Adve et al. (November 2008), "Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF), Parallel@Illinois, University of Illinois at Urbana-Champaign: "The main techniques for these performance benefits, increased clock frequency and smarter but increasingly complex architectures, are now hitting the so-called power wall. The computer industry has accepted that future performance increases must largely come from increasing the number of processors (or cores) on a die, rather than m ...
