
Fine Tuning

In theoretical physics, fine-tuning is the process in which parameters of a model must be adjusted very precisely in order to fit with certain observations. This has led to the discovery that the fundamental constants and quantities fall into such an extraordinarily precise range that, if they did not, the origin and evolution of conscious agents in the universe would not be permitted. Theories requiring fine-tuning are regarded as problematic in the absence of a known mechanism to explain why the parameters happen to have precisely the observed values. The heuristic rule that parameters in a fundamental physical theory should not be too fine-tuned is called naturalness.

Background: The idea that naturalness will explain fine tuning was brought into question by Nima Arkani-Hamed, a theoretical physicist, in his talk "Why is there a Macroscopic Universe?", a lecture from the mini-series "Multiverse & Fine Tuning" from the "Philosophy of Cosmology" project, a Univ ...

Theoretical Physics

Theoretical physics is a branch of physics that employs mathematical models and abstractions of physical objects and systems to rationalize, explain and predict natural phenomena. This is in contrast to experimental physics, which uses experimental tools to probe these phenomena. The advancement of science generally depends on the interplay between experimental studies and theory. In some cases, theoretical physics adheres to standards of mathematical rigour while giving little weight to experiments and observations. There is some debate as to whether or not theoretical physics uses mathematics to build intuition and illustrativeness to extract physical insight (especially when normal experience fails), rather than as a tool in formalizing theories. This links to the question of whether it uses mathematics in a less formally rigorous, and more intuitive or heuristic, way than, say, mathematical physics. For example, while developing special relativity, Albert Einstein was concer ...

Naturalness (physics)

In physics, naturalness is the aesthetic property that the dimensionless ratios between free parameters or physical constants appearing in a physical theory should take values "of order 1" and that free parameters are not fine-tuned. That is, a natural theory would have parameter ratios with values like 2.34 rather than 234000 or 0.000234. The requirement that satisfactory theories should be "natural" in this sense is a current of thought initiated around the 1960s in particle physics. It is a criterion that arises from the seeming non-naturalness of the Standard Model and the broader topics of the hierarchy problem, fine-tuning, and the anthropic principle. However, it does tend to suggest a possible area of weakness or future development for current theories such as the Standard Model, where some parameters vary by many orders of magnitude and require extensive "fine-tuning" of their current values in the models concerned. The concern is that it is not yet clear whether th ...
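As a rough illustration of the "of order 1" criterion in the Naturalness entry, the sketch below measures how many orders of magnitude a dimensionless ratio lies from 1. The function names and the one-order-of-magnitude threshold are assumptions chosen for illustration; the entry itself does not fix a numerical cutoff.

```python
import math


def orders_from_unity(ratio: float) -> float:
    """Return |log10(ratio)|: the distance of a dimensionless ratio from 1,
    measured in orders of magnitude."""
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return abs(math.log10(ratio))


def looks_natural(ratio: float, max_orders: float = 1.0) -> bool:
    """Hypothetical check: treat a ratio as 'of order 1' if it lies within
    max_orders orders of magnitude of 1 (the threshold is an assumption)."""
    return orders_from_unity(ratio) <= max_orders


# The three example ratios quoted in the entry.
for r in (2.34, 234000, 0.000234):
    print(r, round(orders_from_unity(r), 2), looks_natural(r))
```

With this threshold, 2.34 passes while 234000 and 0.000234 do not, matching the contrast drawn in the entry.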

Nima Arkani-Hamed

Nima Arkani-Hamed (Persian: نیما ارکانی حامد; born April 5, 1972) is an American-Canadian theoretical physicist of Iranian descent ("Curriculum Vita, updated 4-17-15", sns.ias.edu; accessed December 4, 2015), with interests in high-energy physics, quantum field theory, string theory, cosmology and collider physics. Arkani-Hamed is a member of the permanent faculty at the Institute for Advanced Study in Princeton, New Jersey. He is also Director of the Carl P. Feinberg Cross-Disciplinary Program in Innovation at the Institute and director of The Center for Future High Energy Physics (CFHEP) in Beijing, China.

Early life: Arkani-Hamed's parents, Jafarghol ...

Cosmological Constant

In cosmology, the cosmological constant (usually denoted by the Greek capital letter lambda: Λ), alternatively called Einstein's cosmological constant, is the constant coefficient of a term that Albert Einstein temporarily added to his field equations of general relativity. He later removed it. Much later it was revived and reinterpreted as the energy density of space, or vacuum energy, that arises in quantum mechanics. It is closely associated with the concept of dark energy. Einstein originally introduced the constant in 1917 to counterbalance the effect of gravity and achieve a static universe, a notion that was the accepted view at the time. Einstein's cosmological constant was abandoned after Edwin Hubble's confirmation that the universe was expanding. From the 1930s until the late 1990s, most physicists agreed with Einstein's choice of setting the cosmological constant to zero. That changed with the discovery in 1998 that the expansion of the universe is accelerating ...

Hierarchy Problem

In theoretical physics, the hierarchy problem is the problem concerning the large discrepancy between aspects of the weak force and gravity. There is no scientific consensus on why, for example, the weak force is 10^24 times stronger than gravity.

Technical definition: A hierarchy problem occurs when the fundamental value of some physical parameter, such as a coupling constant or a mass, in some Lagrangian is vastly different from its effective value, which is the value that gets measured in an experiment. This happens because the effective value is related to the fundamental value by a prescription known as renormalization, which applies corrections to it. Typically the renormalized values of parameters are close to their fundamental values, but in some cases, it appears that there has been a delicate cancellation between the fundamental quantity and the quantum corrections. Hierarchy problems are related to fine-tuning problems and problems of naturalness. Over the past dec ...

Strong CP Problem

The strong CP problem is a puzzling question in particle physics: Why does quantum chromodynamics (QCD) seem to preserve CP-symmetry? In particle physics, CP stands for the combination of charge conjugation symmetry (C) and parity symmetry (P). According to the current mathematical formulation of quantum chromodynamics, a violation of CP-symmetry in strong interactions could occur. However, no violation of CP-symmetry has ever been seen in any experiment involving only the strong interaction. As there is no known reason in QCD for it to necessarily be conserved, this is a "fine tuning" problem known as the strong CP problem. The strong CP problem is sometimes regarded as an unsolved problem in physics, and has been referred to as "the most underrated puzzle in all of physics." Several solutions to the strong CP problem have been proposed. The most well-known is Peccei–Quinn theory, involving new pseudoscalar particles called axions.

Theory: CP-symmetry states that ...

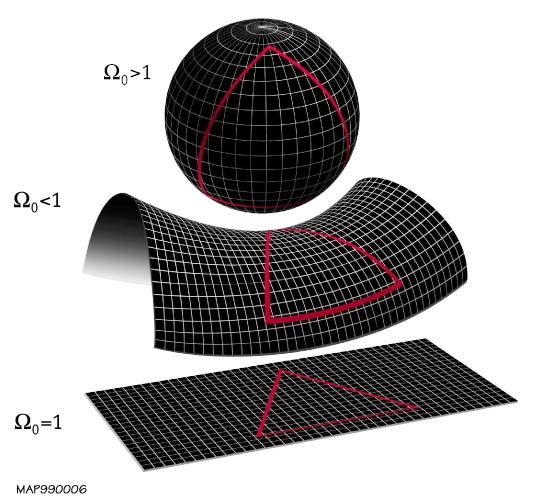

Flatness Problem

The flatness problem (also known as the oldness problem) is a cosmological fine-tuning problem within the Big Bang model of the universe. Such problems arise from the observation that some of the initial conditions of the universe appear to be fine-tuned to very 'special' values, and that small deviations from these values would have extreme effects on the appearance of the universe at the current time. In the case of the flatness problem, the parameter which appears fine-tuned is the density of matter and energy in the universe. This value affects the curvature of space-time, with a very specific critical value being required for a flat universe. The current density of the universe is observed to be very close to this critical value. Since any departure of the total density from the critical value would increase rapidly over cosmic time, the early universe must have had a density even closer to the critical density, departing from it by one part in 10^62 or less. This leads c ...

Inflationary Theory

In physical cosmology, cosmic inflation, cosmological inflation, or just inflation, is a theory of exponential expansion of space in the early universe. The inflationary epoch lasted from 10^−36 seconds after the conjectured Big Bang singularity to some time between 10^−33 and 10^−32 seconds after the singularity. Following the inflationary period, the universe continued to expand, but at a slower rate. The acceleration of this expansion due to dark energy began after the universe was already over 7.7 billion years old (5.4 billion years ago). Inflation theory was developed in the late 1970s and early 1980s, with notable contributions by several theoretical physicists, including Alexei Starobinsky at Landau Institute for Theoretical Physics, Alan Guth at Cornell University, and Andrei Linde at Lebedev Physical Institute. Alexei Starobinsky, Alan Guth, and Andrei Linde won the 2014 Kavli Prize "for pioneering the theory of cosmic inflation." It was developed further in the earl ...

Bayesian Statistics

Bayesian statistics is a theory in the field of statistics based on the Bayesian interpretation of probability where probability expresses a degree of belief in an event. The degree of belief may be based on prior knowledge about the event, such as the results of previous experiments, or on personal beliefs about the event. This differs from a number of other interpretations of probability, such as the frequentist interpretation that views probability as the limit of the relative frequency of an event after many trials. Bayesian statistical methods use Bayes' theorem to compute and update probabilities after obtaining new data. Bayes' theorem describes the conditional probability of an event based on data as well as prior information or beliefs about the event or conditions related to the event. For example, in Bayesian inference, Bayes' theorem can be used to estimate the parameters of a probability distribution or statistical model. Since Bayesian statistics treats pr ...
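The updating step described in the Bayesian Statistics entry can be sketched for the simplest case of a single yes/no hypothesis. This is a minimal illustration, not a general inference routine: the function name `bayes_update` and the numbers used are assumptions made up for the example.

```python
def bayes_update(prior: float, p_data_if_true: float, p_data_if_false: float) -> float:
    """Apply Bayes' theorem to a binary hypothesis H:

        P(H | D) = P(D | H) P(H) / [P(D | H) P(H) + P(D | not H) P(not H)]
    """
    evidence = p_data_if_true * prior + p_data_if_false * (1.0 - prior)
    if evidence == 0.0:
        raise ValueError("the data have zero probability under both hypotheses")
    return p_data_if_true * prior / evidence


# Start from an even prior and observe the same kind of data twice;
# the posterior after the first observation becomes the prior for the second.
belief = 0.5
for _ in range(2):
    belief = bayes_update(belief, p_data_if_true=0.8, p_data_if_false=0.4)
    print(round(belief, 4))
```

Feeding each posterior back in as the next prior is what "updating probabilities after obtaining new data" amounts to in this simple setting.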

Anthropic Principle

The anthropic principle, also known as the "observation selection effect", is the hypothesis, first proposed in 1957 by Robert Dicke, that there is a restrictive lower bound on how statistically probable our observations of the universe are, because observations could only happen in a universe capable of developing intelligent life. Proponents of the anthropic principle argue that it explains why this universe has the age and the fundamental physical constants necessary to accommodate conscious life, since if either had been different, we would not have been around to make observations. Anthropic reasoning is often used to deal with the notion that the universe seems to be finely tuned for the existence of life. There are many different formulations of the anthropic principle. Philosopher Nick Bostrom counts them at thirty, but the underlying principles can be divided into "weak" and "strong" forms, depending on the types of cosmological claims they entail. The weak anthropic p ...

Fine-tuned Universe

The characterization of the universe as finely tuned suggests that the occurrence of life in the universe is very sensitive to the values of certain fundamental physical constants and that the observed values are, for some reason, improbable. If the values of any of certain free parameters in contemporary physical theories had differed only slightly from those observed, the evolution of the universe would have proceeded very differently and life as it is understood may not have been possible (Gribbin, J. and Rees, M., Cosmic Coincidences: Dark Matter, Mankind, and Anthropic Cosmology, pp. 7, 269, 1989).

History: In 1913, the chemist Lawrence Joseph Henderson wrote The Fitness of the Environment, one of the first books to explore fine tuning in the universe. Henderson discusses the importance of water and the environment to living things, pointing out that life depends entirely on Earth's very specific environmental conditions, especially the prevalence and properties of wate ...
