
Farkas' Lemma

In mathematics, Farkas' lemma is a solvability theorem for a finite system of linear inequalities. It was originally proven by the Hungarian mathematician Gyula Farkas. Farkas' lemma is the key result underpinning linear programming duality and has played a central role in the development of mathematical optimization (alternatively, mathematical programming). It is used, among other things, in the proof of the Karush–Kuhn–Tucker theorem in nonlinear programming. Remarkably, in the foundations of quantum theory, the lemma also underlies the complete set of Bell inequalities in the form of necessary and sufficient conditions for the existence of a local hidden-variable theory, given data from any specific set of measurements. Generalizations of Farkas' lemma concern solvability theorems for convex inequalities, i.e., infinite systems of linear inequalities. Farkas' lemma belongs to a class of statements called "theorems of the alternative": a theo ...

Mathematics

Mathematics is a field of study that discovers and organizes methods, theories and theorems that are developed and proved for the needs of empirical sciences and mathematics itself. There are many areas of mathematics, which include number theory (the study of numbers), algebra (the study of formulas and related structures), geometry (the study of shapes and spaces that contain them), analysis (the study of continuous changes), and set theory (presently used as a foundation for all mathematics). Mathematics involves the description and manipulation of abstract objects that consist of either abstractions from nature or, in modern mathematics, purely abstract entities that are stipulated to have certain properties, called axioms. Mathematics uses pure reason to prove properties of objects, a ''proof'' consisting of a succession of applications of in ...

Completeness (logic)

In mathematical logic and metalogic, a formal system is called complete with respect to a particular property if every formula having the property can be derived using that system, i.e. is one of its theorems; otherwise the system is said to be incomplete. The term "complete" is also used without qualification, with differing meanings depending on the context, mostly referring to the property of semantical validity. Intuitively, a system is called complete in this particular sense, if it can derive every formula that is true. Other properties related to completeness The property converse to completeness is called soundness: a system is sound with respect to a property (mostly semantical validity) if each of its theorems has that property. Forms of completeness Expressive completeness A formal language is ''expressively complete'' if it can express the subject matter for which it is intended. Functional completeness A set of logical connectives associated with a formal ...

Slater's Condition

In mathematics, Slater's condition (or Slater condition) is a sufficient condition for strong duality to hold for a convex optimization problem, named after Morton L. Slater. Informally, Slater's condition states that the feasible region must have an interior point (see technical details below). Slater's condition is a specific example of a constraint qualification. In particular, if Slater's condition holds for the primal problem, then the duality gap is 0, and if the dual value is finite then it is attained. Formulation Let f_1,\ldots,f_m be real-valued functions on some subset D of \mathbb{R}^n. We say that the functions satisfy the Slater condition if there exists some x in the relative interior of D, for which f_i(x) < 0 for all i in 1,\ldots,m. We say that the functions satisfy the relaxed Slater condition if: * Some functions (say ) are

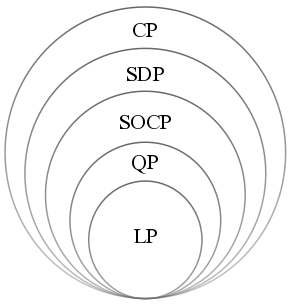

Convex Optimization

Convex optimization is a subfield of mathematical optimization that studies the problem of minimizing convex functions over convex sets (or, equivalently, maximizing concave functions over convex sets). Many classes of convex optimization problems admit polynomial-time algorithms, whereas mathematical optimization is in general NP-hard. Definition Abstract form A convex optimization problem is defined by two ingredients: * The ''objective function'', which is a real-valued convex function of ''n'' variables, f :\mathcal D \subseteq \mathbb{R}^n \to \mathbb{R}; * The ''feasible set'', which is a convex subset C\subseteq \mathbb{R}^n. The goal of the problem is to find some \mathbf{x^\ast} \in C attaining \inf \{ f(\mathbf{x}) : \mathbf{x} \in C \}. In general, there are three options regarding the existence of a solution: * If such a point ''x''* exists, it is referred to as an ''optimal point'' or ''solution''; the set of all optimal points is called the ''optimal set''; and the problem is called ''solvable''. * If f is unbou ...

Hyperplane Separation Theorem

In geometry, the hyperplane separation theorem is a theorem about disjoint convex sets in ''n''-dimensional Euclidean space. There are several rather similar versions. In one version of the theorem, if both these sets are closed and at least one of them is compact, then there is a hyperplane in between them and even two parallel hyperplanes in between them separated by a gap. In another version, if both disjoint convex sets are open, then there is a hyperplane in between them, but not necessarily any gap. An axis which is orthogonal to a separating hyperplane is a separating axis, because the orthogonal projections of the convex bodies onto the axis are disjoint. The hyperplane separation theorem is due to Hermann Minkowski. The Hahn–Banach separation theorem generalizes the result to topological vector spaces. A related result is the supporting hyperplane theorem. In the context of support-vector machines, the ''optimally separating hyperplane'' or ''maximum-margin hy ...

Cone (linear Algebra)

In linear algebra, a cone, sometimes called a linear cone to distinguish it from other sorts of cones, is a subset of a real vector space that is closed under positive scalar multiplication; that is, C is a cone if x\in C implies sx\in C for every positive scalar s. This is a broad generalization of the standard cone in Euclidean space. A convex cone is a cone that is also closed under addition, or, equivalently, a subset of a vector space that is closed under linear combinations with positive coefficients. It follows that convex cones are convex sets. The definition of a convex cone makes sense in a vector space over any ordered field, although the field of real numbers is used most often. Definition A subset C of a vector space is a cone if x\in C implies sx\in C for every s>0. Here s>0 refers to (strict) positivity in the scalar field. Competing definitions Some other authors require [0,\infty)C\subset C or even 0\in C. Some require a cone to be convex and/or satisfy C\cap-C\subset\{0\}. ...

Dual Cone

Dual cone and polar cone are closely related concepts in convex analysis, a branch of mathematics. Dual cone In a vector space The dual cone ''C''* of a subset ''C'' in a linear space ''X'' over the reals, e.g. Euclidean space \mathbb{R}^n, with dual space ''X''* is the set C^* = \left\{ y\in X^* : \langle y, x \rangle \geq 0 \quad \forall x\in C \right\}, where \langle y, x \rangle is the duality pairing between ''X'' and ''X''*, i.e. \langle y, x\rangle = y(x). ''C''* is always a convex cone, even if ''C'' is neither convex nor a cone. In a topological vector space If ''X'' is a topological vector space over the real or complex numbers, then the dual cone of a subset ''C'' ⊆ ''X'' is the following set of continuous linear functionals on ''X'': C^{\prime} := \left\{ f \in X^{\prime} : \operatorname{Re}\left( f(x) \right) \geq 0 \text{ for all } x \in C \right\}, which is the polar of the set -''C''. No matter what ''C'' is, C^{\prime} will be a convex cone. If ''C'' ⊆ \{0\} then C^{\prime} = X^{\prime}. In a Hilbert space (internal dual cone) Alternatively, many authors define the dual cone in the co ...
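As a rough illustration of the dual cone definition above, the following sketch tests membership in the dual of a finitely generated cone in Euclidean space, using the ordinary dot product as the pairing. The helper names `dot` and `in_dual_cone`, the tolerance `tol`, and the restriction to finitely generated cones are assumptions made for this example. Because the pairing is linear in x, checking the inequality on the generators is enough when every element of C is a nonnegative combination of them.

```python
from typing import Iterable, Sequence


def dot(y: Sequence[float], x: Sequence[float]) -> float:
    """Euclidean pairing <y, x> (assumed here in place of an abstract dual pairing)."""
    return sum(a * b for a, b in zip(y, x))


def in_dual_cone(y: Sequence[float],
                 generators: Iterable[Sequence[float]],
                 tol: float = 1e-12) -> bool:
    """Return True if <y, g> >= 0 for every generator g.

    Assumption: C is the set of nonnegative combinations of `generators`,
    so the inequality on the generators extends to all of C by linearity.
    """
    return all(dot(y, g) >= -tol for g in generators)


# The nonnegative quadrant in R^2, generated by the two unit vectors.
quadrant = [(1.0, 0.0), (0.0, 1.0)]

inside = in_dual_cone((2.0, 3.0), quadrant)    # both pairings are nonnegative
outside = in_dual_cone((1.0, -1.0), quadrant)  # pairing with (0, 1) is negative
```

In this example the quadrant is its own dual, so the test accepts exactly the vectors with nonnegative coordinates.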

Integer

An integer is the number zero (0), a positive natural number (1, 2, 3, ...), or the negation of a positive natural number (−1, −2, −3, ...). The negations or additive inverses of the positive natural numbers are referred to as negative integers. The set of all integers is often denoted by the boldface \mathbf{Z} or blackboard bold \mathbb{Z}. The set of natural numbers \mathbb{N} is a subset of \mathbb{Z}, which in turn is a subset of the set of all rational numbers \mathbb{Q}, itself a subset of the real numbers \mathbb{R}. Like the set of natural numbers, the set of integers \mathbb{Z} is countably infinite. An integer may be regarded as a real number that can be written without a fractional component. For example, 21, 4, 0, and −2048 are integers, while 9.75, 5/4, and \sqrt{2} are not. The integers form the smallest group and the smallest ring containing the natural numbers. In algebraic number theory, the ...

Fredholm Alternative

In mathematics, the Fredholm alternative, named after Ivar Fredholm, is one of Fredholm's theorems and is a result in Fredholm theory. It may be expressed in several ways, as a theorem of linear algebra, a theorem of integral equations, or as a theorem on Fredholm operators. Part of the result states that a non-zero complex number in the spectrum of a compact operator is an eigenvalue. Linear algebra If ''V'' is an ''n''-dimensional vector space and T:V\to V is a linear transformation, then exactly one of the following holds: #For each vector ''v'' in ''V'' there is a vector ''u'' in ''V'' so that T(u) = v. In other words: ''T'' is surjective (and so also bijective, since ''V'' is finite-dimensional). #\dim(\ker(T)) > 0. A more elementary formulation, in terms of matrices, is as follows. Given an ''m''×''n'' matrix ''A'' and an ''m''×1 column vector b, exactly one of the following must hold: #''Either:'' ''A'' x = b has a solution x #''Or:'' ''A''^T y = 0 has a so ...
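The finite-dimensional statement above (a linear map T on an n-dimensional space is either surjective or has a nontrivial kernel, never both) can be sketched for a square matrix by computing its rank. This is only an illustrative sketch: the function names `rank` and `fredholm_case` and the returned labels are hypothetical, and exact rational arithmetic via `fractions.Fraction` is a choice made here to avoid floating-point pivoting issues.

```python
from fractions import Fraction


def rank(matrix):
    """Rank of a matrix via Gaussian elimination over exact rationals."""
    rows = [[Fraction(v) for v in row] for row in matrix]
    n_cols = len(rows[0]) if rows else 0
    r = 0  # number of pivots found so far
    for c in range(n_cols):
        # Look for a row at or below position r with a nonzero entry in column c.
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # Eliminate column c from all rows below the pivot row.
        for i in range(r + 1, len(rows)):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r


def fredholm_case(matrix):
    """Classify a square matrix T: full rank means surjective (hence bijective);
    otherwise dim ker(T) = n - rank(T) > 0 by the rank-nullity theorem."""
    n = len(matrix)
    return "surjective" if rank(matrix) == n else "nontrivial kernel"


invertible = fredholm_case([[1, 2], [3, 4]])  # rows are independent
singular = fredholm_case([[1, 2], [2, 4]])    # second row is twice the first
```

The two labels are mutually exclusive by construction, which mirrors the "exactly one of the following holds" form of the statement.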

Ellipsoid Method

In mathematical optimization, the ellipsoid method is an iterative method for minimizing convex functions over convex sets. The ellipsoid method generates a sequence of ellipsoids whose volume uniformly decreases at every step, thus enclosing a minimizer of a convex function. When specialized to solving feasible linear optimization problems with rational data, the ellipsoid method is an algorithm which finds an optimal solution in a number of steps that is polynomial in the input size. History The ellipsoid method has a long history. As an iterative method, a preliminary version was introduced by Naum Z. Shor. In 1972, an approximation algorithm for real convex minimization was studied by Arkadi Nemirovski and David B. Yudin (Judin). As an algorithm for solving linear programming problems with rational data, the ellipsoid algorithm was studied by Leonid Khachiyan; Khachiyan's achievement was to prove the polynomial-time ...

P (complexity)

In computational complexity theory, P, also known as PTIME or DTIME(n^{O(1)}), is a fundamental complexity class. It contains all decision problems that can be solved by a deterministic Turing machine using a polynomial amount of computation time, or polynomial time. Cobham's thesis holds that P is the class of computational problems that are "efficiently solvable" or "tractable". This is inexact: in practice, some problems not known to be in P have practical solutions, and some that are in P do not, but this is a useful rule of thumb. Definition A language ''L'' is in P if and only if there exists a deterministic Turing machine ''M'', such that * ''M'' runs for polynomial time on all inputs * For all ''x'' in ''L'', ''M'' outputs 1 * For all ''x'' not in ''L'', ''M'' outputs 0 P can also be viewed as a uniform family of Boolean circuits. A language ''L'' is in P if and only if there exists a polynomial-time uniform family of Boolean circuits \{C_n : n \in \mathbb{N}\}, such that * For all n \in ...

Co-NP

In computational complexity theory, co-NP is a complexity class. A decision problem X is a member of co-NP if and only if its complement is in the complexity class NP. The class can be defined as follows: a decision problem is in co-NP if and only if for every ''no''-instance we have a polynomial-length "certificate" and there is a polynomial-time algorithm that can be used to verify any purported certificate. That is, co-NP is the set of decision problems where there exists a polynomial p(n) and a polynomial-time bounded Turing machine ''M'' such that for every instance ''x'', ''x'' is a ''no''-instance if and only if: for some possible certificate ''c'' of length bounded by p(n), the Turing machine ''M'' accepts the pair (''x'', ''c''). Complementary problems While an NP problem asks whether a given instance is a ''yes''-instance, its ''complement'' asks whether an instance is a ''no''-instance, which means the complement is in co-NP. Any ''yes''-instance for the original NP problem becomes a '' ...